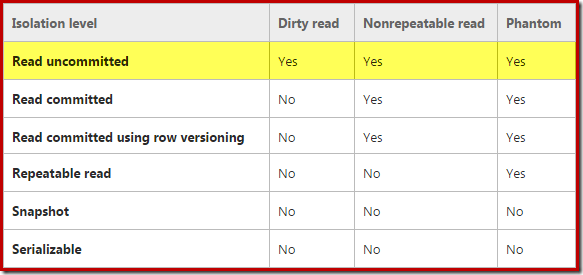

Read uncommitted is the weakest of the four transaction isolation levels defined in the SQL Standard (and of the six implemented in SQL Server). It allows all three so-called "concurrency phenomena", dirty reads, non-repeatable reads, and phantoms:

Most database people are aware of these phenomena, at least in outline, but not everyone realises that they do not fully describe the isolation guarantees on offer; nor do they intuitively describe the different behaviours one can expect in a specific implementation like SQL Server. More on that later.

Transaction Isolation – the 'I' in ACID

Every SQL command executes within a transaction (explicit, implicit, or auto-commit). Every transaction has an associated isolation level, which determines how isolated it is from the effects of other concurrent transactions. This somewhat technical concept has important implications for the way queries execute and the quality of the results they produce.

Consider a simple query that counts all the rows in a table. If this query could be executed instantaneously (or with zero concurrent data modifications), there could be only one correct answer: the number of rows physically present in the table at that moment in time. In reality, executing the query will take a certain amount of time, and the result will depend on how many rows the execution engine actually encounters as it traverses whatever physical structure is chosen to access the data.

If rows are being added to (or deleted from) the table by concurrent transactions while the counting operation is in progress, different results might be obtained depending on whether the row-counting transaction encounters all, some, or none of those concurrent changes – which in turn depends on the isolation level of the row-counting transaction.

Depending on isolation level, physical details, and timing of the concurrent operations, our counting transaction could even produce a result that was never a true reflection of the committed state of the table at any point of time during the transaction.

Example

Consider a row-counting transaction that starts at time T1, and scans the table from start to end (in clustered index key order, for the sake of argument). At that moment, there are 100 committed rows in the table. Some time later (at time T2), our counting transaction has encountered 50 of those rows. At the same moment, a concurrent transaction inserts two rows to the table, and commits a short time later at time T3 (before the counting transaction ends). One of the inserted rows happens to fall within the half of the clustered index structure that our counting transaction has already processed, while the other inserted row sits in the uncounted portion.

When the row-counting transaction completes, it will report 101 rows in this scenario; 100 rows initially in the table plus the single inserted row that was encountered during the scan. This result is at odds with the committed history of the table: there were 100 committed rows at times T1 and T2, then 102 committed rows at time T3. There was never a time when there were 101 committed rows.

The surprising thing (perhaps, depending on how deeply you have thought about these things before) is that this result is possible at the default (locking) read committed isolation level, and even under repeatable read isolation. Both those isolation levels are guaranteed to read only committed data, yet we obtained a result that represents no committed state of the database!

Analysis

The only transaction isolation level that provides complete isolation from concurrency effects is serializable. The SQL Server implementation of the serializable isolation level means a transaction will see the latest committed data, as of the moment that the data was first locked for access. In addition, the set of data encountered under serializable isolation is guaranteed not to change its membership before the transaction ends.

The row-counting example highlights a fundamental aspect of database theory: we need to be clear about what a "correct" result means for a database that experiences concurrent modifications, and we need to understand the trade-offs we are making when selecting an isolation level lower than serializable.

If we need a point-in-time view of the committed state of the database, we should use snapshot isolation (for transaction-level guarantees) or read committed snapshot isolation (for statement-level guarantees). Note though that a point-in-time view means we are not necessarily operating on the current committed state of the database; in effect, we may be using out-of-date information. On the other hand, if we are happy with results based on committed data only (albeit possibly from different points in time), we could choose to stick with the default locking read committed isolation level.

To be sure of producing results (and making decisions!) based on the latest set of committed data, for some serial history of operations against the database, we would need serializable transaction isolation. Of course this option is typically the most expensive in terms of resource use and lowered concurrency (including a heightened risk of deadlocks).

In the row-counting example, both snapshot isolation levels (SI and RCSI) would give a result of 100 rows, representing the count of committed rows at the start of the statement (and transaction in this case). Running the query at locking read committed or repeatable read isolation could produce a result of 100, 101, or 102 rows – depending on timing, lock granularity, row insert position, and the physical access method chosen. Under serializable isolation, the result would be either 100 or 102 rows, depending on which of the two concurrent transactions is considered to have executed first.

How Bad Is Read Uncommitted?

Having introduced read uncommitted isolation as the weakest of the available isolation levels, you should be expecting it to offer even lower isolation guarantees than locking read committed (the next highest isolation level). Indeed it does; but the question is: how much worse than locking read committed isolation is it?

So that we start with the correct context, here is a list of the main concurrency effects that can be experienced under the SQL Server default locking read committed isolation level:

- Missing committed rows

- Rows encountered multiple times

- Different versions of the same row encountered in a single statement/query plan

- Committed column data from different points in time in the same row (example)

These concurrency effects are all due to the locking implementation of read committed only taking very short-term shared locks when reading data. The read uncommitted isolation level goes one step further, by not taking shared locks at all, resulting in the additional possibility of "dirty reads."

Dirty Reads

As a quick reminder, a "dirty read" refers to reading data that is being changed by another concurrent transaction (where "change" incorporates insert, update, delete, and merge operations). Put another way, a dirty read occurs when a transaction reads data that another transaction has modified, before the modifying transaction has committed or aborted those changes.

Advantages and Disadvantages

The primary advantages of read uncommitted isolation are the reduced potential for blocking and deadlocking due to incompatible locks (including unnecessary blocking due to lock escalation), and possibly increased performance (by avoiding the need to acquire and release shared locks).

The most obvious potential drawback of read uncommitted isolation is (as the name suggests) that we might read uncommitted data (even data that is never committed, in the case of a transaction rollback). In a database where rollbacks are relatively rare, the question of reading uncommitted data might be seen as a mere timing issue, since the data in question will surely be committed at some stage, and probably quite soon. We have already seen timing-related inconsistencies in the row-counting example (which was operating at a higher isolation level) so one might well question how much of a concern it is to read data "too soon."

Clearly the answer depends on local priorities and context, but an informed decision to use read uncommitted isolation certainly seems possible. There is more to think about though. The SQL Server implementation of the read uncommitted isolation level includes some subtle behaviours that we need to be aware of before making that "informed choice."

Allocation Order Scans

Using read uncommitted isolation is taken by SQL Server as a signal that we are prepared to accept the inconsistencies that might arise as the result of an allocation-ordered scan.

Ordinarily, the storage engine can only choose an allocation-ordered scan if the underlying data is guaranteed not to change during the scan (because, for example, the database is read-only, or a table locking hint was specified). However, when read uncommitted isolation is in use, the storage engine may still choose an allocation-ordered scan even where the underlying data might be modified by concurrent transactions.

In these circumstances, the allocation-ordered scan can miss some committed data completely, or encounter other committed data more than once. The emphasis there is on missing or double-counting committed data (not reading uncommitted data) so it is not a case of "dirty reads" as such. This design decision (to allow allocation-ordered scans under read uncommitted isolation) is seen by some people as rather controversial.

As a caveat, I should be clear that the more general risk of missing or double-counting committed rows is not confined to read uncommitted isolation. It is certainly possible to see similar effects under locking read committed and repeatable read (as we saw earlier) but this occurs via a different mechanism. Missing committed rows or encountering them multiple times due to an allocation-ordered scan over changing data is specific to using read uncommitted isolation.

Reading "Corrupt" Data

Results that seem to defy logic (and even check constraints!) are possible under locking read committed isolation (again, see this article by Craig Freedman for some examples). To summarize, the point is that locking read committed can see committed data from different points in time – even for a single row if, for example, the query plan uses techniques like index intersection.

These results may be unexpected, but they are completely in-line with the guarantee to only read committed data. There is just no getting away from the fact that higher data consistency guarantees require higher isolation levels.

Those examples may even be quite shocking, if you have not seen them before. The same outcomes are possible under read uncommitted isolation, of course, but allowing dirty reads adds an extra dimension: the results may include committed and uncommitted data from different points in time, even for the same row.

Going further, it is even possible for a read uncommitted transaction to read a single column value in a mixed state of committed and uncommitted data. This can occur when reading a LOB value (for example, xml, or any of the 'max' types) if the value is stored across multiple data pages. An uncommitted read can encounter committed or uncommitted data from different points in time on different pages, resulting in a final single-column value that is a mixture of values!

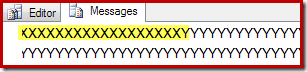

To take an example, consider a single varchar(max) column that initially contains 10,000 'x' characters. A concurrent transaction updates this value to 10,000 'y' characters. A read uncommitted transaction can read 'x' characters from one page of the LOB, and 'y' characters from another, resulting in a final read value containing a mixture of 'x' and 'y' characters. It is hard to argue that this does not represent reading "corrupt" data.

Demo

Create a clustered table with a single row of LOB data:

CREATE TABLE dbo.Test

(

RowID integer PRIMARY KEY,

LOB varchar(max) NOT NULL,

);

INSERT dbo.Test

(RowID, LOB)

VALUES

(1, REPLICATE(CONVERT(varchar(max), 'X'), 16100));

In a separate session, run the following script to read the LOB value at read uncommitted isolation:

-- Run this in session 2

SET NOCOUNT ON;

DECLARE

@ValueRead varchar(max) = '',

@AllXs varchar(max) = REPLICATE(CONVERT(varchar(max), 'X'), 16100),

@AllYs varchar(max) = REPLICATE(CONVERT(varchar(max), 'Y'), 16100);

WHILE 1 = 1

BEGIN

SELECT @ValueRead = T.LOB

FROM dbo.Test AS T WITH (READUNCOMMITTED)

WHERE T.RowID = 1;

IF @ValueRead NOT IN (@AllXs, @AllYs)

BEGIN

PRINT LEFT(@ValueRead, 8000);

PRINT RIGHT(@ValueRead, 8000);

BREAK;

END

END;

In the first session, run this script to write alternating values to the LOB column:

-- Run this in session 1

SET NOCOUNT ON;

DECLARE

@AllXs varchar(max) = REPLICATE(CONVERT(varchar(max), 'X'), 16100),

@AllYs varchar(max) = REPLICATE(CONVERT(varchar(max), 'Y'), 16100);

WHILE 1 = 1

BEGIN

UPDATE dbo.Test

SET LOB = @AllYs

WHERE RowID = 1;

UPDATE dbo.Test

SET LOB = @AllXs

WHERE RowID = 1;

END;

After a short time, the script in session two will terminate, having read a mixed state for the LOB value, for example:

This particular issue is confined to reads of LOB column values that are spread across multiple pages, not because of any guarantees provided by the isolation level, but because SQL Server happens to use page-level latches to ensure physical integrity. A side-effect of this implementation detail is that it prevents such "corrupt" data reads if the data for a single read operation happens to reside on a single page.

Depending on the version of SQL Server you have, if "mixed state" data is read for an xml column, you will either get an error resulting from the possibly-malformed xml result, no error at all, or the uncommitted-specific error 601, "could not continue scan with NOLOCK due to data movement." Reading mixed-state data for other LOB types does not generally result in an error message; the consuming application or query has no way to know it has just experienced the worst kind of dirty read. To complete the analysis, a non-LOB mixed-state row read as a result of an index intersection is never reported as an error.

The message here is that if you use read uncommitted isolation, you accept that dirty reads include the possibility of reading "corrupt" mixed-state LOB values.

The NOLOCK hint

I suppose no discussion of the read uncommitted isolation level would be complete without at least mentioning this (widely overused and misunderstood) table hint. The hint itself is just a synonym of the READUNCOMMITTED table hint. It performs exactly the same function: the object to which it is applied is accessed using read uncommitted isolation semantics (though there is an exception).

As far as the name "NOLOCK" is concerned, it simply means that no shared locks are taken when reading data. Other locks (schema stability, exclusive locks for data modification and so on) are still taken as normal.

Generally speaking, NOLOCK hints should be about as common as other per-object isolation level table hints like SERIALIZABLE and READCOMMITTEDLOCK. That is to say: not very common at all, and only used where there is no good alternative, a well-defined purpose to it, and a complete understanding of the consequences.

One example of a legitimate use of NOLOCK (or READUNCOMMITTED) is when accessing DMVs or other system views, where a higher isolation level might cause unwanted contention on non-user data structures. Another edge-case example might be where a query needs to access a significant portion of a large table, which is guaranteed to never experience data changes while the hinted query is executing. There would need to be a good reason not to use snapshot or read committed snapshot isolation instead, and the expected performance increases would need to be tested, validated, and compared with, say, using a single shared table lock hint.

The least desirable use of NOLOCK is the one that is unfortunately most common: applying it to every object in a query as a sort of go-faster magic switch. With the best will in the world, there is just no better way to make SQL Server code look decidedly amateurish. If you legitimately need read uncommitted isolation for a query, code block or module, it is probably better to set the session isolation level appropriately, and supply comments to justify the action.

Final Thoughts

Read uncommitted is a legitimate choice for transaction isolation level, but it does need to be an informed choice. As a reminder, here are some of the concurrency phenomena possible under the SQL Server default locking read committed isolation:

- Missing previously committed rows

- Committed rows encountered multiple times

- Different committed versions of the same row encountered in a single statement/query plan

- Committed data from different points in time in the same row (but different columns)

- Committed data reads that appear to contradict enabled and checked constraints

Depending on your point of view, that might be quite a shocking list of possible inconsistencies for the default isolation level. To that list, read uncommitted isolation adds:

- Dirty reads (encountering data that has not yet, and might never be, committed)

- Rows containing a mixture of committed and uncommitted data

- Missed/duplicate rows due to allocation-ordered scans

- Mixed-state ("corrupt") individual (single-column) LOB values

- Error 601 – "could not continue scan with NOLOCK due to data movement" (example).

If your primary transactional concerns are about the side-effects of locking read-committed isolation – blocking, locking overhead, reduced concurrency due to lock escalation and so on – you might be better served by a row-versioning isolation level like read committed snapshot isolation (RCSI) or snapshot isolation (SI). These are not free, however, and updates under RCSI in particular have some counter-intuitive behaviours.

For scenarios that demand the very highest levels of consistency guarantees, serializable remains the only safe choice. For performance-critical operations on read-only data (for example, large databases that are effectively read-only between ETL windows), explicitly setting the database to READ_ONLY can be a good choice as well (shared locks are not taken when the database is read only, and there is no risk of inconsistency).

There will also be a relatively small number of applications for which read uncommitted isolation is the right choice. These applications need to be happy with approximate results and the possibility of occasionally inconsistent, apparently invalid (in terms of constraints), or "arguably corrupt" data. If data changes relatively infrequently, the risk of these inconsistencies is correspondingly lower as well.

Hi Paul, thank you for the article, lots of details.

A note regarding one of the subtleties – namely, the different impact on data consistency of Allocation Order Scans (AOS) vs Index/Range Order Scans (IOS).

I remember I have once come up with the following conclusion: AOS should be considered more vulnerable to the concurrency phenomena (i.e. missing committed rows and reading duplicate rows) than IOS. The reason is that whereas AOS and IOS are both prone to these phenomena resulting from new index pages being added to a b-tree, only AOS is prone to these phenomena resulting from page splits.

Would you agree with the accuracy of this statement?

Yes I would :)

One genuinely useful application for read uncommitted isolation is debugging: while stepping through a complex stored procedure using Visual Studio or SSMS, I can interrogate its not-yet-committed effects on the database from a separate session.

That's essentially a poor man's "immediate window" – hopefully we'll get a real one at some point…

Well, that works fine if you're the only developer doing that. What if two developers are debugging the same stored procedure (or different stored procedures that touch the same tables) at the same time? :-)

Fortunately, we mostly use LocalDB for development. :)

If the risk of using corrupted data with READ UNCOMMITED is so high, why do large applications like SAP use it so often? Maybe because their customers do not know that the data presented to them through these appliacations might be inconsistent or even plain wrong? SAP is even using a separate connection to perform most of its SELECTs using this isolation level, I guess to avoid lock contention and 'boost' SQL performance. Or do they implement their own data locking mechanism parallel to that of SQL Server, to circumvent some of it limitations or tune it for their specific needs? It seems strange to me as a DBA to trust data that has been requested using the READ UNCOMMITED isolation level, even if they have gone throught great lengths to avoid corruption by using some smart application level logic, knowing how hard it is to get concurrency right in applications. But hey, I am both a DBA and a programmer, not an every day SAP user, so maybe I am somewhat too cautious about this …

READ UNCOMMITED can also be useful for static data. We use a snapshot of yesterdays data for most reports. Since this data is static from midnight to midnight we put this at the top of sp's to avoid the cost of locking. It can also be useful on tables that rarely change once you have considered the issues if it does change. Too many people say 'never use' when there are always uses, just not very common.

The last time I was on an SAP implementation they promised that they did their own internal locking. I never was able to test this.

On the flip side, you say NOLOCK Is always safe for static data, because the data is always static. But then you have some bulk activity or rebuild or something that makes the data *not* static, say, during the running of a certain report. This type of thing can always happen, just not very common. RCSI is still safer.