SQL Server provides two physical implementations of the read committed isolation level defined by the SQL standard, locking read committed and read committed snapshot isolation (RCSI). While both implementations meet the requirements laid down in the SQL standard for read committed isolation behaviours, RCSI has quite different physical behaviours from the locking implementation we looked at in the previous post in this series.

Logical Guarantees

The SQL standard requires that a transaction operating at the read committed isolation level not experience any dirty reads. Another way to express this requirement is to say a read committed transaction must only encounter committed data.

The standard also says that read committed transactions might experience the concurrency phenomena known as non-repeatable reads and phantoms (though they are not actually required to do so). As it happens, both physical implementations of read committed isolation in SQL Server can experience non-repeatable reads and phantom rows, though the precise details are quite different.

A point-in-time view of committed data

If the database option READ_COMMITTED_SNAPSHOT is ON, SQL Server uses a row-versioning implementation of the read committed isolation level. When this is enabled, transactions requesting read committed isolation automatically use the RCSI implementation; no changes to existing T-SQL code are required to use RCSI. Note carefully, though, that this is not the same as saying that code will behave the same under RCSI as when using the locking implementation of read committed. In fact, this is quite generally not the case.
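As a quick check, the current setting can be read from the sys.databases catalog view. This is a minimal sketch; the database name Sandpit is borrowed from the demonstration script later in this post, so substitute your own.

```sql
-- Returns 1 in is_read_committed_snapshot_on when read committed
-- requests in this database use row versioning (RCSI),
-- and 0 when they use the locking implementation.
SELECT
    D.name,
    D.is_read_committed_snapshot_on
FROM sys.databases AS D
WHERE D.name = N'Sandpit'; -- example database name, adjust as needed
```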

There is nothing in the SQL standard that requires the data read by a read committed transaction to be the most-recently committed data. The SQL Server RCSI implementation takes advantage of this to provide transactions with a point-in-time view of committed data, where that point in time is the moment the current statement began execution (not the moment any containing transaction started).

This is quite different from the behaviour of the SQL Server locking implementation of read committed, where the statement sees the most-recently committed data as of the moment each item is physically read. Locking read committed releases shared locks as quickly as possible, so the set of data encountered may come from very different points in time.

To summarize, locking read committed sees each row as it was at the time it was briefly locked and physically read; RCSI sees all rows as they were at the time the statement began. Both implementations are guaranteed to never see uncommitted data, but the data they encounter can be very different.

The implications of a point-in-time view

Seeing a point-in-time view of committed data might seem self-evidently superior to the more complex behaviour of the locking implementation. It is clear, for example, that a point-in-time view cannot suffer from the problems of missing rows or encountering the same row multiple times, which are both possible under locking read committed isolation.

A second important advantage of RCSI is that it does not acquire shared locks when reading data, because the data comes from the row version store rather than being accessed directly. The lack of shared locks can dramatically improve concurrency by eliminating conflicts with concurrent transactions looking to acquire incompatible locks. This advantage is commonly summarized by saying that readers do not block writers under RCSI, and vice-versa. As a further consequence of reducing blocking due to incompatible lock requests, the opportunity for deadlocks is usually greatly reduced when running under RCSI.

However, these benefits do not come without costs and caveats. For one thing, maintaining versions of committed rows consumes system resources, so it is important that the physical environment is configured to cope with this, primarily in terms of tempdb performance and memory/disk space requirements.
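One rough way to keep an eye on this cost is to check how much tempdb space the version store has reserved. The sketch below assumes row versions are being kept in tempdb and converts the reserved page count to megabytes using the 8 KB page size. Treat it as a starting point for monitoring rather than a complete solution.

```sql
USE tempdb;
GO
-- Each page is 8 KB, so pages * 8 / 1024 gives megabytes.
-- The view returns one row per tempdb data file, hence the SUM.
SELECT
    SUM(FSU.version_store_reserved_page_count) * 8 / 1024.0
        AS version_store_mb
FROM sys.dm_db_file_space_usage AS FSU;
```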

The second caveat is a little more subtle: RCSI provides a snapshot view of committed data as it was at the start of the statement, but there is nothing to prevent the real data from being changed (and those changes committed) while the RCSI statement is executing. There are no shared locks, remember. An immediate consequence of this second point is that T-SQL code running under RCSI might make decisions based on out of date information, as compared with the current committed state of the database. We will talk more about this shortly.

There is one last (implementation-specific) observation I want to make about RCSI before we move on. Scalar and multi-statement functions execute using a different internal T-SQL context from the containing statement. This means that the point-in-time view seen inside a scalar or multi-statement function invocation can be later than the point-in-time view seen by the rest of the statement. This can result in unexpected inconsistencies, as different parts of the same statement see data from different points in time. This weird and confusing behaviour does not apply to in-line functions, which see the same snapshot as the statement they appear in.

Non-repeatable reads and phantoms

Given a statement-level point-in-time view of the committed state of the database, it might not be immediately apparent how a read committed transaction under RCSI might experience the non-repeatable read or phantom row phenomena. Indeed, if we limit our thinking to the scope of a single statement, neither of these phenomena is possible under RCSI.

Reading the same data multiple times within the same statement under RCSI will always return the same data values, no data will disappear between those reads, and no new data will appear either. If you are wondering what sort of statement might read the same data more than once, think about queries that reference the same table more than once, perhaps in a subquery.
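As an illustration, here is a hypothetical query that reads the same table twice within one statement. It reuses the dbo.Invoices table and TotalDue column from the overdue invoices example in this series; the query itself is only a sketch and is not part of the original examples.

```sql
-- dbo.Invoices is referenced twice: once in the outer query
-- and once in the subquery that computes the average.
-- Under RCSI, both references read from the same statement-level
-- snapshot, so the average is computed over exactly the rows
-- (and values) that the outer query sees.
SELECT
    I.InvoiceNumber,
    I.TotalDue
FROM dbo.Invoices AS I
WHERE I.TotalDue >
(
    SELECT AVG(I2.TotalDue)
    FROM dbo.Invoices AS I2
);
```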

Statement-level read consistency is an obvious consequence of the reads being issued against a fixed snapshot of the data. The reason that RCSI does not provide protection from non-repeatable reads and phantoms is that these SQL standard phenomena are defined at the transaction level. Multiple statements within a transaction running at RCSI may see different data, because each statement sees a point-in-time view as of the moment that particular statement started.

To summarize, each statement within an RCSI transaction sees a static committed data set, but that set can change between statements inside the same transaction.
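The following two-session sketch shows a non-repeatable result across statements in one RCSI transaction. It assumes READ_COMMITTED_SNAPSHOT is ON, and it reuses the dbo.Payments table from the overdue invoices example. Invoice number 1 and the payment amount are made up for illustration, and the insert assumes the table has no other required columns.

```sql
-- Session 1: start a transaction and run the first statement
SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
BEGIN TRANSACTION;

SELECT SUM(P.Amount) AS TotalPaid
FROM dbo.Payments AS P
WHERE P.InvoiceNumber = 1; -- snapshot taken as this statement starts

-- Session 2: commit a new payment while session 1 is still open
-- (hypothetical values; runs as an auto-commit transaction)
INSERT dbo.Payments (InvoiceNumber, Amount)
VALUES (1, 50);

-- Session 1: same query, same transaction, new statement
SELECT SUM(P.Amount) AS TotalPaid
FROM dbo.Payments AS P
WHERE P.InvoiceNumber = 1; -- new snapshot, so the new payment is included

COMMIT TRANSACTION;
```

The second SELECT returns a different total because its snapshot was taken after session 2 committed, even though both statements run inside the same transaction.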

Out-of-date data

The possibility of our T-SQL code making an important decision based on out-of-date information is more than a little unsettling. Consider for a moment that the point-in-time snapshot used by a single statement running under RCSI might be arbitrarily old.

A statement that runs for a considerable period of time will continue to see the committed state of the database as it was when the statement began. Meanwhile, the statement is missing all the committed changes that occurred in the database since that time.

This is not to say that problems associated with accessing stale data under RCSI are limited to long-running statements, but the issues certainly might be more pronounced in such cases.

A question of timing

This issue of out-of-date data applies to all RCSI statements in principle, no matter how quickly they might complete. However small the time window is, there is always a chance that a concurrent operation might modify the data set we are working with, without us being aware of that change. Let us look again at one of the simple examples we used before when exploring the behaviour of locking read committed:

    INSERT dbo.OverdueInvoices
    SELECT I.InvoiceNumber
    FROM dbo.Invoices AS I
    WHERE I.TotalDue >
    (
        SELECT SUM(P.Amount)
        FROM dbo.Payments AS P
        WHERE P.InvoiceNumber = I.InvoiceNumber
    );

When run under RCSI, this statement cannot see any committed database modifications that occur after the statement starts executing. We will not encounter the problems of missed or multiply-encountered rows possible under the locking implementation. However, a concurrent transaction might add a payment after the statement above starts executing, and that payment ought to prevent a customer from being sent a stern warning letter about an overdue payment.

You can probably think of many other potential problems that might occur in this scenario, or in others that are conceptually similar. The longer the statement runs for, the more out-of-date its view of the database becomes, and the greater the scope for possibly-unintended consequences.

Of course, there are plenty of mitigating factors in this specific example. The behaviour might well be seen as perfectly acceptable. After all, sending a reminder letter because a payment arrived a few seconds too late is an easily defended action. The principle remains, however.

Business Rule Failures and Integrity Risks

More serious issues can arise from the use of out-of-date information than sending a warning letter a few seconds early. A good example of this class of weakness can be seen with trigger code used to enforce an integrity rule that is perhaps too complex to enforce with declarative referential integrity constraints. To illustrate, consider the following code, which uses a trigger to enforce a variation of a foreign key constraint, but one that enforces the relationship for only certain child table rows:

    ALTER DATABASE Sandpit
    SET READ_COMMITTED_SNAPSHOT ON
    WITH ROLLBACK IMMEDIATE;
    GO
    SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
    GO
    CREATE TABLE dbo.Parent (ParentID integer PRIMARY KEY);
    GO
    CREATE TABLE dbo.Child
    (
        ChildID integer IDENTITY PRIMARY KEY,
        ParentID integer NOT NULL,
        CheckMe bit NOT NULL
    );
    GO
    CREATE TRIGGER dbo.Child_AI
    ON dbo.Child
    AFTER INSERT
    AS
    BEGIN
        -- Child rows with CheckMe = true
        -- must have an associated parent row
        IF EXISTS
        (
            SELECT ins.ParentID
            FROM inserted AS ins
            WHERE ins.CheckMe = 1
            EXCEPT
            SELECT P.ParentID
            FROM dbo.Parent AS P
        )
        BEGIN
            RAISERROR ('Integrity violation!', 16, 1);
            ROLLBACK TRANSACTION;
        END
    END;
    GO
    -- Insert parent row #1
    INSERT dbo.Parent (ParentID) VALUES (1);

Now consider a transaction running in another session (use another SSMS window for this if you are following along) which deletes parent row #1, but does not commit yet:

    SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
    BEGIN TRANSACTION;
    DELETE FROM dbo.Parent
    WHERE ParentID = 1;

Back in our original session, we try to insert a (checked) child row that references this parent:

    INSERT dbo.Child (ParentID, CheckMe)
    VALUES (1, 1);

The trigger code executes, but because RCSI sees only committed data as of the time the statement started, it still sees the parent row (not the uncommitted deletion) and the insert succeeds!

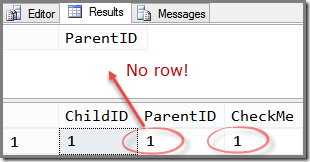

The transaction that deleted the parent row can now commit its change successfully, leaving the database in an inconsistent state in terms of our trigger logic:

    COMMIT TRANSACTION;
    SELECT P.* FROM dbo.Parent AS P;
    SELECT C.* FROM dbo.Child AS C;

This is a simplified example of course, and one which could easily be circumvented using the built-in constraint facilities. Much more complex business rules and pseudo-integrity constraints can be written inside and outside of triggers. The potential for incorrect behaviour under RCSI should be obvious.
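Once the steps above have been run, the inconsistency can be confirmed directly. This minimal sketch uses only the tables from the example and lists checked child rows that have no matching parent:

```sql
-- Checked child rows (CheckMe = 1) whose parent no longer exists.
-- After the demonstration above, this should return the child row
-- that references ParentID = 1.
SELECT
    C.ChildID,
    C.ParentID
FROM dbo.Child AS C
WHERE C.CheckMe = 1
AND NOT EXISTS
(
    SELECT 1
    FROM dbo.Parent AS P
    WHERE P.ParentID = C.ParentID
);
```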

Blocking behaviour and latest-committed data

I mentioned earlier that T-SQL code is not guaranteed to behave in the same way under RCSI read committed as it did using the locking implementation. The preceding trigger code example is a good illustration of that, but I need to emphasise that the general problem is not limited to triggers.

RCSI is typically not a good choice for any T-SQL code whose correctness depends on blocking if a concurrent uncommitted change exists. RCSI might also not be the right choice if the code depends on reading current committed data, rather than the latest committed data as at the time the statement started. These two considerations are related, but they are not the same thing.

Locking read committed under RCSI

SQL Server provides one way to request locking read committed when RCSI is enabled, using the table hint READCOMMITTEDLOCK. We can modify our trigger to avoid the problems shown above by adding this hint to the table that needs blocking behaviour to perform correctly:

    ALTER TRIGGER dbo.Child_AI
    ON dbo.Child
    AFTER INSERT
    AS
    BEGIN
        -- Child rows with CheckMe = true
        -- must have an associated parent row
        IF EXISTS
        (
            SELECT ins.ParentID
            FROM inserted AS ins
            WHERE ins.CheckMe = 1
            EXCEPT
            SELECT P.ParentID
            FROM dbo.Parent AS P WITH (READCOMMITTEDLOCK) -- NEW!!
        )
        BEGIN
            RAISERROR ('Integrity violation!', 16, 1);
            ROLLBACK TRANSACTION;
        END
    END;

With this change in place, the attempt to insert the potentially-orphaned child row blocks until the deleting transaction commits (or aborts). If the delete commits, the trigger code detects the integrity violation and raises the expected error.
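To try this, the earlier two-session test can be repeated against the modified trigger. The sketch below assumes the first demonstration has already been run, so it starts by resetting the two tables. The session labels are comments only; run each part in the SSMS window indicated.

```sql
-- Either session: reset the test data left by the earlier demonstration
DELETE dbo.Child;
IF NOT EXISTS (SELECT 1 FROM dbo.Parent WHERE ParentID = 1)
    INSERT dbo.Parent (ParentID) VALUES (1);

-- Session 2: delete the parent row, but do not commit yet
BEGIN TRANSACTION;
DELETE FROM dbo.Parent
WHERE ParentID = 1;

-- Session 1: this insert now waits, because the trigger's read of
-- dbo.Parent uses READCOMMITTEDLOCK and needs a shared lock
INSERT dbo.Child (ParentID, CheckMe)
VALUES (1, 1);

-- Session 2: commit the delete, releasing the lock
COMMIT TRANSACTION;

-- Session 1: the trigger now sees that the parent row is gone,
-- raises 'Integrity violation!' and rolls back the insert
```

If session 2 issues ROLLBACK TRANSACTION instead of COMMIT TRANSACTION, the parent row survives and the insert in session 1 completes normally.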

Identifying queries that might not perform correctly under RCSI is a non-trivial task that may require extensive testing to get right (and please remember these issues are quite general and not confined to trigger code!). Also, adding the READCOMMITTEDLOCK hint to every table that needs it can be a tedious and error-prone process. Until SQL Server provides a more broadly-scoped option to request the locking implementation where needed, we are stuck with using the table hints.

Next Time

The next post in this series continues our examination of read committed snapshot isolation, with a look at the surprising behaviour of data modification statements under RCSI.

Very interesting. Thanks for the article.

Thank you very much for your thoughtful article. I learned so many things here. The most important of which is not to jump in using a new feature or technology for any situation, without first thoroughly examining the consequences.

Regarding your trigger example, however, I think the problematic situation is not a consequence of using RCSI. The core of the problem is the lack of data integrity enforcement when deleting rows from the Parent table. In your example, the row is deleted a fraction of a second after the trigger has finished execution, but it could be deleted 10 days later and still leave the database in exactly the same inconsistent state, whether you use RCSI or not.

So, using a locking mechanism in your scenario is only a fix for the generally low-probability case where the trigger and the deleting transaction happen to be executed in that specific order and timing.

Nevertheless, I think your example conveys the point you are trying to make. Thank you again.

(I really do love this series and I am learning a ton. Thank you so much for writing this!)

One concern that I have with this particular entry in the series, is that I found this in the documentation of snapshot isolation on the MSDN Developer Network Site:

Snapshot isolation uses an optimistic concurrency model. If a snapshot transaction attempts to commit modifications to data that has changed since the transaction began, the transaction will roll back and an error will be raised. You can avoid this by using UPDLOCK hints for SELECT statements that access data to be modified. See "Locking Hints" in SQL Server Books Online for more information.

I assume that the same is true for Read Committed Snapshot Isolation. I see no mention of optimistic locking in this article and wonder if that has an impact on the description here. Suppose you update an older version of a row in a long-running transaction, and then a fast-running transaction sneaks in and updates the same row. With optimistic locking, a database engine would recognize that the row version that the long-running transaction is trying to change is not the same version that is now committed in the database. With optimistic locking in this mode of operation, the database will roll back the transaction if it encounters this situation. So you won't have update conflicts. But I can see that if one process changes the parent row and the other changes the child row there could be inconsistencies. I think that is what is described above. But I do feel that people need to understand all aspects of this isolation level and not just the issues.

Thank you for the comment Aaron. Indeed things work differently for updates under RCSI (largely because RCSI's use of versions is scoped to a single statement). See my later article in the series http://sqlperformance.com/2014/05/t-sql-queries/data-modifications-under-rcsi for more details. I'm glad you're enjoying the series so far!

Your example with the trigger is strange. In a real database I would have a foreign key between Child.ParentID and Parent.ParentID, and the INSERT would wait and end with an error, with no need for a TRIGGER and CheckMe.

Whether or not RCSI is on or off doesn't change much. As long as the decision making is not performed while a lock is held then there could be inconsistencies.

E.g.

DECLARE @status int = (SELECT status …);

IF @status = 1 DO SOMETHING;

Here @status has to be considered potentially stale whether an S lock was acquired or not. Every time you run that kind of logic in a READ COMMITTED transaction you are subject to invariant violations.

The only way to prevent violations is to run in SERIALIZABLE or use optimistic locking.