When an execution plan includes a scan of a b-tree index structure, the storage engine may be able to choose between two physical access strategies when the plan is executed:

- Follow the index b-tree structure; or,

- locate pages using internal page allocation information.

Where a choice is available, the storage engine makes the runtime decision on each execution. A plan recompilation is not required for it to change its mind.

The b-tree strategy starts at the root of the tree, descends to an extreme edge of the leaf level (depending on whether the scan is forward or backward), then follows leaf-level page links until the other end of the index is reached. The allocation strategy uses Index Allocation Map (IAM) structures to locate database pages allocated to the index. Each IAM page maps allocations to a 4GB interval in a single physical database file, so scanning the IAM chains associated with an index tends to access index pages in physical file order (at least as far as SQL Server can tell).

The main differences between the two strategies are:

- A b-tree scan can deliver rows to the query processor in index key order; an IAM-driven scan cannot;

- a b-tree scan may not be able to issue large read-ahead I/O requests if logically contiguous index pages are not also physically contiguous (e.g. as a result of page splitting in the index).

A b-tree scan is always available for an index. The conditions often cited for allocation order scans to be available are:

- The query plan must allow an unordered scan of the index;

- the index must be at least 64 pages in size; and,

- either a

TABLOCKorNOLOCKhint must be specified.

The first condition simply means that the query optimizer must have marked the scan with the Ordered:False property. Marking the scan Ordered:False means that correct results from the execution plan do not require the scan to return rows in index key order (though it may do so if it is convenient or otherwise necessary).

The second condition (size) applies only to SQL Server 2005 and later. It reflects the fact that there is a certain start-up cost to performing an IAM-driven scan, so there needs to be a minimum number of pages for the potential savings to repay the initial investment. The “64 pages” refers to the value of data_pages for the IN_ROW_DATA allocation unit only, as reported in sys.allocation_units.

Of course, there can only be a payoff from an allocation order scan if the possibly-larger read-ahead considerations actually come into play, but SQL Server does not currently consider this factor. In particular, it does not account for how much of the index is currently in memory, nor does it care how fragmented the index is.

The third condition is probably the least complete description in the list. Hints are not in fact required, though they can be used to meet the real requirements: The data must be guaranteed not to change during the scan, or (more controversially) we must indicate that we do not care about potentially inaccurate results, by performing the scan at the read uncommitted isolation level.

Even with these clarifications, the list of conditions for an allocation-ordered scan is still not complete. There are a number of important caveats and exceptions, which we will come to shortly.

Demo

The following query uses the AdventureWorks sample database:

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

GO

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P;

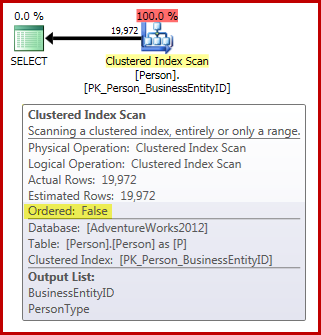

Note that the Person table contains 3,869 pages. The post-execution (actual) plan is as follows (shown in SQL Sentry Plan Explorer):

In terms of the allocation-order scanning requirements we have so far:

- The plan has the required

Ordered:Falseproperty; and, - the table has more than 64 pages; but,

- we have done nothing to ensure the data cannot change during the scan. Assuming our session is using the default read committed isolation level, the scan is not being performed at the read uncommitted isolation level either.

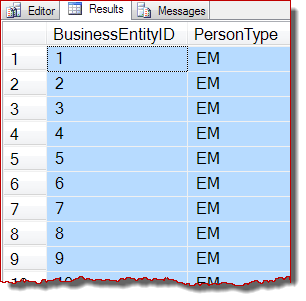

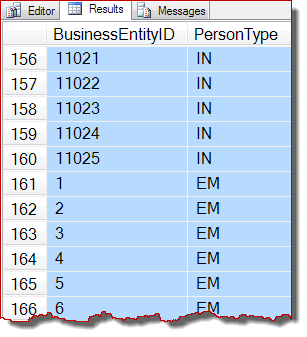

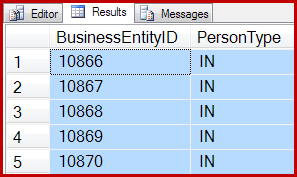

As a consequence, we would expect this scan to be performed by scanning the b-tree rather than being IAM-driven. The query results indicate that this is likely true:

The rows are returned in Clustered Index key order (by BusinessEntityID). I should state clearly that this result ordering is absolutely not guaranteed, and should not be relied on. Ordered results are only guaranteed by an appropriate top-level ORDER BY clause.

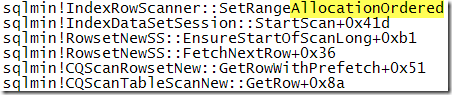

Nevertheless, the observed output order is circumstantial evidence that the scan was performed this time by following the clustered index b-tree structure. If more evidence is needed, we can attach a debugger and look at the code path SQL Server is executing during the scan:

The call stack clearly shows the scan following the b-tree.

Adding a table lock hint

We now modify the query to include a table-lock hint:

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P

WITH (TABLOCK);

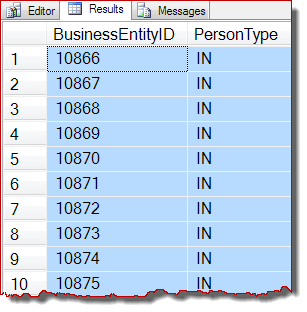

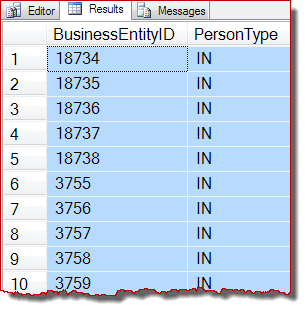

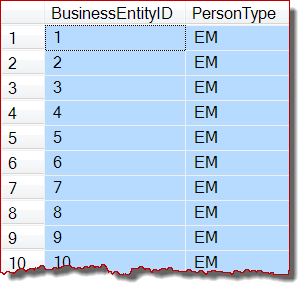

At the default locking read committed isolation level, the shared table-level lock prevents any possible concurrent modifications to the data. With all three preconditions for IAM-driven scans met, we would now expect SQL Server to use an allocation-order scan. The execution plan is the same as before, so I won’t repeat it, but the query results certainly look different:

The results are still apparently ordered by BusinessEntityID, but the starting point (10866) is different. Indeed, if we scroll down the results, we soon encounter sections that are more obviously out of key order:

The partial ordering is due to the allocation-order scan processing a whole index page at a time. The results within a page happen to be returned ordered by the index key, but the order of the scanned pages is now different. Again, I should stress that the results may look different for you: there is no guarantee of output order, even within a page, without a top-level ORDER BY on the original query.

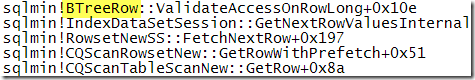

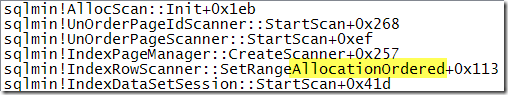

For comparison with the call stack shown earlier, this is a stack trace obtained while SQL Server was processing the query with the TABLOCK hint:

Stepping on a little further through the execution:

Clearly, SQL Server is performing an allocation-ordered scan when the table lock is specified. It is a shame there is no indication in a post-execution plan of which type of scan was used at runtime. As a reminder, the type of scan is chosen by the storage engine, and can change between executions without a plan recompilation.

Other ways to meet the third condition

I said before that to get an IAM-driven scan, we need to ensure the data cannot change underneath the scan while it is in progress, or we need to run the query at the read uncommitted isolation level. We have seen that a table lock hint at locking read committed isolation is sufficient to meet the first of those requirements, and it is easy to show that using a NOLOCK/READUNCOMMITTED hint also enables an allocation-order scan with the demo query.

In fact there are many ways to meet the third condition, including:

- Altering the index to only allow table locks;

- making the database read-only (so data is guaranteed not to change); or,

- changing the session isolation level to

READ UNCOMMITTED.

There are, however, much more interesting variations on this theme that mean we need to amend the three conditions stated previously…

Row-versioning isolation levels

Enable read committed snapshot isolation (RCSI) on the AdventureWorks database, and run the test with the TABLOCK hint again (at read committed isolation):

ALTER DATABASE AdventureWorks2012

SET READ_COMMITTED_SNAPSHOT ON

WITH ROLLBACK IMMEDIATE;

SET TRANSACTION ISOLATION LEVEL READ COMMITTED;

GO

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

GO

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P

WITH (TABLOCK);

GO

ALTER DATABASE AdventureWorks2012

SET READ_COMMITTED_SNAPSHOT OFF

WITH ROLLBACK IMMEDIATE;

With RCSI active, an index-ordered scan is used with TABLOCK, not the allocation-order scan we saw just before. The reason is the TABLOCK hint specifies a table-level shared lock, but with RCSI enabled, no shared locks are taken. Without the shared table lock, we have not met the requirement to prevent concurrent modifications to the data while the scan is in progress, so an allocation-ordered scan cannot be used.

Achieving an allocation-ordered scan when RCSI is enabled is possible, however. One way is to use a TABLOCKX hint (for a table-level exclusive lock) instead of TABLOCK. We could also retain the TABLOCK hint and add another one like READCOMMITTEDLOCK, or REPEATABLE READ or SERIALIZABLE … and so on. All these work by preventing the possibility of concurrent modifications by taking a shared table lock, at the cost of losing the benefits of RCSI. We can also still achieve an allocation-order scan using a NOLOCK or READUNCOMMITTED hint, of course.

The situation under snapshot isolation (SI) is very similar to RCSI, and not explored in detail for space reasons.

TABLESAMPLE always* performs an allocation-order scan

The TABLESAMPLE clause is an interesting exception to many of the things we have discussed so far.

Specifying a TABLESAMPLE clause always* results in an allocation-order scan, even under RCSI or SI, and even without hints. To be clear about it, the allocation-order scan that results from using TABLESAMPLE retains RCSI/SI semantics – the scan uses row versions and reading does not block writing (and vice versa).

A second surprise is that TABLESAMPLE always* performs an IAM-driven scan even if the table has fewer than 64 pages. This makes some sense because the documentation at least hints that the SYSTEM sampling method uses the IAM structure (so there is no choice but to do an allocation-order scan):

SYSTEM Is an implementation-dependent sampling method specified by ISO standards. In SQL Server, this is the only sampling method available and is applied by default. SYSTEM applies a page-based sampling method in which a random set of pages from the table is chosen for the sample, and all the rows on those pages are returned as the sample subset.

* An exception occurs if the ROWS or PERCENT specification in the TABLESAMPLE clause works out to mean 100% of the table. Specifying more ROWS than the metadata indicates are currently in the table will not work either. Using TABLESAMPLE SYSTEM (100 PERCENT) or equivalent will not force an allocation-order scan.

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

GO

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P

TABLESAMPLE SYSTEM (50 ROWS)

REPEATABLE (12345678)

--WITH (TABLOCK);

Results:

The effect of TOP and SET ROWCOUNT

In short, neither of these has any effect on the decision to use an allocation-order scan or not. This may seem surprising in cases where it is "obvious" that fewer than 64 pages will be scanned.

For example, the following queries both use an IAM-driven scan to return 5 rows from a scan:

SELECT TOP (5)

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P WITH (TABLOCK)

SET ROWCOUNT 5;

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P WITH (TABLOCK)

SET ROWCOUNT 0;

The results are the same for both:

This means that TOP and SET ROWCOUNT queries might incur the overhead of setting up an allocation-order scan, even if fewer than 64 pages are scanned. In mitigation, more complex TOP queries with selective predicates pushed into the scan could still benefit from an allocation-order scan. If the scan must process 10,000 pages to find the first 5 rows that match, an allocation-order scan could still be a win.

Preventing all* allocation-order scans instance-wide

This is not something you would ever likely do intentionally, but there is a server setting that will prevent allocation-order scans for all* user queries in all databases.

Unlikely as it may seem, the setting in question is the cursor threshold server configuration option, which has the following description in Books Online:

The cursor threshold option specifies the number of rows in the cursor set at which cursor keysets are generated asynchronously. When cursors generate a keyset for a result set, the query optimizer estimates the number of rows that will be returned for that result set. If the query optimizer estimates that the number of returned rows is greater than this threshold, the cursor is generated asynchronously, allowing the user to fetch rows from the cursor while the cursor continues to be populated. Otherwise, the cursor is generated synchronously, and the query waits until all rows are returned.

If the cursor threshold option is set to anything other than –1 (the default), no allocation-order scans will occur for user queries in any database on the SQL Server instance.

In other words, if asynchronous cursor population is enabled, no IAM-driven scans for you.

* The exception is (non-100%) TABLESAMPLE queries. The internal queries generated by the system for statistics creation and statistics updates also continue to use allocation-ordered scans.

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

GO

-- WARNING! Disables allocation-order scans instance-wide

EXECUTE sys.sp_configure

@configname = 'cursor threshold',

@configvalue = 5000;

RECONFIGURE WITH OVERRIDE;

GO

-- Would normally result in an allocation-order scan

SELECT

P.BusinessEntityID,

P.PersonType

FROM Person.Person AS P

WITH (READUNCOMMITTED);

GO

-- Reset to default allocation-order scans

EXECUTE sys.sp_configure

@configname = 'cursor threshold',

@configvalue = -1;

RECONFIGURE WITH OVERRIDE;

Results (no allocation-order scan):

One can only guess that asynchronous cursor population does not work well with allocation-order scans for some reason. It is entirely unexpected that this restriction would affect all non-cursor user queries as well though. Perhaps it is too hard for SQL Server to detect if a query is running as part of an externally-issued API cursor? Who knows.

It would be nice if this side-effect were officially documented somewhere, though it is hard to know exactly where it should go in Books Online. I wonder how many production systems out there are not using allocation-order scans because of this? Maybe not many, but you never know.

To wrap things up, here is a summary. An allocation-ordered scan is available if:

- The server option

cursor thresholdis set to –1 (the default); and, - the query plan scan operator has the

Ordered:Falseproperty; and, - the total data_pages of the

IN_ROW_DATAallocation units is at least 64; and, - either:

- SQL Server has an acceptable guarantee that concurrent modifications are impossible; or,

- the scan is running at the read uncommitted isolation level.

Regardless of all the above, a scan with a TABLESAMPLE clause always uses allocation-ordered scans (with the one technical exception noted in the main text).

Why don't read only filegroups enable allocation order scans for indexes purely contained in such filegroups? Or for those partitions that are in a read only file group.

If you have a datawarehouse being loaded daily, would it be a good idea to set it to 'readonly' at the end of the ETL process?

I read a lot of places where people warn against using NOLOCK or transaction isolation levels other than the default, but does that really apply to a system not being written-to during operation?

Is retrieving data in allocation order always a good thing? Does the read-ahead reads benefit you mentioned apply to SSDs? What if your query uses the data retrieved from this table and then uses it to join to another table. If you retrieve the data where Ordered=True then perhaps you will get a fast merge join without a sort operator. If you get the data in allocation order maybe it will force a sort, or use a hash instead. What are the pros & cons of these things?

Not that you haven't thoroughly covered this topic in this blog post (which is awesome btw) I just have more questions!

Maybe because the IAM pages that drive the scan aren't guaranteed to be in the same filegroup? I don't really know. It could also simply be an implementation gap. One thing's for sure: it doesn't work that way today.

Setting the DW to read only at the end of the ETL process might be a good idea, yes. One of the factors to consider in making that decision would be the usefulness of allocation-order scans. A database that isn't being written to doesn't have to worry about isolation levels, no. At least, not in terms of transaction isolation guarantees.

No, retrieving data in allocation order is not always a good thing. There is a definite start-up cost, so the strategy only pays off if larger I/O is actually realized, as I mentioned in the main text. Yes, larger I/O is generally beneficial whatever the underlying storage system is. The query optimizer accounts for some ordered-data possibilities when deciding on the final plan, but you're right to mention the possibility of order preservation because it is possible for the QO to miss opportunities in this area. That's not an observation that is specific to allocation-ordered scans though. It is sometimes possible for a skilled human to do better than the QO where order-preservation is a factor.

Thanks Paul, very helpful.

Just FYI – IAM pages will always be from the same filegroup, but not necessarily from the same file as the GAM interval they represent.

Is there any way to find out the type of the scan – b-tree or allocation order? Seems like there is nothing in execution plan indicating this.

Thank you, Paul!

No way I am aware of. I did look for things – extended events, trace flags etc. – but didn't find anything, hence the debugger shots in the article. Would be great if Microsoft were to add this information somewhere.

hi paul,

I have seen if the storage engine chooses Allocation Order Scan(IAM driven scan) then number of logical reads = number of pages in the table (data+index), when it uses B tree structure its data+index +1 in serial execution. In parallel version logical reads are data+index +x number of pages. can you explain it.