The fastest way to compute a median uses the SQL Server 2012 OFFSET extension to the ORDER BY clause. Running a close second, the next fastest solution uses a (possibly nested) dynamic cursor that works on all versions. This article looks at a common pre-2012 ROW_NUMBER solution to the median calculation problem to see why it performs less well, and what can be done to make it go faster.

Single Median Test

The sample data for this test consists of a single ten million row table (reproduced from Aaron Bertrand's original article):

CREATE TABLE dbo.obj

(

id integer NOT NULL IDENTITY(1,1),

val integer NOT NULL

);

INSERT dbo.obj WITH (TABLOCKX)

(val)

SELECT TOP (10000000)

AO.[object_id]

FROM sys.all_columns AS AC

CROSS JOIN sys.all_objects AS AO

CROSS JOIN sys.all_objects AS AO2

WHERE AO.[object_id] > 0

ORDER BY

AC.[object_id];

CREATE UNIQUE CLUSTERED INDEX cx

ON dbo.obj(val, id);

The OFFSET solution

To set the benchmark, here is the SQL Server 2012 (or later) OFFSET solution created by Peter Larsson:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT

Median = AVG(1.0 * SQ1.val)

FROM

(

SELECT O.val

FROM dbo.obj AS O

ORDER BY O.val

OFFSET (@Count - 1) / 2 ROWS

FETCH NEXT 1 + (1 - (@Count % 2)) ROWS ONLY

) AS SQ1;

SELECT Peso = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

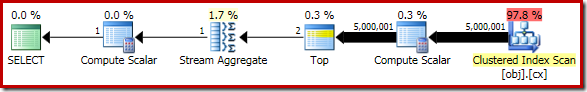

The query to count the rows in the table is commented out and replaced with a hard-coded value so as to concentrate on the performance of the core code. With a warm cache and execution plan collection turned off, this query runs for 910 ms on average on my test machine. The execution plan is shown below:

As a side note, it is interesting that this moderately complex query qualifies for a trivial plan:

The ROW_NUMBER Solution

For systems running SQL Server 2008 R2 or earlier, the best-performing of the alterative solutions uses a dynamic cursor as mentioned previously. If you are unable (or unwilling) to consider that as an option, it is natural to think about emulating the 2012 OFFSET execution plan using ROW_NUMBER.

The basic idea is to number the rows in the appropriate order, then filter for just the one or two rows needed to compute the median. There are several ways to write this in Transact SQL; a compact version that captures all the key elements is as follows:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT AVG(1.0 * SQ1.val) FROM

(

SELECT

O.val,

rn = ROW_NUMBER() OVER (

ORDER BY O.val)

FROM dbo.obj AS O

) AS SQ1

WHERE

SQ1.rn BETWEEN (@Count + 1)/2 AND (@Count + 2)/2;

SELECT Pre2012 = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

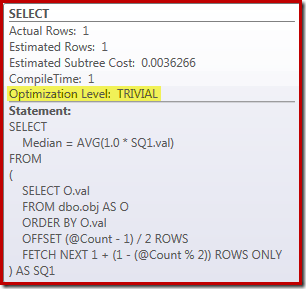

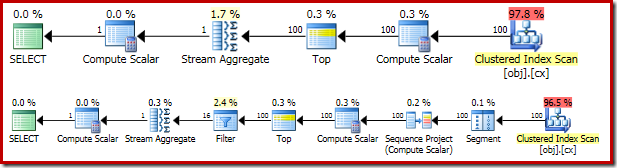

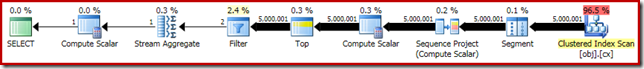

The resulting execution plan is quite similar to the OFFSET version:

It is worth looking at each of the plan operators in turn to understand them fully:

- The Segment operator is redundant in this plan. It would be required if the

ROW_NUMBERranking function had aPARTITION BYclause, but it does not. Even so, it remains in the final plan. - The Sequence Project adds a calculated row number to the stream of rows.

- The Compute Scalar defines an expression associated with the need to implicitly convert the

valcolumn to numeric so it can be multiplied by the constant literal1.0in the query. This computation is deferred until needed by a later operator (which happens to be the Stream Aggregate). This runtime optimization means the implicit conversion is only performed for the two rows processed by the Stream Aggregate, not the 5,000,001 rows indicated for the Compute Scalar. - The Top operator is introduced by the query optimizer. It recognises that at most, only the first

(@Count + 2) / 2rows are needed by the query. We could have added aTOP ... ORDER BYin the subquery to make this explicit, but this optimization makes that largely unnecessary. - The Filter implements the condition in the

WHEREclause, filtering out all but the two 'middle' rows needed to compute the median (the introduced Top is also based on this condition). - The Stream Aggregate computes the

SUMandCOUNTof the two median rows. - The final Compute Scalar computes the average from the sum and count.

Raw Performance

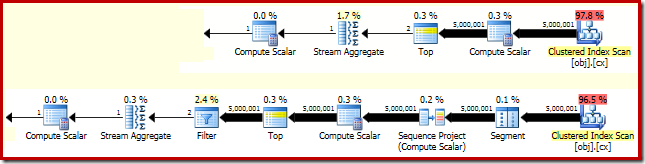

Compared with the OFFSET plan, we might expect that the additional Segment, Sequence Project, and Filter operators are going to have some adverse effect on performance. It is worth taking a moment to compare the estimated costs of the two plans:

The OFFSET plan has an estimated cost of 0.0036266 units, while the ROW_NUMBER plan is estimated at 0.0036744 units. These are very small numbers, and there is little difference between the two.

So, it is perhaps surprising that the ROW_NUMBER query actually runs for 4000 ms on average, compared with 910 ms average for the OFFSET solution. Some of this increase can surely be explained by the overhead of the extra plan operators, but a factor of four seems excessive. There must be more to it.

You have probably also noticed that the cardinality estimates for both estimated plans above are pretty hopelessly wrong. This is due to the effect of the Top operators, which have an expression referencing a variable as their row count limits. The query optimizer cannot see the contents of variables at compilation time, so it resorts to its default guess of 100 rows. Both plans actually encounter 5,000,001 rows at runtime.

This is all very interesting, but it does not directly explain why the ROW_NUMBER query is more than four times slower than the OFFSET version. After all, the 100 row cardinality estimate is just as wrong in both cases.

Improving the performance of the ROW_NUMBER solution

In my previous article, we saw how the performance of the grouped median OFFSET test could be almost doubled by simply adding a PAGLOCK hint. This hint overrides the storage engine's normal decision to acquire and release shared locks at the row granularity (due to the low expected cardinality).

As a further reminder, the PAGLOCK hint was unnecessary in the single median OFFSET test due to a separate internal optimization that can skip row level shared locks, resulting in only a small number of intent-shared locks being taken at the page level.

We might expect the ROW_NUMBER single median solution to benefit from the same internal optimization, but it does not. Monitoring locking activity while the ROW_NUMBER query executes, we see over half a million individual row level shared locks being taken and released.

So, now we know what the problem is, we can improve the locking performance in the same way we did previously: either with a PAGLOCK lock granularity hint, or by increasing the cardinality estimate using documented trace flag 4138.

Disabling the "row goal" using the trace flag is the less satisfactory solution for several reasons. First, it is only effective in SQL Server 2008 R2 or later. We would most likely prefer the OFFSET solution in SQL Server 2012, so this effectively limits the trace flag fix to SQL Server 2008 R2 only. Second, applying the trace flag requires administrator-level permissions, unless applied via a plan guide. A third reason is that disabling row goals for the whole query may have other undesirable effects, especially in more complex plans.

By contrast, the PAGLOCK hint is effective, available in all versions of SQL Server without any special permissions, and does not have any major side effects beyond locking granularity.

Applying the PAGLOCK hint to the ROW_NUMBER query increases performance dramatically: from 4000 ms to 1500 ms:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT AVG(1.0 * SQ1.val) FROM

(

SELECT

O.val,

rn = ROW_NUMBER() OVER (

ORDER BY O.val)

FROM dbo.obj AS O WITH (PAGLOCK) -- New!

) AS SQ1

WHERE

SQ1.rn BETWEEN (@Count + 1)/2 AND (@Count + 2)/2;

SELECT Pre2012 = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

The 1500 ms result is still significantly slower than the 910 ms for the OFFSET solution, but at least it is now in the same ballpark. The remaining performance differential is simply due to the extra work in the execution plan:

In the OFFSET plan, five million rows are processed as far as the Top (with the expressions defined at the Compute Scalar deferred as discussed earlier). In the ROW_NUMBER plan, the same number of rows have to be processed by the Segment, Sequence Project, Top, and Filter.

It seems to be a feature gap that SQL Server cannot convert between the handful of common patterns for paging. Paging is a very common thing after all. To me it made no sense when I found out that OFFSET almost always results in a different plan despite identical semantics.

Yes, but I believe this 'feature gap' to be deliberate. Consider that in maybe 99% of cases rewriting common

ROW_NUMBERpatterns to useOFFSETwould boost performance. You can bet the other 1% will occur at large, loud customers that are looking above all else for plan stability when upgrading.The possibility of regressions (especially in more complex plans) would mean providing something like a trace flag, query hint, or compatibility level tie-in so people could 'un-apply' the transform if and when that 1% strikes. It is probably safer all round to rely on customers making a conscious choice to move to

OFFSETwith all the actual testing that should involve.So, the more modern syntax is not guaranteed to offer better performance in all cases; I showed in an earlier article that the offset solution loses to a nested cursor unless a hint is used. That was due to a subtle interaction of engine features – I wouldn't want to be the one responsible for checking all those before introducing such a rewrite into the optimizer.