A simple definition of the median is:

To flesh that out a little, we can find the median of a list of numbers using the following procedure:

- Sort the numbers (in ascending or descending order, it does not matter which).

- The middle number (by position) in the sorted list is the median.

- If there are two "equally middle" numbers, the median is the average of the two middle values.

Aaron Bertrand has previously performance-tested several ways to compute the median in SQL Server:

Rob Farley recently added another approach aimed at pre-2012 installations:

This article introduces a new method using a dynamic cursor.

The 2012 OFFSET-FETCH Method

We will start by looking at the best-performing implementation, created by Peter Larsson. It uses the SQL Server 2012 OFFSET extension to the ORDER BY clause to efficiently locate the one or two middle rows needed to compute the median.

OFFSET Single Median

Aaron's first article tested computing a single median over a ten million row table:

CREATE TABLE dbo.obj

(

id integer NOT NULL IDENTITY(1,1),

val integer NOT NULL

);

INSERT dbo.obj WITH (TABLOCKX)

(val)

SELECT TOP (10000000)

AO.[object_id]

FROM sys.all_columns AS AC

CROSS JOIN sys.all_objects AS AO

CROSS JOIN sys.all_objects AS AO2

WHERE AO.[object_id] > 0

ORDER BY

AC.[object_id];

CREATE UNIQUE CLUSTERED INDEX cx

ON dbo.obj(val, id);

Peter Larsson's solution using the OFFSET extension is:

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT

Median = AVG(1.0 * SQ1.val)

FROM

(

SELECT O.val

FROM dbo.obj AS O

ORDER BY O.val

OFFSET (@Count - 1) / 2 ROWS

FETCH NEXT 1 + (1 - @Count % 2) ROWS ONLY

) AS SQ1;

SELECT Peso = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

The code above hard-codes the result of counting the rows in the table. All tested methods for computing the median need this count in order to calculate the median row numbers, so it is a constant cost. Leaving the row-counting operation out of the timing avoids one possible source of variation.

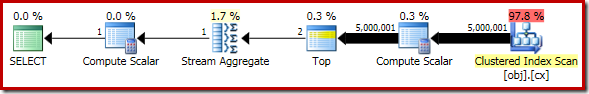

The execution plan for the OFFSET solution is shown below:

The Top operator quickly skips over the unnecessary rows, passing just the one or two rows needed to compute the median on to the Stream Aggregate. When run with a warm cache and execution plan collection tuned off, this query runs for 910 ms on average on my laptop. This is a machine with an Intel i7 740QM processor running at 1.73 GHz with Turbo disabled (for consistency).

OFFSET Grouped Median

Aaron's second article tested the performance of calculating a median per group, using a million row Sales table with ten thousand entries for each of one hundred sales people:

CREATE TABLE dbo.Sales

(

SalesPerson integer NOT NULL,

Amount integer NOT NULL

);

WITH X AS

(

SELECT TOP (100)

V.number

FROM master.dbo.spt_values AS V

GROUP BY

V.number

)

INSERT dbo.Sales WITH (TABLOCKX)

(

SalesPerson,

Amount

)

SELECT

X.number,

ABS(CHECKSUM(NEWID())) % 99

FROM X

CROSS JOIN X AS X2

CROSS JOIN X AS X3;

CREATE CLUSTERED INDEX cx

ON dbo.Sales

(SalesPerson, Amount);

Again, the best-performing solution uses OFFSET:

DECLARE @s datetime2 = SYSUTCDATETIME();

DECLARE @Result AS table

(

SalesPerson integer PRIMARY KEY,

Median float NOT NULL

);

INSERT @Result

SELECT d.SalesPerson, w.Median

FROM

(

SELECT SalesPerson, COUNT(*) AS y

FROM dbo.Sales

GROUP BY SalesPerson

) AS d

CROSS APPLY

(

SELECT AVG(0E + Amount)

FROM

(

SELECT z.Amount

FROM dbo.Sales AS z WITH (PAGLOCK)

WHERE z.SalesPerson = d.SalesPerson

ORDER BY z.Amount

OFFSET (d.y - 1) / 2 ROWS

FETCH NEXT 2 - d.y % 2 ROWS ONLY

) AS f

) AS w(Median);

SELECT Peso = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());

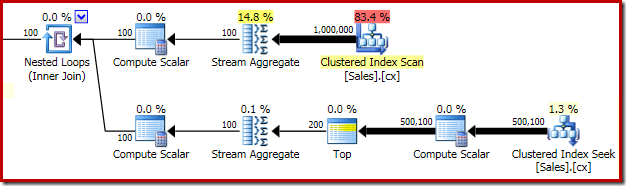

The important part of the execution plan is shown below:

The top row of the plan is concerned with finding the group row count for each sales person. The lower row uses the same plan elements seen for the single-group median solution to compute the median for each sales person. When run with a warm cache and execution plans turned off, this query executes in 320 ms on average on my laptop.

Using a Dynamic Cursor

It might seem crazy to even think about using a cursor to calculate the median. Transact SQL cursors have a (mostly well-deserved) reputation for being slow and inefficient. It is also often thought that dynamic cursors are the worst type of cursor. These points are valid in some circumstances, but not always.

Transact SQL cursors are limited to processing a single row at a time, so they can indeed be slow if many rows need to be fetched and processed. That is not the case for the median calculation though: all we need to do is locate and fetch the one or two middle values efficiently. A dynamic cursor is very suitable for this task as we shall see.

Single Median Dynamic Cursor

The dynamic cursor solution for a single median calculation consists of the following steps:

- Create a dynamic scrollable cursor over the ordered list of items.

- Calculate the position of the first median row.

- Reposition the cursor using

FETCH RELATIVE. - Decide if a second row is needed to compute the median.

- If not, return the single median value immediately.

- Otherwise, fetch the second value using

FETCH NEXT. - Compute the average of the two values and return.

Notice how closely that list reflects the simple procedure for finding the median given at the start of this article. A complete Transact SQL code implementation is shown below:

-- Dynamic cursor

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE

@RowCount bigint, -- Total row count

@Row bigint, -- Median row number

@Amount1 integer, -- First amount

@Amount2 integer, -- Second amount

@Median float; -- Calculated median

SET @RowCount = 10000000;

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

DECLARE Median CURSOR

LOCAL

SCROLL

DYNAMIC

READ_ONLY

FOR

SELECT

O.val

FROM dbo.obj AS O

ORDER BY

O.val;

OPEN Median;

-- Calculate the position of the first median row

SET @Row = (@RowCount + 1) / 2;

-- Move to the row

FETCH RELATIVE @Row

FROM Median

INTO @Amount1;

IF @Row = (@RowCount + 2) / 2

BEGIN

-- No second row, median is the single value we have

SET @Median = @Amount1;

END

ELSE

BEGIN

-- Get the second row

FETCH NEXT

FROM Median

INTO @Amount2;

-- Calculate the median value from the two values

SET @Median = (@Amount1 + @Amount2) / 2e0;

END;

SELECT Median = @Median;

SELECT DynamicCur = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

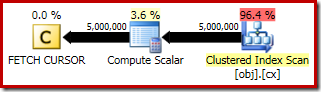

The execution plan for the FETCH RELATIVE statement shows the dynamic cursor efficiently repositioning to the first row required for the median calculation:

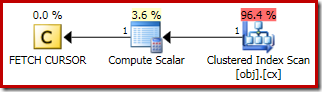

The plan for the FETCH NEXT (only required if there is a second middle row, as in these tests) is a single row fetch from the saved position of the cursor:

The advantages of using a dynamic cursor here are:

- It avoids traversing the whole set (reading stops after the median rows are found); and

- No temporary copy of the data is made in tempdb, as it would be for a static or keyset cursor.

Note we cannot specify a FAST_FORWARD cursor here (leaving the choice of a static-like or dynamic-like plan to the optimizer) because the cursor needs to be scrollable to support FETCH RELATIVE. Dynamic is the optimal here choice anyway.

When run with a warm cache and execution plan collection tuned off, this query runs for 930 ms on average on my test machine. This is a little slower than the 910 ms for the OFFSET solution, but the dynamic cursor is significantly faster than the other methods Aaron and Rob tested, and it does not require SQL Server 2012 (or later).

I am not going to repeat testing the other pre-2012 methods here, but as an example of the size of the performance gap, the following row-numbering solution takes 1550 ms on average (70% slower):

DECLARE @Start datetime2 = SYSUTCDATETIME();

DECLARE @Count bigint = 10000000

--(

-- SELECT COUNT_BIG(*)

-- FROM dbo.obj AS O

--);

SELECT AVG(1.0 * SQ1.val) FROM

(

SELECT

O.val,

rn = ROW_NUMBER() OVER (

ORDER BY O.val)

FROM dbo.obj AS O WITH (PAGLOCK)

) AS SQ1

WHERE

SQ1.rn BETWEEN (@Count + 1)/2 AND (@Count + 2)/2;

SELECT RowNumber = DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

Grouped Median Dynamic Cursor Test

It is simple to extend the single median dynamic cursor solution to compute grouped medians. For the sake of consistency, I am going to use nested cursors (yes, really):

- Open a static cursor over the sales people and row counts.

- Compute the median for each person using a new dynamic cursor each time.

- Save each result to a table variable as we go.

The code is shown below:

-- Timing

DECLARE @s datetime2 = SYSUTCDATETIME();

-- Holds results

DECLARE @Result AS table

(

SalesPerson integer PRIMARY KEY,

Median float NOT NULL

);

-- Variables

DECLARE

@SalesPerson integer, -- Current sales person

@RowCount bigint, -- Current row count

@Row bigint, -- Median row number

@Amount1 float, -- First amount

@Amount2 float, -- Second amount

@Median float; -- Calculated median

-- Row counts per sales person

DECLARE SalesPersonCounts

CURSOR

LOCAL

FORWARD_ONLY

STATIC

READ_ONLY

FOR

SELECT

SalesPerson,

COUNT_BIG(*)

FROM dbo.Sales

GROUP BY SalesPerson

ORDER BY SalesPerson;

OPEN SalesPersonCounts;

-- First person

FETCH NEXT

FROM SalesPersonCounts

INTO @SalesPerson, @RowCount;

WHILE @@FETCH_STATUS = 0

BEGIN

-- Records for the current person

-- Note dynamic cursor

DECLARE Person CURSOR

LOCAL

SCROLL

DYNAMIC

READ_ONLY

FOR

SELECT

S.Amount

FROM dbo.Sales AS S

WHERE

S.SalesPerson = @SalesPerson

ORDER BY

S.Amount;

OPEN Person;

-- Calculate median row 1

SET @Row = (@RowCount + 1) / 2;

-- Move to median row 1

FETCH RELATIVE @Row

FROM Person

INTO @Amount1;

IF @Row = (@RowCount + 2) / 2

BEGIN

-- No second row, median is the single value

SET @Median = @Amount1;

END

ELSE

BEGIN

-- Get the second row

FETCH NEXT

FROM Person

INTO @Amount2;

-- Calculate the median value

SET @Median = (@Amount1 + @Amount2) / 2e0;

END;

-- Add the result row

INSERT @Result (SalesPerson, Median)

VALUES (@SalesPerson, @Median);

-- Finished with the person cursor

CLOSE Person;

DEALLOCATE Person;

-- Next person

FETCH NEXT

FROM SalesPersonCounts

INTO @SalesPerson, @RowCount;

END;

---- Results

--SELECT

-- R.SalesPerson,

-- R.Median

--FROM @Result AS R;

-- Tidy up

CLOSE SalesPersonCounts;

DEALLOCATE SalesPersonCounts;

-- Show elapsed time

SELECT NestedCur = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());

The outer cursor is deliberately static because all rows in that set will be touched (also, a dynamic cursor is not available due to the grouping operation in the underlying query). There is nothing particularly new or interesting to see in the execution plans this time around.

The interesting thing is the performance. Despite the repeated creation and deallocation of the inner dynamic cursor, this solution performs really well on the test data set. With a warm cache and execution plans turned off, the cursor script completes in 330 ms on average on my test machine. This is again a tiny bit slower than the 320 ms recorded by the OFFSET grouped median, but It beats the other standard solutions listed in Aaron's and Rob's articles by a large margin.

Again, as an example of the performance gap to the other non-2012 methods, the following row-numbering solution runs for 485 ms on average on my test rig (50% worse):

DECLARE @s datetime2 = SYSUTCDATETIME();

DECLARE @Result AS table

(

SalesPerson integer PRIMARY KEY,

Median numeric(38, 6) NOT NULL

);

INSERT @Result

SELECT

S.SalesPerson,

CA.Median

FROM

(

SELECT

SalesPerson,

NumRows = COUNT_BIG(*)

FROM dbo.Sales

GROUP BY SalesPerson

) AS S

CROSS APPLY

(

SELECT AVG(1.0 * SQ1.Amount) FROM

(

SELECT

S2.Amount,

rn = ROW_NUMBER() OVER (

ORDER BY S2.Amount)

FROM dbo.Sales AS S2 WITH (PAGLOCK)

WHERE

S2.SalesPerson = S.SalesPerson

) AS SQ1

WHERE

SQ1.rn BETWEEN (S.NumRows + 1)/2 AND (S.NumRows + 2)/2

) AS CA (Median);

SELECT RowNumber = DATEDIFF(MILLISECOND, @s, SYSUTCDATETIME());

Results Summary

In the single median test the dynamic cursor ran for 930 ms versus 910 ms for the OFFSET method.

In the grouped median test, the nested cursor ran for 330 ms versus 320 ms for OFFSET.

In both cases, the cursor method was significantly faster than the other non-OFFSET methods. If you need to calculate a single or grouped median on a pre-2012 instance, a dynamic cursor or nested cursor really could be the optimal choice.

Cold Cache Performance

Some of you may be wondering about cold cache performance. Running the following before each test:

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

These are the results for the single median test:

OFFSET method: 940 ms

Dynamic cursor: 955 ms

For the grouped median:

OFFSET method: 380 ms

Nested cursors: 385 ms

Final Thoughts

The dynamic cursor solutions really are significantly faster than the non-OFFSET methods for both single and grouped medians, at least with these sample data sets. I deliberately chose to reuse Aaron's test data so the data sets were not intentionally skewed toward the dynamic cursor. There might be other data distributions for which the dynamic cursor is not a good option. Nevertheless, it does show that there are still times when a cursor can be a fast and efficient solution to the right sort of problem. Even dynamic and nested cursors.

Eagle-eyed readers may have noticed the PAGLOCK hint in the OFFSET grouped median test. This is required for best performance, for reasons I will cover in my next article. Without it, the 2012 solution actually loses to the nested cursor by a decent margin (590ms versus 330ms).