Lock Lifetimes

The examples so far in this series (part 1 | part 2) have all used repeatable read isolation to acquire shared locks on qualifying rows and hold them to the end of the transaction. This was convenient for the demos but repeatable read isolation isn’t common in everyday use.

Locking optimizations

When SQL Server reads data under locking read committed isolation (the default for non-cloud offerings) and chooses row-level locking granularity, it normally only locks one row at a time. The engine processes the row through parent operators before releasing the shared lock prior to locking and reading the next row.

When the engine chooses page-level locking granularity, it takes and holds a shared page lock while processing rows on the current page and then releases the lock right before acquiring a lock on the next page.

In general, SQL Server is careful to take and hold locks only when needed and only long enough to guarantee correct results, taking account of the current isolation level. When locking at row granularity, the engine can skip row locks entirely when it’s safe to do.

Changing data

From a lock escalation perspective, we’re more concerned with the times locks need to be held longer than usual for locking read committed.

This is common when identifying rows to update (or delete) when a blocking operator appears in the execution plan. Rows qualifying for the update must continue to qualify until the change is performed and committed.

In this context, a blocking operator is any operator potentially consuming more than one row before producing an output row. It need not be fully blocking (reading its entire input before producing any output).

There’s no problem with only locking one row at a time if any changes needed are always applied before processing the next row. This is the case for a pipelined (or streaming) execution plan (one without blocking operators).

When a blocking operator is present (for Halloween protection or any other reason), this scheme breaks down because more than one row can be read before any changes are made.

Releasing locks after each row would allow another transaction to change a row we’ve decided to update, allowing lost updates and incorrect results. For an example, see Craig Freedman’s article Read Committed and Updates on the Microsoft documentation site.

Lock classes

SQL Server solves this problem using lock classes.

A lock class is a container for locks needing a lifetime extension within the current statement. Only locks needing to be held longer than the default are added to the lock class. Locks associated with a lock class are released in bulk at the end of the statement but may be released earlier in some circumstances, as I’ll describe later on. The maximum life extension granted by a lock class is the duration of the current statement.

This is different from using an isolation level like repeatable read, which holds shared locks for the duration of the current transaction. Using lock classes is better for resource usage, concurrency, and performance than internally escalating to repeatable read would be.

Lock classes are an internal implementation detail and not visible in regular execution plans.

Reading data

Lock classes may be needed when reading data. This can happen for several reasons when the server needs to ensure more than one row at a time remains unchanged for longer than normal during statement execution. This typically requires a blocking operator (as previously defined) in the plan.

Microsoft’s Craig Freedman gave some examples in blog posts now hosted on the Microsoft documentation site:

Lock classes are needed for key lookups when a blocking operator is present before the lookup. This includes the hidden operators for nested loops prefetching and batch sorting, as well as visible blocking operators like Sort.

The second of Craig’s posts explains locks are held longer than usual when a blocking operator is used and large objects (LOBs) are accessed by reference as an internal optimization. SQL Server needs to hold locks in this case to ensure the by-reference LOB pointers remain valid.

Let’s look at this in a bit of detail.

Test 5—Lock escalation reading data

Replace the test statement in the procedure from part one with the following:

-- Test statement(s)

SELECT

LT.lob

FROM dbo.LockTest AS LT

WITH (ROWLOCK)

ORDER BY

LT.i

OFFSET 0 ROWS

FETCH FIRST 1 ROW ONLY

OPTION (MAXDOP 1);

-- End test statement(s)

Note the absence of a REPEATABLEREAD isolation level hint. All the tests in this part are run under locking read committed isolation.

Run the test with 6214 rows in a clustered table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

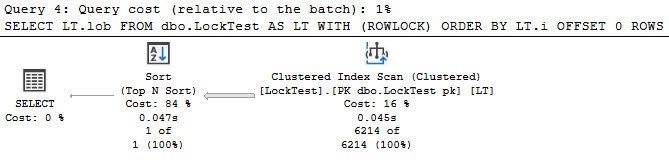

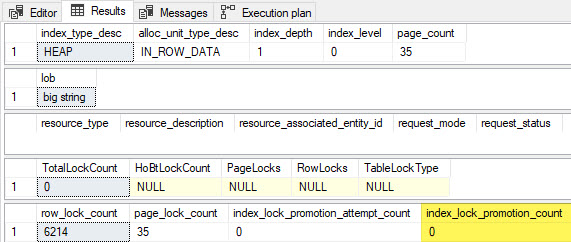

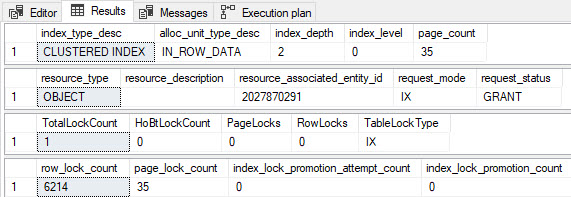

The execution plan reads the LOB column before a blocking sort:

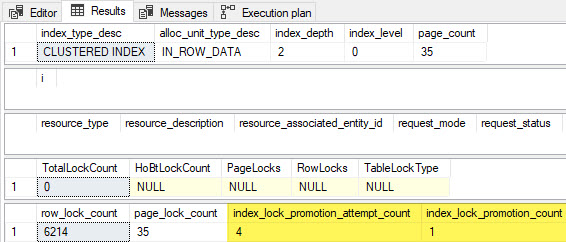

The test results confirm lock escalation occurred:

No locks are shown in the output because lock classes only hold locks for the duration of the current statement at most. The procedure displays locks still held after the test statement completes.

Nevertheless, we can see locks were held longer than usual because four lock promotion attempts were recorded, and lock escalation was successful. The escalated table lock was associated with the lock class and released at the end of the test statement.

Refer back to part one of the series if you need a reminder of how lock ‘promotion attempts’ and escalation work in detail.

Test 6—No escalation on a heap

Let’s run test 5 again, but this time on a heap table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'false';

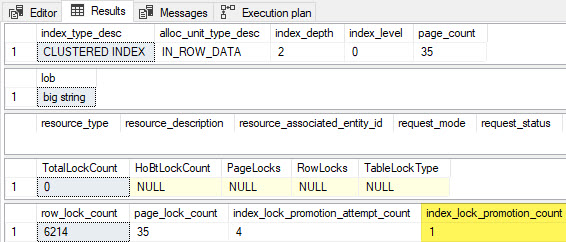

The execution plan is:

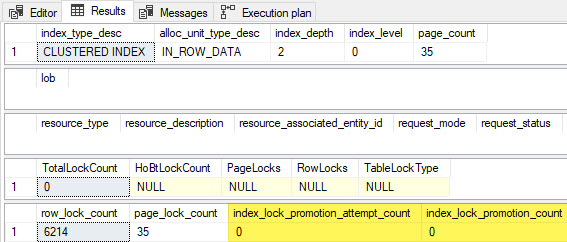

The results show no lock ‘promotion attempts’, and no escalation:

The by-reference internal LOB optimization is only applied to indexes, so locks don’t need to be held longer than usual in this heap table test.

Lock classes are available in this execution plan, but heap tables only use them to hold locks longer (for stability reasons) when the LOB data is off-row. All of the LOB data in this test is held in-row because it’s small enough and the data type is nvarchar(max).

I’m not going to demonstrate it for space reasons, but if you change the column data type to the deprecated ntext type (which defaults to off-row LOB storage) or use sp_tableoption to store the nvarchar(max) data off-row, locks will be held for longer.

You would need to increase the number of rows in the test to 6418 to see lock escalation due to the lock class usage (at 6250 held locks). The increase to 6418 rows compensates for the smaller number of in-row pages when LOB data is off-row. Fewer intent-share page locks mean we need a few more row locks to reach the target.

I mention this as a side note to avoid giving the misleading impression lock escalation can’t occur on heap tables because of LOB data. It can, but not in this test.

Let’s now look at another example based on one of Craig’s LOB tests where lock escalation occurs without a blocking operator. I’ll give some additional explanation and show how lock classes are involved.

Test 7—Lock escalation without a blocking operator

Replace the test statement with:

-- Test statement(s)

SELECT

LT.i

FROM dbo.LockTest AS LT

WITH (ROWLOCK)

WHERE

LT.lob = N'banana'

OPTION (MAXDOP 1);

-- End test statement(s)

None of the rows in the table will match the test predicate, but this isn’t important for the test—it merely makes the output smaller and faster.

Run the test with 6214 rows in a clustered table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

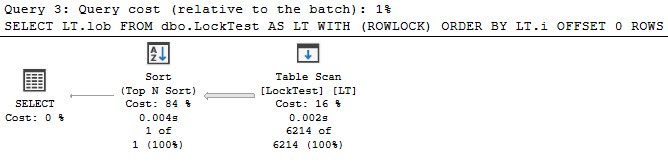

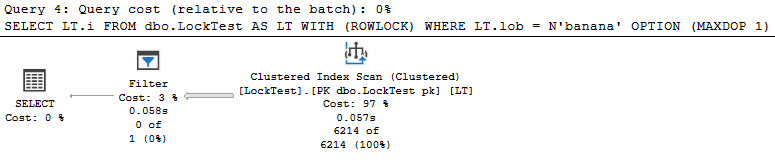

The post-execution plan contains no blocking operators:

The results are:

This test shows lock escalation where none is expected. Craig explains this as SQL Server being “overly conservative” in deciding when to hold locks for longer. This is true, of course, but there’s a bit more to say.

Test 8—No lock escalation with LOB projection

Let’s run test 7 again with a small modification:

-- Test statement(s)

SELECT

LT.lob

FROM dbo.LockTest AS LT

WITH (ROWLOCK)

WHERE

LT.lob = N'banana'

OPTION (MAXDOP 1);

-- End test statement(s)

The only change is to return the lob column instead of column i.

Run the test again with the same number of rows on a clustered table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

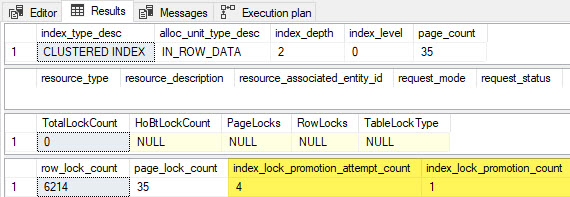

The execution plan is the same as for test 7, but the results are different:

This time we see no lock ‘promotion attempts’ and no lock escalation. As is usual under locking read committed isolation, locks were acquired and released one at a time, and lock classes weren’t needed.

SQL Server can avoid using a lock class (overly conservatively) when we project the LOB column unmodified. Other columns and expressions may be output as well, but it’s essential the LOB column appears undisturbed.

Test 9—Lock escalation with variable assignment

When I say the LOB column must appear in the output unmodified, I mean no changes at all. Even assigning it to a variable is enough to cause lock escalation again:

-- Test statement(s)

DECLARE @lob nvarchar(max);

SELECT

@lob = LT.lob

FROM dbo.LockTest AS LT

WITH (ROWLOCK)

WHERE

LT.lob = N'banana'

OPTION (MAXDOP 1);

-- End test statement(s)

The execution plan is the same once again, but the results show lock escalation has returned:

This is an interesting example of variable assignment changing test results.

To be clear, holding locks for longer than usual is unnecessary in this test, as it was in test 7. The logic SQL Server uses to determine when lock classes and longer locks are needed is imperfect.

Update lock lifetimes

The documentation states that update locks (U) requested via the UPDLOCK table hint are taken and held to the end of the transaction.

This is generally true, but there’s an exception. Locks are taken before the row is tested to see if it qualifies for the change. When the same query plan operator taking the U lock determines the row doesn’t qualify, the U lock is released immediately.

Let’s look at a couple of examples to see when this does and doesn’t apply.

Test 10—Update row locks released early

Change the test statement to one which locates 10 rows, with five qualifying for the update:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK, UPDLOCK)

SET i += 1

WHERE

pk BETWEEN 1 AND 10

AND i <= 5;

-- End test statement(s)

Run the test on a clustered table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

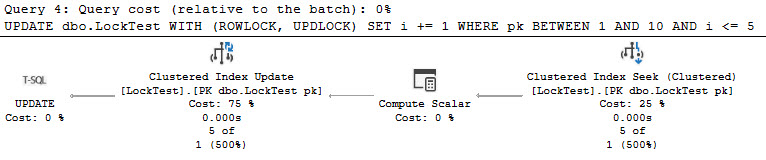

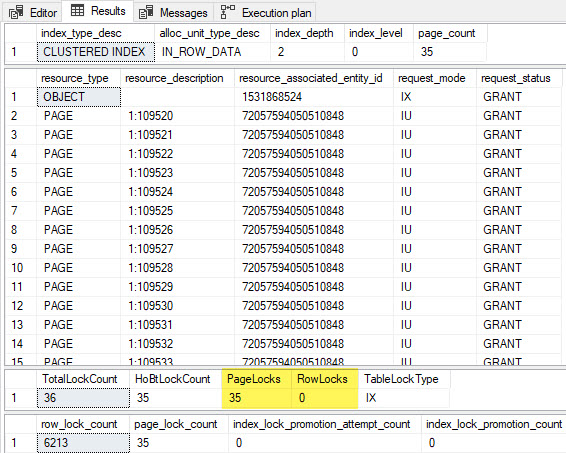

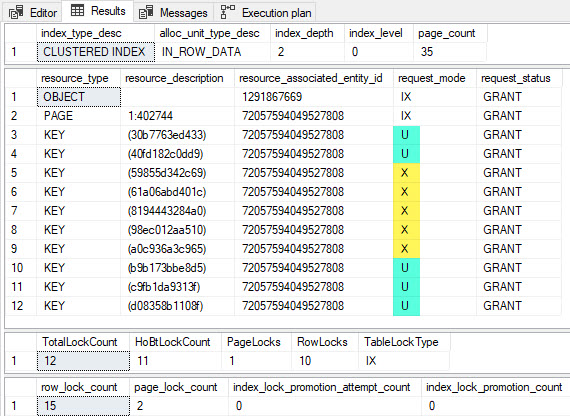

The execution plan is:

The Clustered Index Seek locates the 10 rows matching the pk predicate and applies the unindexed test on column i as a residual predicate.

The results of the test are:

The Clustered Index Seek took 10 row-level U locks but released five of them early (before the end of the transaction) because the residual predicate wasn’t satisfied. This was possible because the same operator was responsible for both acquiring and releasing the locks.

The five remaining U locks were converted to exclusive X locks at the Clustered Index Update operator right before making the required data changes.

Notice the final result set shows 15 row-level locks were taken, 10 U locks at the seek, and five X locks at the update. This is a consequence of the way the sys.dm_db_index_operational_stats DMV counts lock requests.

Test 11—Intent-update locks not released early

Expand the pk range from test 10 to cover the whole table:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK, UPDLOCK)

SET i += 1

WHERE

pk BETWEEN 1 AND 6214

AND i <= 0;

-- End test statement(s)

Run the test on the same table:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

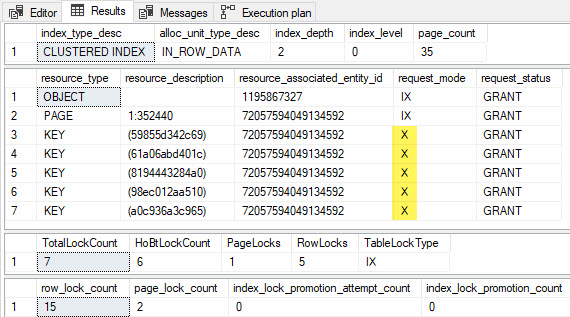

The execution plan is the same, but the results are:

The row-level U locks were released, but the intent-update IU locks remain. On large tables, these unnecessary page-level locks could lead to lock escalation. A similar thing happens with released row-level S locks under repeatable read isolation—the IS page locks remain.

Test 12—Update row locks not released early

Let’s now return to the 10-row update statement from test 10, modifying the residual predicate slightly:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK, UPDLOCK)

SET i += 1

WHERE

pk BETWEEN 1 AND 10

AND i <= CONVERT(sql_variant, 5);

-- End test statement(s)

Run the test again:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

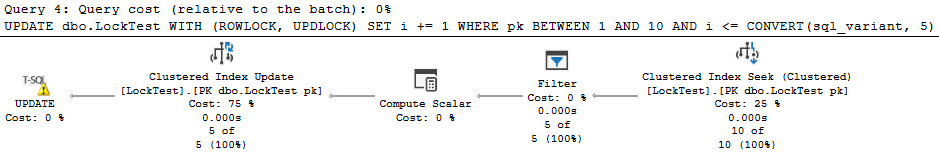

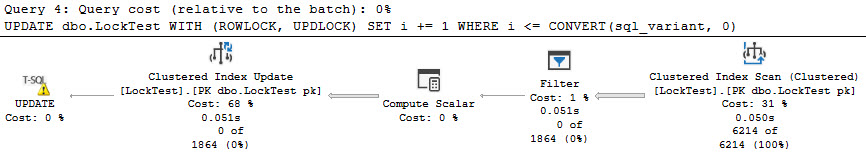

The execution plan has now changed to feature a separate Filter operator because sql_variant tests can’t be pushed down into a seek or scan as a residual predicate:

The residual predicate on column i is no longer tested by the same operator acquiring the U locks. This is reflected in the test results:

The five X locks converted from U locks seen in test 10 are still present, but they’ve been joined by five U locks from rows locked at the Clustered Index Seek that didn’t qualify at the Filter operator. Because the rows were found not to qualify by a different plan operator, the unnecessary U locks weren’t released early.

The test is a little contrived for compactness, but it’s extremely common for plans to apply predicate tests outside the original locking operator, for example after a join, aggregation, or row numbering exercise.

This is something to be aware of if you use UPDLOCK hints.

Test 13—Lock escalation from update locks

It’s easy to see how this could lead to lock escalation, but for completeness, I’ll show a quick example where no rows qualify for the update:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK, UPDLOCK)

SET i += 1

WHERE

i <= CONVERT(sql_variant, 0);

-- End test statement(s)

Run the test as before (switch to a heap table if you like):

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

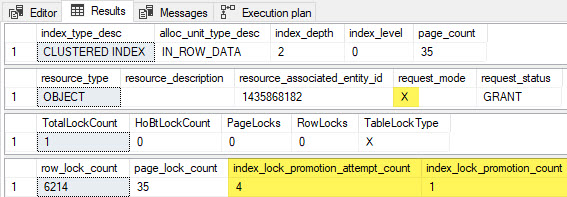

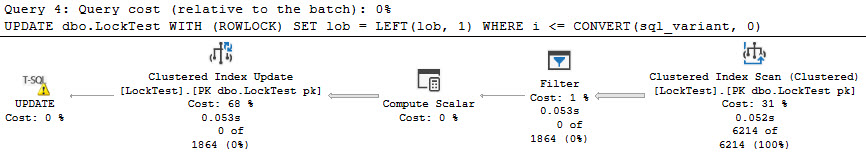

The execution plan again features a separate Filter:

The results are:

The unnecessary U locks couldn’t be released early, resulting in lock escalation to an exclusive table lock (table-level update locks don’t exist). This lock will be held to the end of the transaction, despite our update affecting no rows.

If you run the test again with only 6213 rows in the table, lock escalation will not occur, and you’ll see 6213 row-level U locks, 35 IU page locks, and an IX table lock. All of these locks will be held to the end of the transaction.

Internal update locks

Even when a data-changing statement (not just an update) doesn’t include the UPDLOCK table hint, the engine still acquires some update locks. This is an optimization intended to avoid a common cause of deadlocks when multiple processes read from and write to the same table.

SQL Server takes internal update locks when reading rows needed for a data-changing operation, but only at the plan operator responsible for initially retrieving the row locator (clustering key or RID for heaps).

Internal update locks differ from update locks acquired from the UPDLOCK in three important ways:

- The maximum lifetime of an internal update lock is the current statement. They’re not held to the end of the transaction though they may be converted to an exclusive lock, which will be held until the transaction ends.

- Internal update locks can usually be released by any operator in the plan. The outcomes seen in tests 12 and 13 don’t generally apply to internal update locks.

- When a row-level internal update lock is released, the associated

IUpage lock is also released.

I’m not going to demonstrate all these differences for space reasons and because my test procedure isn’t set up to show the types of locks released before the end of the transaction. You can validate for yourself by running the UPDLOCK tests without the hint while monitoring the locks taken and released.

I qualified point two above because there’s an important exception. Internal update locks can be released by any operator unless the lock is associated with a lock class. In this case, the lock can only be released by the acquiring operator.

Test 14—Internal update locks released with a lock class

The following update requires a lock class because it retrieves a LOB column accessed by reference:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK)

SET lob = LEFT(lob, 1)

WHERE

i <= 0;

-- End test statement(s)

A clustered table is necessary for the by-reference LOB access:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

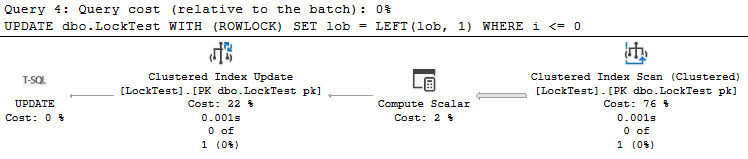

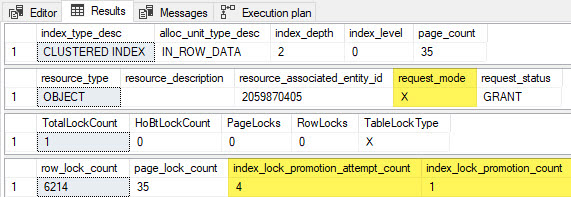

The execution plan shows the residual predicate on column i pushed down into the scan. No rows qualify for the update:

The results show all 6214 internal update row locks were released:

A lock class was needed, but the internal update row locks were released by the same operator acquiring them.

Test 15—Internal update locks held in a lock class

Modifying the residual predicate in test 14 introduces the familiar Filter operator:

---- Test statement(s)

UPDATE dbo.LockTest

WITH (ROWLOCK)

SET lob = LEFT(lob, 1)

WHERE

i <= CONVERT(sql_variant, 0);

-- End test statement(s)

Run the test again:

EXECUTE dbo.RunTest

@Rows = 6214,

@Clustered = 'true';

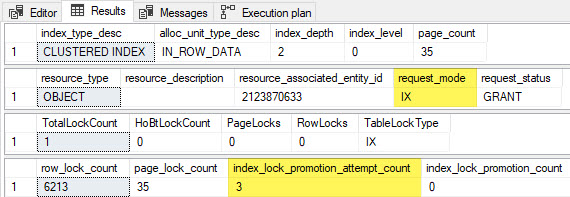

The execution plan is:

The results are:

The internal update row locks associated with a lock class couldn’t be released this time because they were acquired at the scan and only found not to qualify at the filter. This lead to a lock escalation, although no rows were updated.

The escalated table X lock couldn’t be released at the end of the statement because exclusive locks are always held to the end of the transaction.

Without lock escalation, the internal update locks are held to the end of the statement, then released in bulk by the lock class. You can see this by running the test again with 6213 rows in the clustered table:

The results in this case are:

Notice there were three lock ‘promotion attempts’ confirming the update locks were held for the duration of the statement. As usual, no escalation occurred because the HoBt only had 4998 locks when the check at 5000 held locks was made.

Monitoring

You can monitor lock escalation with Profiler, an extended event, or undocumented trace flag 611 (with 3604 for output to the SSMS messages pane).

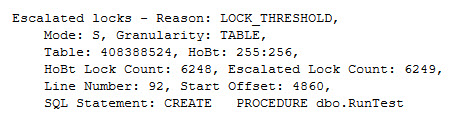

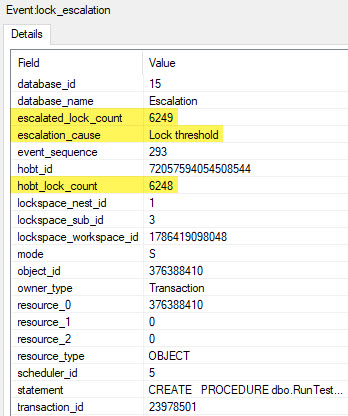

All methods provide the reason for escalation (lock threshold or memory usage), the number of locks escalated (including the current lock), and the number of locks held by the HoBt at the time (not including the current lock).

Unfortunately, there’s no way to observe the lock manager’s held lock count without a debugger.

Use of the by-reference LOB access optimization can be monitored with the Access Methods performance counter object.

End notes

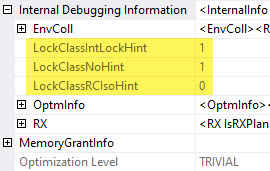

There’s no way to observe lock classes in regular execution plans. You can see they were used at the root node of a verbose showplan (enabled with undocumented trace flag 8666). The verbose plan only shows lock class availability, not where and when they were used (or not) by particular plan operators. I didn’t include this in the tests because it makes them less repeatable.

An example screenshot from test 15 is shown below:

You may see extra lock escalations for some of the tests when verbose showplan is enabled because the lock manager counts all locks, including metadata and statistics locks acquired during showplan production. An extra lock or two can be enough to trigger an escalation check when trying to demo edge cases. Many of these locks are taken before the tests start, but they don’t contribute to the held lock count since they’re released.

The test procedure includes extra statements to prevent plan caching in another attempt to make the tests more repeatable. With caching, an extra intent-share object lock can be acquired on the dropped test table during the schema-changed recompilation process.

With a partitioned test table and lock escalation set to AUTO, an additional IS lock is acquired on the partition. This is counted by the lock manager as a held lock but doesn’t count for the per-HoBt counters. The net effect: escalation occurs with one less row in the test table. At 5000 held locks, the HoBt counter is 4997 (omitting the table, HoBt, and current locks). The next test at 6250 held locks is reached one row earlier due to the extra HoBt lock. Escalation occurs with 6247 locks on the HoBt instead of 6248.

I hope this series has given you more insight into the complexities of locking in the SQL Server engine.