As soon as I saw the SQL 2016 feature AT TIME ZONE, which I wrote about here at sqlperformance.com a few months ago, I remembered a report that needed this feature. This post forms a case study on how I saw it work out, which fits into this month’s T-SQL Tuesday hosted by Matt Gordon (@sqlatspeed). (It’s the 87th T-SQL Tuesday, and I really need to write more blog posts, particularly about things that are not prompted by T-SQL Tuesdays.)

As soon as I saw the SQL 2016 feature AT TIME ZONE, which I wrote about here at sqlperformance.com a few months ago, I remembered a report that needed this feature. This post forms a case study on how I saw it work out, which fits into this month’s T-SQL Tuesday hosted by Matt Gordon (@sqlatspeed). (It’s the 87th T-SQL Tuesday, and I really need to write more blog posts, particularly about things that are not prompted by T-SQL Tuesdays.)

The situation was this, and this may sound familiar if you read that earlier post of mine.

Long before LobsterPot Solutions existed, I needed to produce a report on incidents that occurred, and in particular, show the number of times that responses were made within SLA and the number of times that the SLA was missed. For example, a Sev2 incident that occurred at 4:30pm on a weekday would need to have a response within 1 hour, while a Sev2 incident that occurred at 5:30pm on a weekday would need to have a response within 3 hours. Or something like that – I forget the numbers involved, but I do remember that the helpdesk employees would breathe a sigh of relief when 5pm would roll around, because they wouldn’t need to respond to things so quickly. The 15-minute Sev1 alerts would suddenly stretch out to an hour, and the urgency would disappear.

But a problem would come whenever daylight saving time started or ended.

I’m sure if you’ve dealt with databases, you’ll know the pain that daylight saving time is. Supposedly Ben Franklin came up with the idea – and for that he should be struck by lightning or something. Western Australia tried it for a few years recently, and sensibly abandoned it. And the general consensus is to store date/time data is to do so in UTC.

If you don’t store data in UTC, you run the risk of having an event start at 2:45am and end at 2:15am after the clocks have gone back. Or having an SLA incident that starts at 1:59am just before the clocks go forward. Now, these times are fine if you store the time zone that they’re in, but in UTC time just works as expected.

…except for reporting.

Because how am I supposed to know whether a particular date was before daylight saving started or after? I might know that an incident occurred at 6:30am in UTC, but is that 4:30pm in Melbourne or 5:30pm? Obviously I can consider which month it’s in, because I know that Melbourne observes daylight saving time from the first Sunday in October to the first Sunday in April, but then if there are customers in Brisbane, and Auckland, and Los Angeles, and Phoenix, and various places within Indiana, things get a lot more complicated.

To get around this, there were very few time zones in which SLAs could be defined for that company. It was just considered too hard to cater for more than that. A report could then be customised to say “Consider that on a particular date the time zone changed from X to Y”. It felt messy, but it worked. There was no need for anything to look up the Windows registry, and it basically just worked.

But these days, I would’ve done it differently.

Now, I would’ve used AT TIME ZONE.

You see, now I could store the customer’s time zone information as a property of the customer. I could then store each incident time in UTC, allowing me to do the necessary calculations around the number of minutes to respond, resolve, and so on, while being able to report using the customer’s local time. Assuming my IncidentTime had actually been stored using datetime, rather than datetimeoffset, it would simply be a matter of using code like:

i.IncidentTime AT TIME ZONE 'UTC' AT TIME ZONE c.tz

…which first puts the timezoneless i.IncidentTime into UTC, before converting it to the time zone of the customer. And this time zone can be 'AUS Eastern Standard Time', or 'Mauritius Standard Time', or whatever. And the SQL Engine is left to figure out what offset to use for that.

At this point, I can very easily create a report that lists each incident across a time period, and show it in the customer’s local time zone. I can convert the value to the time data type, and then report against how many incidents were within business hours or not.

And all of this is very useful, but what about the indexing to handle this nicely? After all, AT TIME ZONE is a function. But changing the time zone doesn’t change the order in which the incidents actually occurred, so it should be okay.

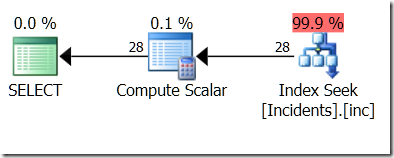

To test this, I created a table called dbo.Incidents, and indexed the IncidentTime column. Then I ran this query, and confirmed that an index seek was used.

select i.IncidentTime, itz.LocalTime

from dbo.Incidents i

cross apply (select i.IncidentTime AT TIME ZONE 'UTC'

AT TIME ZONE 'Cen. Australia Standard Time') itz (LocalTime)

where i.IncidentTime >= '20170201'

and i.IncidentTime < '20170301';

But I want to filter on itz.LocalTime…

select i.IncidentTime, itz.LocalTime

from dbo.Incidents i

cross apply (select i.IncidentTime AT TIME ZONE 'UTC'

AT TIME ZONE 'Cen. Australia Standard Time') itz (LocalTime)

where itz.LocalTime >= '20170201'

and itz.LocalTime < '20170301';

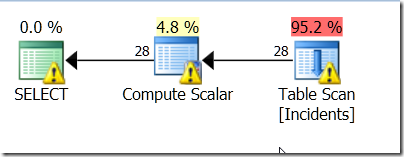

No luck. It didn’t like the index.

The warnings are because it’s having to look through a lot more than the data that I’m interested in.

I even tried using a table with a datetimeoffset field. After all, AT TIME ZONE can change the order when moving from datetime to datetimeoffset, even though the order isn’t changed when moving from datetimeoffset to another datetimeoffset. I even tried making sure that the thing I was comparing it to was in the time zone.

select i.IncidentTime, itz.LocalTime

from dbo.IncidentsOffset i

cross apply (select i.IncidentTime AT TIME ZONE 'Cen. Australia Standard Time') itz (LocalTime)

where itz.LocalTime >= cast('20170201' as datetimeoffset)

AT TIME ZONE 'Cen. Australia Standard Time'

and itz.LocalTime < cast('20170301' as datetimeoffset)

AT TIME ZONE 'Cen. Australia Standard Time';

Still no luck!

So now I had two options. One was to store the converted version alongside the UTC version, and index that. I think that’s a pain. It’s certainly a lot more of a database change than I’d like.

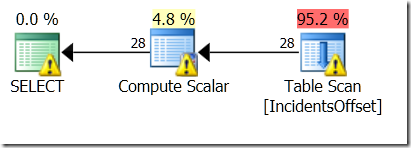

The other option was to use what I call helper predicates. These are the kind of thing that you see when you use LIKE. They’re predicates that can be used as Seek Predicates, but not exactly what you’re asking for.

I figure that no matter what time zone I’m interested in, the IncidentTimes that I care about are within a very specific range. That range is no more than a day bigger than my preferred range, on either side.

So I’ll include two extra predicates.

select i.IncidentTime, itz.LocalTime

from dbo.IncidentsOffset i

cross apply (select i.IncidentTime

AT TIME ZONE 'Cen. Australia Standard Time') itz (LocalTime)

where itz.LocalTime >= cast('20170201' as datetimeoffset)

AT TIME ZONE 'Cen. Australia Standard Time'

and itz.LocalTime < cast('20170301' as datetimeoffset)

AT TIME ZONE 'Cen. Australia Standard Time

and i.IncidentTime >= dateadd(day,-1,'20170201')

and i.IncidentTime < dateadd(day, 1,'20170301');

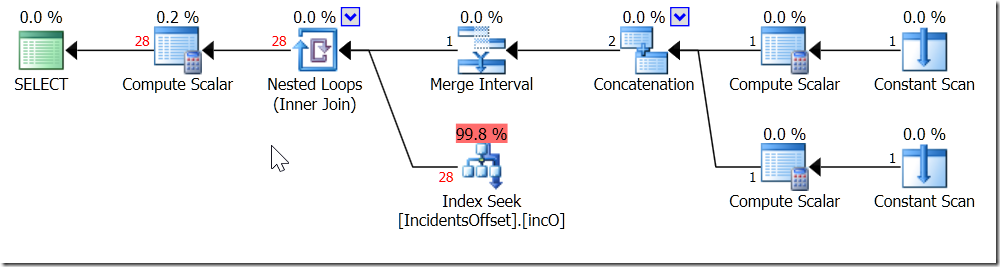

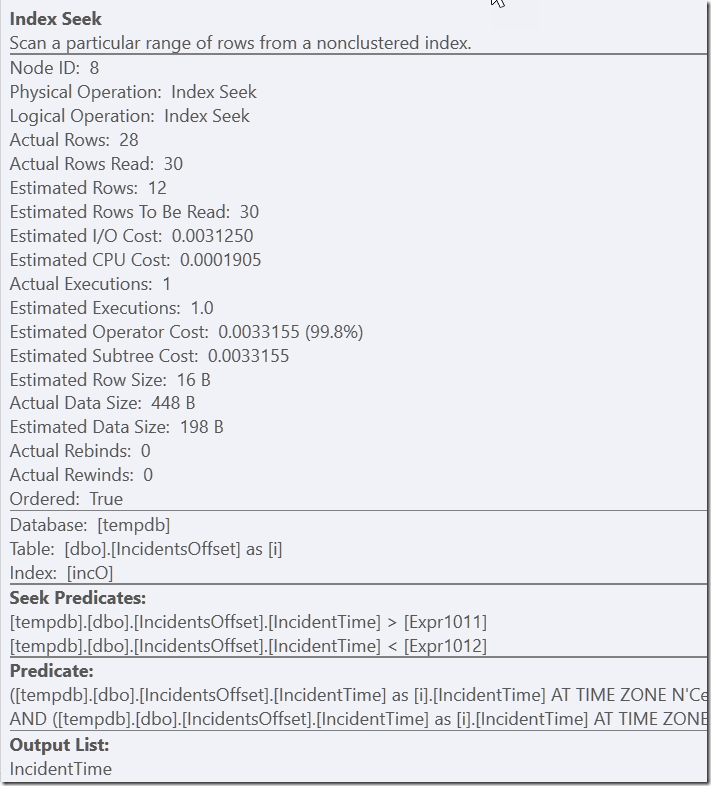

Now, my index can be used. It’s having to look through 30 rows before filtering it to the 28 that it cares about – but that’s a lot better than scanning the whole thing.

And you know – this is the kind of behaviour that I see all the time from regular queries, like when I do CAST(myDateTimeColumns AS DATE) = @SomeDate, or use LIKE.

I’m okay with this. AT TIME ZONE is great for letting me handle my time zone conversions, and by considering what’s going on with my queries, I don’t need to sacrifice performance either.

Funny a report developer for dynamics AX was asking me about a similar issue this afternoon. If his date issue cannot be solved via application side changes, this new functionality might be the answer for him. Thanks for this article.