Windows Server 2012 introduced a new feature called Scale-Out File Server (SOFS). Historically, SOFS has mainly been used as a shared storage tier (as an alternative to a shared SAN) for Hyper-V virtualization hosts. It is also useful for SQL Server 2012 and newer, which can store both system and user database files on SMB 3.0 file shares for both stand-alone and clustered instances of SQL Server. SOFS consists of a set of clustered file servers that form a transparent failover file server cluster. The database server connects to the SOFS using SMB 3.0 networking, which requires Windows Server 2012 or newer on both the file servers and the database servers. You also need one or more JBOD enclosures, each connected to every SOFS cluster node with SAS cables. To use SMB Direct, you need network adapters with Remote Direct Memory Access (RDMA) capability on both sides of the connection. RDMA network adapters come in three types: Internet Wide Area RDMA Protocol (iWARP), InfiniBand, and RDMA over Converged Ethernet (RoCE).
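The two host-side prerequisites above are Windows Server 2012 or newer for SMB 3.0 and an RDMA-capable adapter for SMB Direct. Here is a minimal Python sketch that checks an inventory of hosts against them. The `Host` record, its fields, and the integer OS-version encoding are hypothetical assumptions made for this example. A real inventory would come from your own tooling.

```python
from dataclasses import dataclass
from typing import List, Optional

# Adapter families named in the text that support RDMA (and therefore SMB Direct).
RDMA_TYPES = {"iWARP", "InfiniBand", "RoCE"}

@dataclass
class Host:
    """Hypothetical inventory record for one file server or database server."""
    name: str
    role: str                 # "file server" or "database server" (informational only)
    os_version: int           # assumed encoding: 2012 for Windows Server 2012/2012 R2, 2016, ...
    rdma_type: Optional[str]  # one of RDMA_TYPES, or None for a NIC without RDMA

def check_sofs_hosts(hosts: List[Host]) -> List[str]:
    """Return human-readable problems; an empty list means no problems were found."""
    problems = []
    for h in hosts:
        # SMB 3.0 needs Windows Server 2012 or newer on both ends of the connection.
        if h.os_version < 2012:
            problems.append(f"{h.name}: SMB 3.0 needs Windows Server 2012 or newer")
        # Without an RDMA-capable adapter, SMB Direct cannot be used on this host.
        if h.rdma_type not in RDMA_TYPES:
            problems.append(f"{h.name}: no RDMA adapter, so SMB Direct is unavailable")
    return problems

# Example: one compliant file server and one older database server without RDMA.
inventory = [
    Host("fs1", "file server", 2012, "RoCE"),
    Host("sql1", "database server", 2008, None),
]
for problem in check_sofs_hosts(inventory):
    print(problem)
```

Both problems reported for `sql1` would need fixing before that server could use the file share over SMB Direct.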

Storage Spaces is used to aggregate the SAS disks in the JBOD enclosure(s). Virtual disks are created from the aggregated SAS disks. This provides resiliency against disk or enclosure failure and, in Windows Server 2012 R2, enables SSD/HDD tiered storage and write-back caching. In Windows Server 2012 and 2012 R2, a highly available storage system built on Storage Spaces requires every disk to be physically connected to every storage node. To make that possible, the disks must be SAS disks installed in an external JBOD chassis, with each storage node physically connected to that chassis.
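The shared-JBOD rule in Windows Server 2012 and 2012 R2 has two parts: every disk must be SAS, and every disk must be physically reachable from every storage node. This rule can be expressed as a simple check. The minimal Python sketch below assumes a hypothetical inventory format, made up of a map of disk ID to bus type and a map of storage node to the set of disk IDs that node can see. It only illustrates the rule and does not query real hardware.

```python
from typing import Dict, List, Set

def check_shared_jbod(disk_bus: Dict[str, str],
                      visible_disks: Dict[str, Set[str]]) -> List[str]:
    """
    disk_bus:      disk ID -> bus type, e.g. "SAS", "SATA", "NVMe" (hypothetical format)
    visible_disks: storage node name -> set of disk IDs that node is cabled to
    Returns problems found under the shared-JBOD rules described in the text.
    """
    problems = []
    for disk, bus in sorted(disk_bus.items()):
        # Only SAS disks can be shared by multiple nodes in a shared JBOD.
        if bus != "SAS":
            problems.append(f"{disk}: {bus} disk; shared JBOD requires SAS")
        # Every storage node must be physically connected to every disk.
        missing = sorted(node for node, disks in visible_disks.items() if disk not in disks)
        if missing:
            problems.append(f"{disk}: not connected to {', '.join(missing)}")
    return problems

# Example: d2 is a SATA disk, and node n2 is not cabled to it.
disks = {"d1": "SAS", "d2": "SATA"}
nodes = {"n1": {"d1", "d2"}, "n2": {"d1"}}
for problem in check_shared_jbod(disks, nodes):
    print(problem)
```

Either problem on its own would rule out `d2` for a Windows Server 2012 R2 shared-JBOD SOFS.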

An example of this type of deployment is shown in Figure 1:

Figure 1: Windows Server 2012 and 2012 R2 Shared JBOD Scale-Out File Server

The two main weaknesses of SOFS are the cost and complexity of the SAS storage tier, and the fact that only SAS HDDs and SSDs are supported (meaning no lower-cost SATA HDDs or SSDs). With SOFS in Windows Server 2012 R2, you also cannot use local internal drives or PCIe storage cards in the individual file server nodes.

Storage Spaces Direct

One of the more exciting new features in Windows Server 2016 is Storage Spaces Direct (S2D). S2D enables organizations to use multiple clustered commodity file server nodes to build highly available, scalable storage systems with local storage, using SATA, SAS, or PCIe NVMe devices. You can use internal drives in each storage node, or direct-attached “Just a Bunch of Disks” (JBOD) enclosures where each JBOD is connected to only a single storage node. This eliminates the shared SAS fabric and its complexity, which Windows Server 2012 R2 Storage Spaces and SOFS required. It also enables less expensive storage devices such as SATA disks.

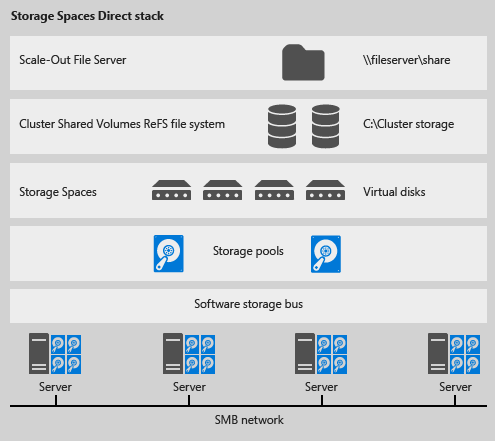

To use S2D in Windows Server 2016 Technical Preview 3, you need at least four clustered file servers. Each can have a mixture of internal drives (SAS or SATA), PCIe flash storage cards, or direct-attached disk devices that are pooled using Storage Spaces. Up to 240 disks can be in a single pool, shared by up to 12 file servers. A Software Storage Bus replaces the SAS layer of a shared SAS JBOD SOFS. It uses SMB 3.1.1 networking with RDMA (SMB Direct) for communication between the S2D cluster nodes. Storage Spaces aggregates the local and DAS disks into a storage pool, from which one or more virtual disks are created. The virtual disks (LUNs) are formatted with the Resilient File System (ReFS) and then converted into Cluster Shared Volumes (CSVs), which makes them active across the entire file server cluster.
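The S2D sizing rules quoted above can be sketched the same way. This minimal Python example hard-codes the figures given in this article: at least 4 and at most 12 nodes, at most 240 disks per pool, and SATA, SAS, or NVMe devices. The article was written against pre-release builds, so those limits are treated as assumptions and kept as module-level constants that you can adjust. The inventory format, which maps each node name to a list of disk bus types, is hypothetical.

```python
from typing import Dict, List

# Limits as quoted in this article for pre-release Windows Server 2016 builds;
# treat them as adjustable assumptions rather than fixed properties of S2D.
MIN_NODES = 4
MAX_NODES = 12
MAX_DISKS_PER_POOL = 240
ELIGIBLE_BUS_TYPES = {"SATA", "SAS", "NVMe"}

def check_s2d_pool(disks_per_node: Dict[str, List[str]]) -> List[str]:
    """
    disks_per_node: node name -> bus types of that node's internal/DAS disks
    Returns problems found against the limits above; empty means none found.
    """
    problems = []
    n = len(disks_per_node)
    if n < MIN_NODES:
        problems.append(f"only {n} nodes; at least {MIN_NODES} required")
    if n > MAX_NODES:
        problems.append(f"{n} nodes; at most {MAX_NODES} supported")
    # All disks from all nodes end up in one pool, so count them together.
    total = sum(len(buses) for buses in disks_per_node.values())
    if total > MAX_DISKS_PER_POOL:
        problems.append(f"{total} disks; pool limit is {MAX_DISKS_PER_POOL}")
    for node, buses in sorted(disks_per_node.items()):
        bad = sorted(set(buses) - ELIGIBLE_BUS_TYPES)
        if bad:
            problems.append(f"{node}: unsupported bus type(s) {', '.join(bad)}")
    return problems

# Example: four nodes, each with ten SATA HDDs and two NVMe devices.
cluster = {f"node{i}": ["SATA"] * 10 + ["NVMe"] * 2 for i in range(1, 5)}
print(check_s2d_pool(cluster) or "no problems found")
```

Unlike the shared-JBOD check, this sketch never asks which node can see which disk. Each node only contributes its own local devices, and the Software Storage Bus makes them available to the cluster.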

The S2D stack is shown in Figure 2:

Figure 2: Storage Spaces Direct (S2D) stack (Image Credit: Microsoft)

This matters for SQL Server database professionals because S2D gives you another high-performance deployment choice for your storage subsystem. It will work with stand-alone SQL Server instances, with traditional failover cluster instances (FCIs, which require shared storage), and with AlwaysOn availability group (AG) replicas.

You need the proper network adapters (not your garden-variety embedded Broadcom Gigabit Ethernet NICs) in both your clustered file servers and your database servers. With them, you will be able to take advantage of SMB Direct and RDMA, so the SMB network can deliver extremely high throughput with very low latency and low host CPU utilization. As a result, the remote file server can resemble local storage from a performance perspective. The new S2D feature will make it easier and less expensive to deploy a Scale-Out File Server cluster that delivers extremely high performance for SQL Server. This will work for bare-metal, non-virtualized SQL Server instances. It will also be a good solution for virtualized SQL Server instances, where the virtualization host can get much better I/O performance than it would from a typical SAN.

For example, suppose you have a 56Gb FDR InfiniBand host channel adapter (HCA) plugged into a PCIe 3.0 x8 slot in your database server (or virtualization host server) and in your file servers. That will give you about 6.5GB/sec of sequential throughput for each connection. I have some more detailed information about sequential throughput speeds and feeds here. Currently, you have to use PowerShell to deploy and manage Storage Spaces Direct. This TechNet article has some good information and examples of how to test S2D in Windows Server 2016 Technical Preview 3.
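Here is a back-of-the-envelope calculation in Python showing where a figure of roughly 6.5GB/sec comes from. It assumes nominal 56 Gb/s FDR InfiniBand signaling with 64b/66b line encoding, and PCIe 3.0 at 8 GT/s per lane with 128b/130b encoding. It ignores packet and protocol overheads, which is why real-world throughput lands somewhat below the computed ceiling.

```python
def usable_gbytes_per_sec(line_rate_gbps: float, payload_bits: int, total_bits: int) -> float:
    """Convert a raw line rate in Gb/s to usable GB/s after line-encoding overhead."""
    return line_rate_gbps * payload_bits / total_bits / 8  # 8 bits per byte

# FDR InfiniBand 4x: nominal 56 Gb/s signaling, 64b/66b encoding (assumed nominal rate)
fdr = usable_gbytes_per_sec(56.0, 64, 66)

# PCIe 3.0 x8: 8 GT/s per lane across 8 lanes, 128b/130b encoding
pcie3_x8 = usable_gbytes_per_sec(8.0 * 8, 128, 130)

# The slower of the two links caps the throughput of one connection.
bottleneck = min(fdr, pcie3_x8)
print(f"FDR InfiniBand link: {fdr:.2f} GB/s")
print(f"PCIe 3.0 x8 slot:    {pcie3_x8:.2f} GB/s")
print(f"Ceiling:             {bottleneck:.2f} GB/s before protocol overhead")
```

Under these assumptions, the FDR link (about 6.79GB/sec) is the limiting factor rather than the PCIe 3.0 x8 slot (about 7.88GB/sec). Once protocol overhead is taken into account, that is consistent with roughly 6.5GB/sec per connection.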

By the time Windows Server 2016 and SQL Server 2016 are GA, we will probably have the new 14nm Intel Xeon E5-2600 v4 "Broadwell-EP" processor. It will have up to 22 physical cores per socket and 55MB of shared L3 cache, along with DDR4-2400 memory support. This new processor family will work with existing server models such as the Dell PowerEdge R730, since it is socket-compatible with the current 22nm "Haswell-EP" family processors. This will give you the best underlying server hardware platform to take full advantage of S2D.

I might be reading too much into this, Glenn, so help me out. But is this a shot across the bow for many storage products that are primarily JBOD, like DROBO and various NAS products? Why would I want to pay a premium for those products, when it seems like S2D now does everything I'm likely to want from within Windows? Thanks, -Kev

Excellent overview, very useful, thank you.

@Glenn, yes, great stuff! This is the best, succinct, most consumable overview I've seen. Well done.

@Kevin, your thinking is absolutely on the right track. S2D has real potential to usurp other NAS implementations, and it's also going to replace a lot of SANs. Sure, some sophisticated features will still require sophisticated SANs for now. But S2D will be seen as a *far* more affordable alternative: a few hundred thousand dollars for an end-to-end, performant, redundant enterprise S2D solution vs. millions of dollars for many SANs.

I've had the opportunity to work a bit already with S2D at a customer lab. Imagine software-defined tiered-storage that works! I wouldn't've believed it if I'd not seen it with my own eyes. And this is just the tip of the iceberg in terms of S2D's potential.

Hello Glenn,

May the S2D implementation and SQL Server reside on the same machine? Or do we need separate physical servers for S2D and for SQL Server (or physical servers as virtualization hosts on which the SQL Server VMs run)?

Another simple question: is S2D supported with Failover Cluster Instances?

Andrea asked the exact same question I had in mind. Would be very useful to know.

It’s a nice article, but there are some limitations with SQL Server 2012 on SOFS with respect to CHECKDB. If you watch the video below from the 19th minute, you will see what I mean:

https://sqlbits.com/Sessions/Event12/Running_SQL_Server_2014_on_a_Scale_Out_File_Server

Any tips for overcoming that problem?