I have been talking about the importance of sequential throughput performance for SQL Server for quite some time. Sequential throughput is critically important for many common operations in SQL Server, including:

- Full database backups and restores

- Index creation and maintenance work

- Initializing replication snapshots and subscriptions

- Initializing AlwaysOn AG replicas

- Initializing database mirrors

- Initializing log-shipping secondary's

- Relational data warehouse query workloads

- Relational data warehouse ETL operations

Despite the importance of sequential throughput for SQL Server, I still see many people who focus only on random I/O performance and latency, while largely ignoring sequential throughput performance. As you are thinking about sequential throughput performance, you need to consider the complete path of the data, from it being at rest on your storage media, to it being consumed by your processor cores.

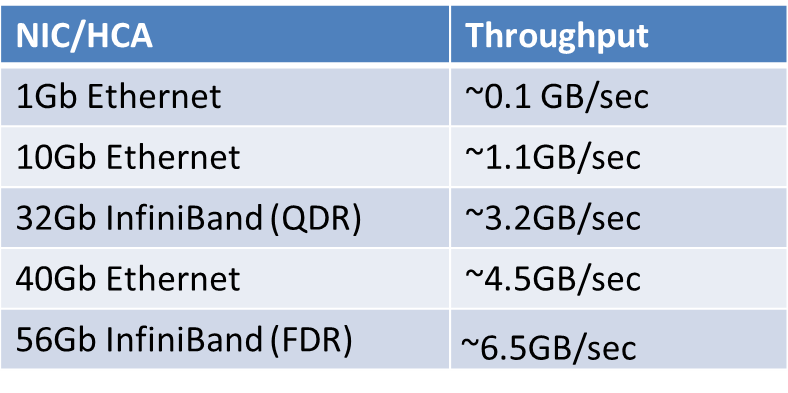

Depending on what type of storage technology you are using, it may be connected to your host server by an Ethernet network interface card (NIC) or InfiniBand host channel adapter (HCA) card. This type of connection is most common for iSCSI SANs and for remote server message block (SMB) 3.0 file shares. Microsoft TechNet has a good reference about this subject, called Improve Performance of a File Server with SMB Direct.

The theoretical sequential throughput of a single NIC/HCA device (per port) is shown in Figure 1:

Figure 1: Theoretical NIC/HCA Throughput

Figure 1: Theoretical NIC/HCA Throughput

Just in case you are wondering what InfiniBand (QDR) or Infiniband (FDR) refer to, the InfiniBand Trade Association maintains an InfiniBand Roadmap that shows the history and planned advances in InfiniBand bandwidth over the next several years. Infiniband has a list of acronyms that describe the relative performance of an InfiniBand link as shown below:

- SDR – Single Data Rate

- DDR – Double Data Rate

- QDR – Quad Data Rate

- FDR – Fourteen Data Rate

- EDR – Enhanced Data Rate

- HDR – High Data Rate

- NDR – Next Data Rate

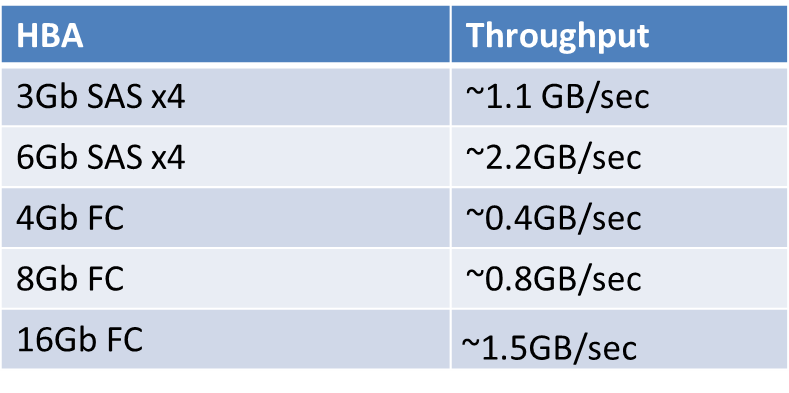

Traditional fiber channel (FC) SANs typically use one or more host bus adapters (HBA) that have one or more ports for optical cables that connect to a FC switch or directly to the FC SAN itself. It is still relatively rare to see 16Gb FC HBAs in widespread use, so most host servers still have either 4Gb or 8Gb FC HBAs.

The theoretical sequential throughput of a single HBA device (per port) is shown in Figure 2:

Figure 2: Theoretical HBA Throughput

Figure 2: Theoretical HBA Throughput

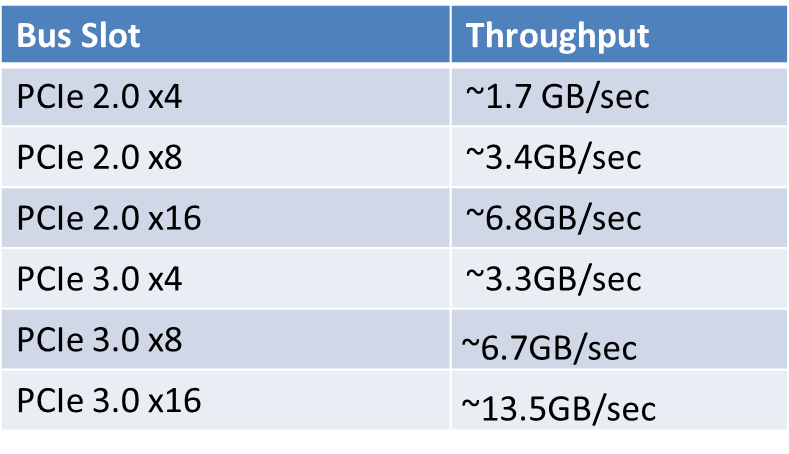

Regardless of what type of interface device or technology you are using, you need to be concerned about what kind of expansion bus slot it is plugged into on your host server. Depending on the age of your server and the type of processor it is using, this can have a huge impact on the total bandwidth that the bus slot can support. Peripheral Component Interconnect Express (PCIe) Gen 3 slots have twice the bandwidth per lane as an older PCIe Gen 2 slot. You can determine how many and what type of expansion slots you have in your host server by looking at the specifications and documentation for your brand and model of server. So far, only Intel Xeon E3 v2, Xeon E3 v3, Xeon E5, Xeon E5 v2, Xeon E5 v3, and Xeon E7 v2 family processors support PCIe Gen 3 slots. Anything older than this will have PCIe Gen 2 slots, or maybe even PCIe Gen 1 slots if it is extremely old (in computer terms).

The theoretical sequential throughput of a single PCIe slot is shown in Figure 3:

Figure 3: Theoretical Expansion Slot Throughput

Figure 3: Theoretical Expansion Slot Throughput

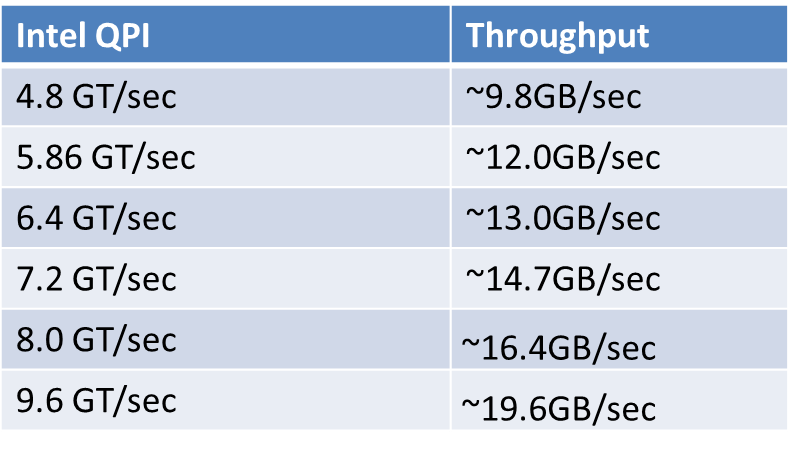

Since the introduction of the Intel Nehalem microarchitecture back in 2008, all 2P and larger Intel server processors have supported Intel QuickPath Interconnect (QPI) for connections between the processors and the memory in a server, giving these newer processors support for Non-Uniform Memory Access (NUMA), which replaced the the older front-side bus (FSB). NUMA improves scalability pretty significantly as the number of physical processors in a system increases, especially when you have four or more physical processors in a host system.

Modern Intel processors have integrated memory controllers that support Intel QPI. When you look at the specifications for a particular Intel processor in the online ARK database, you will be able to see its rated QPI performance in GigaTransfers per second (GT/sec). A GigaTransfer per second is simply one billion operations that transfer data per second.

For example, a modern, high-end Intel Xeon E5-2667 v3 processor (Haswell-EP) has an Intel QPI Speed of 9.6 GT/sec, while an older, low-end Intel Xeon E5503 processor (Nehalem-EP) has an Intel QPI Speed of only 4.8 GT/sec. Even among processors from the same generation microarchitecture, you will see variations in the rated Intel QPI Speed. For example, in the 22nm Haswell-EP family, the low-end Intel Xeon E5-2603 v3 processor is rated at 6.4 GT/sec, while the higher-end Intel Xeon E5-2660 v3 processor is rated at 9.6 GT/sec. Intel Xeon E5, E5 v2 and E5 v3 processors have two QPI links per processor, while Intel E7 and E7 v2 family processors have three QPI links per processor.

The theoretical sequential throughput of a single Intel QPI link is shown in Figure 4:

Figure 4: Theoretical Intel QPI Throughput

Figure 4: Theoretical Intel QPI Throughput

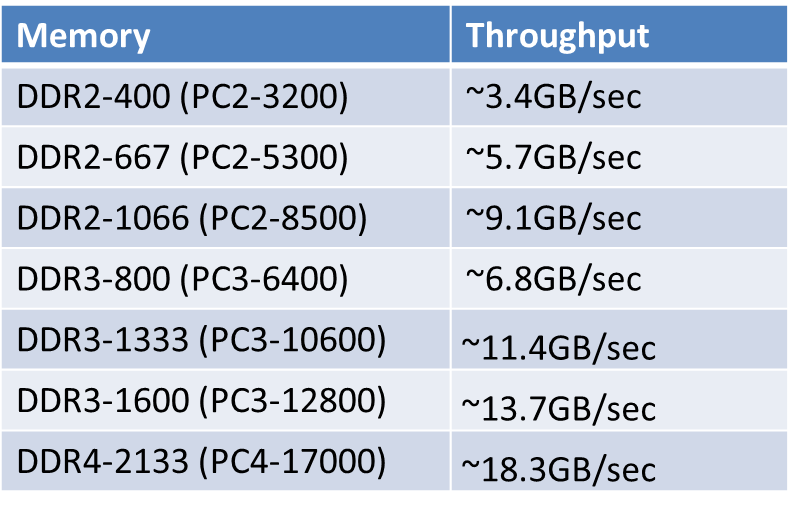

Depending on the age of your host server, the exact processor it is using, and the type, speed and amount of memory that is installed, you will have different amounts of theoretical sequential throughput available from each memory module. All modern Intel processors have integrated memory controllers that control the amount and frequency of the memory that the processor will support. Most recent vintage host servers will use DDR3 memory, while the latest generation Haswell-EP (Intel Xeon E5 v3 family) processors support DDR4 memory. DDR4 memory supports higher speeds, higher throughput, higher memory density, lower power consumption, and improved reliability compared to DDR3 memory.

The theoretical sequential throughput of a single memory module is shown in Figure 5:

Figure 5: Theoretical Memory Module Throughput

Figure 5: Theoretical Memory Module Throughput

As you look at the individual components in an existing or planned new system, you should be on the lookout for mismatched components that can introduce artificial sequential performance bottlenecks into the system. For example, you might have a brand-new server with high-end processors that is crippled by the fact that it is using a 4Gb FC HBA to access a SAN. Another example could be a very fast PCIe flash storage card or RAID controller that was installed in a low bandwidth PCIe 2.0 x4 slot, which artificially limited the total sequential throughput of the device.

I know that this has been a lot of technical information to digest, especially for people who are not hardware enthusiasts. I think the main idea you should take away from this article is that sequential throughput performance is quite important for many common SQL Server tasks and workloads. The low-level details about processors, memory types, expansion slots, and expansion devices actually make a lot of difference in the sequential throughput performance that you will get from a server and its storage subsystem. Having good sequential throughput makes your life so much easier as a database professional, allowing you to support your organization at a much higher level.

Nice write up Glenn!

'In-memory’ speed is all about perception; but how fast can your really search through memory with SQLServer? (My favorite perfmon counter for this is from the SQLServer Buffer Manager – Page lookups/sec * 8192 bytes) to have an impression of how much data is 'touched' under the hood.

If you, at some point, need more throughput, consider to switch over to a MPP (Massive Parallel Processing) architecture to scale out – that’s why we have SQL APS ! http://www.microsoft.com/aps

Hi Glenn,

based upon my experience the throughput is almost never a bottleneck, consider a transactional log file of a heavy loaded OLTP system, it's uncommon to see more than 15MB / sec (megabytes), I think that the real key of performance is the latency of you storage-chain, not the throughput itslef.

Let’s move now from hardware to SQL Server database file configuration, and look at five common IO myths mistakes.