Some interesting discussions always evolve around the topic of splitting strings. In two previous blog posts, "Split strings the right way – or the next best way" and "Splitting Strings : A Follow-Up," I hope I have demonstrated that chasing the "best-performing" T-SQL split function is fruitless. When splitting is actually necessary, CLR always wins, and the next best option can vary depending on the actual task at hand. But in those posts I hinted that splitting on the database side may not be necessary in the first place.

SQL Server 2008 introduced table-valued parameters, a way to pass a "table" from an application to a stored procedure without having to build and parse a string, serialize to XML, or deal with any of this splitting methodology. So I thought I would check how this method compares to the winner of our previous tests – since it may be a viable option, whether you can use CLR or not. (For the ultimate bible on TVPs, please see fellow SQL Server MVP Erland Sommarskog's comprehensive article.)

The Tests

For this test I'm going to pretend we are dealing with a set of version strings. Imagine a C# application that passes in a set of these strings (say, that have been collected from a set of users) and we need to match the versions against a table (say, which indicates the service releases that are applicable to a specific set of versions). Obviously a real application would have more columns than this, but just to create some volume and still keep the table skinny (I also use NVARCHAR throughout because that is what the CLR split function takes and I want to eliminate any ambiguity due to implicit conversion):

CREATE TABLE dbo.VersionStrings(left_post NVARCHAR(5), right_post NVARCHAR(5));

CREATE CLUSTERED INDEX x ON dbo.VersionStrings(left_post, right_post);

;WITH x AS

(

SELECT lp = CONVERT(DECIMAL(4,3), RIGHT(RTRIM(s1.[object_id]), 3)/1000.0)

FROM sys.all_objects AS s1

CROSS JOIN sys.all_objects AS s2

)

INSERT dbo.VersionStrings

(

left_post, right_post

)

SELECT

lp - CASE WHEN lp >= 0.9 THEN 0.1 ELSE 0 END,

lp + (0.1 * CASE WHEN lp >= 0.9 THEN -1 ELSE 1 END)

FROM x;

Now that the data is in place, the next thing we need to do is create a user-defined table type that can hold a set of strings. The initial table type to hold this string is pretty simple:

CREATE TYPE dbo.VersionStringsTVP AS TABLE (VersionString NVARCHAR(5));

Then we need a couple of stored procedures to accept the lists from C#. For simplicity, again, we'll just take a count so that we can be sure to perform a complete scan, and we'll ignore the count in the application:

CREATE PROCEDURE dbo.SplitTest_UsingCLR

@list NVARCHAR(MAX)

AS

BEGIN

SET NOCOUNT ON;

SELECT c = COUNT(*)

FROM dbo.VersionStrings AS v

INNER JOIN dbo.SplitStrings_CLR(@list, N',') AS s

ON s.Item BETWEEN v.left_post AND v.right_post;

END

GO

CREATE PROCEDURE dbo.SplitTest_UsingTVP

@list dbo.VersionStringsTVP READONLY

AS

BEGIN

SET NOCOUNT ON;

SELECT c = COUNT(*)

FROM dbo.VersionStrings AS v

INNER JOIN @list AS l

ON l.VersionString BETWEEN v.left_post AND v.right_post;

END

GO

Note that a TVP passed into a stored procedure must be marked as READONLY – there is currently no way to perform DML on the data like you would for a table variable or temp table. However, Erland has submitted a very popular request that Microsoft make these parameters more flexible (and plenty of deeper insight behind his argument here).

The beauty here is that SQL Server no longer has to deal with splitting a string at all – neither in T-SQL nor in handing it off to CLR – as it's already in a set structure where it excels.

Next, a C# console application that does the following:

- Accepts a number as an argument to indicate how many string elements should be defined

- Builds a CSV string of those elements, using StringBuilder, to pass to the CLR stored procedure

- Builds a DataTable with the same elements to pass to the TVP stored procedure

- Also tests the overhead of converting a CSV string to a DataTable and vice-versa before calling the appropriate stored procedures

The code for the C# app is found at the end of the article. I can spell C#, but I am by no means a guru; I am sure there are inefficiencies you can spot there that may make the code perform a bit better. But any such changes should affect the entire set of tests in a similar way.

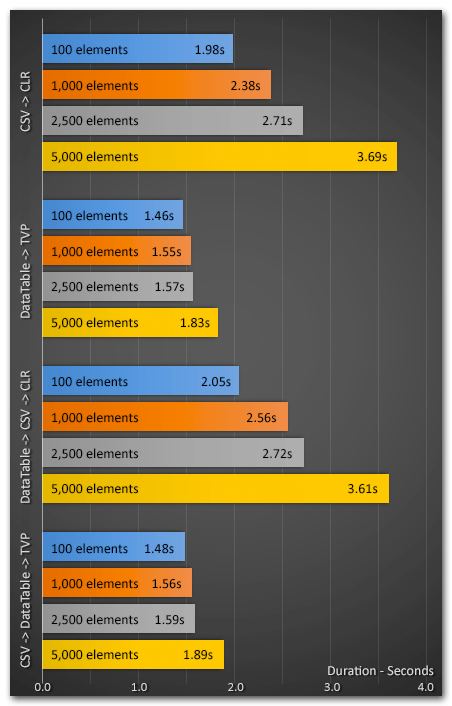

I ran the application 10 times using 100, 1,000, 2,500 and 5,000 elements. The results were as follows (this is showing average duration, in seconds, across the 10 tests):

Performance Aside…

In addition to the clear performance difference, TVPs have another advantage – table types are much simpler to deploy than CLR assemblies, especially in environments where CLR has been forbidden for other reasons. I am hoping that barriers to CLR are gradually disappearing, and new tools are making deployment and maintenance less painful, but I doubt the ease of initial deployment for CLR will ever be easier than native approaches.

On the other hand, on top of the read-only limitation, table types are like alias types in that they are difficult to modify after the fact. If you want to change the size of a column or add a column, there is no ALTER TYPE command, and in order to DROP the type and re-create it, you must first remove references to the type from all procedures that are using it. So for example in the above case if we needed to increase the VersionString column to NVARCHAR(32), we'd have to create a dummy type and alter the stored procedure (and any other procedure that is using it):

CREATE TYPE dbo.VersionStringsTVPCopy AS TABLE (VersionString NVARCHAR(32));

GO

ALTER PROCEDURE dbo.SplitTest_UsingTVP

@list dbo.VersionStringsTVPCopy READONLY

AS

...

GO

DROP TYPE dbo.VersionStringsTVP;

GO

CREATE TYPE dbo.VersionStringsTVP AS TABLE (VersionString NVARCHAR(32));

GO

ALTER PROCEDURE dbo.SplitTest_UsingTVP

@list dbo.VersionStringsTVP READONLY

AS

...

GO

DROP TYPE dbo.VersionStringsTVPCopy;

GO

(Or alternatively, drop the procedure, drop the type, re-create the type, and re-create the procedure.)

Conclusion

The TVP method consistently outperformed the CLR splitting method, and by a greater percentage as the number of elements increased. Even adding in the overhead of converting an existing CSV string to a DataTable yielded much better end-to-end performance. So I hope that, if I hadn't already convinced you to abandon your T-SQL string splitting techniques in favor of CLR, I have urged you to give table-valued parameters a shot. It should be easy to test out even if you're not currently using a DataTable (or some equivalent).

The C# Code Used For These Tests

As I said, I'm no C# guru, so there are probably plenty of naïve things I am doing here, but the methodology should be quite clear.

using System;

using System.IO;

using System.Data;

using System.Data.SqlClient;

using System.Text;

using System.Collections;

namespace SplitTester

{

class SplitTester

{

static void Main(string[] args)

{

DataTable dt_pure = new DataTable();

dt_pure.Columns.Add("Item", typeof(string));

StringBuilder sb_pure = new StringBuilder();

Random r = new Random();

for (int i = 1; i <= Int32.Parse(args[0]); i++)

{

String x = r.NextDouble().ToString().Substring(0,5);

sb_pure.Append(x).Append(",");

dt_pure.Rows.Add(x);

}

using

(

SqlConnection conn = new SqlConnection(@"Data Source=.;

Trusted_Connection=yes;Initial Catalog=Splitter")

)

{

conn.Open();

// four cases:

// (1) pass CSV string directly to CLR split procedure

// (2) pass DataTable directly to TVP procedure

// (3) serialize CSV string from DataTable and pass CSV to CLR procedure

// (4) populate DataTable from CSV string and pass DataTable to TCP procedure

// ********** (1) ********** //

write(Environment.NewLine + "Starting (1)");

SqlCommand c1 = new SqlCommand("dbo.SplitTest_UsingCLR", conn);

c1.CommandType = CommandType.StoredProcedure;

c1.Parameters.AddWithValue("@list", sb_pure.ToString());

c1.ExecuteNonQuery();

c1.Dispose();

write("Finished (1)");

// ********** (2) ********** //

write(Environment.NewLine + "Starting (2)");

SqlCommand c2 = new SqlCommand("dbo.SplitTest_UsingTVP", conn);

c2.CommandType = CommandType.StoredProcedure;

SqlParameter tvp1 = c2.Parameters.AddWithValue("@list", dt_pure);

tvp1.SqlDbType = SqlDbType.Structured;

c2.ExecuteNonQuery();

c2.Dispose();

write("Finished (2)");

// ********** (3) ********** //

write(Environment.NewLine + "Starting (3)");

StringBuilder sb_fake = new StringBuilder();

foreach (DataRow dr in dt_pure.Rows)

{

sb_fake.Append(dr.ItemArray[0].ToString()).Append(",");

}

SqlCommand c3 = new SqlCommand("dbo.SplitTest_UsingCLR", conn);

c3.CommandType = CommandType.StoredProcedure;

c3.Parameters.AddWithValue("@list", sb_fake.ToString());

c3.ExecuteNonQuery();

c3.Dispose();

write("Finished (3)");

// ********** (4) ********** //

write(Environment.NewLine + "Starting (4)");

DataTable dt_fake = new DataTable();

dt_fake.Columns.Add("Item", typeof(string));

string[] list = sb_pure.ToString().Split(',');

for (int i = 0; i < list.Length; i++)

{

if (list[i].Length > 0)

{

dt_fake.Rows.Add(list[i]);

}

}

SqlCommand c4 = new SqlCommand("dbo.SplitTest_UsingTVP", conn);

c4.CommandType = CommandType.StoredProcedure;

SqlParameter tvp2 = c4.Parameters.AddWithValue("@list", dt_fake);

tvp2.SqlDbType = SqlDbType.Structured;

c4.ExecuteNonQuery();

c4.Dispose();

write("Finished (4)");

}

}

static void write(string msg)

{

Console.WriteLine(msg + ": "

+ DateTime.UtcNow.ToString("HH:mm:ss.fffff"));

}

}

}

"… table types are much simpler to deploy than CLR assemblies …"

This phrase and indeed its containing paragraph reminded me a solution to CLR deployment I had created based on this challenge. It doesn't address the fact that some systems may have CLR off, but it will help with deployment when CLR is available.

I hadn't blogged in a while so this gave me reason (i don't have all the formatting as this blog but I hope the content is useful).

http://dnhlmssql.blogspot.com/2012/08/creating-all-text-deployment-for-sql.html

Thanks for being so detailed Aaron.