Introduction

Achieving minimal logging with INSERT...SELECT can be a complicated business. The considerations listed in the Data Loading Performance Guide are still pretty comprehensive, though one also needs to read SQL Server 2016, Minimal logging and Impact of the Batchsize in bulk load operations by Parikshit Savjani of the SQL Server Tiger Team to get the updated picture for SQL Server 2016 and later, when bulk loading into clustered rowstore tables. That said, this article is purely concerned with providing new details about minimal logging when bulk loading traditional (not “memory-optimized”) heap tables using INSERT...SELECT. Tables with a b-tree clustered index are covered separately in part two of this series.

Heap tables

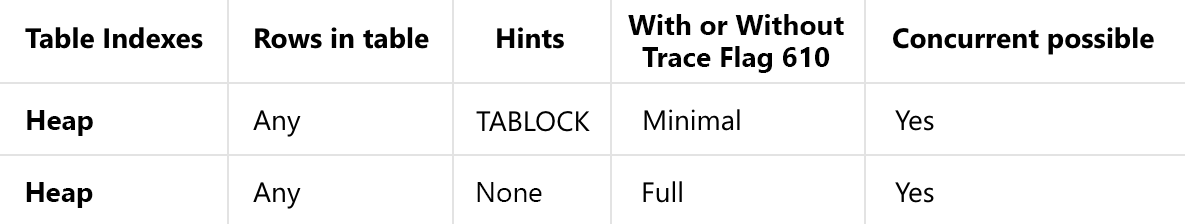

When inserting rows using INSERT...SELECT into a heap with no nonclustered indexes, the documentation universally states that such inserts will be minimally logged as long as a TABLOCK hint is present. This is reflected in the summary tables included in the Data Loading Performance Guide and the Tiger Team post. The summary rows for heap tables without indexes are the same in both documents (no changes for SQL Server 2016):

An explicit TABLOCK hint is not the only way to meet the requirement for table-level locking. We can also set the ‘table lock on bulk load’ option for the target table using sp_tableoption or by enabling documented trace flag 715. (Note: These options are not sufficient to enable minimal logging when using INSERT...SELECT because INSERT...SELECT does not support bulk update locks).

The “concurrent possible” column in the summary only applies to bulk loading methods other than INSERT...SELECT. Concurrent loading of a heap table is not possible with INSERT...SELECT. As noted in the Data Loading Performance Guide, bulk loading with INSERT...SELECT takes an exclusive X lock on the table, not the bulk update BU lock required for concurrent bulk loads.

All that aside — and assuming there is no other reason not to expect minimal logging when bulk loading an unindexed heap with TABLOCK (or equivalent) — the insert still might not be minimally logged…

An Exception to the Rule

The following demo script should be run on a development instance in a new test database set to use the SIMPLE recovery model. It loads a number of rows into a heap table using INSERT...SELECT with TABLOCK, and reports on the transaction log records generated:

CREATE TABLE dbo.TestHeap

(

id integer NOT NULL IDENTITY,

c1 integer NOT NULL,

padding char(45) NOT NULL

DEFAULT ''

);

GO

-- Clear the log

CHECKPOINT;

GO

-- Insert rows

INSERT dbo.TestHeap WITH (TABLOCK)

(c1)

SELECT TOP (897)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

GO

-- Show log entries

SELECT

FD.Operation,

FD.Context,

FD.[Log Record Length],

FD.[Log Reserve],

FD.AllocUnitName,

FD.[Transaction Name],

FD.[Lock Information],

FD.[Description]

FROM sys.fn_dblog(NULL, NULL) AS FD;

GO

-- Count the number of fully-logged rows

SELECT

[Fully Logged Rows] = COUNT_BIG(*)

FROM sys.fn_dblog(NULL, NULL) AS FD

WHERE

FD.Operation = N'LOP_INSERT_ROWS'

AND FD.Context = N'LCX_HEAP'

AND FD.AllocUnitName = N'dbo.TestHeap';

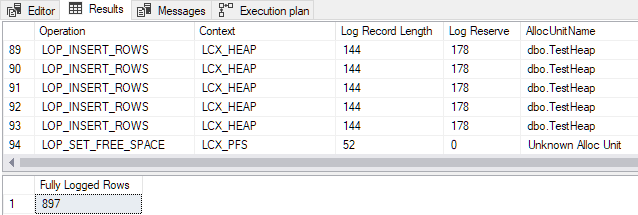

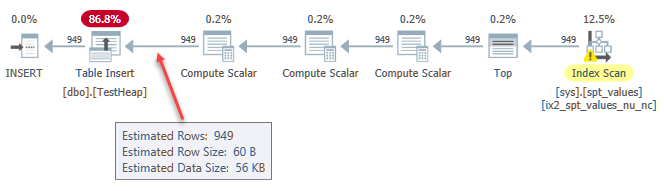

The output shows that all 897 rows were fully logged despite apparently meeting all the conditions for minimal logging (only a sample of log records are shown for space reasons):

The same result is seen if the insert is repeated (i.e. it does not matter if the heap table is empty or not). This result contradicts the documentation.

The Minimal Logging Threshold for Heaps

The number of rows one needs to add in a single INSERT...SELECT statement to achieve minimal logging into an unindexed heap with table locking enabled depends on a calculation SQL Server performs when estimating the total size of the data to be inserted. The inputs to this calculation are:

- The version of SQL Server.

- The estimated number of rows leading into the Insert operator.

- Target table row size.

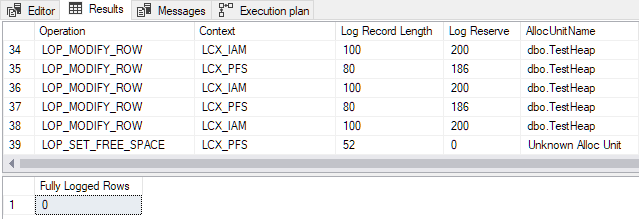

For SQL Server 2012 and earlier, the transition point for this particular table is 898 rows. Changing the number in the demo script TOP clause from 897 to 898 produces the following output:

The transaction log entries generated are concerned with page allocation and the maintenance of Index Allocation Map (IAM) and Page Free Space (PFS) structures. Remember that minimal logging means that SQL Server does not log each row insertion individually. Instead, only changes to metadata and allocation structures are logged. Changing from 897 to 898 rows enables minimal logging for this specific table.

For SQL Server 2014 and later, the transition point is 950 rows for this table. Running the INSERT...SELECT with TOP (949) will use full logging – changing to TOP (950) will produce minimal logging.

The thresholds are not dependent on the Cardinality Estimation model in use or the database compatibility level.

The Data Size Calculation

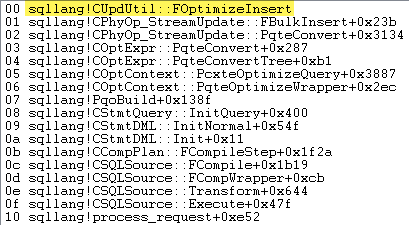

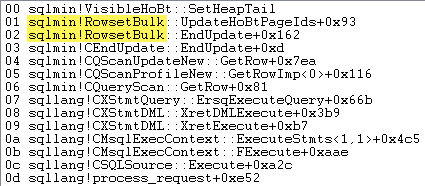

Whether SQL Server decides to use rowset bulk load — and therefore whether minimal logging is available or not — depends on the result of a series of calculations performed in a method called sqllang!CUpdUtil::FOptimizeInsert, which either returns true for minimal logging, or false for full logging. An example call stack is shown below:

The essence of the test is:

- The insert must be for more than 250 rows.

- The total insert data size must be calculated as at least 8 pages.

The check for more than 250 rows depends solely on the estimated number of rows arriving at the Table Insert operator. This is shown in the execution plan as ‘Estimated Number of Rows’. Be careful with this. It is easy to produce a plan with a low estimated number of rows, for example by using a variable in the TOP clause without OPTION (RECOMPILE). In that case, the optimizer guesses at 100 rows, which will not reach the threshold, and so prevent bulk load and minimal logging.

The total data size calculation is more complex, and does not match the ‘Estimated Row Size’ flowing into the Table Insert operator. The way the calculation is performed is slightly different in SQL Server 2012 and earlier compared with SQL Server 2014 and later. Still, both produce a row size result that is different from what is seen in the execution plan.

The Row Size Calculation

The total insert data size is calculated by multiplying the estimated number of rows by the expected maximum row size. The row size calculation is the point that differs between SQL Server versions.

In SQL Server 2012 and earlier, the calculation is performed by sqllang!OptimizerUtil::ComputeRowLength. For the test heap table (deliberately designed with simple fixed-length non-null columns using the original FixedVar row storage format) an outline of the calculation is:

- Initialize a FixedVar metadata generator.

- Get type and attribute information for each column in the Table Insert input stream.

- Add typed columns and attributes to the metadata.

- Finalize the generator and ask it for the maximum row size.

- Add overhead for the null bitmap and number of columns.

- Add four bytes for the row status bits and row offset to the number of columns data.

Physical Row Size

The result of this calculation might be expected to match the physical row size, but it does not. For example, with row versioning turned off for the database:

SELECT

DDIPS.index_type_desc,

DDIPS.alloc_unit_type_desc,

DDIPS.page_count,

DDIPS.record_count,

DDIPS.min_record_size_in_bytes,

DDIPS.max_record_size_in_bytes,

DDIPS.avg_record_size_in_bytes

FROM sys.dm_db_index_physical_stats

(

DB_ID(),

OBJECT_ID(N'dbo.TestHeap', N'U'),

0, -- heap

NULL, -- all partitions

'DETAILED'

) AS DDIPS;

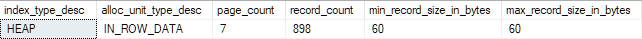

…gives a record size of 60 bytes in every row of the test table:

This is as described in Estimate the Size of a Heap:

- Total byte size of all fixed-length columns = 53 bytes:

id integer NOT NULL= 4 bytesc1 integer NOT NULL= 4 bytespadding char(45) NOT NULL= 45 bytes.

- Null bitmap = 3 bytes:

- = 2 + int((Num_Cols + 7) / 8)

- = 2 + int((3 + 7) / 8)

- = 3 bytes.

- Row header = 4 bytes.

- Total 53 + 3 + 4 = 60 bytes.

It also matches the estimated row size shown in the execution plan:

Internal Calculation Details

The internal calculation used to determine if bulk load is used comes up with a different result, based on the following insert stream column information obtained using a debugger. The type numbers used match sys.types:

- Total fixed-length column size = 66 bytes:

- Type id 173

binary(8)= 8 bytes (internal). - Type id 56

integer= 4 bytes (internal). - Type id 104

bit= 1 byte (internal). - Type id 56

integer= 4 bytes (idcolumn). - Type id 56

integer= 4 bytes (c1column). - Type id 175

char(45)= 45 bytes (paddingcolumn).

- Type id 173

- Null bitmap = 3 bytes (as before).

- Row header overhead = 4 bytes (as before).

- Calculated row size = 66 + 3 + 4 = 73 bytes.

The difference is that the input stream feeding the Table Insert operator contains three extra internal columns. These are stripped out when showplan is generated. The extra columns make up the table insert locator, which includes the bookmark (RID or row locator) as its first component. It is metadata for the insert and does not end up being added to the table.

The extra columns explain the discrepancy between the calculation performed by OptimizerUtil::ComputeRowLength and the physical size of the rows. This could be viewed as a bug: SQL Server should not count metadata columns in the insert stream toward the final physical size of the row. On the other hand, the calculation may simply be a best-effort estimate using the generic update operator.

The calculation also takes no account of other factors like the 14-byte overhead of row versioning. This can be tested by re-running the demo script with either of the snapshot isolation or read committed snapshot isolation database options enabled. The physical size of the row will increase by 14 bytes (from 60 bytes to 74), but the threshold for minimal logging remains unchanged at 898 rows.

Threshold Calculation

We now have all the details we need to see why the threshold is 898 rows for this table on SQL Server 2012 and earlier:

- 898 rows meets the first requirement for more than 250 rows.

- Computed row size = 73 bytes.

- Estimated number of rows = 897.

- Total data size = 73 bytes * 897 rows = 65481 bytes.

- Total pages = 65481 / 8192 = 7.9932861328125.

- This is just below the second requirement for >= 8 pages.

- For 898 rows, the number of pages is 8.002197265625.

- This is >=8 pages so minimal logging is activated.

In SQL Server 2014 and later, the changes are:

- The row size is calculated by the metadata generator.

- The internal integer column in the table locator is no longer present in the insert stream. This represents the uniquifier, which only applies to indexes. It seems likely this was removed as a bug fix.

- The expected row size changes from 73 to 69 bytes due to the omitted integer column (4 bytes).

- The physical size is still 60 bytes. The remaining difference of 9 bytes is accounted for by the extra 8-byte RID and 1-byte bit internal columns in the insert stream.

To reach the threshold of 8 pages with 69 bytes per row:

- 8 pages * 8192 bytes per page = 65536 bytes.

- 65535 bytes / 69 bytes per row = 949.7971014492754 rows.

- We therefore expect a minimum of 950 rows to enable rowset bulk load for this table on SQL Server 2014 onward.

Summary and Final Thoughts

In contrast to the bulk loading methods that support batch size, as covered in the post by Parikshit Savjani, INSERT...SELECT into an unindexed heap (empty or not) does not always result in minimal logging when table-locking is specified.

To enable minimal logging with INSERT...SELECT, SQL Server must expect more than 250 rows with a total size of at least one extent (8 pages).

When calculating the estimated total insert size (to compare with the 8 page threshold), SQL Server multiplies the estimated number of rows by a calculated maximum row size. SQL Server counts internal columns present in the insert stream when computing the row size. For SQL Server 2012 and earlier, this adds 13 bytes per row. For SQL Server 2014 and later, it adds 9 bytes per row. This only affects the calculation; it does not affect the final physical size of the rows.

When minimally-logged heap bulk load is active, SQL Server does not insert rows one at a time. Extents are allocated in advance, and rows to be inserted are collected into whole new pages by sqlmin!RowsetBulk before being added to the existing structure. An example call stack is shown below:

Logical reads are not reported for the target table when minimally-logged heap bulk load is used – the Table Insert operator does not need to read an existing page to locate the insertion point for each new row.

Execution plans currently do not show how many rows or pages were inserted using rowset bulk load and minimal logging. Perhaps this useful information will be added to the product in a future release.

Paul,

Here you are talking about the two extra requirements including a minimum number of rows and the cumulative size of data (a whole extent), but in the linked SQL Server 2016 article about minimal logging, they are warning about all the wasted space due to extent-size allocations taking place during "fast loads" of batches consisting of as few as 10 rows. What am I missing?

Nick,

Thanks for the thoughtful comment.

This (introductory) article is about minimal logging into heaps via the 'bulk rowset' mechanism.

The 'fast loads' mentioned in the 2016 Tiger Team update use a different mechanism enabled by TF 610.

The 'fast loads' can result in inefficient space utilization when a batch size is specified; however, it is not possible to specify a batch size with

INSERT...SELECTso I won't be covering that aspect specifically in this mini-series.If it seems like some of this is confusing and imperfectly documented, that is because it is.

I will be covering inserts into b-trees (fast load context) in the next part(s) of this series.

Paul

Hi Paul,

This great article is incredibly timely for me. I've been working with indexes a whole lot lately and have been particularly interested in the documents that you also cited because I'm also seriously interested in Minimal Logging for secondary+ inserts into Clustered Rowstores.

As usual for your articles, you've done an awesome job on your analysis for Heaps with Insert..Select. I can't wait to read your next article.

Thanks for doing the research and testing to write such an article. Again, awesome job as usual.

Thanks very much, Jeff. There is a lot of ground to cover here, and I'm going to try to be as clear as I am able in the next two parts of this series. I will try to anticipate as many interests as possible, but if I miss something, or an aspect isn't totally clear, please let me know.

Other parts in this series:

Part 2: Minimal Logging with INSERT…SELECT into Empty Clustered Tables

Part 3: Minimal Logging with INSERT…SELECT and Fast Load Context