Introduction

Achieving minimal logging using INSERT...SELECT into an empty clustered index target is not quite as simple as described in the Data Performance Loading Guide.

This post provides new details about the requirements for minimal logging when the insert target is an empty traditional clustered index. (The word “traditional” there excludes columnstore and memory-optimized (‘Hekaton’) clustered tables). For the conditions that apply when the target table is a heap, see the previous article in this series.

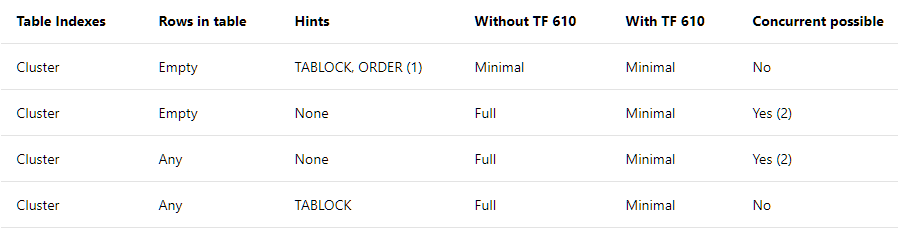

Summary for Clustered Tables

The Data Loading Performance Guide contains a high-level summary of the conditions required for minimal logging into clustered tables:

This post is concerned with the top row only. It states that TABLOCK and ORDER hints are required, with a note that says:

If using BULK INSERT the order hint must be used.

Empty Target with Table Lock

The summary top row suggests that all inserts to an empty clustered index will be minimally logged as long as TABLOCK and ORDER hints are specified. The TABLOCK hint is required to enable the RowSetBulk facility as used for heap table bulk loads. An ORDER hint is required to ensure rows arrive at the Clustered Index Insert plan operator in target index key order. Without this guarantee, SQL Server might add index rows that are not sorted correctly, which would not be good.

Unlike other bulk loading methods, it is not possible to specify the required ORDER hint on an INSERT...SELECT statement. This hint is not the same as using an ORDER BY clause on the INSERT...SELECT statement. An ORDER BY clause on an INSERT only guarantees the way any identity values are assigned, not row insert order.

For INSERT...SELECT, SQL Server makes its own determination whether to ensure rows are presented to the Clustered Index Insert operator in key order or not. The outcome of this assessment is visible in execution plans through the DMLRequestSort property of the Insert operator. The DMLRequestSort property must be set to true for INSERT...SELECT into an index to be minimally logged. When it is set to false, minimal logging cannot occur.

Having DMLRequestSort set to true is the only acceptable guarantee of insert input ordering for SQL Server. One might inspect the execution plan and predict that rows should/will/must arrive in clustered index order, but without the specific internal guarantees provided by DMLRequestSort, that assessment counts for nothing.

When DMLRequestSort is true, SQL Server may introduce an explicit Sort operator in the execution plan. If it can internally guarantee ordering in other ways, the Sort may be omitted. If both sort and no-sort alternatives are available, the optimizer will make a cost-based choice. The cost analysis does not account for minimal logging directly; it is driven by the expected benefits of sequential I/O and the avoidance of page splitting.

DMLRequestSort Conditions

Both of the following tests must pass for SQL Server to choose to set DMLRequestSort to true when inserting to an empty clustered index with table locking specified:

- An estimate of more than 250 rows at the input side of the Clustered Index Insert operator; and

- An estimated data size of more than 2 pages. The estimated data size is not an integer, so a result of 2.001 pages would meet this condition.

(This may remind you of the conditions for heap minimal logging, but the required estimated data size here is two pages rather than eight.)

Data Size Calculation

The estimated data size calculation here is subject to the same quirks described in the previous article for heaps, except that the 8-byte RID is not present.

For SQL Server 2012 and earlier, this means 5 extra bytes per row are included in the data size calculation: One byte for an internal bit flag, and four bytes for the uniquifier (used in the calculation even for unique indexes, which do not store a uniquifier).

For SQL Server 2014 and later, the uniquifier is correctly omitted for unique indexes, but the one extra byte for the internal bit flag is retained.

Demo

The following script should be run on a development SQL Server instance in a new test database set to use the SIMPLE or BULK_LOGGED recovery model.

The demo loads 268 rows into a brand new clustered table using INSERT...SELECT with TABLOCK, and reports on the transaction log records generated.

IF OBJECT_ID(N'dbo.Test', N'U') IS NOT NULL

BEGIN

DROP TABLE dbo.Test;

END;

GO

CREATE TABLE dbo.Test

(

id integer NOT NULL IDENTITY

CONSTRAINT [PK dbo.Test (id)]

PRIMARY KEY,

c1 integer NOT NULL,

padding char(45) NOT NULL

DEFAULT ''

);

GO

-- Clear the log

CHECKPOINT;

GO

-- Insert rows

INSERT dbo.Test WITH (TABLOCK)

(c1)

SELECT TOP (268)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

GO

-- Show log entries

SELECT

FD.Operation,

FD.Context,

FD.[Log Record Length],

FD.[Log Reserve],

FD.AllocUnitName,

FD.[Transaction Name],

FD.[Lock Information],

FD.[Description]

FROM sys.fn_dblog(NULL, NULL) AS FD;

GO

-- Count the number of fully-logged rows

SELECT

[Fully Logged Rows] = COUNT_BIG(*)

FROM sys.fn_dblog(NULL, NULL) AS FD

WHERE

FD.Operation = N'LOP_INSERT_ROWS'

AND FD.Context = N'LCX_CLUSTERED'

AND FD.AllocUnitName = N'dbo.Test.PK dbo.Test (id)';

(If you run the script on SQL Server 2012 or earlier, change the TOP clause in the script from 268 to 252, for reasons that will be explained in a moment.)

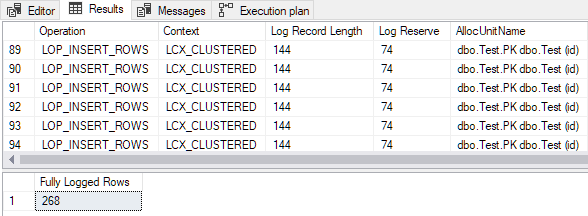

The output shows that all inserted rows were fully logged despite the empty target clustered table and the TABLOCK hint:

Calculated insert data size

The execution plan properties of the Clustered Index Insert operator show that DMLRequestSort is set to false. This is because although the estimated number of rows to insert is more than 250 (meeting the first requirement), the calculated data size does not exceed two 8KB pages.

The calculation details (for SQL Server 2014 onward) are as follows:

- Total fixed-length column size = 54 bytes:

- Type id 104

bit= 1 byte (internal). - Type id 56

integer= 4 bytes (idcolumn). - Type id 56

integer= 4 bytes (c1column). - Type id 175

char(45)= 45 bytes (paddingcolumn).

- Type id 104

- Null bitmap = 3 bytes.

- Row header overhead = 4 bytes.

- Calculated row size = 54 + 3 + 4 = 61 bytes.

- Calculated data size = 61 bytes * 268 rows = 16,348 bytes.

- Calculated data pages = 16,384 / 8192 = 1.99560546875.

The calculated row size (61 bytes) differs from the true row storage size (60 bytes) by the extra one byte of internal metadata present in the insert stream. The calculation also does not account for the 96 bytes used on each page by the page header, or other things like row versioning overhead. The same computation on SQL Server 2012 adds a further 4 bytes per row for the uniquifier (which is not present in unique indexes as previously mentioned). The extra bytes mean fewer rows are expected to fit on each page:

- Calculated row size = 61 + 4 = 65 bytes.

- Calculated data size = 65 bytes * 252 rows = 16,380 bytes

- Calculated data pages = 16,380 / 8192 = 1.99951171875.

Changing the TOP clause from 268 rows to 269 (or from 252 to 253 for 2012) makes the expected data size calculation just tip over the 2 page minimum threshold:

- SQL Server 2014

- 61 bytes * 269 rows = 16,409 bytes.

- 16,409 / 8192 = 2.0030517578125 pages.

- SQL Server 2012

- 65 bytes * 253 rows = 16,445 bytes.

- 16,445 / 8192 = 2.0074462890625 pages.

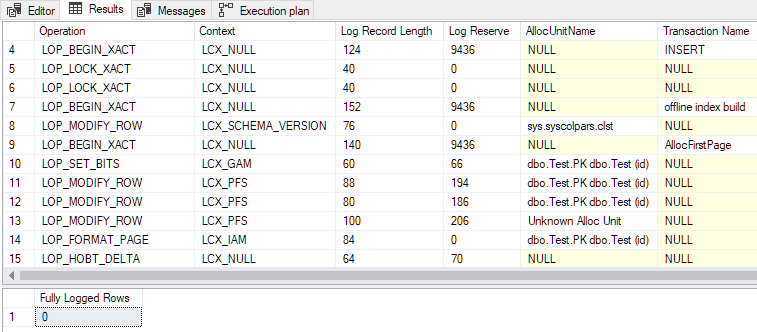

With the second condition now also satisfied, DMLRequestSort is set to true, and minimal logging is achieved, as shown in the output below:

Some other points of interest:

- A total of 79 log records are generated, compared with 328 for the fully-logged version. Fewer log records are the expected result of minimal logging.

- The

LOP_BEGIN_XACTrecords in the minimally-logged records reserve a comparatively large amount of log space (9436 bytes each). - One of the transaction names listed in the log records is “offline index build”. While we did not ask for an index to be created as such, bulk loading rows into an empty index is essentially the same operation.

- The fully-logged insert takes a table-level exclusive lock (

Tab-X), while the minimally-logged insert takes schema modification (Sch-M) just like a ‘real’ offline index build does. - Bulk loading an empty clustered table using

INSERT...SELECTwithTABLOCKandDMRequestSortset to true uses theRowsetBulkmechanism, just as the minimally-logged heap loads did in the previous article.

Cardinality Estimates

Watch out for low cardinality estimates at the Clustered Index Insert operator. If either of the thresholds required to set DMLRequestSort to true is not reached due to inaccurate cardinality estimation, the insert will be fully logged, regardless of the actual number of rows and total data size encountered at execution time.

For example, changing the TOP clause in the demo script to use a variable results in a fixed cardinality guess of 100 rows, which is below the 251 row minimum:

-- Insert rows

DECLARE @NumRows bigint = 269;

INSERT dbo.Test WITH (TABLOCK)

(c1)

SELECT TOP (@NumRows)

CHECKSUM(NEWID())

FROM master.dbo.spt_values AS SV;

Plan Caching

The DMLRequestSort property is saved as part of the cached plan. When a cached plan is reused, the value of DMLRequestSort is not recalculated at execution time, unless a recompilation occurs. Note that recompilations do not occur for TRIVIAL plans based on changes in statistics or table cardinality.

One way to avoid any unexpected behaviours due to caching is to use an OPTION (RECOMPILE) hint. This will ensure the appropriate setting for DMLRequestSort is recalculated, at the cost of a compile on each execution.

Trace Flag

It is possible to force DMLRequestSort to be set to true by setting undocumented and unsupported trace flag 2332, as I wrote in Optimizing T-SQL queries that change data. Unfortunately, this does not affect minimal logging eligibility for empty clustered tables — the insert must still be estimated at more than 250 rows and 2 pages. This trace flag does affect other minimal-logging scenarios, which are covered in the final part of this series.

Summary

Bulk loading an empty clustered index using INSERT...SELECT reuses the RowsetBulk mechanism used to bulk load heap tables. This requires table locking (normally achieved with a TABLOCK hint) and an ORDER hint. There is no way to add an ORDER hint to an INSERT...SELECT statement. As a consequence, achieving minimal logging into an empty clustered table requires that the DMLRequestSort property of the Clustered Index Insert operator is set to true. This guarantees to SQL Server that rows presented to the Insert operator will arrive in target index key order. The effect is the same as when using the ORDER hint available for other bulk insert methods like BULK INSERT and bcp.

In order for DMLRequestSort to be set to true, there must be:

- More than 250 rows estimated to be inserted; and

- An estimated insert data size of more than two pages.

The estimated insert data size calculation does not match the result of multiplying the execution plan estimated number of rows and estimated row size properties at the input to the Insert operator. The internal calculation (incorrectly) includes one or more internal columns in the insert stream, which are not persisted in the final index. The internal calculation also does not account for page headers or other overheads like row versioning.

When testing or debugging minimal logging issues, beware of low cardinality estimates, and remember that the setting of DMLRequestSort is cached as part of the execution plan.

The final part of this series details the conditions required to achieve minimal logging without using the RowsetBulk mechanism. These correspond directly to the new facilities added under trace flag 610 to SQL Server 2008, then changed to be on by default from SQL Server 2016 onward.