Azure SQL Database currently offers three service tiers for your workload: Basic, Standard, and Premium. Basic supports only one size, 5 DTUs. Premium starts at 125 DTUs and goes up to 4,000 DTUs. Premium is the top tier, built for higher I/O workloads; it provides lower latency per I/O, and an order of magnitude more IOPS per DTU, than the Standard tier.

Prior to August 2017, the Standard tier only supported sizes between 10 and 100 DTUs. New performance levels and storage add-ons, currently available in preview, offer price optimization benefits for CPU-intensive workloads. With those, the Standard tier now supports up to 3,000 DTUs.

At this point, you may be asking yourself what, exactly, is a DTU? A DTU is a Database Transaction Unit and is a mixture of CPU, memory, and data and transaction log I/O. (Andy Mallon, @AMtwo, recently addressed this in "What the heck is a DTU?") You can hit your DTU limit by maxing out CPU, memory, or I/O.
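One way to see which of these resources is pushing a database toward its DTU limit is to query the `sys.dm_db_resource_stats` view from inside the database. The following is a minimal sketch, not the method used for the tests in this post. It assumes DTU percentage can be approximated as the highest of the CPU, data I/O, and log write percentages; the column alias `approx_dtu_percent` is my own name.

```sql
-- Recent resource usage for the current database (roughly one row per 15 seconds).
-- Assumption: DTU percentage is approximated as the greatest of the
-- CPU, data I/O, and log write percentages.
SELECT
    end_time,
    avg_cpu_percent,
    avg_data_io_percent,
    avg_log_write_percent,
    avg_memory_usage_percent,
    (SELECT MAX(v)
     FROM (VALUES (avg_cpu_percent),
                  (avg_data_io_percent),
                  (avg_log_write_percent)) AS x(v)) AS approx_dtu_percent
FROM sys.dm_db_resource_stats
ORDER BY end_time DESC;
```

Whichever column sits closest to `approx_dtu_percent` during a run is the resource driving the DTU figure for that workload.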

Previously, the Standard tier only offered four levels (10, 20, 50, and 100 DTUs) with a database size limit of 250GB on standard disk. If you had a database that was larger than 250GB but did not need more than 100 DTUs for CPU, memory, or I/O, you were stuck paying a Premium price just for database size. With the new changes, you can now have up to a 1TB database in the Standard tier; you just have to pay for the extra storage. Storage is currently billed at $0.085/GB per month during the preview. Growing from the included 250GB to 1TB (1,024GB) adds 774GB, at a cost of $65.79 per month.
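The storage arithmetic is easy to re-run for other target sizes. This is a small sketch using the preview price quoted above; the variable names are illustrative only.

```sql
-- Extra storage cost when growing a Standard database past the included 250GB.
DECLARE @included_gb  INT          = 250;    -- storage included in the Standard tier
DECLARE @target_gb    INT          = 1024;   -- 1TB expressed in GB
DECLARE @price_per_gb DECIMAL(6,3) = 0.085;  -- preview price per GB per month (from this post)

SELECT
    @target_gb - @included_gb AS extra_gb,
    CAST((@target_gb - @included_gb) * @price_per_gb AS DECIMAL(10,2)) AS extra_cost_per_month;
-- extra_gb = 774, extra_cost_per_month = 65.79
```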

The new Standard preview DTU sizes support 200, 400, 800, 1,600, and 3,000 DTU options. If you have a SQL Server database workload that is more CPU-bound than I/O, these Standard tier options have the potential to save you a lot of money; however, if your workload is I/O bound, the Premium tier is going to outperform the Standard tier.
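Moving a database between these sizes can be scripted in T-SQL. The sketch below assumes a database named `TestDb` (a hypothetical name) and uses S4 as an example target. It is not necessarily how the sizes were changed for the tests in this post.

```sql
-- Hypothetical database name; substitute your own.
-- Request a move to the Standard tier at the S4 (200 DTU) performance level.
ALTER DATABASE [TestDb]
MODIFY (EDITION = 'Standard', SERVICE_OBJECTIVE = 'S4');

-- Check which edition and performance level the database currently reports.
SELECT
    DATABASEPROPERTYEX('TestDb', 'Edition')          AS edition,
    DATABASEPROPERTYEX('TestDb', 'ServiceObjective') AS service_objective;
```

The scale operation may take some time to finish, so it is worth confirming the reported service objective before starting a timed test.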

I decided to try two different workloads to see how the Standard and Premium tiers compared to each other. I wanted to create a simple and reproducible test so that others can validate the results for themselves. For my first test, I wanted to generate a healthy mix of CPU and I/O. I was hoping that I would be pushing more CPU than I/O, and be able to show that the expanded Standard tier would outperform a Premium tier with the same DTU size. I didn’t exactly get the results I was hoping for.

To set up this demo, I created a table with three columns populated from GUIDs (two UNIQUEIDENTIFIER columns and one VARCHAR column), inserted 1 million rows, and then updated the two UNIQUEIDENTIFIER columns with new IDs. The sample code is below:

    CREATE TABLE dbo.TestTable
    (
        Table_id UNIQUEIDENTIFIER DEFAULT NEWID(),
        Customer_id UNIQUEIDENTIFIER DEFAULT NEWID(),
        Cust_Name VARCHAR(40) DEFAULT CAST(NEWID() AS VARCHAR(40))
    );

    SET NOCOUNT ON;
    GO

    INSERT INTO dbo.TestTable DEFAULT VALUES;
    GO 1000000

    CREATE CLUSTERED INDEX [ClustTestTable] ON [dbo].[TestTable]
    (
        [Table_id] ASC,
        [Customer_id] ASC
    );

    SET STATISTICS TIME ON;

    UPDATE TestTable
    SET Table_id = NEWID(), Customer_id = NEWID();
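Note that `GO 1000000` relies on the batch-repeat feature of client tools such as SSMS and sqlcmd, and it sends one million single-row batches. If loading the table that way is too slow, a set-based insert is one possible alternative. The sketch below is my own variation, not the code used for the tests. It assumes that cross joining `sys.all_objects` with itself yields at least one million rows in your database, which you should verify.

```sql
-- Alternative setup step: load 1 million rows in a single statement.
-- Assumption: sys.all_objects cross joined with itself returns >= 1,000,000 rows.
INSERT INTO dbo.TestTable (Table_id, Customer_id, Cust_Name)
SELECT TOP (1000000)
    NEWID(),
    NEWID(),
    CAST(NEWID() AS VARCHAR(40))
FROM sys.all_objects AS a
CROSS JOIN sys.all_objects AS b;

-- Confirm the row count before creating the index and running the UPDATE.
SELECT COUNT(*) AS row_count FROM dbo.TestTable;
```

This only changes how the table is populated; the timed `UPDATE` statement stays the same.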

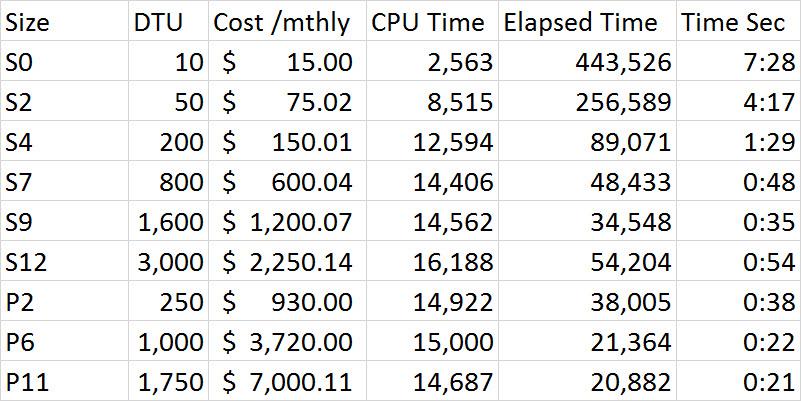

As I ran through the series of tests, performance steadily improved in the Standard tier until I got to the S12 option where, oddly, CPU and elapsed time increased. I ran the test multiple times and S12 consistently took 54 seconds. It is pretty clear from my first test that the Premium tier outperformed the Standard tier. For example, the S9 and P2 are closest in time, but the S9 has 1,600 DTUs compared to 250 for the P2. This test is more about I/O capabilities. The chart below shows the size, DTU level, cost, CPU time, elapsed time, and time in seconds for each test:

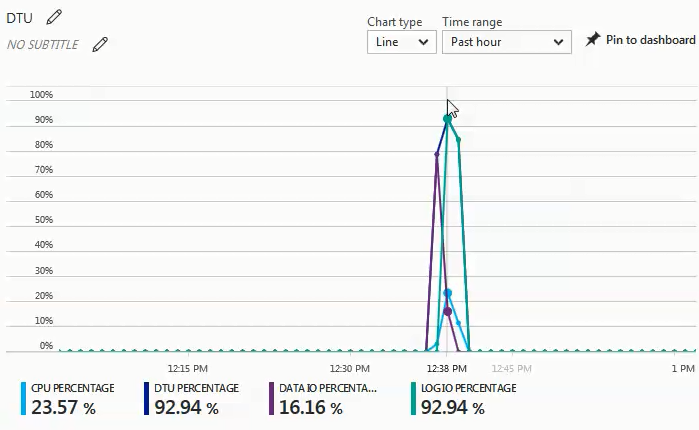

As the tests were being executed, I observed in the monitor dashboard that data I/O and log I/O percentage were the driving force behind the DTU percentage. The following chart was from a run against an S4 database:

I then decided to try another series of tests that would be more CPU-heavy. For that test I used the following script:

    SET STATISTICS TIME ON;

    SELECT SUM(CONVERT(BIGINT, t1.object_id)
             + CONVERT(BIGINT, t2.object_id)
             + CONVERT(BIGINT, t3.object_id)
             + CONVERT(BIGINT, t4.object_id))
    FROM sys.objects t1
    CROSS JOIN sys.objects t2
    CROSS JOIN sys.objects t3
    CROSS JOIN sys.objects t4;

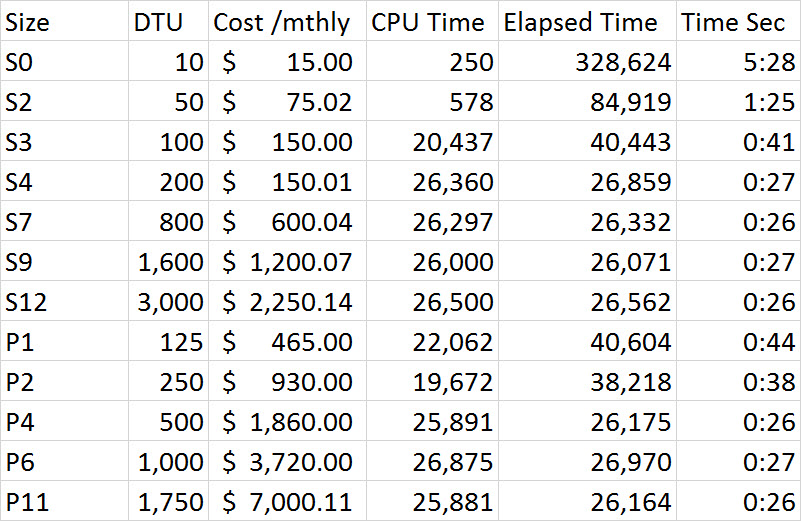

What I observed in the monitor dashboard on this series of tests is that CPU percentage was the sole driver of DTU percentage. As I went through the series of tests in the Standard tier, the results seemed to plateau at roughly 27 seconds, starting at the S4 size. What struck me as odd is that an S4 at 200 DTUs took 27 seconds to complete while the corresponding P2 at 250 DTUs took 38 seconds; a P4 at 500 DTUs was more comparable. If we look at the cost differential for this demo, an S4 during preview costs only $150.01 per month, while a P4 costs $1,860; the S4 provides a cost savings of just over $1,700. Let’s imagine that a P2 at 250 DTUs had performed as we expected; a P2 costs $930 and would still cost $780 more than an S4.
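The cost differences quoted above can be reproduced with a quick query. This is a sketch that uses only the monthly prices mentioned in this post; the column names are illustrative.

```sql
-- Monthly cost of each size compared with the S4 preview price.
SELECT
    t.size_name,
    t.dtus,
    t.monthly_cost,
    t.monthly_cost - s4.monthly_cost AS extra_vs_s4
FROM (VALUES ('S4', 200,  150.01),
             ('P2', 250,  930.00),
             ('P4', 500, 1860.00)) AS t(size_name, dtus, monthly_cost)
CROSS JOIN (SELECT CAST(150.01 AS DECIMAL(10,2)) AS monthly_cost) AS s4
ORDER BY t.monthly_cost;
-- extra_vs_s4: S4 = 0.00, P2 = 779.99, P4 = 1709.99
```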

The full results of all tests in the second demo are included in the following chart:

Unlike the first demo, this was 100% CPU-driven. I had tried to include one additional cross join, but the demo then took hours per session instead of minutes. For a future test I’ll try to come up with a few additional scenarios that push a more realistic OLTP workload; one that is higher CPU, and one that is more I/O bound, and then a decent blend of the two.

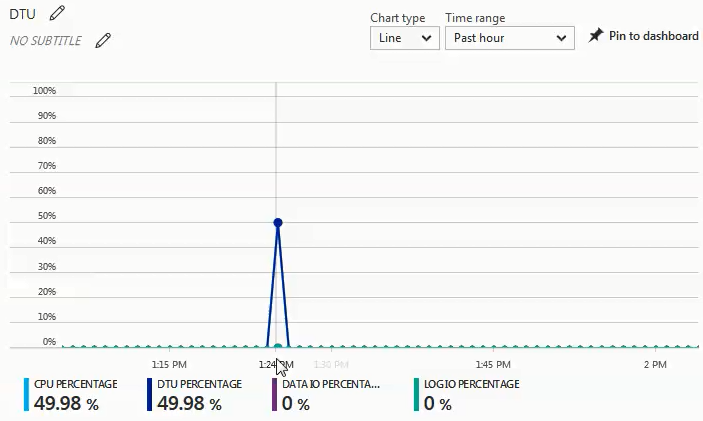

You can see from the graph below that, on this run against an S4 database, CPU spiked at nearly 50%, while DTU percentage matched exactly:

From the two different workloads that I tested, it is very evident that if you have any significant I/O workload, you will need the Premium tier, but if your workload is mostly CPU-bound without any significant I/O needs, the higher Standard tiers can provide you substantial savings over the Premium tier.

If you are considering a migration to Azure SQL Database, the DTU calculator is a great place to get an initial idea for sizing; however, at the time of writing, the DTU calculator doesn’t take the expanded Standard tier into consideration. What is great about the DTU calculator is that it breaks out CPU, IOPS, and log utilization to let you know which factor is driving the DTU level recommendation. For example, I ran the last demo on a 4 vCPU, 4GB virtual machine, and the DTU calculator recommended a P2. When I chose to ‘view more details,’ I got the following messages.

Service Tier/Performance Level for CPU – Based solely on CPU utilization, we recommend you migrate your SQL Server workload to Premium – P2. This Service Tier/Performance Level should cover approximately 100.00 % of your CPU utilization.

Service Tier/Performance Level for Iops – Based solely on Iops utilization, we recommend you migrate your SQL Server workload to Basic. This Service Tier/Performance Level should cover approximately 89.92 % of your Iops utilization.

NOTE: There is approximately 10.08 % of your workload that falls into a higher Service Tier/Performance Level. After migrating your database to Azure, you should evaluate your database's performance using the guidance mentioned in the information section above.

Service Tier/Performance Level for Log – Based solely on Log utilization, we recommend you migrate your SQL Server workload to Basic. This Service Tier/Performance Level should cover approximately 100.00 % of your Log utilization.

Since I know this workload is heavily CPU-bound, if I can’t tune the workload to decrease the CPU requirement, I have up to 3,000 DTUs available in Standard tier. Rather than spend $930 per month for a P2 with 250 DTUs, an S4 with 200 DTUs at $150 per month (or an S6 with 400 DTUs at $300.02 per month) would be a much more economical option.

In conclusion, there are tools available to help you determine a good starting point for sizing your Azure SQL Database migrations, but the absolute best method is to test your workload. Migrating a copy of your production database, capturing a production workload, and replaying that workload against the Azure SQL Database will give you a much better understanding of what DTU size you truly need.

Thanks for documenting the tests for us Tim. The CPU tests showing comparable performance (in this particular scenario) between the S4 and P11 is an eye opener.