On July 11, 2017, Intel officially launched their new 14nm Intel Xeon Scalable Processor family (Skylake-SP) of server processors. This family replaces the previous generation of 14nm Intel Xeon E7 v4 and 14nm Intel Xeon E5 v4 (Broadwell-EX and Broadwell-EP) processors.

Branding and Pricing

Intel calls this overall family a new “converged platform” that is segmented into four distinct product lines called Intel Xeon Platinum, Intel Xeon Gold, Intel Xeon Silver, and Intel Xeon Bronze. For SQL Server usage, only the Platinum and Gold lines make sense if you are concerned about getting the best performance for each one of your SQL Server processor core licenses.

Unlike the previous generation Xeon processors, the new Xeon Platinum processors have up to 28 physical cores and can all be used in any socket count server, whether it is a two-socket, four-socket, or eight-socket machine. The Xeon Gold processors have up to 22 physical cores, and can be used in two-socket or four-socket machines.

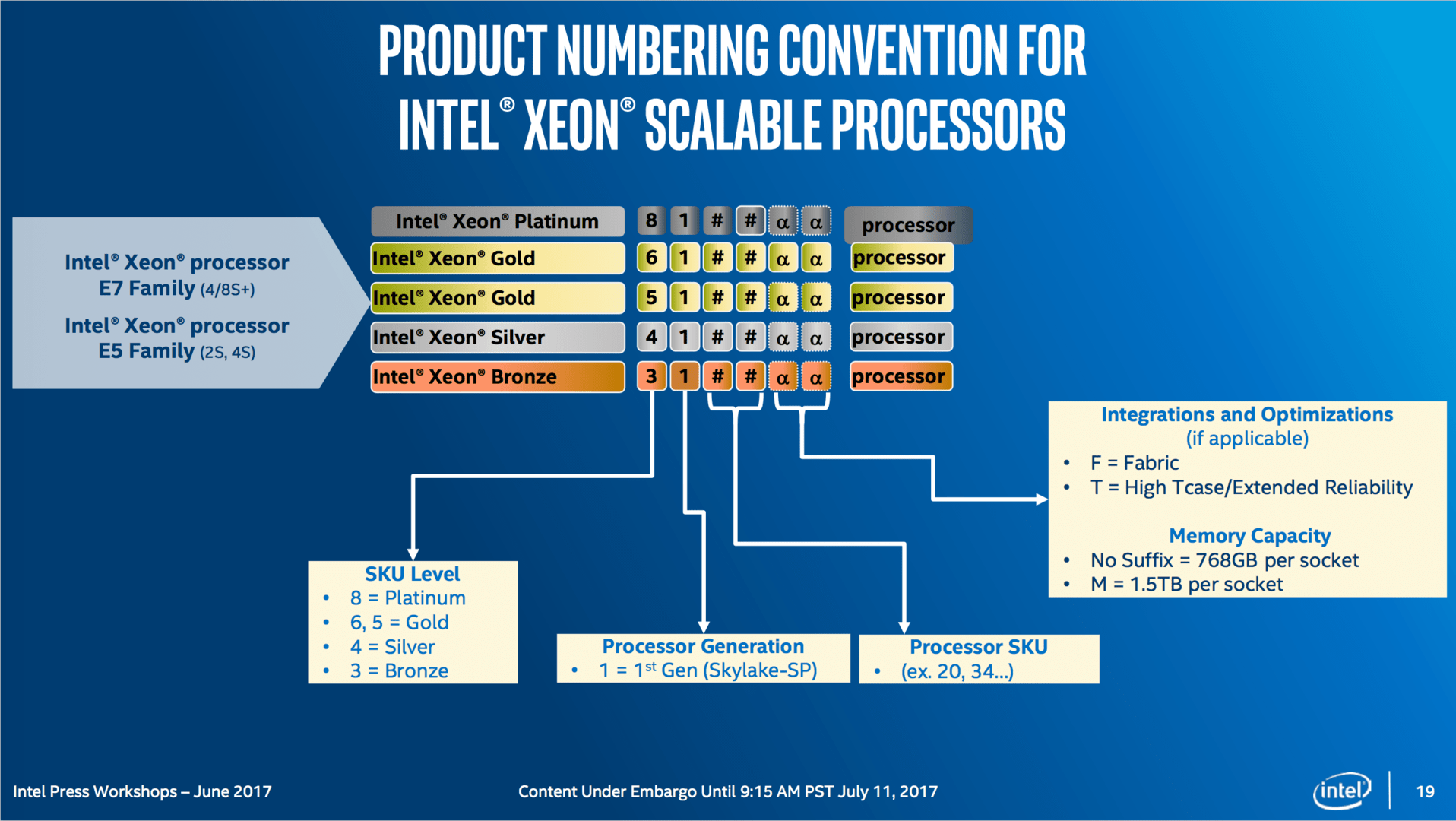

Intel is using a new product branding and numbering convention that is detailed in Figure 1. Unlike the new AMD EPYC processors, this lineup involves a lot of marketing and profitability-driven product segmentation, which makes it harder to understand and harder to pick the right processor for your workload.

Figure 1: Intel Xeon Scalable Processor Product Numbering Convention

One prime example of this harmful product segmentation is the “M” SKU processors (processor models with an M suffix), which support 1.5TB of memory per socket as opposed to 768GB of memory per socket for the non-“M” SKU models. Intel charges about a $3,000.00 premium (per processor) for that extra memory support, which is a pretty large increase, especially for the lower cost processors. The eight-core Intel Xeon Gold 6134 processor is $2,214.00 each, while the eight-core Intel Xeon Gold 6134M processor is $5,217.00 each. All of the other specifications (and performance) are identical for those two processors. For SQL Server usage, it may make perfect sense to pay that premium to get twice the memory capacity per socket (especially given your SQL Server license costs and overall memory costs), but I don’t like the fact that Intel is doing some price gouging here.
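To put that premium in perspective, here is a minimal Python sketch that works through the arithmetic using only the prices and memory limits quoted above. The function name `m_sku_premium` is purely illustrative.

```python
# List prices quoted in the article (USD per processor).
PRICE_6134 = 2214.00
PRICE_6134M = 5217.00

# Maximum memory per socket quoted in the article (GB).
MEM_STANDARD_GB = 768
MEM_M_SKU_GB = 1536  # 1.5TB

def m_sku_premium(base_price, m_price):
    """Return (absolute premium, premium as a percentage of the base price)."""
    premium = m_price - base_price
    return premium, 100.0 * premium / base_price

premium, premium_pct = m_sku_premium(PRICE_6134, PRICE_6134M)
extra_gb = MEM_M_SKU_GB - MEM_STANDARD_GB

print(f"Premium: ${premium:,.2f} ({premium_pct:.1f}% over the base model)")
print(f"Premium per extra GB of addressable memory: ${premium / extra_gb:.2f}")
```

For this pair of processors the "M" suffix more than doubles the price, before you have bought any of the additional memory itself.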

Architecture Changes

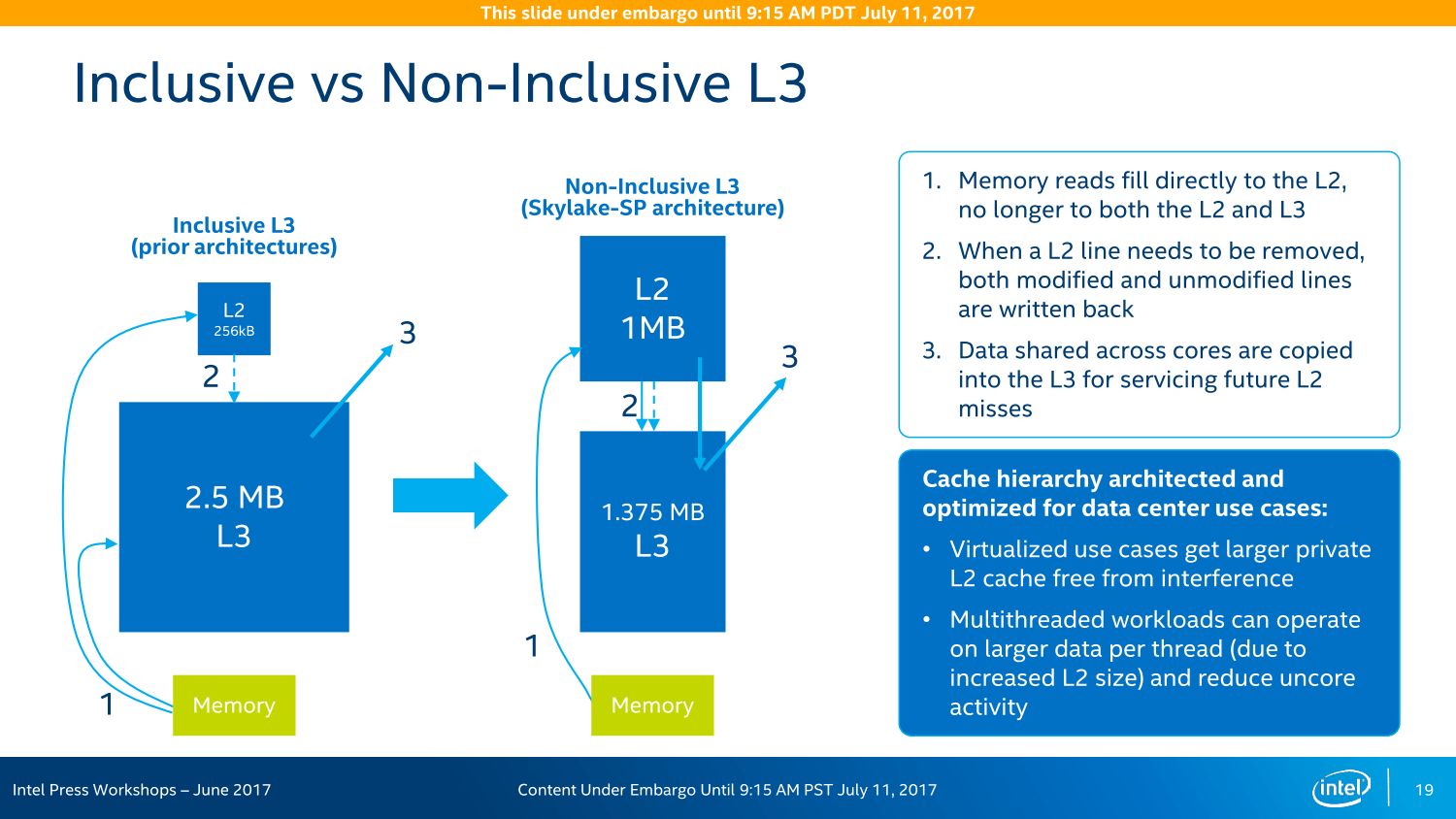

Skylake-SP has a different cache architecture, moving from the shared-distributed model used in Broadwell-EP/EX to a private-local model. How this change will affect SQL Server workloads remains to be seen.

In Broadwell-EP/EX, each physical core had a 256KB private L2 cache, while all of the cores shared a larger L3 cache that could be as large as 60MB (typically 2.5MB/core). All of the lines in the L2 cache for each core were also present in the inclusive, shared L3 cache.

In Skylake-SP, each physical core has a 1MB private L2 cache, while all of the cores share a larger L3 cache that can be as large as 38.5MB (typically 1.375MB/core). All of the lines in the L2 cache for each core may not be present in the non-inclusive, shared L3 cache.
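One rough way to compare the two designs is to estimate how much distinct data can be cached per core. The Python sketch below is a simplified model, not a description of how the hardware actually allocates lines: it assumes an inclusive L3 adds no unique capacity beyond itself (since every L2 line is duplicated there), and that a non-inclusive L3 can, in the best case, hold entirely different lines than the L2. The function name is hypothetical.

```python
def unique_cache_per_core_mb(l2_mb, l3_per_core_mb, l3_inclusive):
    """Upper bound on distinct cached data per core, in MB.

    Assumption: with an inclusive L3, every L2 line is also in L3, so the
    L2 adds no unique capacity. With a non-inclusive L3, the L2 and L3
    contents can differ, so in the best case the capacities add up.
    """
    if l3_inclusive:
        return l3_per_core_mb
    return l2_mb + l3_per_core_mb

# Per-core figures quoted in the article.
broadwell = unique_cache_per_core_mb(0.25, 2.5, l3_inclusive=True)
skylake = unique_cache_per_core_mb(1.0, 1.375, l3_inclusive=False)
l2_growth = 1.0 / 0.25

# Sanity check on the maximum Skylake-SP L3 size: 28 cores x 1.375MB.
max_skylake_l3_mb = 28 * 1.375

print(f"Broadwell-EP/EX: up to {broadwell} MB of distinct data per core")
print(f"Skylake-SP:      up to {skylake} MB of distinct data per core")
print(f"L2 size growth:  {l2_growth:.0f}x")
print(f"Max Skylake-SP L3: {max_skylake_l3_mb} MB")
```

Under these assumptions the total per-core capacity is roughly similar between the two generations, but in Skylake-SP far more of it sits in the private L2.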

A larger L2 cache increases the hit ratio from the L2 cache, resulting in lower effective memory latency and lowered demand on the L3 cache and the mesh interconnect. L2 cache is typically about 4X faster than L3 cache in Skylake-SP. Figure 2 details the new cache architecture changes in Skylake-SP.

Figure 2: Comparing Cache Architectures

Another new architectural improvement is Intel Ultra Path Interconnect (UPI), which replaces the previous generation Intel Quick Path Interconnect (QPI). Intel UPI is a coherent interconnect for systems containing multiple processors in a single shared address space. Intel Xeon processors that support Intel UPI provide either two or three Intel UPI links, giving each processor a high-speed, low-latency path to the other CPU sockets. Intel UPI has a maximum data rate of 10.4 GT/s (giga-transfers/second) compared to a maximum data rate of 9.6 GT/s for the Intel QPI used in the fastest Broadwell-EP/EX processors.
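The difference between those two data rates is easier to judge as a percentage. The Python sketch below computes it, and also converts each rate to an approximate per-link bandwidth. The bandwidth conversion assumes 2 bytes of data per transfer in each direction, which is not stated in this article, so treat those figures as an illustration rather than a specification.

```python
# Maximum data rates quoted in the article (giga-transfers per second).
QPI_GT_PER_S = 9.6
UPI_GT_PER_S = 10.4

# Assumption (not from the article): 2 bytes of data per transfer, per direction.
BYTES_PER_TRANSFER = 2

def link_bandwidth_gb_per_s(gt_per_s, bytes_per_transfer=BYTES_PER_TRANSFER):
    """Approximate one-direction bandwidth of a single link, in GB/s."""
    return gt_per_s * bytes_per_transfer

speedup_pct = 100.0 * (UPI_GT_PER_S / QPI_GT_PER_S - 1.0)

print(f"UPI data rate increase over QPI: {speedup_pct:.1f}%")
print(f"QPI per link, per direction: ~{link_bandwidth_gb_per_s(QPI_GT_PER_S):.1f} GB/s")
print(f"UPI per link, per direction: ~{link_bandwidth_gb_per_s(UPI_GT_PER_S):.1f} GB/s")
```

The raw data rate improvement is modest, so any larger gain in socket-to-socket throughput would have to come from having more links or from other changes in the interconnect.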

Another important new feature in Skylake-SP is AVX-512 support, which allows operations on 512-bit wide vectors. That is twice the vector width of AVX and AVX2, and four times the width of SSE, so code written to use it can process more data per instruction. These instruction sets are typically used for things like compression and encryption.
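To make the vector width difference concrete, this short Python sketch counts how many 32-bit and 64-bit elements fit in one register for each instruction set family. It only illustrates the width arithmetic; real-world speedups depend on clock speeds, memory bandwidth, and how well the code vectorizes.

```python
# Register widths in bits for each instruction set family.
VECTOR_WIDTH_BITS = {"SSE": 128, "AVX/AVX2": 256, "AVX-512": 512}

def lanes(width_bits, element_bits):
    """Number of elements of a given size that fit in one vector register."""
    return width_bits // element_bits

for name, width in VECTOR_WIDTH_BITS.items():
    print(f"{name:>8}: {lanes(width, 32):2d} x 32-bit or "
          f"{lanes(width, 64)} x 64-bit elements per register")
```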

AVX-512 also has much better power efficiency in terms of GFLOPS/Watt and GFLOPS/GHz compared to the older instruction sets. As a result, Intel does not have to reduce the clock speed of all of the cores when AVX code is running on any one of them, and each core can run at a different speed depending on what type of AVX code it is running.

SQL Server Hardware Support

In SQL Server 2016, Microsoft introduced code that uses the SSE and AVX instructions supported by the CPU to improve performance for row bucketing in Columnstore indexes and for bulk inserts. They also added hardware support for AES-NI encryption. I wrote about how this new software support for specific hardware mapped to different processor generations here. Hopefully, Microsoft will extend this type of code to cover AVX-512 support in SQL Server 2017.

Another new feature in Skylake-SP is Intel Speed Shift support, which allows the processor cores to change their p-states and c-states much more effectively (which lets the processor cores “throttle up” much more quickly). This feature builds on the Hardware Power Management (HWPM) introduced in Broadwell with a new mode that allows HWPM and the operating system to work together, called native mode. Native mode is supported on Linux kernel 4.10 and in Windows Server 2016.

According to some of the early benchmarks I have seen, these Skylake-SP processors have about a 10% IPC improvement over Broadwell-EP cores running at the same clock speed. Software that takes advantage of specific new features (such as AVX-512 and FMA) could see much higher performance increases.

Regarding SQL Server 2017 OLTP workloads, on June 27, 2017, Lenovo submitted a TPC-E benchmark result for a Lenovo ThinkSystem SR650 two-socket server, with two 28-core Intel Xeon Platinum 8180 processors. The raw score for this system was 6,598.36. Dividing that score by 56 physical cores, we get a score/core of 117.83 (which is a measure of single-threaded CPU performance).

For comparison’s sake, Lenovo also submitted a TPC-E benchmark result for a Lenovo System x3650 M5 two-socket server with two 22-core Intel Xeon E5-2699 v4 processors. The raw score for this system was 4,938.14. Dividing that score by 44 physical cores, we get a score/core of 112.23. The Skylake-SP system is about 5% faster for single-threaded performance here, but keep in mind that this is for a pre-release version of SQL Server 2017.
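The score/core comparison above can be reproduced with a few lines of Python. This is just a sketch of the arithmetic using the two TPC-E results quoted in this article; the helper name `score_per_core` is illustrative.

```python
def score_per_core(tpce_score, physical_cores):
    """TPC-E raw score divided by the number of physical cores in the system."""
    return tpce_score / physical_cores

# Two-socket Lenovo results quoted in the article.
skylake = score_per_core(6598.36, 56)    # 2 x 28-core Xeon Platinum 8180
broadwell = score_per_core(4938.14, 44)  # 2 x 22-core Xeon E5-2699 v4

gain_pct = 100.0 * (skylake / broadwell - 1.0)

print(f"Skylake-SP score/core:   {skylake:.2f}")
print(f"Broadwell-EP score/core: {broadwell:.2f}")
print(f"Per-core improvement:    {gain_pct:.1f}%")
```

The same function works for any other TPC-E submission, which makes it easy to compare systems with different core counts on a per-core-license basis.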

If you want an even more detailed view of the specific changes and improvements in the Intel Xeon Scalable Processor family compared to the previous generation Xeon processors, you can read about it here.

Sorry for that, but this is a bad result! You are comparing a new 2.5GHz CPU with an old 2.2GHz one: a 13% increase in frequency that ends up increasing performance by only 5%. It would be good to compare SQL 2016 against SQL 2016 to see whether the problem is the hardware or the optimization of the code.

I expect to see an increase of 15 to 20% to justify the investment.

Well, Glenn did mention toward the end of the article that the performance was based on a CTP version of the upcoming release, and given past history, tightening up performance across the board usually doesn't happen until the latter stages leading up to release. So don't be too critical of the performance delta you see now.