Guest Author : Derik Hammer (@SQLHammer)

Recently Aaron Bertrand blogged about Harmful, Pervasive SQL Server Performance Myths. As an extension of this blog series, I am going to disprove this common myth:

Reading the manual

Going straight to the source, I looked at the Books Online article on tables which includes table variables. Even though the article references benefits of using table variables, the fact that they are 100% in-memory is conspicuously missing.

A missing affirmative does not imply a negative, however. Since In-Memory OLTP tables were released, there is now a lot more documentation in BOL for in-memory processing. That is where I found this article on making temp table and table variables faster by using memory optimization.

The entire article revolves around how to make your temporary objects use the in-memory OLTP feature, and this is where I found the affirmative I was looking for.

Table variables are not in-memory constructs. In order to use the in-memory technology you have to explicitly define a TYPE which is memory optimized and use that TYPE to define your table variable.

Prove it

Documentation is one thing but seeing it with my own eyes is quite another. I know that temporary tables create objects in tempdb and will write data to disk. First I will show you what that looks like for the temporary tables and then I will use the same method to validate the hypothesis that table variables act the same way.

Log record analysis

This query will run a CHECKPOINT to give me a clean starting point and then show the number of log records and the transaction names which exist in the log.

USE tempdb;

GO

CHECKPOINT;

GO

SELECT COUNT(*) [Count]

FROM sys.fn_dblog (NULL, NULL);

SELECT [Transaction Name]

FROM sys.fn_dblog (NULL, NULL)

WHERE [Transaction Name] IS NOT NULL;

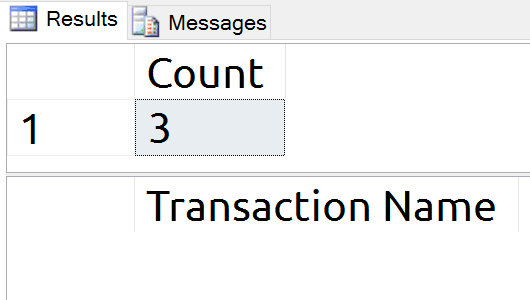

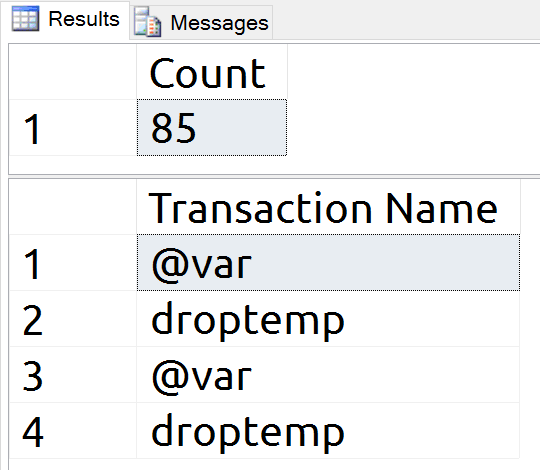

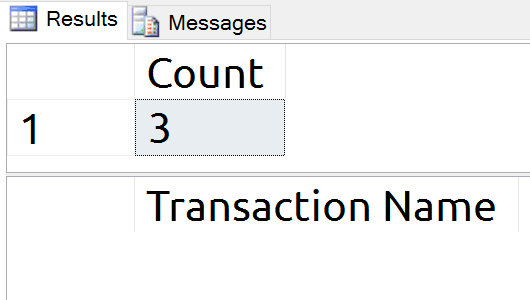

Running the T-SQL repeatedly resulted in a consistent three record count on SQL Server 2016 SP1.

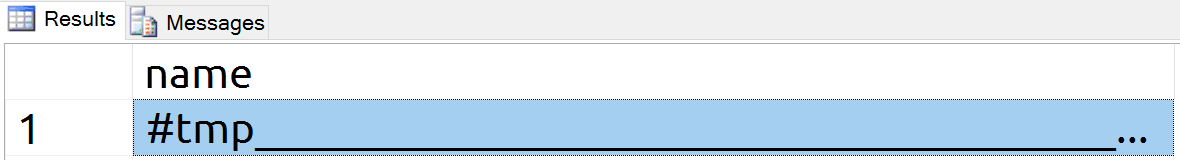

This creates a temporary table and displays the object record, proving that this is a real object in tempdb.

USE tempdb;

GO

DROP TABLE IF EXISTS #tmp;

GO

CREATE TABLE #tmp (id int NULL);

SELECT name

FROM sys.objects o

WHERE is_ms_shipped = 0;

Now I will show the log records again. I will not re-run the CHECKPOINT command.

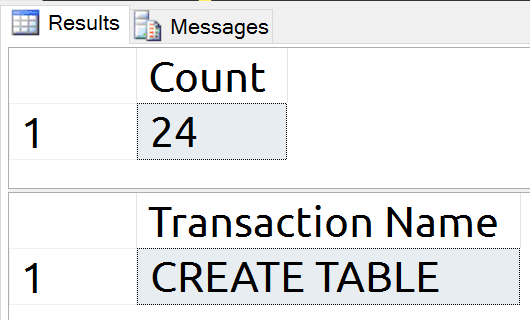

Twenty one log records were written, proving that these are on-disk writes, and our CREATE TABLE is clearly included in these log records.

To compare these results to table variables I will reset the experiment by running CHECKPOINT and then executing the below T-SQL, creating a table variable.

USE tempdb;

GO

DECLARE @var TABLE (id int NULL);

SELECT name

FROM sys.objects o

WHERE is_ms_shipped = 0;

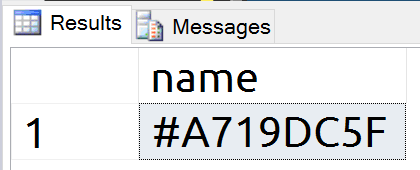

Once again we have a new object record. This time, however, the name is more random than with temporary tables.

There are eighty two new log records and transaction names proving that my variable is being written to the log, and therefore, to disk.

Actually in-memory

Now it is time for me to make the log records disappear.

I created an in-memory OLTP filegroup and then created a memory optimized table type.

USE Test;

GO

CREATE TYPE dbo.inMemoryTableType

AS TABLE

( id INT NULL INDEX ix1 )

WITH (MEMORY_OPTIMIZED = ON);

GO

I executed the CHECKPOINT again and then created the memory optimized table.

USE Test;

GO

DECLARE @var dbo.inMemoryTableType;

INSERT INTO @var (id) VALUES (1)

SELECT * from @var;

GO

After reviewing the log, I did not see any log activity. This method is in fact 100% in-memory.

Take away

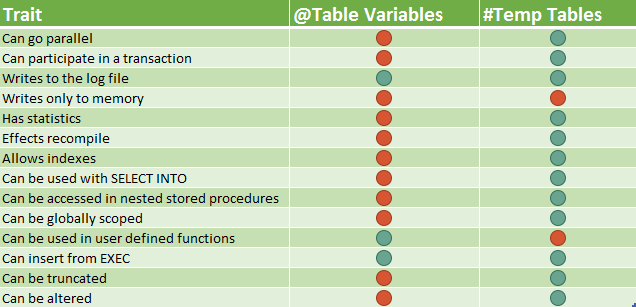

Table variables use tempdb similar to how temporary tables use tempdb. Table variables are not in-memory constructs but can become them if you use memory optimized user defined table types. Often I find temporary tables to be a much better choice than table variables. The main reason for this is because table variables do not have statistics and, depending upon SQL Server version and settings, the row estimates work out to be 1 row or 100 rows. In both cases these are guesses and become detrimental pieces of misinformation in your query optimization process.

Note that some of these feature differences may change over time – for example, in recent versions of SQL Server, you can create additional indexes on a table variable using inline index syntax. The following table has three indexes; the primary key (clustered by default), and two non-clustered indexes:

DECLARE @t TABLE

(

a int PRIMARY KEY,

b int,

INDEX x (b, a DESC),

INDEX y (b DESC, a)

);

There is a great answer on DBA Stack Exchange where Martin Smith exhaustively details the differences between table variables and #temp tables:

About the Author

Derik is a data professional and freshly-minted Microsoft Data Platform MVP focusing on SQL Server. His passion focuses around high availability, disaster recovery, continuous integration, and automated maintenance. His experience has spanned long-term database administration, consulting, and entrepreneurial ventures working in the financial and healthcare industries. He is currently a Senior Database Administrator in charge of the Database Operations team at Subway Franchise World Headquarters. When he is not on the clock, or blogging at SQLHammer.com, Derik devotes his time to the #sqlfamily as the chapter leader for the FairfieldPASS SQL Server user group in Stamford, CT.

Derik is a data professional and freshly-minted Microsoft Data Platform MVP focusing on SQL Server. His passion focuses around high availability, disaster recovery, continuous integration, and automated maintenance. His experience has spanned long-term database administration, consulting, and entrepreneurial ventures working in the financial and healthcare industries. He is currently a Senior Database Administrator in charge of the Database Operations team at Subway Franchise World Headquarters. When he is not on the clock, or blogging at SQLHammer.com, Derik devotes his time to the #sqlfamily as the chapter leader for the FairfieldPASS SQL Server user group in Stamford, CT.

Excellent! I'll have to give this a try – very intriguing to me that the temp table resulted in 21 log records while the table variable resulted in 82 log records. Without ever looking, I'd expect global temp table creation to require more log records than local temp table, and local temp table to require more than table variable…

You can have constraints on a table variable and contraints is upheld with indexes in SQL Server.

DECLARE @Sample TABLE

(

RowID INT PRIMARY KEY CLUSTERED,

Number INT UNIQUE NOT NULL

);

INSERT @Sample VALUES(1, 1);

INSERT @Sample VALUES(1, 2);

GO

DECLARE @Sample TABLE

(

RowID INT PRIMARY KEY CLUSTERED,

Number INT UNIQUE NOT NULL

);

INSERT @Sample VALUES(1, 1);

INSERT @Sample VALUES(2, 1);

GO

Also, look at the execution plan for this code

DECLARE @Sample TABLE

(

RowID INT PRIMARY KEY CLUSTERED,

Number INT UNIQUE NOT NULL

);

INSERT @Sample VALUES(1, 1);

select * from @Sample where rowid = 1;

select * from @Sample where number = 1;

when you use that in a batch (procedure) sql server still think that your table has only one record…

in order to bypass that, there are several "tricks". none of them are really totally functional.

I needed to use a DBCC TRACEON(2453) command in order to make sql take the real number in the table into account. The problem with that command is that the user issuing it needs high privilege…

I am working with several million rows that needs to be filtered by a join to the parameter table. Without the DBCC command and so with Sql thinking 1 row is in the table, the process needs several minutes to complete. With the DBCC command, 2 seconds are needed…

Derik – check out Q4 in this KB: https://support.microsoft.com/en-us/kb/305977

This feature is only available from SQL2016 onwards.

https://docs.microsoft.com/en-us/sql/relational-databases/in-memory-oltp/faster-temp-table-and-table-variable-by-using-memory-optimization

That answer is very true, however, there are log records which go to disk in all cases and in some cases the working set is flushed to disk.

Great Post. Thank you.