Between my travels, presentations, and Q & A moderation, I talk to a lot of people about a wide variety of SQL Server performance issues. Recently, I've had a few interactions where people believe things that are either altogether incorrect, or are only correct in a very narrow set of use cases. Yet their adamance that these things are universally true is unsettling.

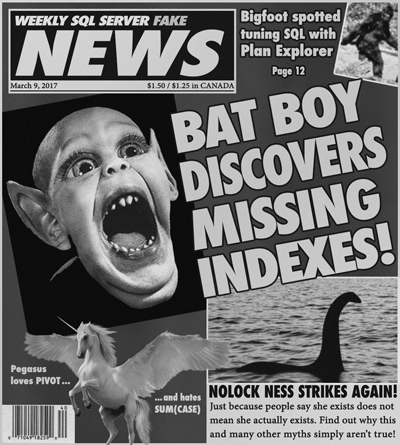

So, I thought I would start a new series to help quell some of these myths. Not to point at people and prove that they're wrong, but to stop the spread. When they make these blanket statements in their workplace, or on twitter, or in forums, if they go unchecked, they can "teach" impressionable or less experienced users.

Note that I don't intend to prove that these things are never true, because some can certainly be true in isolated or contrived scenarios. My aim is simply to demonstrate at least one case where it is not true; hopefully, this can start to change these stubborn mindsets.

Here are some of the "facts" I've been told recently, in no particular order:

- "A clustered index is always better than a non-clustered index"

- "Dynamic SQL made my query slow"

- "PIVOT is faster than SUM(CASE)"

- "NULLs always cause terrible performance issues"

- "Execution plans are useless except for missing indexes"

- "NOLOCK is okay because a lot of people use it"

- "Oversizing varchar/nvarchar columns is okay"

As I write each post, I'll update this page by linking the corresponding item in the above list.

Do you have any performance myths that are passed around as absolute fact, but you suspect (or maybe even know) that they aren't always true? Let me know in the comments below, on twitter, or at abertrand@sentryone.com.

* TRUNCATE TABLE is not logged (and can't be rolled back)

* A seek is always better than a scan

Great series, very much looking forward to reading more!!

One that I often come across is that "scans are bad" and that's the end of it. Yes, in most situations a scan operator might indicate that there's potential for some tuning, I'm happy with that, but at the same time there are times when scans are ideal; small tables, low selectivity etc (that's why the optimiser chooses them).

Having seen many of these discussions unfold on dba.se recently, I'm looking forward to the drubbing.

Add to myths: Joining tables hurts performance (argument for denormalization).

A few that I come across:-

A table scan always reads the whole table.

Key lookups are always bad.

Partitioning tables will increase performance.

And, bizarrely, read uncommitted isolation level is better than NOLOCK (no idea who dreamt that one up….)

Eagerly waiting your response on these points..

Table variables are more efficient than temp tables.

– Cursors are always "bad" decision.

– The primary key must be clustered index.

– Any data colmn independent from the data type will not request space as far as we don't store values, i.e. null

Views should NEVER be used they kill ALL queries performance

Query optimizer will arrive at same plan for query regardless of specified join order.

Linked Servers are the fastes and best way for what ever

Heap tables are always bad.

Oh god… This *100.

My favorite howler, told to me by a developer with a query that ran for hours.

The order of your indexed columns should always follow the order of the columns in the WHERE clause. This was on an accounting detail table with 1 billion rows and growing.

In SQL Server, the INFORMATION_SCHEMA views are better to use than the sys catalog views because they require fewer joins to get the metadata you want.

A page life expectancy of 300 sec is fine, no need to worry at all with that 500GB maxmem instance.

Temp tables are always better than table variables.

Yes the defragmentation argument and the confusion between rebuilding and reorg snd thr staristics. Also the amazing use of frofiling and the dta ….someone please simplify this and make it less of a dark art…

At SQL Saturday in Chicago last weekend, I asked Adam Machanic which idea about query tuning people found most surprising. His answer: that IN and EXISTS are logically equivalent and that replacing one with the other does nothing to improve performance. "IT'S A MYTH!!!" he shouted.

I was surprised because I'd always believed EXISTS would execute quicker. Apparently not.

0_o Someone said that?!

I don't know how pervasive this is, but:

"It is understood that rewriting the query with temporary tables can lead to some slowdown due to additional expenses: creation of temporary tables. If the database will not be mistaken with the choice of the query plan, it will perform the old query faster than a new one. However, this slowdown will always be negligible. Typically the creation of a temporary table takes a few milliseconds. That is, the delay can not have a significant impact on system performance, and usually can be ignored."

http://stackoverflow.com/a/16770129/2333499

Upvote! I remember seeing this thrown about by people who should have known better going back to the days before they used this to advocate NoSQL solutions even.

Set MAXDOP to one in order to sort out your parallelism/CXPACKET problems. (alternatively just set it to one for no reason at all)

Use DISTINCT at the start of all your queries to sort out duplicate result problems.

Use NOLOCK on your queries to sort your performance[concurrency] problems.

Use OPTION(RECOMPILE) in all your stored procedures because parameter sniffing is bad.

Don't use transactions because they slow down your queries.

Don't use foreign keys because they slow down your queries as well.

I should create this specific index because missing indexes/dta suggested it.

My backups are not failing therefore my data is safe.

CTEs are great for performance

No way! Wow.

I have not tested this in SQL Server, but when I was working with Oracle I was running a query with IN on a large table with somebody watching and I was getting impatient. I'd heard about EXISTS and had time to find and example on the internet, make a few mistakes coding it and it still finished before the original query. Several minutes for IN, a couple of seconds for EXISTS. I was not allowed to look at plans but I expect it was a scan vs a seek using the index. I have become very fond of EXISTS ever since.

"col BETWEEN x and y" never uses indexes, you have to use "col >= x AND col <= y" instead.

SQL is powerful – you should do everything in the database.

This was used to justify doing all sorts of CAST, CONVERT and string formatting operations in stored procedures that properly belonged in the application layer.

An identity column should be used as the clustering key on (nearly) all tables. As a DBA, just let me say, ARGHHHHH.

Re read uncommitted is better than NOLOCK: In my opinion, yes and no. Technically there is no difference, true. What I tell my dev team is that when I see NOLOCK all over a stored procedure, I assume they are just copying what someone else did. It was probably a bad idea then and probably is now too. On the other hand, if I see SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED at the start of a stored procedure, I am inclined to think the developer actually understands the issue and made a conscious decision to use it. I regard it as a deviation from best practice and as such there should be an inline comment explaining why.

A favorite of mine is…

Table variables are faster than temp tables because the variable is "in memory".

Paul, funny, Derik Hammer recently published that one:

https://sqlperformance.com/2017/04/performance-myths/table-variables-in-memory

This is something I always felt was true but after seeing some of the comments here, I'm not sure any longer.

If I place entries in a where clause that entirely uses 'and' such that the most likely drop point is first, the query will be faster. Is this right?

No, except in a few forced cases, SQL Server will not necessarily process your WHERE clause in the order you wrote it – though it still might make sense to write it that way for maintainability purposes.

MAXDOP 1 = Enterprise-wide Standard (Not joking)

Recently did a lot of work to implement partitioning and eventually got a 10-20% performance boost. Then got a new SAN and performance went up by 50%. Leads me to think partitioning is not worth the effort.

We do use NOLOCK a lot but I think we know what we are doing :-)

Setting MAXDOP to 1 *did* solve a parallelism/CXPACKET problem (see comment from mrTea) but we know we have to go back into the code and fix it for real.

We used to be in the mode of adding indexes all the time and now we are getting some performance boost by pulling them back out. Hoping the pendulum stops swinging.

I guess the myth part is not about the execution speed but about being equivalent. One being quicker than the other… it depends.

The other one I hear frequently: CTE's are bad for performance.

Gail Shaw has good info comparing IN vs EXISTS and IN vs JOIN

"Exists is better for when comparisons are needed on two or more columns. For eg, this cannot be done easily with an IN"

http://sqlinthewild.co.za/index.php/2009/08/17/exists-vs-in/

"Cursors (FAST_FORWARD / STATIC) are always faster than do while loops."

Ha, LOL. I tell developers that because I find that their answer to most questions involves nested views with scalar UDFs in the the column list :)

You can determine what is most efficient by solely inspecting T-SQL, no matter what the costs may be.