Every once in a while, a conversation crops up where people are convinced that comments either do or don't have an impact on performance.

In general, I will say that, no, comments do not impact performance, but there is always room for an "it depends" disclaimer. Let's create a sample database and a table full of junk:

CREATE DATABASE CommentTesting;

GO

USE CommentTesting;

GO

SELECT TOP (1000) n = NEWID(), * INTO dbo.SampleTable

FROM sys.all_columns ORDER BY NEWID();

GO

CREATE UNIQUE CLUSTERED INDEX x ON dbo.SampleTable(n);

GO

Now, I want to create four stored procedures – one with 20 characters of comments, one with 2000, one with 20,000, and one with 200,000. And I want to do that again where the comments are embedded *within* a query statement within the procedure, as opposed to being independent (which will have an effect on the plan XML). Finally, I repeated the process adding OPTION (RECOMPILE) to the query.

DECLARE @comments nvarchar(max) = N'',

@basesql nvarchar(max),

@sql nvarchar(max);

SELECT TOP (5000) -- * 40 character strings

@comments += N'--' + RTRIM(NEWID()) + CHAR(13) + CHAR(10)

FROM sys.all_columns;

SET @basesql = N'CREATE PROCEDURE dbo.$name$

AS

BEGIN

SET NOCOUNT ON;

/* $comments1$ */

DECLARE @x int;

SELECT @x = COUNT(*) /* $comments2$ */ FROM dbo.SampleTable OPTION (RECOMPILE);

END';

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Small_Separate'), N'$comments1$', LEFT(@comments, 20));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Medium_Separate'), N'$comments1$', LEFT(@comments, 2000));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Large_Separate'), N'$comments1$', LEFT(@comments, 20000));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'ExtraLarge_Separate'), N'$comments1$', LEFT(@comments, 200000));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Small_Embedded'), N'$comments2$', LEFT(@comments, 20));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Medium_Embedded'), N'$comments2$', LEFT(@comments, 2000));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'Large_Embedded'), N'$comments2$', LEFT(@comments, 20000));

EXEC sys.sp_executesql @sql;

SET @sql = REPLACE(REPLACE(@basesql, N'$name$', N'ExtraLarge_Embedded'), N'$comments2$', LEFT(@comments, 200000));

EXEC sys.sp_executesql @sql;

Now, I needed to generate the code to run each procedure 100,000 times, measure the duration from sys.dm_exec_procedure_stats, and also check the size of the plan in cache.

DECLARE @hammer nvarchar(max) = N'';

SELECT @hammer += N'

DBCC FREEPROCCACHE;

DBCC DROPCLEANBUFFERS;

GO

EXEC dbo.' + [name] + N';

GO 100000

SELECT [size of ' + [name] + ' (b)] = DATALENGTH(definition)

FROM sys.sql_modules

WHERE [object_id] = ' + CONVERT(varchar(32),([object_id])) + N';

SELECT [size of ' + [name] + ' (b)] = size_in_bytes

FROM sys.dm_exec_cached_plans AS p

CROSS APPLY sys.dm_exec_sql_text(p.plan_handle) AS t

WHERE t.objectid = ' + CONVERT(varchar(32),([object_id])) + N';

SELECT N''' + [name] + N''',

avg_dur = total_elapsed_time*1.0/execution_count

FROM sys.dm_exec_procedure_stats

WHERE [object_id] = ' + CONVERT(varchar(32),([object_id])) + N';'

FROM sys.procedures

WHERE [name] LIKE N'%[_]Separate' OR [name] LIKE N'%[_]Embedded';

PRINT @hammer;

First, let's look at the size of the procedure bodies. No surprises here, just confirming that my construction code above generated the expected size of comments in each procedure:

| Procedure | Size (bytes) |

|---|---|

| Small_Separate / Small_Embedded | 378 |

| Medium_Separate / Medium_Embedded | 4,340 |

| Large_Separate / Large_Separate | 40,338 |

| ExtraLarge_Separate / ExtraLarge_Separate | 400,348 |

Next, how large were the plans in the cache?

| Procedure | Size (bytes) |

|---|---|

| Small_Separate / Small_Embedded | 40,360 |

| Medium_Separate / Medium_Embedded | 40,360 |

| Large_Separate / Large_Separate | 40,360 |

| ExtraLarge_Separate / ExtraLarge_Separate | 40,360 |

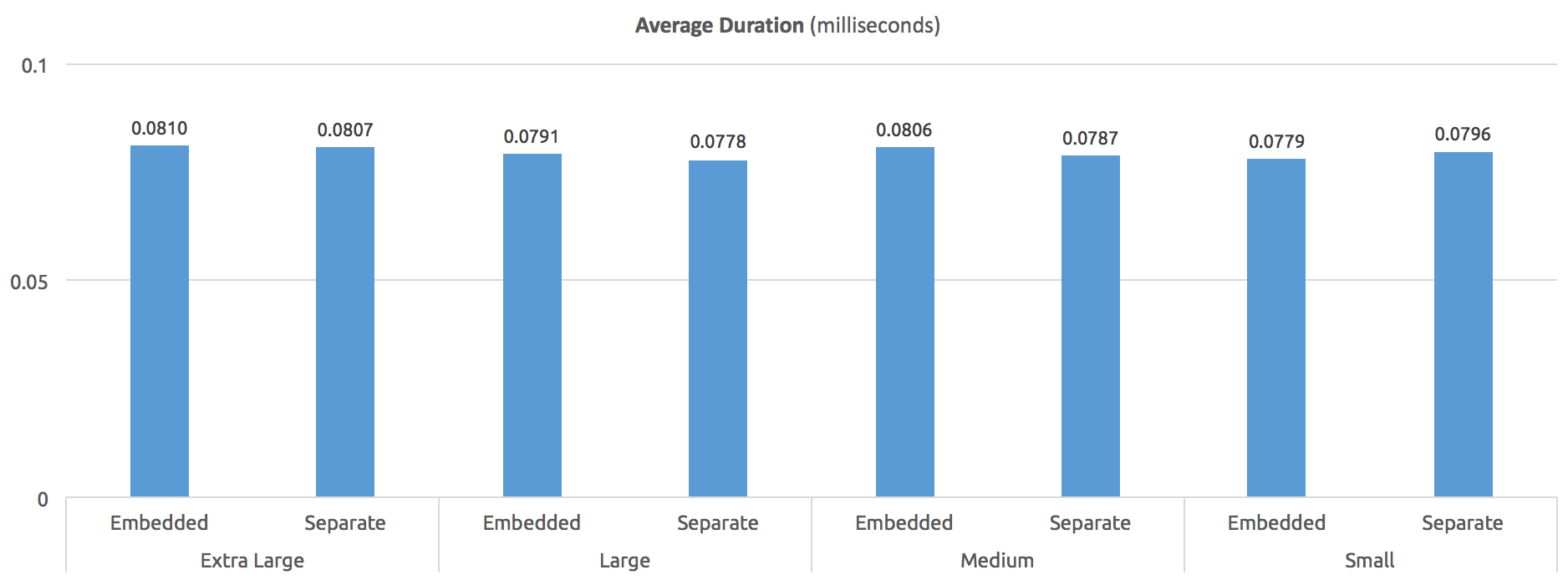

Finally, what was the performance like? Without OPTION (RECOMPILE), here is the average execution time, in milliseconds – pretty consistent across all procedures:

Average duration (milliseconds) – without OPTION (RECOMPILE)

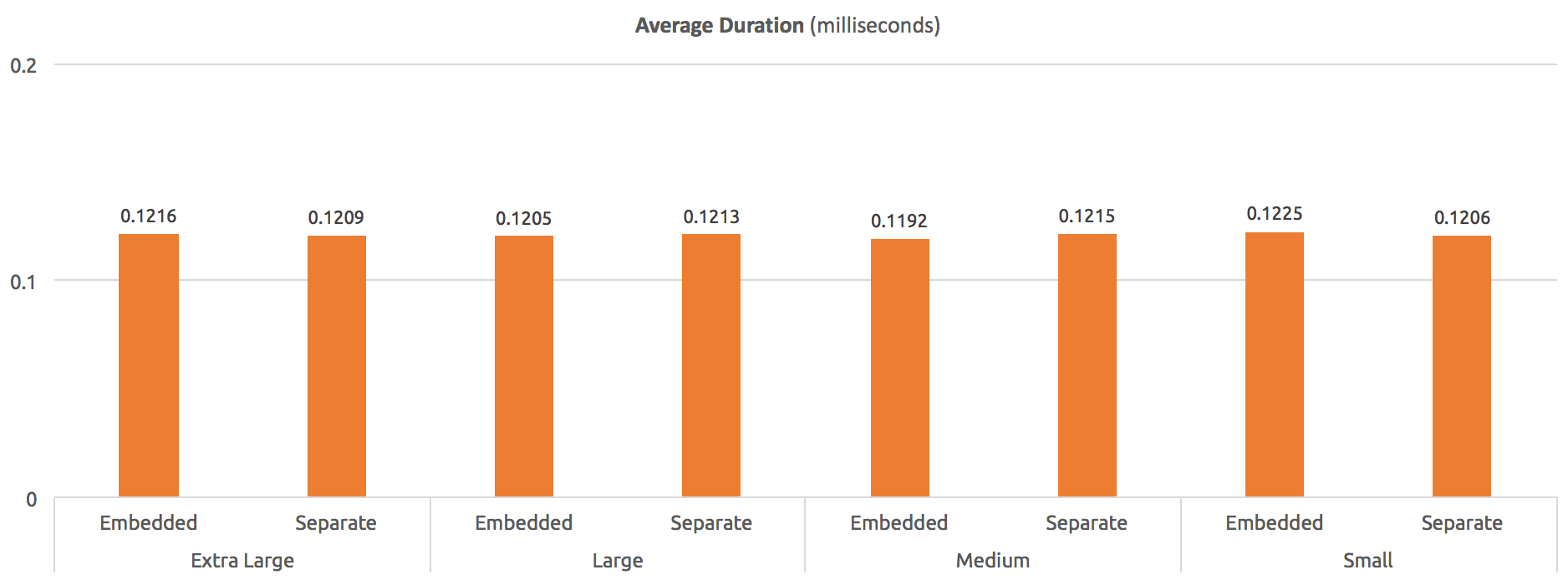

With statement-level OPTION (RECOMPILE), we can see about a 50% hit in average duration across the board compared to no recompilation, but still pretty even:

Average duration (milliseconds) – with OPTION (RECOMPILE)

In both cases, while the OPTION (RECOMPILE) version generally ran slower, there was virtually ZERO difference in runtime, regardless of comment size in the procedure body.

What about higher compilation costs?

Next, I wanted to see if these large comments would have a huge impact on compile costs, for example if the procedures were created WITH RECOMPILE. The construction code above was easy to change to account for this. But in this case, I couldn't rely on sys.dm_exec_procedure_stats, because this doesn't work for procedures WITH RECOMPILE. So my generation code for the test was a little different, since I'd have to track average duration manually:

DECLARE @hammer nvarchar(max) = N'';

SELECT @hammer += N'

DBCC FREEPROCCACHE;

DBCC DROPCLEANBUFFERS;

SELECT SYSDATETIME();

GO

EXEC dbo.' + [name] + N';

GO 100000

SELECT SYSDATETIME();';

PRINT @hammer;

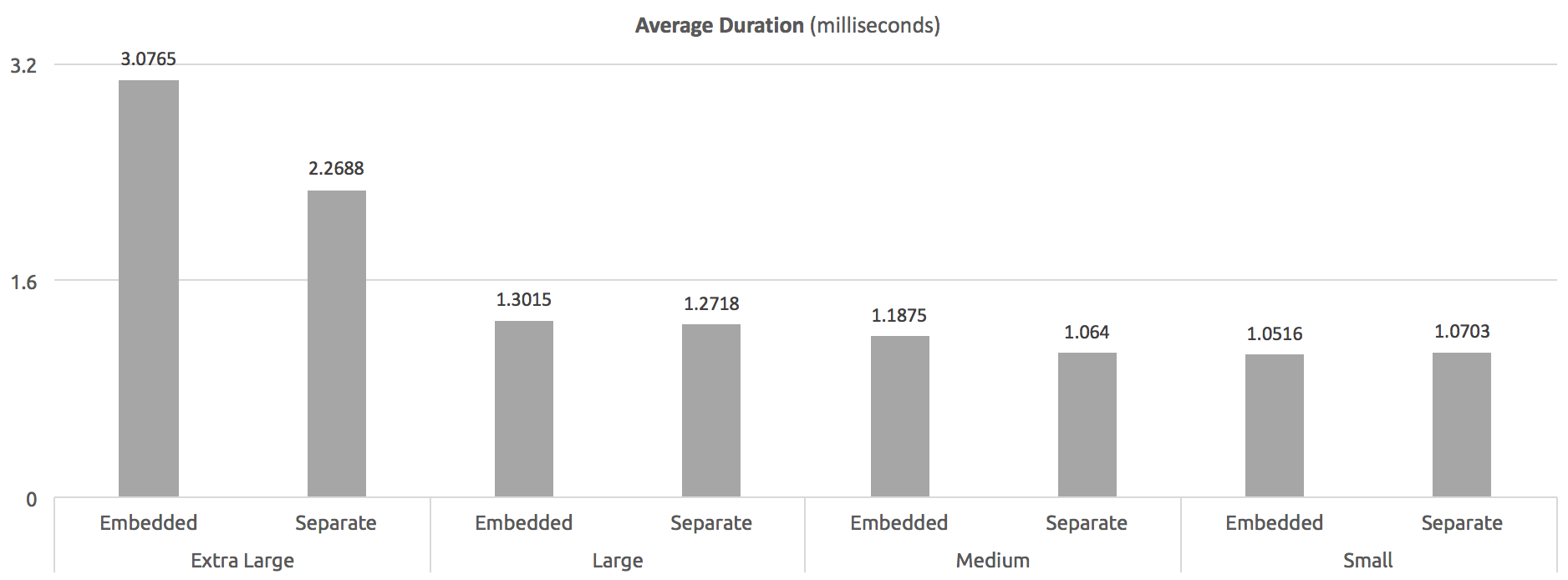

In this case, I couldn't check the size of the plans in cache, but I was able to determine average runtime of the procedures, and there was a difference based on comment size (or, perhaps, just procedure body size):

Average duration (milliseconds) – WITH RECOMPILE at procedure level

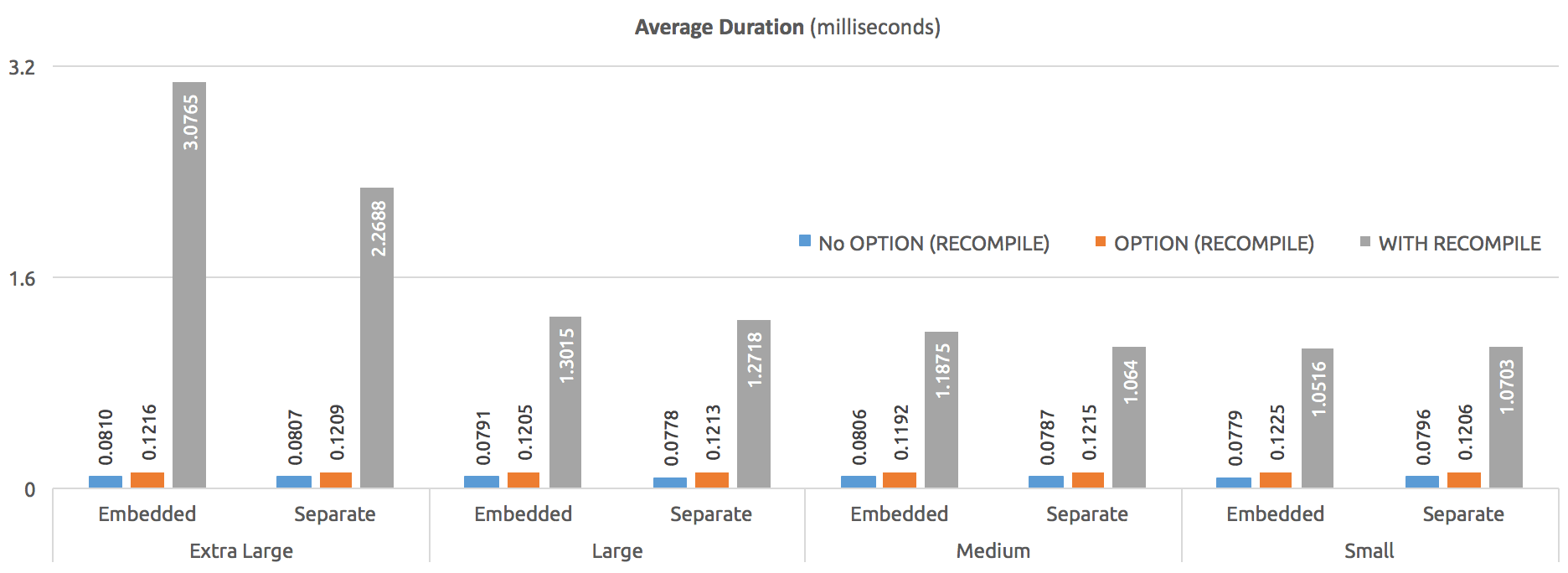

If we put them all together on a graph, it's clear how much more expensive the WITH RECOMPILE usage can be:

Average duration (milliseconds) – comparing all three methods

I will probably take a closer look at this at a later time to see exactly where that hockey stick comes into play – I envision testing in 10,000-character increments. For now, though, I'm pretty satisfied that I have answered the question.

Summary

Comments seem to be completely unrelated to actual, observable stored procedure performance, except in the case where the procedure is defined WITH RECOMPILE. Personally, I don't see this being used in the wild anymore, but YMMV. For the subtle differences between this option and statement-level OPTION (RECOMPILE), see Paul White's article, "Parameter Sniffing, Embedding, and the RECOMPILE Options."

Personally, I think comments can be extremely valuable for anyone who has to review, maintain, or troubleshoot your code. This includes future you. I highly recommend against worrying about the performance impact of a reasonable amount of comments, and instead focus on prioritizing the usefulness of context that the comments provide. As someone on Twitter said, there is a limit. If your comments amount to the abridged version of War and Peace, you might consider – at the risk of decoupling the code from its documentation – putting that documentation elsewhere, and reference the link in the procedure body comments.

To minimize the risk of decoupling, or the documentation and code otherwise becoming out of sync over time, you could create a second procedure, with the suffix _documentation or _comments, and putting the comments (or a commented version of the code) there. Maybe put it in a different schema to keep it out of the main sort lists. At least the documentation stays with the database wherever it goes, though it doesn't guarantee it will be maintained. It's unfortunate that a normal procedure can't be created WITH SCHEMABINDING, in which case you could explicitly tie the comment procedure to the source.

This is a cool fundamentals post! Thanks for sharing.

Wow, that's some perfectionist job:)

Great analyses!

Awesome post! Thanks.

Awesome.

I wonder if the impact occurs when the definition hits the 4k/8k character limit and has to be stored in BLOB?

This is a great analysis that explains it well. Thank you.

Never thought of decoupling comments as described above. Thanks!

Great article, as always!

I would never expect comments to effect the final query plan. But I would think it would have to effect the total pool space used by that proc. Thus, my concern with excessive comments, and I've seen some extreme examples, is that they indirectly cause more recompiles and other (minor?) performance issues because less pool buffer space is available for executable code, given too much extraneous commenting.

Thanks Scott! Remember that the plan cache stores XML versions of the plan itself and a hash of the query text. From everything I can tell, comments are stripped from the query text, which is why you won't see a huge difference… Actually that makes me think that a good follow-on test would be to create a whole bunch of different procedures and see total plan cache impact depending on whether the procedures have small or large comments. I focused here on performance and not plan cache usage.

Is there any performance overhead if you have highly attributed tables and procedures with a lot of extended property meta data ?

That's a *great* q. I'd like to know for sure one way or the other on that too.

Great job! Thank you.

Not germane to the point of this article, but in case of tangential interest, before deployment we process procedure / trigger / function / View code to strip out comments and unnecessary whitespace for a couple of reasons:

Code protection (when installed on Client servers). We also set WITH ENCRYPTION.

Deployment, across WAN, is improved (increasingly a NULL-feature as WAN tends to Fibre :)

Some storage (size) reduction in the Production Database.

We remove comments / spaces at the point that we move the code from DEV to QA, so all final testing is done on the comment-free version, and that then carries through to deployment to Production.

Great post indeed.

I can't add much to the profuse-comments/no-comments debate. Personally, I think what I enjoyed more of this article is your approach to proving a theory with hard evidence gathered in an automated fashion via dynamic sql and other cool tricks like using the REPLACE function to test variations.

I think you can extrapolate this approach from the fact you were investigating on comments and even use it to find the answer on those MANY occasions when we ask ourselves "what's the impact of doing XXX to the code?" (with XXX being anything from variations of T-SQL to adding/dropping indexes or query hints)