I wrote previously about the Actual Rows Read property. It tells you how many rows are actually read by an Index Seek, so that you can see how selective the Seek Predicate is, compared to the selectiveness of the Seek Predicate plus Residual Predicate combined.

But let’s have a look at what’s actually going on inside the Seek operator. Because I’m not convinced that “Actual Rows Read” is necessarily an accurate description of what’s going on.

I want to look at an example that queries addresses of particular address types for a customer, but the principle here would easily apply to many other situations if the shape of your query fits, such as looking up attributes in a Key-Value Pair table, for example.

SELECT AddressTypeID, FullAddress

FROM dbo.Addresses

WHERE CustomerID = 783

AND AddressTypeID IN (2,4,5);

I know I haven’t shown you anything about the metadata – I’ll come back to that in a minute. Let’s have a think about this query and what kind of index we’d like to have for it.

Firstly, we know the CustomerID exactly. An equality match like this generally makes it an excellent candidate for the first column in an index. If we had an index on this column we could dive straight into the addresses for that customer – so I’d say that’s a safe assumption.

The next thing to consider is that filter on AddressTypeID. Adding a second column to the keys of our index is perfectly reasonable, so let’s do that. Our index is now on (CustomerID, AddressTypeID). And let’s INCLUDE FullAddress too, so that we don’t need to do any lookups to complete the picture.

And I think we’re done. We should be able to safely assume that the ideal index for this query is:

CREATE INDEX ixIdealIndex

ON dbo.Addresses (CustomerID, AddressTypeID)

INCLUDE (FullAddress);

We could potentially declare it as a unique index – we’ll look at the impact of that later.

So let’s create a table (I’m using tempdb, because I don’t need it to persist beyond this blog post) and test this out.

CREATE TABLE dbo.Addresses (

AddressID INT IDENTITY(1,1) PRIMARY KEY,

CustomerID INT NOT NULL,

AddressTypeID INT NOT NULL,

FullAddress NVARCHAR(MAX) NOT NULL,

SomeOtherColumn DATE NULL

);

I’m not interested in foreign key constraints, or what other columns there might be. I’m only interested in my Ideal Index. So create that too, if you haven’t already.

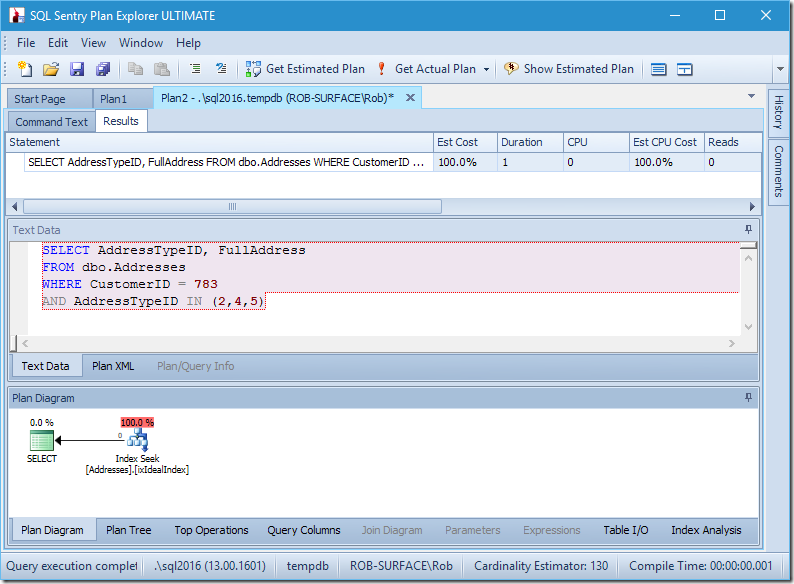

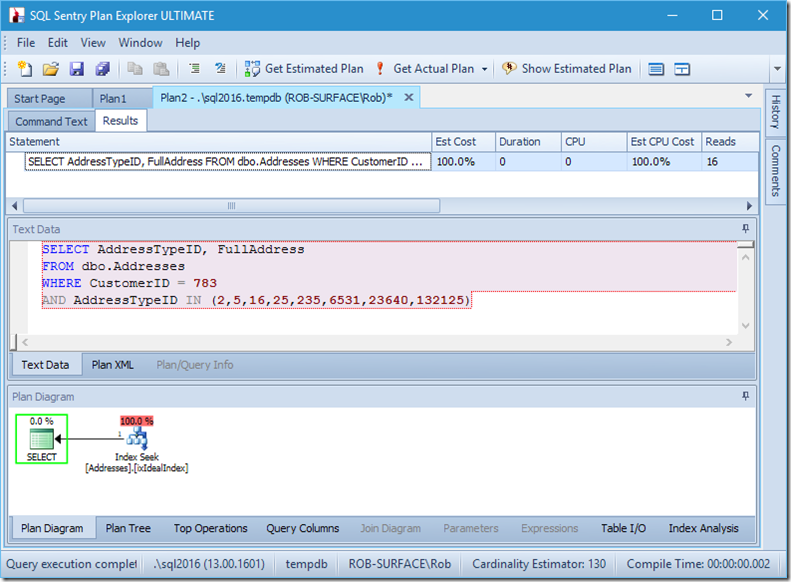

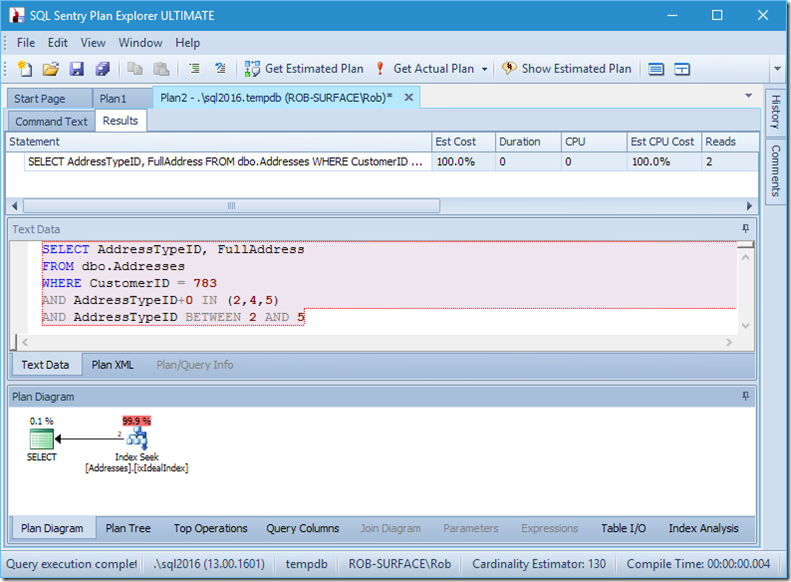

My plan seems pretty perfect.

I have an index seek, and that’s it.

Granted, there’s no data, so there’s no reads, no CPU, and it runs pretty quickly too. If only all queries could be tuned as well as this.

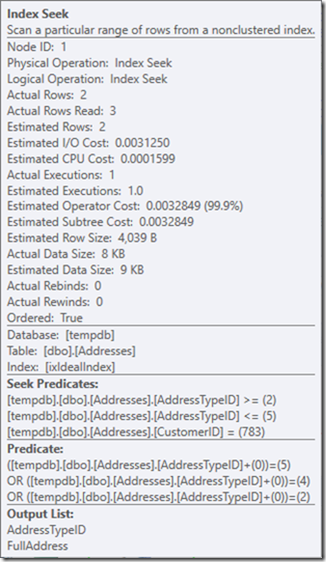

Let’s see what’s going on a little closer, by looking at the properties of the Seek.

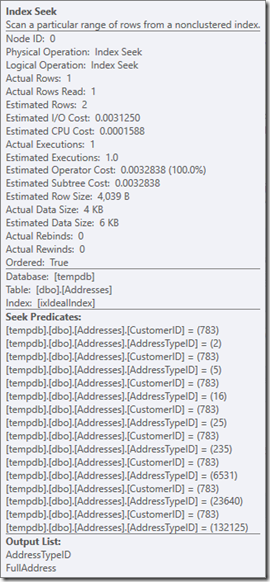

We can see the Seek Predicates. There are six. Three about the CustomerID, and three about the AddressTypeID. What we actually have here are three sets of seek predicates, indicating three seek operations within the single Seek operator. The first seek is looking for Customer 783 and AddressType 2. The second is looking for 783 and 4, and the last 783 and 5. Our Seek operator appeared once, but there were three seeks going on inside it.

We don’t even have data, but we can see how our index is going to be used.

Let’s put some dummy data in, so that we can look at some of the impact of this. I’m going to put addresses in for types 1 to 6. Every customer (over 2000, based on the size of master..spt_values) will have an address of type 1. Maybe that’s the Primary Address. I’m letting 80% have a type 2 address, 60% a type 3, and so on, up to 20% for type 5. Row 783 will get addresses of type 1, 2, 3, and 4, but not 5. I’d rather have gone with random values, but I want to make sure we’re on the same page for the examples.

WITH nums AS (

SELECT row_number() OVER (ORDER BY (SELECT 1)) AS num

FROM master..spt_values

)

INSERT dbo.Addresses (CustomerID, AddressTypeID, FullAddress)

SELECT num AS CustomerID, 1 AS AddressTypeID, N'Some sample text for the address' AS FullAddress

FROM nums

UNION ALL

SELECT num AS CustomerID, 2 AS AddressTypeID, N'Some sample text for the address' AS FullAddress

FROM nums

WHERE num % 10 < 8

UNION ALL

SELECT num AS CustomerID, 3 AS AddressTypeID, N'Some sample text for the address' AS FullAddress

FROM nums

WHERE num % 10 < 6

UNION ALL

SELECT num AS CustomerID, 4 AS AddressTypeID, N'Some sample text for the address' AS FullAddress

FROM nums

WHERE num % 10 < 4

UNION ALL

SELECT num AS CustomerID, 5 AS AddressTypeID, N'Some sample text for the address' AS FullAddress

FROM nums

WHERE num % 10 < 2

;

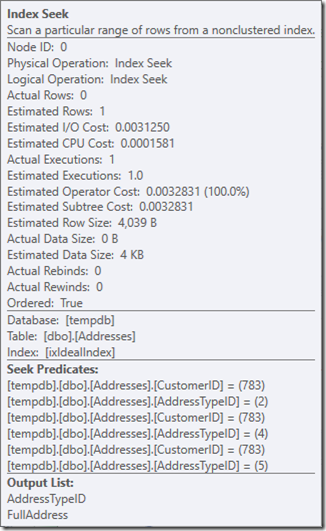

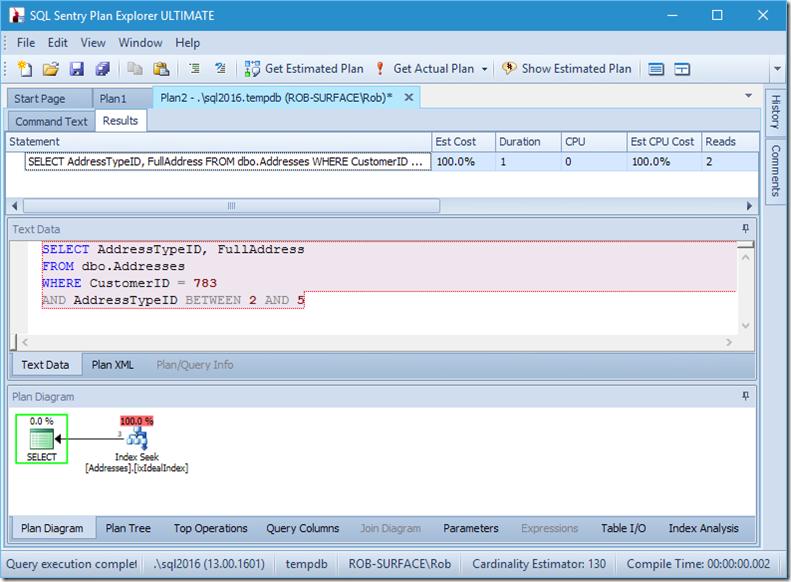

Now let’s look at our query with data. Two rows are coming out. It’s like before, but we now see the two rows coming out of the Seek operator, and we see six reads (in the top-right).

Six reads makes sense to me. We have a small table, and the index fits on just two levels. We’re doing three seeks (within our one operator), so the engine is reading the root page, finding out which page to go down to and reading that, and doing that three times.

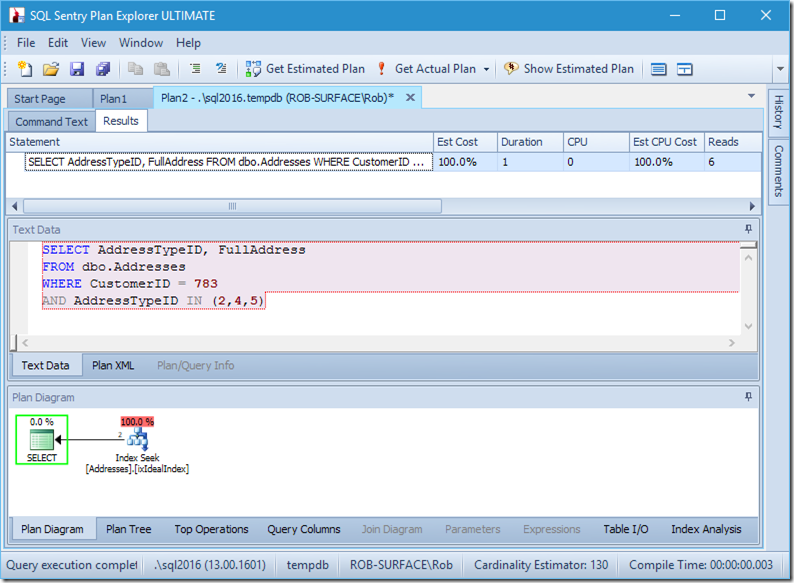

If we were to just look for two AddressTypeIDs, we’d see just 4 reads (and in this case, a single row being outputted). Excellent.

And if we were looking for 8 address types, then we’d see 16.

Yet each of these show that the Actual Rows Read matches the Actual Rows exactly. No inefficiency at all!

Let’s go back to our original query, looking for address types 2, 4, and 5, (which returns 2 rows) and think about what’s going on inside the seek.

I’m going to assume the Query Engine has already done the work to figure out that the Index Seek is the right operation, and that it has the page number of the index root handy.

At this point, it loads that page into memory, if it’s not already there. That’s the first read that gets counted in the execution of the seek. Then it locates the page number for the row it’s looking for, and reads that page in. That’s the second read.

But we often gloss over that ‘locates the page number’ bit.

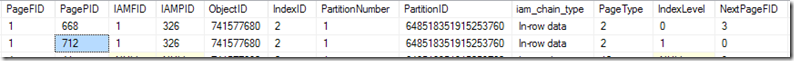

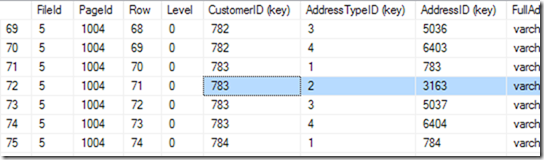

By using DBCC IND(2, N'dbo.Address', 2); (the first 2 is the database id because I’m using tempdb; the second 2 is the index id of ixIdealIndex), I can discover that the 712 in file 1 is the page with the highest IndexLevel. In the screenshot below, I can see that page 668 is IndexLevel 0, which the root page.

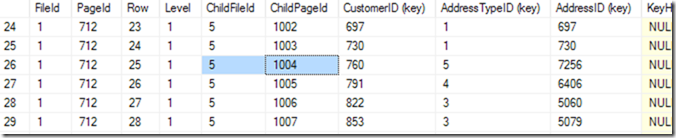

So now I can use DBCC TRACEON(3604); DBCC PAGE (2,1,712,3); to see the contents of page 712. On my machine, I get 84 rowscoming back, and I can tell that CustomerID 783 is going to be on page 1004 of file 5.

But I know this by scrolling through my list until I see the one I want. I started by scrolling down a bit, and then came back up, until I found the row I wanted. A computer calls this a binary search, and it’s a bit more precise than me. It’s looking for the row where the (CustomerID, AddressTypeID) combination is smaller that the one I’m looking for, with the next page being larger or the same as it. I say “the same” because there could be two that match, spread across two pages. It knows there are 84 rows (0 to 83) of data in that page (it reads that in the page header), so it’ll start by checking row 41. From there, it knows which half to search in, and (in this example), it will read row 20. A few more reads (making 6 or 7 in total)* and it knows that row 25 (please look at the column called ‘Row’ for this value, not the row number provided by SSMS) is too small, but row 26 is too big – so 25 is the answer!

*In a binary search, the searching can be marginally quicker if it gets lucky when it splits the block into two if there’s no middle slot, and depending on whether the middle slot can be eliminated or not.

Now it can go into page 1004 in file 5. Let’s use DBCC PAGE on that one.

This one gives me 94 rows. It does another binary search to find the start of the range that it’s looking for. It has to look through 6 or 7 rows to find that.

“Start of the range?” I can hear you ask. But we’re looking for address type 2 of customer 783.

Right, but we didn’t declare this index as unique. So there could be two. If it is unique, the seek can do a singleton search, and could stumble across it during the binary search, but in this case, it must complete the binary search, to find the first row in the range. In this case, it’s the row 71.

But we don’t stop here. Now we need to see if there really is a second one! So it reads row 72 as well, and finds that the CustomerID+AddressTypeiD pair is indeed too big, and its seek is done.

And this happens three times. The third time, it doesn’t find a row for customer 783 and address type 5, but it doesn’t know this ahead of time, and still needs to complete the seek.

So the rows actually being read across these three seeks (to find two rows to output) is a lot more than the number being returned. There’s about 7 at index level 1, and about 7 more at the leaf level just to find the start of the range. Then it reads the row we care about, and then the row after that. That sounds more like 16 to me, and it does this three times, making about 48 rows.

But Actual Rows Read is not about the number of rows actually read, but the number of rows returned by the Seek Predicate, that get tested against the Residual Predicate. And in that, it’s only the 2 rows that get found by the 3 seeks.

You might be thinking at this point that there’s a certain amount of ineffectiveness here. The second seek would’ve also read page 712, checked the same 6 or 7 rows there, and then read page 1004, and hunted through it... as would have the third seek.

So perhaps it would’ve been better to get this in a single seek, reading page 712 and page 1004 only once each. After all, if I were doing this with a paper-based system, I would’ve done a seek to find customer 783, and then scanned through all their address types. Because I know that a customer doesn’t tend to have many addresses. That’s an advantage I have over the database engine. The database engine knows through its statistics that a seek will be best, but it doesn’t know that the seek should only go down one level, when it can tell that it has what seems like the Ideal Index.

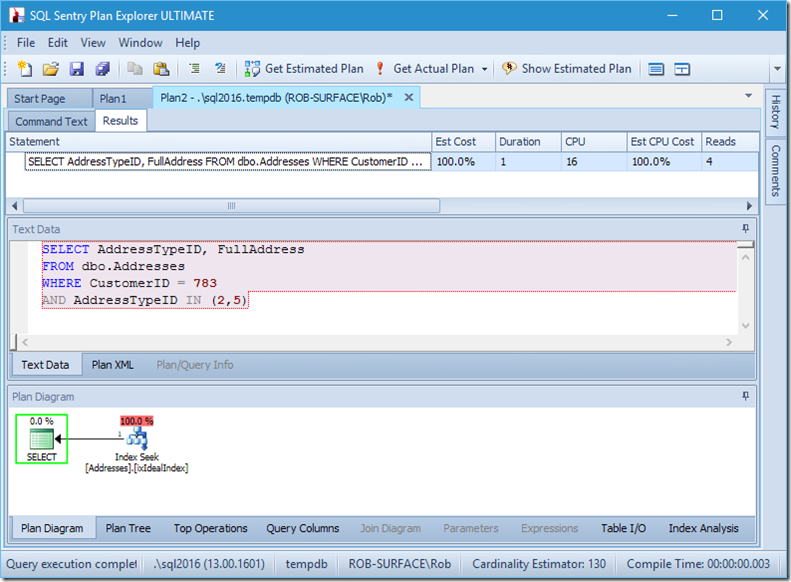

If I change my query to grab a range of address types, from 2 to 5, then I get almost the behaviour I want:

Look – the reads are down to 2, and I know which pages they are...

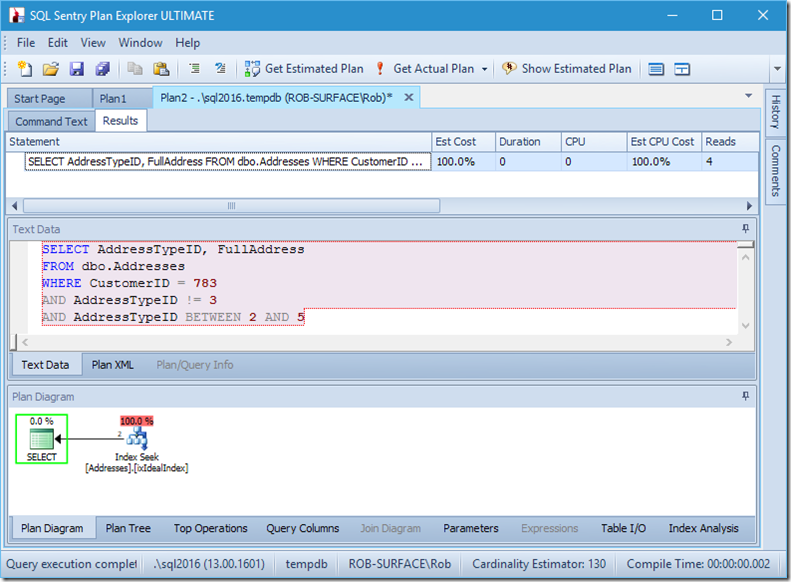

...but my results are wrong. Because I only want address types 2, 4, and 5, not 3. I need to tell it not to have 3, but I have to be careful how I do this. Look at the next two examples.

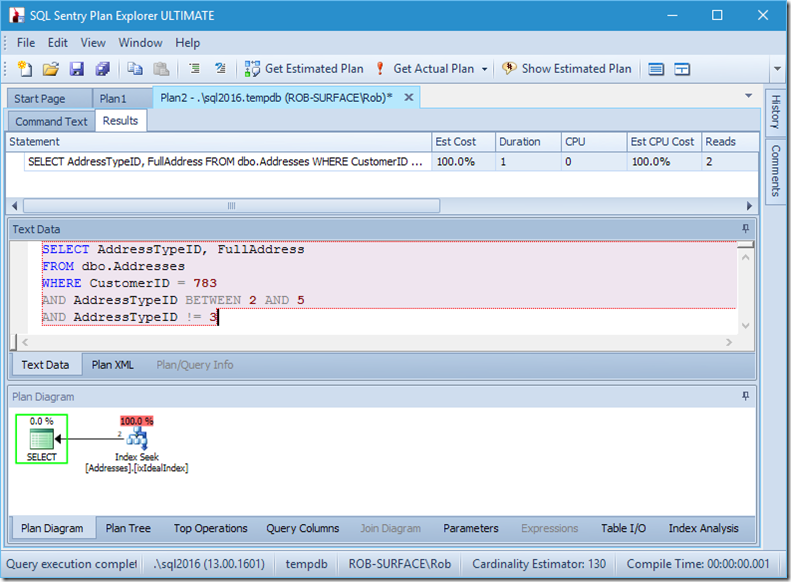

I can assure you that predicate order doesn’t matter, but here it clearly does. If we put the “not 3” first, it does two seeks (4 reads), but if we put the “not 3” second, it does a single seek (2 reads).

The problem is that AddressTypeID != 3 gets converted to (AddressTypeID > 3 OR AddressTypeID < 3), which is then seen as two very useful seek predicates.

And so my preference is to use a non-sargable predicate to tell it that I only want address types 2, 4, and 5. And I can do that by modifying AddressTypeID in some way, such as adding zero to it.

Now I have a nice and tight range scan within a single seek, and I’m still making sure that my query is returning only the rows that I want.

Oh, but that Actual Rows Read property? That’s now higher than the Actual Rows property, because the Seek Predicate finds address type 3, which the Residual Predicate rejects.

I’ve traded three perfect seeks for a single imperfect seek, which I’m fixing up with a residual predicate.

And for me, that’s sometimes a price worth paying, getting me a query plan that I’m much happier about. It’s not considerably cheaper, even though it has only a third of the reads (because there would only ever be two physical reads), but when I think about the work it’s doing, I’m much more comfortable with what I’m asking it to do this way.

Rob,

Did using Plan Explorer Ultimate help with this example? I have it too and know what it can do.

Chris

The new version of Plan Explorer can help, but I don't think Pro v Ultimate is necessarily a factor. I have Ultimate installed, but I would encourage people to use any version.

I was thinking about the index analysis function. Just wondered if this had helped you.

I have seen you present so I have an idea how detailed you look at queries.

Chris

Ah – I didn't use it in this case. There are some excellent features, but I'm still getting used to seeing how it goes in various situations. So far it's best when I'm wanting quick answers rather than explaining some deeper concept.

Since this table should always be queried by CustomerID or by CustomerID and AddressTypeID, I'd instead *cluster* the table on ( CustomerID, AddressTypeID ). I would think that would be a unique key. If so, no identity column is needed at all. If not (really?), you could add an identity as the PK (nonclustered, of course) just to guarantee a unique key for the row.

As it stands, you're repeating almost the entire table in another index, and the second you use one of the other columns that not in the nonclustered index, you've forced a full table scan (yikes!).

Hi Scott,

Thanks for your comment – you raise some valid points, but none that I was actually looking to address (ha!) in this post.

I didn't want to introduce the concept of clustered v non-clustered indexes in this post. The scenario I was looking at was whether multiple seeks within a single Seek operator could be turned into a single seek to reduce reads. That behaviour occurs whether the index is clustered or not. None of my examples used the clustered index, because my non-clustered index was completely covering.

You could certainly drop the identity column and use a clustered index if you like, repeat all the same examples, and see the exact same behaviour. I just didn't want to have people think the examples didn't apply to them because they couldn't make decisions about clustered indexes.

Your "yikes" about forcing a table scan when other columns get involved is certainly valid, but the 'tipping point' situation is not one that I was looking to discuss in this post. There are plenty of examples out there already around that.

Rob

The clustered index would be efficient for the seek but what if new address types are added for customers (say customer x adds a business address or whatever)

If this happened frequently it could cause page splits and fragmentation on inserts.

True, but rows are read vastly more times than they are inserted. Some minor fragmentation will occur on any table, so don't get overly stressed about a few page splits. Also, you can always reorganize the table if/when you need to.

I prefer clustered indexes as Scott's but set the fillfactor for them – depending on the table and how often I think it will have insert to old records – to e.g. 95 %.

By the way: if a customer adds a single new record in the application, pagesplits are negligible – the application will have usually much higher latency than the splitt costs. So if you are not inserting multiple records in a fast time (e.g. when running your invoice procedure that adds thousands records to some tables), I would not really worry about page splits and rely on Ola's maintenance solution to defrag the few to heavy fragmented indexes on the weekend.

It would be great if all database design issues could be fixed, but sometimes you have to just deal with it. Like when your company is bought by another and you have to now convert to "Some Accounting Program". If you think they're going to risk a corporate audit by approving a change request to modify a clustered index because any or all DBAs in the company claim "They're Doing It Wrong!", you are sadly mistaken.

So all I can do is find tricks like those discussed in this fine article to try to get reasonable query performance. And dream about what I would like to do to the clustered indexes, and to the misbegotten idiot ex-COBOL programmers that decided that the ideal data type for fiscal year is nvarchar(4).

In the Tab "statement" what's the number in the column "CPU"? seconds? threads? i could not find where that number came from.

That would be milliseconds, Oscar.