Note: This post was originally published only in our eBook, High Performance Techniques for SQL Server, Volume 2. You can find out about our eBooks here.

Summary: This article examines some surprising behaviour of INSTEAD OF triggers and reveals a serious cardinality estimation bug in SQL Server 2014.

Triggers and Row Versioning

Only DML AFTER triggers use row versioning (in SQL Server 2005 onward) to provide the inserted and deleted pseudo-tables inside a trigger procedure. This point is not clearly made in much of the official documentation. In most places, the documentation simply says that row-versioning is used to build the inserted and deleted tables in triggers without qualification (examples below):

Row Versioning Resource Usage

Understanding Row Versioning-Based Isolation Levels

Controlling Trigger Execution When Bulk Importing Data

Presumably, the original versions of these entries were written before INSTEAD OF triggers were added to the product, and never updated. Either that, or it is a simple (but repeated) oversight.

Anyway, the way row-versioning works with AFTER triggers is quite intuitive. These triggers fire after the modifications in question have been performed, so it's easy to see how maintaining versions of the modified rows enables the database engine to provide the inserted and deleted pseudo-tables. The deleted pseudo-table is constructed from versions of the affected rows before the modifications took place; the inserted pseudo-table is formed from the versions of the affected rows as at the time the trigger procedure started.

Instead Of Triggers

INSTEAD OF triggers are different because this type of DML trigger completely replaces the triggered action. The inserted and deleted pseudo-tables now represent changes that would have been made, had the triggering statement actually executed. Row-versioning cannot be used for these triggers because no modifications have occurred, by definition. So, if not using row versions, how does SQL Server do it?

The answer is that SQL Server modifies the execution plan for the triggering DML statement when an INSTEAD OF trigger exists. Rather than modifying the affected tables directly, the execution plan writes information about the changes to a hidden worktable. This worktable contains all the data needed to perform the original changes, the type of modification to perform on each row (delete or insert), as well as any information needed in the trigger for an OUTPUT clause.

Execution plan without a trigger

To see all this in action, we will first run a simple test without an INSTEAD OF trigger present:

CREATE TABLE Test

(

RowID integer NOT NULL,

Data integer NOT NULL,

CONSTRAINT PK_Test_RowID

PRIMARY KEY CLUSTERED (RowID)

);

GO

INSERT dbo.Test

(RowID, Data)

VALUES

(1, 100),

(2, 200),

(3, 300);

GO

DELETE dbo.Test;

GO

DROP TABLE dbo.Test;

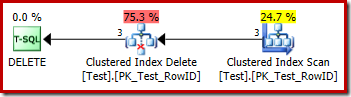

The execution plan for the delete is very straightforward:

Each row that qualifies is passed directly to a Clustered Index Delete operator, which deletes it. Easy.

Execution plan with an INSTEAD OF trigger

Now let's modify the test to include an INSTEAD OF DELETE trigger (one that just performs the same delete action for simplicity):

CREATE TABLE Test

(

RowID integer NOT NULL,

Data integer NOT NULL,

CONSTRAINT PK_Test_RowID

PRIMARY KEY CLUSTERED (RowID)

);

GO

INSERT dbo.Test

(RowID, Data)

VALUES

(1, 100),

(2, 200),

(3, 300);

GO

CREATE TRIGGER dbo_Test_IOD

ON dbo.Test

INSTEAD OF DELETE

AS

BEGIN

SET NOCOUNT ON;

DELETE FROM dbo.Test

WHERE EXISTS

(

SELECT * FROM Deleted

WHERE Deleted.RowID = dbo.Test.RowID

);

END;

GO

DELETE dbo.Test;

GO

DROP TABLE dbo.Test;

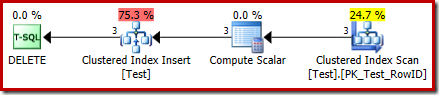

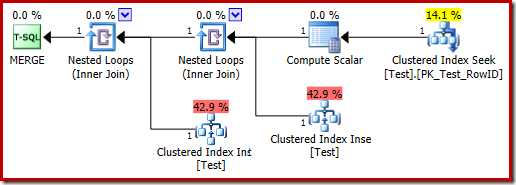

The execution plan for the DELETE is now quite different:

The Clustered Index Delete operator has been replaced by a Clustered Index Insert. This is the insert to the hidden worktable, which is renamed (in the public execution plan representation) to the name of the base table affected by the delete. The renaming occurs when the XML show plan is generated from the internal execution plan representation, so there is no documented way to see the hidden worktable.

As a result of this change, the plan therefore appears to perform an insert to the base table in order to delete rows from it. This is confusing, but it does at least disclose the presence of an INSTEAD OF trigger. Replacing the Insert operator with a Delete might be even more confusing. Perhaps the ideal would be a new graphical icon for an INSTEAD OF trigger worktable? Anyway, it is what it is.

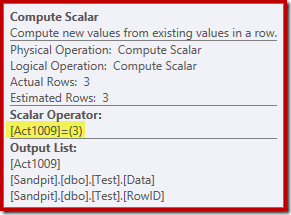

The new Compute Scalar operator defines the type of action performed on each row. This action code is an integer, with the following meanings:

- 3 = DELETE

- 4 = INSERT

- 259 = DELETE in a MERGE plan

- 260 = INSERT in a MERGE plan

For this query, the action is a constant 3, meaning every row is to be deleted:

Update Actions

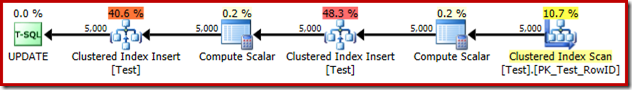

As an aside, an INSTEAD OF UPDATE execution plan replaces a single Update operator with two Clustered Index Inserts to the same hidden worktable – one for the inserted pseudo-table rows, and one for the deleted pseudo-table rows. An example execution plan:

A MERGE that performs an UPDATE also produces an execution plan with two inserts to the same base table for similar reasons:

The Trigger Execution Plan

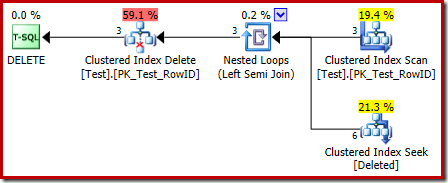

The execution plan for the trigger body also has some interesting features:

The first thing to notice is that the graphical icon used for the deleted table is not the same as the icon used in AFTER trigger plans:

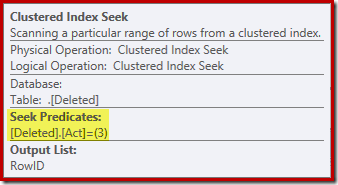

The representation in the INSTEAD OF trigger plan is a Clustered Index Seek. The underlying object is the same internal worktable we saw earlier, though here it is named deleted instead of being given the base table name, presumably for some sort of consistency with AFTER triggers.

The seek operation on the deleted table might not be what you were expecting (if you were expecting a seek on RowID):

This 'seek' returns all rows from the worktable that have an action code of 3 (delete), making it exactly equivalent to the Deleted Scan operator seen in AFTER trigger plans. The same internal worktable is used to hold rows for both inserted and deleted pseudo-tables in INSTEAD OF triggers. The equivalent of an Inserted Scan is a seek on action code 4 (which is possible in a delete trigger, but the result will always be empty). There are no indexes on the internal worktable aside from the non-unique clustered index on the action column alone. In addition, there are no statistics associated with this internal index.

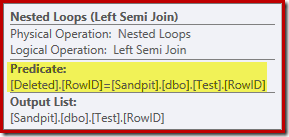

The analysis so far might leave you wondering where the join between the RowID columns is performed. This comparison occurs at the Nested Loops Left Semi Join operator as a residual predicate:

Now that we know the 'seek' is effectively a full scan of the deleted table, the execution plan chosen by the query optimizer seems pretty inefficient. The overall flow of the execution plan is that each row from the Test table is potentially compared with the entire set of deleted rows, which sounds a lot like a cartesian product.

The saving grace is that the join is a semi join, meaning the comparison process stops for a given Test row as soon as the first deleted row satisfies the residual predicate. Nevertheless, the strategy seems a curious one. Perhaps the execution plan would be better if the Test table contained more rows?

Trigger test with 1,000 rows

The following script can be used to test the trigger with a larger number of rows. We will start with 1,000:

CREATE TABLE Test

(

RowID integer NOT NULL,

Data integer NOT NULL,

CONSTRAINT PK_Test_RowID

PRIMARY KEY CLUSTERED (RowID)

);

GO

SET STATISTICS XML OFF;

SET NOCOUNT ON;

GO

DECLARE @i integer = 1;

WHILE @i <= 1000

BEGIN

INSERT dbo.Test (RowID, Data)

VALUES (@i, @i * 100);

SET @i += 1;

END;

GO

CREATE TRIGGER dbo_Test_IOD

ON dbo.Test

INSTEAD OF DELETE

AS

BEGIN

SET NOCOUNT ON;

DELETE FROM dbo.Test

WHERE EXISTS

(

SELECT * FROM Deleted

WHERE Deleted.RowID = dbo.Test.RowID

);

END;

GO

SET STATISTICS XML ON;

GO

DELETE dbo.Test;

GO

DROP TABLE dbo.Test;

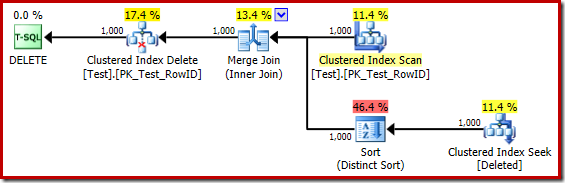

The execution plan for the trigger body is now:

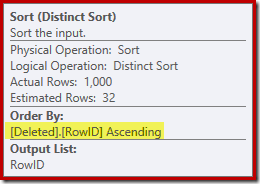

Mentally replacing the (misleading) Clustered Index Seek with a Deleted Scan, the plan looks generally pretty good. The optimizer has chosen a one-to-many Merge Join instead of a Nested Loops Semi Join, which seems reasonable. The Distinct Sort is a curious addition though:

This sort is performing two functions. First, it is providing the merge join with the sorted input it needs, which is fair enough because there is no index on the internal worktable to provide the necessary order. The second thing the sort is doing is to distinct on RowID. This might seem odd, because RowID is the primary key of the base table.

The issue is that rows in the deleted table are simply candidate rows that the original DELETE query identified. Unlike an AFTER trigger, these rows have not been checked for constraint or key violations yet, so the query processor has no guarantee they are in fact unique.

Generally, this is a very important point to bear in mind with INSTEAD OF triggers: there is no guarantee that the rows provided meet any of the constraints on the base table (including NOT NULL). This is not only important for the trigger author to remember; it also limits the simplifications and transformations the query optimizer can perform.

A second issue shown in the Sort properties above, but not highlighted, is that the output estimate is just 32 rows. The internal worktable has no statistics associated with it, so the optimizer guesses at the effect of the Distinct operation. We 'know' the RowID values are unique, but without any hard information to go on, the optimizer makes a poor guess. This issue will return to haunt us in the next test.

Trigger test with 5,000 rows

Now modify the test script to generate 5,000 rows:

CREATE TABLE Test

(

RowID integer NOT NULL,

Data integer NOT NULL,

CONSTRAINT PK_Test_RowID

PRIMARY KEY CLUSTERED (RowID)

);

GO

SET STATISTICS XML OFF;

SET NOCOUNT ON;

GO

DECLARE @i integer = 1;

WHILE @i <= 5000

BEGIN

INSERT dbo.Test (RowID, Data)

VALUES (@i, @i * 100);

SET @i += 1;

END;

GO

CREATE TRIGGER dbo_Test_IOD

ON dbo.Test

INSTEAD OF DELETE

AS

BEGIN

SET NOCOUNT ON;

DELETE FROM dbo.Test

WHERE EXISTS

(

SELECT * FROM Deleted

WHERE Deleted.RowID = dbo.Test.RowID

);

END;

GO

SET STATISTICS XML ON;

GO

DELETE dbo.Test;

GO

DROP TABLE dbo.Test;

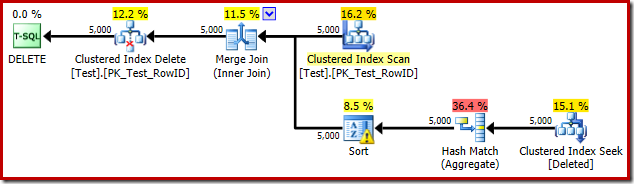

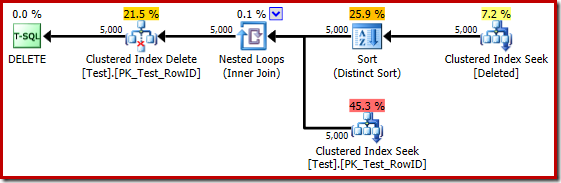

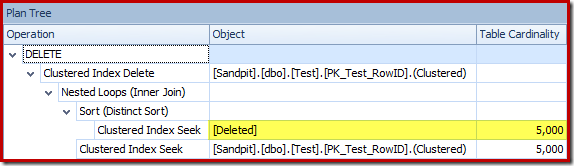

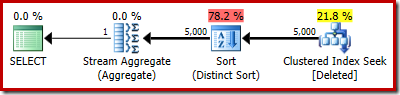

The trigger execution plan is:

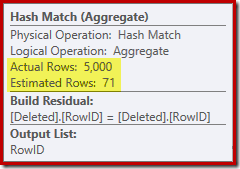

This time the optimizer has decided to split the distinct and sort operations. The distinct on RowID is performed by the Hash Match (Aggregate) operator:

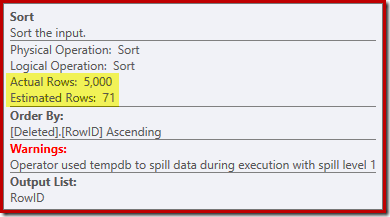

Notice the optimizer's estimate for the output is 71 rows. In fact, all 5,000 rows survive the distinct because RowID is unique. The inaccurate estimate means that an inadequate fraction of the query memory grant is allocated to the Sort, which ends up spilling to tempdb:

This test has to be performed on SQL Server 2012 or higher in order to see the sort warning in the execution plan. In prior versions, the plan contains no information about spills – a Profiler trace on the Sort Warnings event would be needed to reveal it (and you would need to correlate that back to the source query somehow).

Trigger test with 5,000 rows on SQL Server 2014

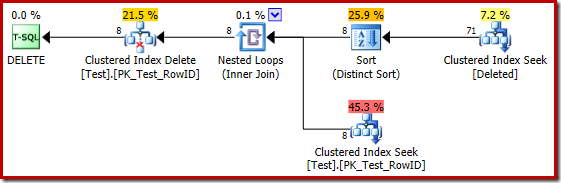

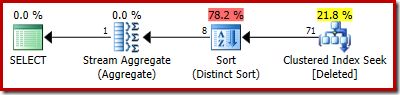

If the previous test is repeated on SQL Server 2014, in a database set to compatibility level 120 so the new cardinality estimator (CE) is used, the trigger execution plan is different again:

In some ways, this execution plan seems like an improvement. The (unnecessary) Distinct Sort is still there, but the overall strategy seems more natural: for each distinct candidate RowID in the deleted table, join to the base table (so verifying that the candidate row actually exists) and then delete it.

Unfortunately, the 2014 plan is based on worse cardinality estimates than we saw in SQL Server 2012. Switching SQL Sentry Plan Explorer to display the estimated row counts shows the problem clearly:

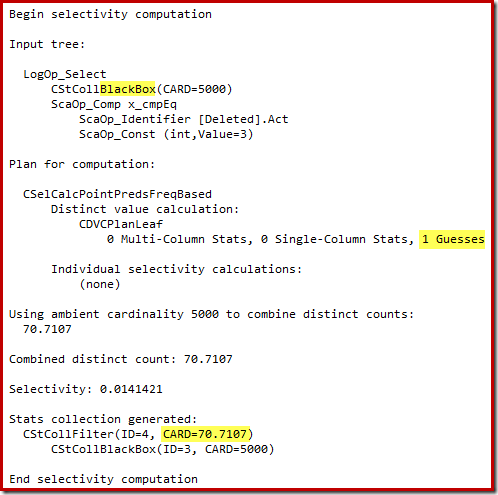

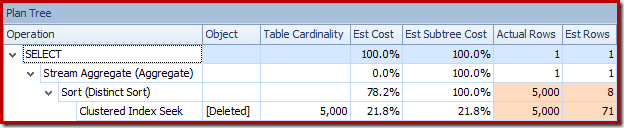

The optimizer chose a Nested Loops strategy for the join because it expected a very small number of rows on its top input. The first issue occurs at the Clustered Index Seek. The optimizer knows the deleted table contains 5,000 rows at this point, as we can see by switching to Plan Tree view and adding the optional Table Cardinality column (which I wish were included by default):

The 'old' cardinality estimator in SQL Server 2012 and earlier is smart enough to know that the 'seek' on the internal worktable would return all 5,000 rows (so it chose a merge join). The new CE is not so smart. It sees the worktable as a 'black box' and guesses at the effect of the seek on action code = 3:

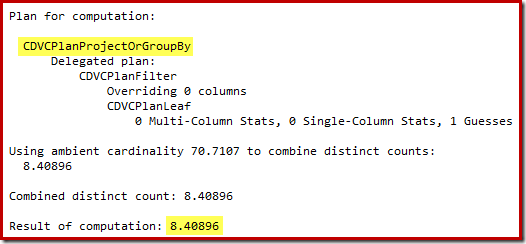

The guess of 71 rows (rounded up) is a pretty miserable outcome, but the error is compounded when the new CE estimates the rows for the distinct operation on those 71 rows:

Based on the expected 8 rows, the optimizer chooses the Nested Loops strategy. Another way to see these estimation errors is to add the following statement to the trigger body (for test purposes only):

SELECT COUNT_BIG(DISTINCT RowID)

FROM Deleted;

The estimated plan shows the estimation errors clearly:

The actual plan still shows 5,000 rows of course:

Or you could compare estimate versus actual at the same time in Plan Tree view:

A million rows…

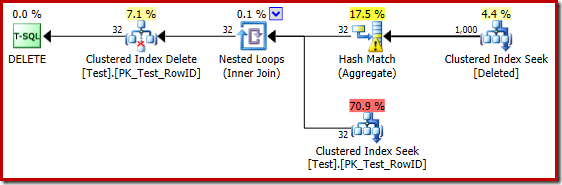

The poor guess-estimates when using the 2014 cardinality estimator cause the optimizer to select a Nested Loops strategy even when the Test table contains a million rows. The 2014 new CE estimated plan for that test is:

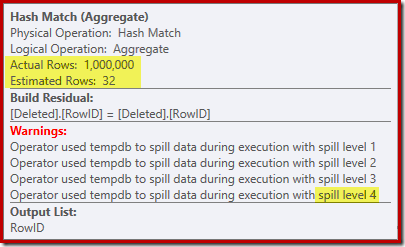

The 'seek' estimates 1,000 rows from the known cardinality of 1,000,000 and the distinct estimate is 32 rows. The post-execution plan reveals the effect on the memory reserved for the Hash Match:

Expecting only 32 rows, the Hash Match gets into real trouble, recursively spilling its hash table before eventually completing.

Final Thoughts

While it is true that a trigger should never be written to do something that can be achieved with declarative referential integrity, it is also true that a well-written trigger that uses an efficient execution plan can be comparable in performance to the cost of maintaining an extra nonclustered index.

There are two practical problems with the above statement. First (and with the best will in the world) people don't always write good trigger code. Second, getting a good execution plan from the query optimizer in all circumstances can be difficult. The nature of triggers is that they are called with a wide range of input cardinalities and data distributions.

Even for AFTER triggers, the lack of indexes and statistics on the deleted and inserted pseudo-tables means plan selection is often based on guesses or misinformation. Even where a good plan is initially selected, later executions may reuse the same plan when a recompilation would have been a better choice. There are ways to work around the limitations, primarily through the use of temporary tables and explicit indexes/statistics but even there great care is required (since triggers are a form of stored procedure).

With INSTEAD OF triggers, the risks can be even greater because the contents of the inserted and deleted tables are unverified candidates - the query optimizer cannot use constraints on the base table to simplify and refine its execution plan. The new cardinality estimator in SQL Server 2014 also represents a real step backwards when it comes to INSTEAD OF trigger plans. Guessing at the effect of a seek operation that the engine introduced itself is a surprising and unwelcome oversight.

Great internals post!

SQL Server should be improved so that hashing and sorting operators can dynamically increase memory usage. This is a common problem.

Thanks. It might be better not to have regressed the estimations in the first place, but I take your point: It might be nice if workspace memory reservations could be expanded at runtime, within some sensible limit. I understand the original design works that way to prioritize SQL Server's ability to process many requests concurrently. Any memory increase at run time has to come from somewhere, after all. Index building sorts can dynamically increase memory by design, but they're expected to be run during a maintenance window.

Great posting. I wonder how other products do this ..

Aha! I could tell triggers were behaving differently with 2014 compatibility level than with 2012 compatibility level, but wasn't able to piece it all together!

Thanks!

Now on to reassuring myself with testing that trace flag 9481 for legacy CE with 2014 compatibility level will achieve what I'm after…

Mark, I think that is possibly what trace flag 7470 will do (see KB #3088480), but the KB is a little light on details so far. And of course, if that is in fact what it does, there will always be scenarios where it can't be honored (including Resource Governor and literally no more memory).

Wow Paul, nice post! How do you find these types of issues!?!?!

Thanks! Mostly from investigating slow queries or general performance problems. That's how this one came up, anyway.

Paul,

Question: if DML triggers rely on row-versioning, does it mean data rows in tables with, e.g., an update trigger undergo the same type of modifications as under one of the row-versioning isolation levels? By which I mean an additional 14 bytes is appended to each row for an XSN and a versionstore pointer?

If yes, then does it mean that merely introducing a DML trigger to an existing table can lead to page splits because of the extra space required to enable row-versioning?

If not, then does it mean there is another mechanism used that allows to locate the needed row versions in this case?

Yes it does, but it depends. I wrote about that in a bit of detail in August 2012:

https://www.sql.kiwi/2012/08/deletes-that-split-pages-and-forwarded-ghosts.html

Excellent, thanks for that link!