As part of T-SQL Tuesday #69, I've blogged about the limitations of Always Encrypted, and I mentioned there that performance could be negatively impacted by its use (as you might expect, stronger security often does have trade-offs). In this post, I wanted to take a quick look at this, keeping in mind (again) that these results are based off of CTP 2.2 code, so very early in the development cycle, and are not necessarily reflective of the performance you'll see come RTM.

First, I wanted to demonstrate that Always Encrypted works from client applications even if the latest version of SQL Server 2016 is not installed there. You do, though, have to install the .NET Framework 4.6 preview (most recent version here, and that may change) in order to support the Column Encryption Setting connection string attribute. If you are running Windows 10, or have installed Visual Studio 2015, this step is not necessary, as you should already have a recent enough version of the .NET Framework.

Next, you need to make sure the Always Encrypted certificate exists on all clients. You create the master and column encryption keys within the database, as any Always Encrypted tutorial will show you, then you need to export the certificate from that machine, and import it on the others where application code will run. Open certmgr.msc, and expand Certificates – Current User > Personal > Certificates, and there should be one there called Always Encrypted Certificate. Right-click that, choose All Tasks > Export, and follow the prompts. I exported the private key and provided a password, which produced a .pfx file. Then you just repeat the opposite process on the client machines: Open certmgr.msc, expand Certificates – Current User > Personal, right-click Certificates, choose All Tasks > Import, and point it at the .pfx file you created above. (Official help here.)

(There are more secure ways to manage these certificates – it is not likely you'd just want to deploy the certificate like this to all machines, as you will soon ask yourself what was the point? I was only doing this in my isolated environment for the purposes of this demo – I wanted to make sure my application was retrieving data over the wire and not just in local memory.)

We create two databases, one with an encrypted table, and one without. We do this to isolate connection strings and also to measure space usage. Of course, there are more granular ways to control which commands need to use an encryption-enabled connection – see the note titled "Controlling the performance impact…" in this article.

The tables look like this:

-- encrypted copy, in database Encrypted

CREATE TABLE dbo.Employees

(

ID INT IDENTITY(1,1) PRIMARY KEY,

LastName NVARCHAR(32) COLLATE Latin1_General_BIN2

ENCRYPTED WITH (ENCRYPTION_TYPE = DETERMINISTIC,

ALGORITHM = 'AEAD_AES_256_CBC_HMAC_SHA_256',

COLUMN_ENCRYPTION_KEY = ColumnKey) NOT NULL,

Salary INT

ENCRYPTED WITH (ENCRYPTION_TYPE = RANDOMIZED,

ALGORITHM = 'AEAD_AES_256_CBC_HMAC_SHA_256',

COLUMN_ENCRYPTION_KEY = ColumnKey) NOT NULL

);

-- unencrypted copy, in database Normal

CREATE TABLE dbo.Employees

(

ID INT IDENTITY(1,1) PRIMARY KEY,

LastName NVARCHAR(32) COLLATE Latin1_General_BIN2 NOT NULL,

Salary INT NOT NULL

);

With these tables in place, I wanted to set up a very simple command-line application to perform the following tasks against both the encrypted and unencrypted versions of the table:

- Insert 100,000 employees, one at a time

- Read through 100 random rows, 1,000 times

- Output timestamps before and after each step

So we have a stored procedure in a completely separate database used to produce random integers to represent salaries, and random Unicode strings of varying lengths. We're going to do this one at a time to better simulate real usage of 100,000 inserts happening independently (though not concurrently, as I am not brave enough to try to properly develop and manage a multi-threaded C# application, or try to coordinate and synchronize multiple instances of a single application).

CREATE DATABASE Utility;

GO

USE Utility;

GO

CREATE PROCEDURE dbo.GenerateNameAndSalary

@Name NVARCHAR(32) OUTPUT,

@Salary INT OUTPUT

AS

BEGIN

SET NOCOUNT ON;

SELECT @Name = LEFT(CONVERT(NVARCHAR(32), CRYPT_GEN_RANDOM(64)), RAND() * 32 + 1);

SELECT @Salary = CONVERT(INT, RAND()*100000)/100*100;

END

GO

A couple of rows of sample output (we don't care about the actual content of the string, just that it varies):

酹2ዌ륒㦢㮧羮怰㉤盿⚉嗝䬴敏⽁캘♜鼹䓧

98600

贓峂쌄탠❼缉腱蛽☎뱶

72000

Then the stored procedures the application will ultimately call (these are identical in both databases, since your queries don't need to be changed to support Always Encrypted):

CREATE PROCEDURE dbo.AddPerson

@LastName NVARCHAR(32),

@Salary INT

AS

BEGIN

SET NOCOUNT ON;

INSERT dbo.Employees(LastName, Salary) SELECT @LastName, @Salary;

END

GO

CREATE PROCEDURE dbo.RetrievePeople

AS

BEGIN

SET NOCOUNT ON;

SELECT TOP (100) ID, LastName, Salary

FROM dbo.Employees

ORDER BY NEWID();

END

GO

Now, the C# code, starting with the connectionStrings portion of App.config. The important part being the Column Encryption Setting option for only the database with the encrypted columns (for brevity, assume all three connection strings contain the same Data Source, and the same SQL authentication User ID and Password):

And Program.cs (sorry, for demos like this, I'm terrible at going in and renaming things logically):

using System;

using System.Collections.Generic;

using System.Text;

using System.Configuration;

using System.Data;

using System.Data.SqlClient;

namespace AEDemo

{

class Program

{

static void Main(string[] args)

{

using (SqlConnection con1 = new SqlConnection())

{

Console.WriteLine(DateTime.UtcNow.ToString("hh:mm:ss.fffffff"));

string name;

string EmptyString = "";

int salary;

int i = 1;

while (i <= 100000)

{

con1.ConnectionString = ConfigurationManager.ConnectionStrings["Utility"].ToString();

using (SqlCommand cmd1 = new SqlCommand("dbo.GenerateNameAndSalary", con1))

{

cmd1.CommandType = CommandType.StoredProcedure;

SqlParameter n = new SqlParameter("@Name", SqlDbType.NVarChar, 32)

{ Direction = ParameterDirection.Output };

SqlParameter s = new SqlParameter("@Salary", SqlDbType.Int)

{ Direction = ParameterDirection.Output };

cmd1.Parameters.Add(n);

cmd1.Parameters.Add(s);

con1.Open();

cmd1.ExecuteNonQuery();

name = n.Value.ToString();

salary = Convert.ToInt32(s.Value);

con1.Close();

}

using (SqlConnection con2 = new SqlConnection())

{

con2.ConnectionString = ConfigurationManager.ConnectionStrings[args[0]].ToString();

using (SqlCommand cmd2 = new SqlCommand("dbo.AddPerson", con2))

{

cmd2.CommandType = CommandType.StoredProcedure;

SqlParameter n = new SqlParameter("@LastName", SqlDbType.NVarChar, 32);

SqlParameter s = new SqlParameter("@Salary", SqlDbType.Int);

n.Value = name;

s.Value = salary;

cmd2.Parameters.Add(n);

cmd2.Parameters.Add(s);

con2.Open();

cmd2.ExecuteNonQuery();

con2.Close();

}

}

i++;

}

Console.WriteLine(DateTime.UtcNow.ToString("hh:mm:ss.fffffff"));

i = 1;

while (i <= 1000)

{

using (SqlConnection con3 = new SqlConnection())

{

con3.ConnectionString = ConfigurationManager.ConnectionStrings[args[0]].ToString();

using (SqlCommand cmd3 = new SqlCommand("dbo.RetrievePeople", con3))

{

cmd3.CommandType = CommandType.StoredProcedure;

con3.Open();

SqlDataReader rdr = cmd3.ExecuteReader();

while (rdr.Read())

{

EmptyString += rdr[0].ToString();

}

con3.Close();

}

}

i++;

}

Console.WriteLine(DateTime.UtcNow.ToString("hh:mm:ss.fffffff"));

}

}

}

}

Then we can call the .exe with the following command lines:

AEDemoConsole.exe "Normal"

AEDemoConsole.exe "Encrypt"

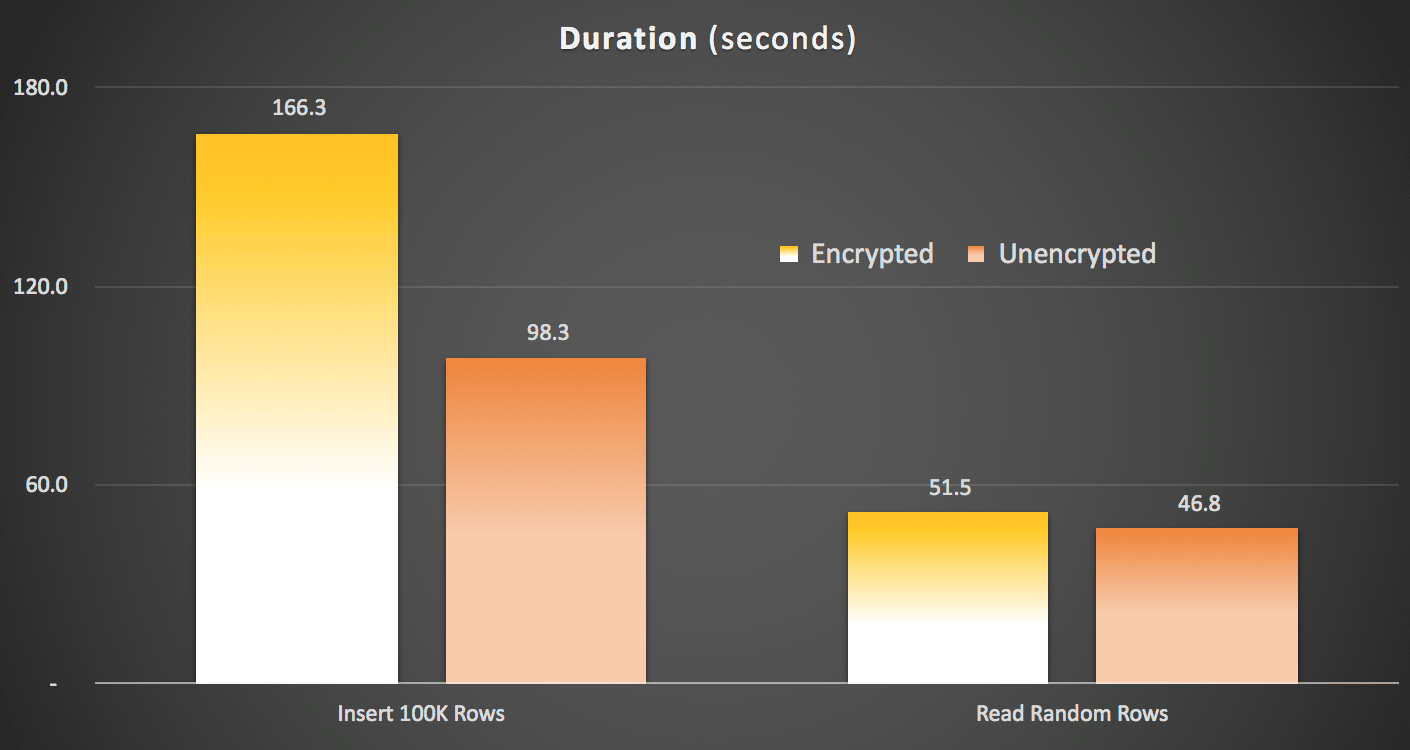

And it will produce three lines of output for each call: the start time, the time after 100,000 rows were inserted, and the time after 100 rows were read 1,000 times. Here were the results I saw on my system, averaged over 5 runs each:

Duration (seconds) of writing and reading data

Duration (seconds) of writing and reading data

There is a clear impact to writing the data - not quite 2X, but more than 1.5X. There was a much lower delta on reading and decrypting the data - at least in these tests - but that wasn't free, either.

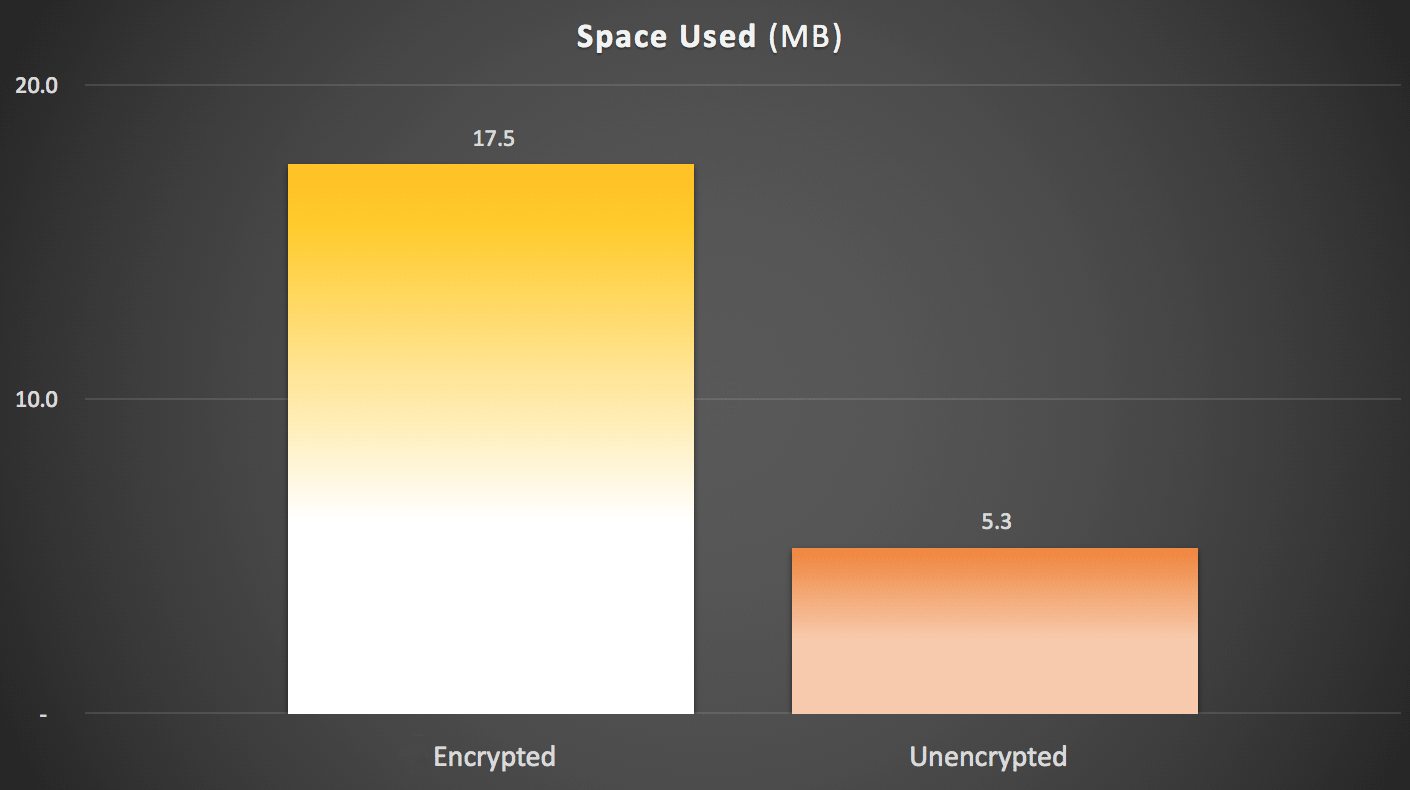

As far as space usage, there is roughly a 3X penalty for storing encrypted data (given the nature of most encryption algorithms, this shouldn't be shocking). Keep in mind this was on a table with only a single clustered primary key. Here were the figures:

So obviously there are some penalties with using Always Encrypted, as there typically are with just about all security-related solutions (the saying "no free lunch" comes to mind). I'll repeat that these tests were performed against CTP 2.2, which may be radically different than the final release of SQL Server 2016. Also, these differences I have observed may reflect only the nature of the tests I concocted; obviously I am hoping you can use this approach to test your results against your schema, on your hardware, and with your data access patterns.

While results will vary, thanks for doing this initial legwork for us.

Nice article… as usual. While the price is high I'm guessing it's better than the piecemeal approach that's currently in place.

I think it's funny that your C# looks like T-SQL. While loops and declaring variables at the top. I guess it's hard to shed those habits. When I started doing T-SQL I sometimes started to type in C# when I meant != and vice versa.

Yeah, I am not an OOP programmer, and have never been formally trained in C# or any other language for that matter. I picked up about as much as I needed to over the years to make things work, and would never profess to be a good model there. (I get the variable declaration thing but if I want to do something 10,000 times, what is better than a while loop and why?)

A for loop is usually better because it is the common idiom. You can immediately recognize it visually as a loop that does something 10000 times. It is a good thing to use the same idioms that everybody else is using.

You might seriously benefit from posting this code to Codereview Stack Exchange. They will give you a list of about a dozen very concrete things to improve. You would need to expend 1-2h to learn this and be a far better programmer after that. Code size will cut by ~30% and legibility improves dramatically.

Thanks, in my defense, I wasn't trying to demonstrate "here's how you write an awesome C# app," I was just trying to show "here's how the performance of this simple app compared, with encryption and without."

Also is it really true that nobody uses

whilein C# (and granted, from a brief search it seems a for loop is processed as a while under the covers anyway)? Is this like "nobody uses CURRENT_TIMESTAMP in SQL Server, they all use GETDATE()"? If everybody usesfor, why don't they deprecatewhile? (Obviously, for me,whileis more natural, because I haveWHILEin T-SQL, but nofor.)It's like CURRENT_TIMESTAMP, except that there are use cases for while. For example an infinite loop `while(true)`. In C# I'd write a cursor loop like this:

while(true) {

Fetch(…);

if (FetchStatus != 0) break;

Process();

}

That way there is no need for having one redundant fetch statement before the loop. In fact I do that the same way in T-SQL (while 0=0).

All structurally identical loops are compiled to the same IL and the same x86. Loops are a C# concept. They disappear during compilation. Foreach is the preferred loop where applicable.

And yes, I'm aware that this discussion completely misses the point of the article.

I do appreciate it and am more trying to understand than argue or any of that. (And I am working on a codereview post.)

Also, the reason I brought up GETDATE() vs. CURRENT_TIMESTAMP is that there is no tangible benefit to GETDATE(), even though that's what "everybody" uses. CURRENT_TIMESTAMP has the advantage that it is ANSI standard and more portable, but I might be the only one who favors it, I presume because most people don't want to type those extra characters. (And yes, I get that there is no CURRENT_UTC_TIMESTAMP, but I prefer SYSUTCDATETIME() over GETUTCDATE(). All of these options don't make the code less readable for me, though.)

Meanwhile, the Codereview people have descended on your code and pointed out (like predicted by me) a dozen issues :) Hope this was helpful to you.

(I also prefer SYSUTCDATETIME :) )

@tobi You might like my new code (but it certainly isn't reduced in size).

http://sqlperformance.com/2015/08/sql-server-2016/always-encrypted-performance-follow-up

How do you import the cert from alwaysencryptoion .pfx when we deploy the web apps to Azure Pass environment.

Hi Janu,

Sorry about the delay. I believe you got this information elsewhere, but just so that other readers have it, here is what Microsoft said about it:

Although we recommend Azure Key Vault for the ease of key management, you can use certificates as column master keys in Azure PaaS VMs. First, you need to export the certificate, including its private key, to a pfx file and upload the file to Azure – for detailed instructions, please see Step 3 in the following article: https://azure.microsoft.com/en-us/documentation/articles/cloud-services-configure-ssl-certificate/#step-3-upload-a-certificate. Then, you need to define the certificate in your web/worker role – please see https://azure.microsoft.com/en-us/documentation/articles/cloud-services-xml-certs/.

The best practice is to use different sets of keys (column encryption keys/column master keys) for dev/stage/prod. For now, you would need to set up the keys insides a database in each environment manually (or you can set up the database schema, including the keys, on a trusted machine on-premises, and have the database deployed to the target PaaS enviropnent as a bacpac using database Import/Export). Later on, we plan to add full support for continues integration for databases using Always Encrypted in SQL Server Data Tools (SSDT) and sqlpackage.exe/dacpac, including the ability to substitute the keys and re-encrypt the data on deployment from one environment to another.

https://azure.microsoft.com/en-us/documentation/articles/cloud-services-configure-ssl-certificate/

Aaron – we have a test app working on my local development PC. But when we export the cert and load it onto our webserver CurrentUser\Personal, the web application can't find it.

I believe that is because we run our app pool under NetworkService. I have even generated a LocalMachine\Personal cert for SQL 2016 to use, and give NetworkService access to it.

Same error. Our web server is Windows 2008, R2. Just wondered if anyone else had worked through this issue. Thanks for any input.

Hi,

I've done some work on comparing performance of AlwaysEncrypted to no encryption (with and without "column encryption setting" in connection string), as well as old style column level encryption using EncryptByKey function (CLE). In most real-life scenarios, one does not have a choice between encrypting and not encrypting, so the real question is which type of encryption will result in best performance.

I used a .net application to call an insert stored proc 100,000 times into an empty table. Results are as follows:

– No encryption and no "column encryption setting" in the connection string: 474 seconds

– No encryption but "column encryption setting" used in connection string: 993 seconds

– AlwaysEncrypted: 996 seconds

– CLE with EncryptByKey: 2,261 seconds

My take from this is that AlwaysEncrypted has stellar performance compared to alternative encryption approach. Not only is it faster end to end, but is scales better because the performance hit is not on the database but on the client which can easily be scaled out. Additional benefit is that it is more secure as the data is encrypted in transit and cannot be discovered using SQL Profiler or network sniffing tools.

Interestingly, there is practically no difference between AlwaysEncrypted and no encryption when "column encryption setting" is enabled. The reason is that when that option is used, SQL adapter makes an extra call to sniff out parameters (sp_describe_parameter_encryption), regardless of whether there is something to encrypt or not. The overhead of this operation seems significant because the insert is so fast that another call doubles the stats, but it is really very small and static overhead. Encryption itself adds no significant overhead. Developers, make sure you disable "column encryption setting" if you don't need it to avoid this overhead.

Let me know if you want me to send the source code for the tests.

Do you know the name of the process? I want to see how much CPU, Disk, and Memory it takes.

I'm researching the encryption impact on the server, and I'm testing the AE encryption of one name column on about 100k rows.

The docs say the perf impact of the initial encryption can be "significant".

Also (rhetorically here), what happens if the process stops mid-way through the encryption process? I assume it's all corrupted and you have to restore the current backup. Another thing to test.

Hi, nice article indeed but I am wondering would this encryption be applied on a non-empty table/column and the column(s) that has relationships with other columns in schema as well??????

I don't think you can use encrypted columns in relational constraints. Are you really using sensitive data as part of a relationship? Could you give a practical example?