Back when SQL Server 2012 was still in beta, I blogged about the new FORMAT() function: SQL Server v.Next (Denali) : CTP3 T-SQL Enhancements : FORMAT().

At that time, I was so excited about the new functionality, that I didn't even think to do any performance testing. I did address this in a more recent blog post, but solely in the context of stripping time from a datetime: Trimming time from datetime – a follow-up.

Last week, my good friend Jason Horner (blog | @jasonhorner) trolled me with these tweets:

|

|

My issue with this is just that FORMAT() looks convenient, but it is extremely inefficient compared to other approaches (oh and that AS VARCHAR thing is bad too). If you're doing this onesy-twosy and for small resultsets, I wouldn't worry too much about it; but at scale, it can get pretty expensive. Let me illustrate with an example. First, let's create a small table with 1000 pseudo-random dates:

SELECT TOP (1000) d = DATEADD(DAY, CHECKSUM(NEWID())%1000, o.create_date)

INTO dbo.dtTest

FROM sys.all_objects AS o

ORDER BY NEWID();

GO

CREATE CLUSTERED INDEX d ON dbo.dtTest(d);

Now, let's prime the cache with the data from this table, and illustrate three of the common ways people tend to present just the time:

SELECT d,

CONVERT(DATE, d),

CONVERT(CHAR(10), d, 120),

FORMAT(d, 'yyyy-MM-dd')

FROM dbo.dtTest;

Now, let's perform individual queries that use these different techniques. We'll run them each 5 times and we'll run the following variations:

- Selecting all 1,000 rows

- Selecting TOP (1) ordered by the clustered index key

- Assigning to a variable (which forces a full scan, but prevents SSMS rendering from interfering with performance)

Here is the script:

-- select all 1,000 rows

GO

SELECT d FROM dbo.dtTest;

GO 5

SELECT d = CONVERT(DATE, d) FROM dbo.dtTest;

GO 5

SELECT d = CONVERT(CHAR(10), d, 120) FROM dbo.dtTest;

GO 5

SELECT d = FORMAT(d, 'yyyy-MM-dd') FROM dbo.dtTest;

GO 5

-- select top 1

GO

SELECT TOP (1) d FROM dbo.dtTest ORDER BY d;

GO 5

SELECT TOP (1) CONVERT(DATE, d) FROM dbo.dtTest ORDER BY d;

GO 5

SELECT TOP (1) CONVERT(CHAR(10), d, 120) FROM dbo.dtTest ORDER BY d;

GO 5

SELECT TOP (1) FORMAT(d, 'yyyy-MM-dd') FROM dbo.dtTest ORDER BY d;

GO 5

-- force scan but leave SSMS mostly out of it

GO

DECLARE @d DATE;

SELECT @d = d FROM dbo.dtTest;

GO 5

DECLARE @d DATE;

SELECT @d = CONVERT(DATE, d) FROM dbo.dtTest;

GO 5

DECLARE @d CHAR(10);

SELECT @d = CONVERT(CHAR(10), d, 120) FROM dbo.dtTest;

GO 5

DECLARE @d CHAR(10);

SELECT @d = FORMAT(d, 'yyyy-MM-dd') FROM dbo.dtTest;

GO 5

Now, we can measure the performance with the following query (my system is pretty quiet; on yours, you may need to perform more advanced filtering than just execution_count):

SELECT

[t] = CONVERT(CHAR(255), t.[text]),

s.total_elapsed_time,

avg_elapsed_time = CONVERT(DECIMAL(12,2),s.total_elapsed_time / 5.0),

s.total_worker_time,

avg_worker_time = CONVERT(DECIMAL(12,2),s.total_worker_time / 5.0),

s.total_clr_time

FROM sys.dm_exec_query_stats AS s

CROSS APPLY sys.dm_exec_sql_text(s.[sql_handle]) AS t

WHERE s.execution_count = 5

AND t.[text] LIKE N'%dbo.dtTest%'

ORDER BY s.last_execution_time;

Results in my case were fairly consistent:

| Query (truncated) | Duration (microseconds) | |||

|---|---|---|---|---|

| total_elapsed | avg_elapsed | total_clr | ||

| SELECT 1,000 rows | SELECT d FROM dbo.dtTest ORDER BY d; |

1,170 |

234.00 |

0 |

SELECT d = CONVERT(DATE, d) FROM dbo.dtTest ORDER BY d; |

2,437 |

487.40 |

0 |

|

SELECT d = CONVERT(CHAR(10), d, 120) FROM dbo.dtTest ORD ... |

151,521 |

30,304.20 |

0 |

|

SELECT d = FORMAT(d, 'yyyy-MM-dd') FROM dbo.dtTest ORDER ... |

240,152 |

48,030.40 |

107,258 |

|

| SELECT TOP (1) | SELECT TOP (1) d FROM dbo.dtTest ORDER BY d; |

251 |

50.20 |

0 |

SELECT TOP (1) CONVERT(DATE, d) FROM dbo.dtTest ORDER BY ... |

440 |

88.00 |

0 |

|

SELECT TOP (1) CONVERT(CHAR(10), d, 120) FROM dbo.dtTest ... |

301 |

60.20 |

0 |

|

SELECT TOP (1) FORMAT(d, 'yyyy-MM-dd') FROM dbo.dtTest O ... |

1,094 |

218.80 |

589 |

|

| Assign variable | DECLARE @d DATE; SELECT @d = d FROM dbo.dtTest; |

639 |

127.80 |

0 |

DECLARE @d DATE; SELECT @d = CONVERT(DATE, d) FROM dbo.d ... |

644 |

128.80 |

0 |

|

DECLARE @d CHAR(10); SELECT @d = CONVERT(CHAR(10), d, 12 ... |

1,972 |

394.40 |

0 |

|

DECLARE @d CHAR(10); SELECT @d = FORMAT(d, 'yyyy-MM-dd') ... |

118,062 |

23,612.40 |

98,556 |

|

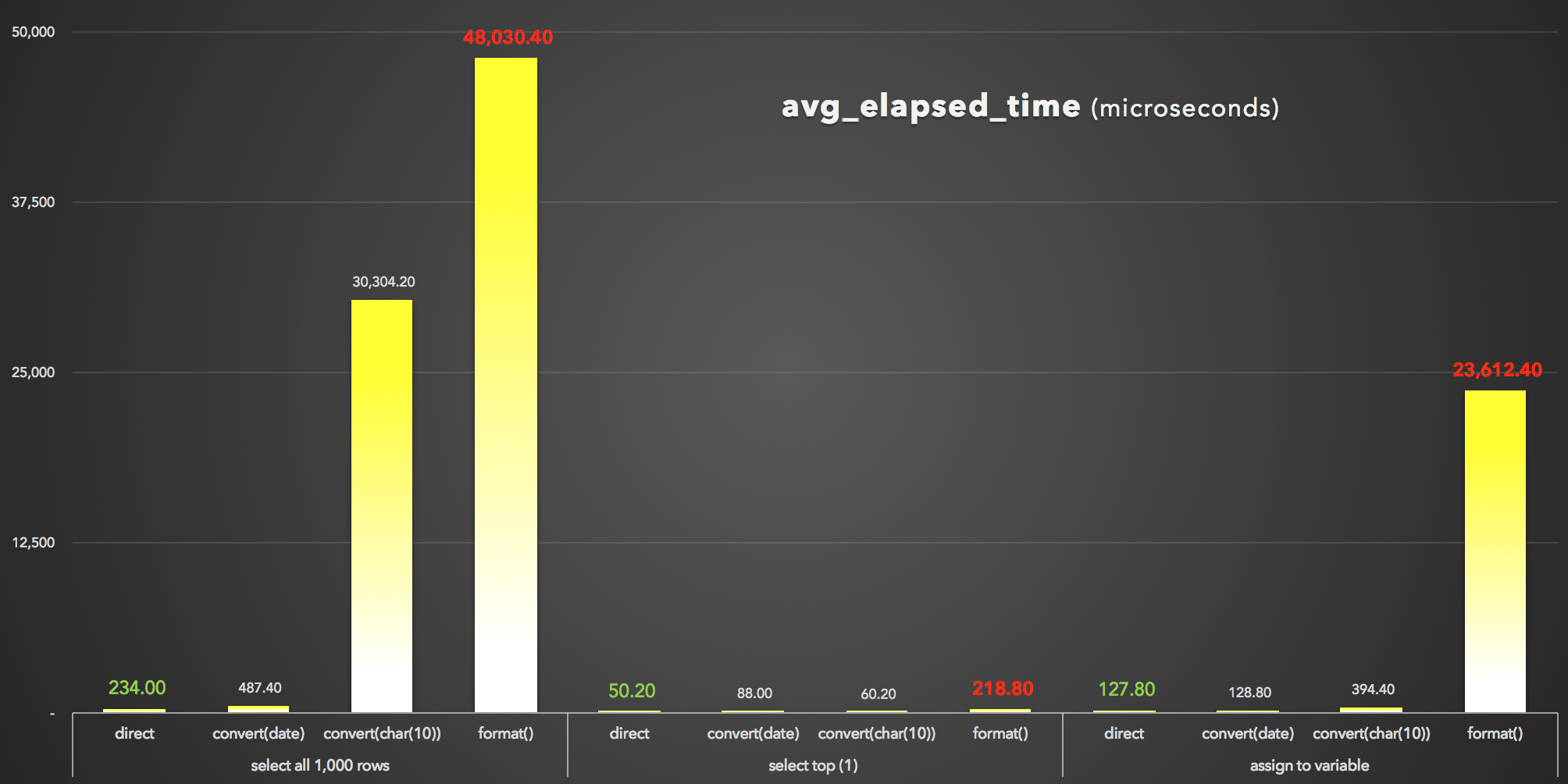

And to visualize the avg_elapsed_time output (click to enlarge):

FORMAT() is clearly the loser : avg_elapsed_time results (microseconds)

FORMAT() is clearly the loser : avg_elapsed_time results (microseconds)

What we can learn from these results (again):

- First and foremost,

FORMAT()is expensive. FORMAT()can, admittedly, provide more flexibility and give more intuitive methods that are consistent with those in other languages like C#. However, in addition to its overhead, and whileCONVERT()style numbers are cryptic and less exhaustive, you may have to use the older approach anyway, sinceFORMAT()is only valid in SQL Server 2012 and newer.- Even the standby

CONVERT()method can be drastically expensive (though only severely so in the case where SSMS had to render the results - it clearly handles strings differently than date values). - Just pulling the datetime value directly out of the database was always most efficient. You should profile what additional time it takes for your application to format the date as desired at the presentation tier - it's highly likely that you're not going to want SQL Server to get involved with prettying format at all (and in fact many would argue that this is where that logic always belongs).

We're only talking microseconds here, but we're also only talking 1,000 rows. Scale that out to your actual table sizes, and the impact of choosing the wrong formatting approach could be devastating.

If you want to try out this experiment on your own machine, I've uploaded a sample script: FormatIsNiceAndAllBut.sql_.zip

Thanks for the article.

I was reading this eagerly because I frequently have need to cast a Date as a string for many weird and wonderful reasons, and I always like to use the best performing function for the job. However, your clear winner here is Convert(DATE and that's not actually changing the datatype. It's just changing the output style of the DateTime value.

It's certainly useful information, but the Convert(DATE function itself is creating a different output to Convert(CHAR and Format(, so it's not surprising their performances are so different.

Additionally to format – when You store date as date, then sometime is usefully to add cast to datetime

select

…

, cast(salesdate as datetime /* or smalldatetime */) as salesdate

from salesdata

becouse most popular analytical tools (like excel and access) couldn't recognize the date and time

Henn

Tim, it is changing the data type (date != datetime) but you are right it is not converting it to a string. Converting to a string is really only worse when you're doing it repeatedly, thousands of times – in the other cases, "worse" is kind of a misnomer. Those are microseconds, remember. :-) I will try to run another test where all of the outcomes are strings (however the point of this article was to demonstrate that FORMAT() is not what you want in any case, and that in most cases it doesn't have to be produced as a string by SQL Server to be displayed the way you want).

Ok, that's fine, but are you telling me there are modern versions of Microsoft Office tools that can't interpret a date without time? I find that hard to believe.

(Anyway, my point here was to not use FORMAT(), not whether or not your tools can deal with date vs. datetime.)

The reverse set (and worse yet low contrast) presentation of the code samples may be stylish–it is also however not especially easy for my 70 year old eyes to read–and I suspect the eyes of others younger than myself.

What you have done is worthwhile, why are you masking it?

-cliff-

Very informative.

Sorry, the black on white is not easy on my eyes. I tried colors on light yellow and people complained about that too. I talked a bit about the arrival at a dark color theme here – it wasn't for trendy, it was about how it looks in the back of a room on a projector, and I've gotten quite used to it. I don't know that I can come up with a color scheme that will make everyone happy.

You can easily hit Ctrl+ to make the text larger, copy/paste into your own editor, etc.

Thanks for the article! I am surprised that FORMAT() is this bad. How bad is the ANSI/ISO Standard "CAST (date_string AS DATE)"? Since display work in not ever done in the database layer, I would ;think that this might be more common than violating the tiered architecture of SQL.

Thanks Joe, I think you will find that internally

CAST(x AS DATE)andCONVERT(DATE, x)perform exactly the same. I tend to use the latter because I often have to use style numbers (for converting both between dates and strings and between binary values and their string representations).Front projection and backlit monitors are of course totally different mediums, and I appreciate the need to "pick one" to suit the majority of your needs. Have you considered a paler yellow ("canary") background with color fonts?

I have found it to work well with many systems I have designed, for example:

http://www.paladinmicro.com/images/ScreenCap02.jpg

I made a test by myself which compares Format(1, '00000000') to Right('00000000' + cast(1 as varchar(8)), 8) (for numbers with leading zeroes). Format was about 17 times slower than Right() using 1 mio rows.

Since display work in not ever done in the database layer…

Of course, unless it has to be. Our shop runs "compliance" on user data. There are hundreds of possible tests, and each one checks for something different. If there is an anomaly, a narrative has to be stored identifying the offending information. That may include an account number, client name, address, date of birth, security information, various amounts, transaction types, dates, etc. Each test is formatted differently and the output from it varies from the next. But, to meet the company, and government, requirements, there has to be a clear narrative indicating what the anomaly was. This means converting dates, dollars, quantities, etc. into strings in order to build the narrative associated with the test.

To try to store the associated values separately and have the UI pull those pieces together and build the proper narrative would be a monumental task at best. Especially when a request is made to change the narrative and unless you have a start-end date associated with whatever presentation code is trying to reconstruct the narrative, which adds even more complexity, you have a nightmare, because you now change the format of all past narratives. In the world of programming, the statements "never" or "not ever" are almost always a moot point.

I know this is a little old, but I recently performed some optimizations at a client. In this case it was to remove a scalar function from a query that hit 20+ tables, 5 of which have 100s of millions of rows each. Needless to say this query had a cost of 8.9 MILLION (not just because of the scalar function). The scalar function simply took 3 float inputs and formatted them as a textual description (length x height x width). Simple enough, so I refactored that code to use the format functions as required by the business:

CASE TABLE1.Dim3 WHEN 0 THEN 'Coil:' ELSE " END

+ FORMAT(TABLE1.Dim1, '#0.0000') + 'x' + FORMAT(TABLE1.Dim2, '#0.0000')

+ CASE TABLE1.Dim3 WHEN 0 THEN " ELSE 'x' + FORMAT(TABLE1.Dim3, '#0.0000') END AS Dimension

Much to my surprise the query was still not running in parallel (just under 100 cost after yanking out some other stuff, we have cost threshold set to 50). After a bit of trial and error I identified the FORMAT function as the culprit for not allowing the query to go parallel! Not a huge problem, I just reverted back to specific conversions to complete the task:

CASE WHEN ISNULL(TABLE1.Dim3, 0) = 0 THEN 'Coil:' ELSE " END

+ CONVERT(VARCHAR, CONVERT(DECIMAL (12, 4), TABLE1.Dim1)) + 'x' + CONVERT(VARCHAR, CONVERT(DECIMAL (12, 4), TABLE1.Dim2))

+ CASE TABLE1.Dim3 WHEN 0 THEN " ELSE 'x' + CONVERT(VARCHAR, CONVERT(DECIMAL (12, 4), TABLE1.Dim3)) END AS Dimension,

So, from a performance standpoint I deem this function useless if it still forces a query to run single threaded!

Thanks Tony! As an aside, I suggest being careful with

varcharwithout length. In some cases the output will be truncated to a single character with no warning or error.Correct on the VARCHAR without length being identified. In this case 30 characters is more than sufficient. I opted to keep the code consistent rather than tweak the length info on this one :).

Interesting. I still like FORMAT because of the simplicity compared to the CONVERT(VARCHAR, 104 etc. to get a specific date format. I find myself to look out this page over and over again as I can never memorize the convert options correctly ;-).

I guess that this page is one of the most visited pages within the SQL server documentation.

However FORMAT offers not everything you need as I tried to put at Microsoft's attention with https://connect.microsoft.com/SQLServer/feedback/details/813987/format-character-function-should-be-able-to-completely-replace-datepart-and-convert-functions .

Martin,

Please, please, please don't take this the wrong way. It is NOT an attack on you, but on the general mindset of thinking that so often seems to prevail.

The comment, "I still like FORMAT because of the simplicity compared to the CONVERT…" That kind of sounds like, after someone writes an article stating how set based operations can outperform cursors by a factor of up to 1000, someone makes the comment, "I still like cursors because I don't have to think about making something set based." I don't remember all the formatting options either (I want commas in my number format, I want to preserve white space in XML data, I want my date formatted Nov 14 2016, etc.) but that shortcut to https://msdn.microsoft.com/en-us/library/ms187928.aspx on my desktop sure helps.

This splinter in my finger is killing me, but it is zebra wood and looks so cool, so I'm not going to remove it :-)

Martin, given this article is regarding performance, I felt it was necessary to outline how FORMAT forces a single threaded execution. If you're using this over large datasets I would have to agree with Doug. The mindset of ease of use should always lose out to performance. Just my 2 cents with all due respect :).

I know this a really old discussion, but even in June 2017, Excel 2016 still can't handle the SQL Server date types other than DATETIME properly – using Microsoft's own 'From SQL Server' wizard to connect to a dummy table with DATETIME, DATE, DATETIME2, DATETIMEOFFSET and TIME fields; only the DATETIME field works as expected, complete with date filters in a table, but the DATE field comes through to Excel as 'text'. I thought maybe PowerPivot would work, but it too fails.

Great post. I borrowed your test technique and ran some tests against CONCAT, FORMATMESSAGE and "regular" string concatenation as well. CONCAT performs about as good as "regular" concatenation (ever so slightly worse, but not by much. Much to my dismay, FORMATMESSAGE performs several times worse than either of the other methods. Seems like this behavior is not limited to the FORMAT function.

Aaron, I know this is an old post of yours but I ran some tests to see how the performance degraded as more and more rows were added. I did not not run that many tests, but the performance difference is incredibly drastic as the row count increases.

SELECT COUNT(*) –1,331,000

FROM master.sys.objects o

CROSS JOIN master.sys.objects o2

CROSS JOIN master.sys.objects o3

SET STATISTICS TIME ON

/******************************TEST 1********************************************/

DECLARE @ret varchar(50)

SELECT TOP(500000)

@ret = FORMAT(o.create_date, 'MM/dd/yyyy')

, @ret = FORMAT(o.create_date, 'yyyy-MM-dd')

, @ret = FORMAT(o.create_date, 'MM-dd-yyyy')

, @ret = FORMAT(o.create_date, 'HH:mm:ss')

FROM master.sys.objects o

CROSS JOIN master.sys.objects o2

CROSS JOIN master.sys.objects o3

GO

— 5000 ROWS

— CPU Elapsed

–Total 00:00:00.625 00:00:00.639

— 50000 ROWS

— CPU Elapsed

–Total 00:00:05.906 00:00:06.145

— 500000 ROWS

— CPU Elapsed

–Total 00:00:52.750 00:00:55.203

/******************************TEST 2********************************************/

DECLARE @ret varchar(50)

SELECT TOP(500000)

@ret = CONVERT(VARCHAR(10), o.create_date, 101)

, @ret = CONVERT(VARCHAR(10), o.create_date, 120)

, @ret = CONVERT(VARCHAR(10), o.create_date, 110)

, @ret = CONVERT(VARCHAR(10), o.create_date, 108)

FROM master.sys.objects o

CROSS JOIN master.sys.objects o2

CROSS JOIN master.sys.objects o3

GO

— 5000 ROWS

— CPU Elapsed

–Total 00:00:00.031 00:00:00.036

— 50000 ROWS

— CPU Elapsed

–Total 00:00:00.359 00:00:00.364

— 500000 ROWS

— CPU Elapsed

–Total 00:00:00.937 00:00:00.936

Hmmm… if I was exclusively using SQL 2012 or higher I might choose to use FORMAT for the flexibility regardless of these figures.

Even with the worst case average of 48030.40 microseconds that converts to 0.04 seconds – i.e. an imperceivably small amount of time.

I think the old adage of SQL still applies here – "it depends". Being aware of the performance hit is all you need to know and then you can test with your expected data processing limit.

But lets not forget if you need to format those dates whether you do it in SQL or in C# (for example) is going to take time. I'd say that it's better to do it in 1 pass rather than have to fetch the data into C# and then do some post processing on it.

Yes – it depends. If you have a database, where thousands of queries per second ask for formated values for a website, it could cost you significant CPU power (= SQL Server licenses).

On the other hand – when the formating is done on the customers client / browser, it costs you absolute nothing and even with an very old notebook / tablet, the customer would not see a slowdown for formating 50 numbers locally.