In a departure from my ‘knee-jerk performance tuning’ series, I’d like to discuss how index fragmentation can creep up on you under some circumstances.

What is Index Fragmentation?

Most people think of ‘index fragmentation’ as meaning the problem where the index leaf pages are out of order – the index leaf page with the next key value is not the one that’s physically contiguous in the data file to the index leaf page currently being examined. This is called logical fragmentation (and some people refer to it as external fragmentation – a confusing term that I don’t like).

Logical fragmentation happens when an index leaf page is full and space is required on it, either for an insert or to make an existing record longer (from updating a variable-length column). In that case, the Storage Engine creates a new, empty page and moves 50 % of the rows (usually, but not always) from the full page to the new page. This operation creates space in both pages, allowing the insert or update to proceed, and is called a page split. There are interesting pathological cases involving repeated page splits from a single operation and page splits that cascade up the index levels, but they’re beyond the scope of this post.

When a page split occurs, it usually causes logical fragmentation because the new page that’s allocated is highly unlikely to be physically contiguous to the one that’s being split. When an index has lots of logical fragmentation, index scans are slowed down because the physical reads of the necessary pages cannot be done as efficiently (using multi-page ‘readahead’ reads) when the leaf pages are not stored in order in the data file.

That’s the basic definition of index fragmentation, but there’s a second kind of index fragmentation that most people don’t consider: low page density (sometimes call internal fragmentation, again, a confusing term I don’t like).

Page density is a measure of how much data is stored on an index leaf page. When a page split occurs with the usual 50/50 case, each leaf page (the splitting one and the new one) are left with a page density of only 50%. The lower the page density, the more empty space there is in the index and so the more disk space and buffer pool memory you can think of as being wasted. I blogged about this problem a few years back and you can read about it here.

Now that I’ve given a basic definition of the two kinds of index fragmentation, I’m going to refer to them collectively as simply ‘fragmentation.’

For the remainder of this post I’d like to discuss three cases where clustered indexes can become fragmented even if you’re avoiding operations that would obviously cause fragmentation (i.e. random inserts and updating records to be longer).

Fragmentation from Deletes

“How can a delete from a clustered index leaf page cause a page split?” you might be asking. It won’t, under normal circumstances (and I sat thinking about it for a few minutes to make sure there wasn’t some weird pathological case! But see the section below…) However, deletes can cause page density to get progressively lower.

Imagine the case where the clustered index has a bigint identity key value, so inserts will always go to the right-hand side of the index and will never, ever be inserted into an earlier portion of the index (barring someone reseeding the identity value – potentially very problematic!). Now imagine that the workload deletes records from the table that are no longer required, after which the background ghost cleanup task will reclaim the space on the page and it will become free space.

In the absence of any random inserts (impossible in our scenario unless someone reseeds the identity or specifies a key value to use after enabling SET IDENTITY INSERT for the table), no new records will ever use the space that was freed from the deleted records. This means that the average page density of the earlier portions of the clustered index will steadily decrease, leading to increasing amount of wasted disk space and buffer pool memory as I described earlier.

Deletes can cause fragmentation, as long as you consider page density as part of ‘fragmentation.’

Fragmentation from Snapshot Isolation

SQL Server 2005 introduced two new isolation levels: snapshot isolation and read-committed snapshot isolation. These two have slightly different semantics, but basically allow queries to see a point-in-time view of a database, and for lock-collision-free selects. That’s a vast simplification, but it’s enough for my purposes.

To facilitate these isolation levels, the development team at Microsoft that I led implemented a mechanism called versioning. The way that versioning works is that whenever a record changes, the pre-change version of the record is copied into the version store in tempdb, and the changed recorded gets a 14-byte versioning tag added on the end of it. The tag contains a pointer to the previous version of the record, plus a timestamp that can be used to determine what is the correct version of a record for a particular query to read. Again, hugely simplified, but it’s only the addition of the 14-bytes that we’re interested in.

So whenever a record changes when either of these isolation levels is in effect, it may expand by 14 bytes if there isn’t already a versioning tag for the record. What if there isn’t enough space for the extra 14 bytes on the index leaf page? That’s right, a page split will occur, causing fragmentation.

Big deal, you might think, as the record is changing anyway so if it was changing size anyway then a page split would probably have occurred. No – that logic only holds if the record change was to increase the size of a variable-length column. A versioning tag will be added even if a fixed-length column is updated!

That’s right – when versioning is in play, updates to fixed-length columns can cause a record to expand, potentially causing a page split and fragmentation. What's even more interesting is that a delete will also add the 14-byte tag, so a delete in a clustered index could cause a page split when versioning is in use!

The bottom line here is that enabling either form of snapshot isolation can lead to fragmentation suddenly starting to occur in clustered indexes where previously there was no possibility of fragmentation.

Fragmentation from Readable Secondaries

The last case I want to discuss is using readable secondaries, part of the availability group feature that was added in SQL Server 2012.

When you enable a readable secondary, all queries you do against the secondary replica are converted to using snapshot isolation under the covers. This prevents the queries from blocking the constant replaying of log records from the primary replica, as the recovery code acquires locks as it goes along.

To do this, there needs to be 14-byte versioning tags on records on the secondary replica. There’s a problem, because all replicas need to be identical, so that the log replay works. Well, not quite. The versioning tag contents aren’t relevant as they’re only used on the instance that created them. But the secondary replica can’t add versioning tags, making records longer, as that would change the physical layout of records on a page and break the log replaying. If the versioning tags were already there though, it could use the space without breaking anything.

So that’s exactly what happens. The Storage Engine makes sure that any needed versioning tags for the secondary replica are already there, by adding them on the primary replica!

As soon as a readable secondary replica of a database is created, any update to a record in the primary replica causes the record to have an empty 14-byte tag added, so that the 14-bytes is properly accounted for in all the log records. The tag isn’t used for anything (unless snapshot isolation is enabled on the primary replica itself), but the fact that it’s created causes the record to expand, and if the page is already full then…

Yes, enabling a readable secondary causes the same effect on the primary replica as if you enabled snapshot isolation on it – fragmentation.

Summary

Don’t think that because you’re avoiding using GUIDs as cluster keys and avoiding updating variable-length columns in your tables then your clustered indexes will be immune to fragmentation. As I’ve described above, there are other workload and environmental factors that can cause fragmentation problems in your clustered indexes that you need to be aware of.

Now don’t knee-jerk and think that you shouldn’t delete records, shouldn’t use snapshot isolation, and shouldn’t use readable secondaries. You just have to be aware that they can all cause fragmentation and know how to detect, remove, and mitigate it.

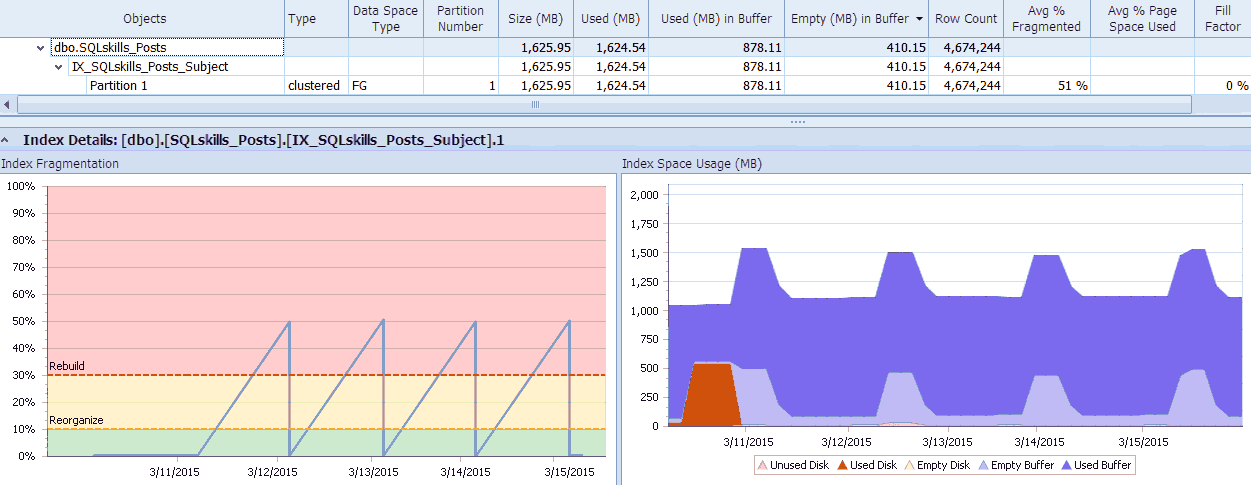

SQL Sentry has a cool tool, Fragmentation Manager, which you can use as an add-on to Performance Advisor to help figure out where fragmentation problems are and then address them. You may be surprised at the fragmentation you find when you check! As a quick example, here I can visually see – down to the individual partition level – how much fragmentation exists, how quickly it got that way, any patterns that exist, and the actual impact it has on wasted memory in the system:

SQL Sentry Fragmentation Manager data (click to enlarge)

SQL Sentry Fragmentation Manager data (click to enlarge)

In my next post, I’ll discuss more about fragmentation and how to mitigate it to make it less problematic.

Paul,

I had heard about the readable secondary situation, probably from you. We aren't using the secondary as readable but we are thinking of it. If we change the secondary to be readable from Read-intent only will this cause our databases to grow as the 14-byte versioning tag will get added? If it will what actions will make this happen? IE Index-rebuild, Update

Thanks

Chris

Any time you enable a replica for reading, the versioning tags start to get added on the primary. All the same DML operations that would cause them to be added under normal snapshot isolation apply. Index rebuild isn't DML, and doesn't change a record (only it's physical location), so no tag is generated for that case.

Based on your comment then Paul I can assume that the versioning tag would appear once we change from Read-intent only to Readable and our databases would swell up as existing rows get updated and any new rows would automatically have them added. We could finish up with a mix of unchanged rows with no tags and other rows with tags.

Thanks

Chris

Correct. Whether the database would increase in size or not because of the page splits depends on how much free space there is in the data files, but you'll start seeing fragmentation occurring.

Paul,

If we change to readable we would have a mix of versioned tagged rows and ones without. Could this be a problem? I suppose other than updating every row in all the databases we would have to live with this.

Thanks

Chris

(Reply nesting limit reached). No – no problem Chris.

The one thing that's always seemed strange is that sys.dm_db_index_physical_stats provides a single fragmentation percentage. It really should detail both logical fragmentation and page density separately. Any ideas what formula has been used to come up with the number that's shown in the DMV?

Greg – you're confused. The DMV has given both fragmentation numbers since 2005 when I implemented it. Avg_fragmentation_in_percent is the logical fragmentation and avg_page_space_used_in_percent is the page density. Books Online for the DMV explains this also. Thanks

Excellent thanks Paul. That makes sense. I had presumed that they must have morphed both into the first figure somehow. Every management script that I've ever seen (including Ola Hallengren's ones), only consider the first value ie: logical fragmentation, yet clearly page density should also be being considered (apart from when row sizes are bizarre).

I've just been doing a review of the index maintenance processing at my new workplace and encountered a few of these, along with a few other classic index maintenance mistakes (rebuilding stats with a sample rate straight after rebuilding the index for one).

The biggest issue I'm seeing here though is a misconception about fillfactor, which tags into the point about deletes to a table causing page fullness fragmentation. In this scenario, some large tables have been created with a fillfactor of 80 on the clustered index, which is clustered using an identity(1,1). This is also mimmicked in the rebuild operations on the table, ensuring that the table takes up 20% more space than required and hurting the performance of the server generally now due to an increased number of pages being read into the buffer. It's something that I've seen for several companies now, so think it's definitely something worth highlighing.

Paul, why is it that setting an index's fill factor lower than 100% for an index rebuild operation (in response to fragmentation) creates empty space in existing pages but not in newly created ones (I've read this)? I've found the best predictor of the future to be the past, and when an index repeatedly suffers from fragmentation, it's a pretty good bet that it will eventually have similar fragmentation on newly created pages. Would it not make sense to apply an index's fill factor to all of its pages, both existing ones and those to be created in the future?

Hi Lee – it's because fill factor is only applied during an index build or rebuild. Let's say you create a fill factor of 70% for an index. For new pages, once they fill up to 70% full, what should happen next? Do they then never get used again for new record insertions? Figuring out under what circumstances the page would subsequently be used instead of going to another new page would be too complex, so it's far easier to not apply fill factor to new pages.

Thanks for your response. I have one more question: It seems that there is an implied inverse relationship between fill factors and index fragmentation. I have developed an algorithm that uses an index's history of fill factor settings and the resultant fragmentation before the next index rebuild operation, to dynamically calculate the next fill factor for the next index rebuild. My experience is that this has seemed to work well. When the fill factor eventually settles on what appears to be an optimum fill factor, the fill factor calculation algorithm generates approximately the same fill factor recommendation for every index rebuild operation. But, I could be fooling myself. Would you consider what I'm doing to be a practical approach to estimating optimum fill factors or would you recommend I consider some other way of determining them?

No problem. That's a valid approach. If fill factor X results in fragmentation, lower the fill factor until you reach a good value. An alternative to lowering the fill factor is to increase the periodicity of your index maintenance, if having too low a fill factor results in memory pressure on your buffer pool.

Paul,

I know asked about this 2 years ago when you issued this blog post. We have AG's defined with Read-intent only under the readable Secondary but haven't started to use the secondary for that purpose. This is fro SQL 2016 SP1.

If I understand your comment;-

Any time you enable a replica for reading, the versioning tags start to get added on the primary

then we are already generating versioning tags on the Primary?

Please confirm.

Thanks

Chris

Correct. Doesn't matter if you're actually connecting to the readable-secondary or not, SQL Server has to provision the space on the primary just in case you *do* connect to the secondary.

Thank you so much Paul.

probably confirms why our databases are expanding quicker as well as the effect of adding query store to them for 2016.

Chris