Parameter Sniffing

Query parameterization promotes the reuse of cached execution plans, thereby avoiding unnecessary compilations, and reducing the number of ad-hoc queries in the plan cache.

These are all good things, provided the query being parameterized really ought to use the same cached execution plan for different parameter values. An execution plan that is efficient for one parameter value may not be a good choice for other possible parameter values.

When parameter sniffing is enabled (the default), SQL Server chooses an execution plan based on the particular parameter values that exist at compilation time. The implicit assumption is that parameterized statements are most commonly executed with the most common parameter values. This sounds reasonable enough (even obvious) and indeed it often works well.

A problem can occur when an automatic recompilation of the cached plan occurs. A recompilation may be triggered for all sorts of reasons, for example because an index used by the cached plan has been dropped (a correctness recompilation) or because statistical information has changed (an optimality recompile).

Whatever the exact cause of the plan recompilation, there is a chance that an atypical value is being passed as a parameter at the time the new plan is generated. This can result in a new cached plan (based on the sniffed atypical parameter value) that is not good for the majority of executions for which it will be reused.

It is not easy to predict when a particular execution plan will be recompiled (for example, because statistics have changed sufficiently) resulting in a situation where a good-quality reusable plan can be suddenly replaced by a quite different plan optimized for atypical parameter values.

One such scenario occurs when the atypical value is highly selective, resulting in a plan optimized for a small number of rows. Such plans will often use single-threaded execution, nested loops joins, and lookups. Serious performance issues can arise when this plan is reused for different parameter values that generate a much larger number of rows.

Disabling Parameter Sniffing

Parameter sniffing can be disabled using documented trace flag 4136. The trace flag is also supported for per-query use via the QUERYTRACEON query hint. Both apply from SQL Server 2005 Service Pack 4 onward (and slightly earlier if you apply cumulative updates to Service Pack 3).

Starting with SQL Server 2016, parameter sniffing can also be disabled at the database level, using the PARAMETER_SNIFFING argument to ALTER DATABASE SCOPED CONFIGURATION.

When parameter sniffing is disabled, SQL Server uses average distribution statistics to choose an execution plan.

This also sounds like a reasonable approach (and can help avoid the situation where the plan is optimized for an unusually selective parameter value), but it is not a perfect strategy either: A plan optimized for an ‘average’ value might well end up being seriously sub-optimal for the commonly-seen parameter values.

Consider an execution plan that contains memory-consuming operators like sorts and hashes. Because memory is reserved before query execution starts, a parameterized plan based on average distribution values can spill to tempdb for common parameter values that produce more data than the optimizer expected.

Memory reservations cannot usually grow during query execution, regardless of how much free memory the server may have. Certain applications do benefit from turning parameter sniffing off (see this archive post by the Dynamics AX Performance Team for an example).

For most workloads, disabling parameter sniffing entirely is the wrong solution, and may even be a disaster. Parameter sniffing is a heuristic optimization: It works better than using average values on most systems, most of the time.

Query Hints

SQL Server provides a range of query hints and other options to tune the behaviour of parameter sniffing:

- The

OPTIMIZE FOR (@parameter = value)query hint builds a reusable plan based on a specific value. OPTIMIZE FOR (@parameter UNKNOWN)uses average distribution statistics for a particular parameter.OPTIMIZE FOR UNKNOWNuses average distribution for all parameters (same effect as trace flag 4136).- The

WITH RECOMPILEstored procedure option compiles a fresh procedure plan for every execution. - The

OPTION (RECOMPILE)query hint compiles a fresh plan for an individual statement.

The old technique of “parameter hiding” (assigning procedure parameters to local variables, and referencing the variables instead) has the same effect as specifying OPTIMIZE FOR UNKNOWN. It can be useful on instances earlier than SQL Server 2008 (the OPTIMIZE FOR hint was new for 2008).

It could be argued that every parameterized statement should be checked for sensitivity to parameter values, and either left alone (if the default behaviour works well) or explicitly hinted using one of the options above.

This is rarely done in practice, partly because performing a comprehensive analysis for all possible parameter values can be time-consuming and requires quite advanced skills.

Most often, no such analysis is performed and parameter-sensitivity problems are addressed as and when they occur in production.

This lack of prior analysis is probably one main reason parameter sniffing has a poor reputation. It pays to be aware of the potential for problems to arise, and to perform at least a quick analysis on statements that are likely to cause performance problems when recompiled with an atypical parameter value.

What is a parameter?

Some would say that a SELECT statement referencing a local variable is a “parameterized statement” of sorts, but that is not the definition SQL Server uses.

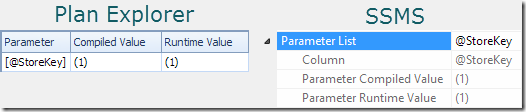

A reasonable indication that a statement uses parameters can be found by looking at the plan properties (see the Parameters tab in Sentry One Plan Explorer. Or click the query plan root node in SSMS, open the Properties window, and expand the Parameter List node):

The ‘compiled value’ shows the sniffed value of the parameter used to compile the cached plan. The ‘runtime value’ shows the value of the parameter on the particular execution captured in the plan.

Either of these properties may be blank or missing in different circumstances. If a query is not parameterized, the properties will simply all be missing.

Just because nothing is ever simple in SQL Server, there are situations where the parameter list can be populated, but the statement is still not parameterized. This can occur when SQL Server attempts simple parameterization (discussed later) but decides the attempt is “unsafe”. In that case, parameter markers will be present, but the execution plan is not in fact parameterized.

Sniffing is not just for Stored Procedures

Parameter sniffing also occurs when a batch is explicitly parameterized for reuse using sp_executesql.

For example:

EXECUTE sys.sp_executesql

N'

SELECT

P.ProductID,

P.Name,

TotalQty = SUM(TH.Quantity)

FROM Production.Product AS P

JOIN Production.TransactionHistory AS TH

ON TH.ProductID = P.ProductID

WHERE

P.Name LIKE @NameLike

GROUP BY

P.ProductID,

P.Name;

',

N'@NameLike nvarchar(50)',

@NameLike = N'K%';

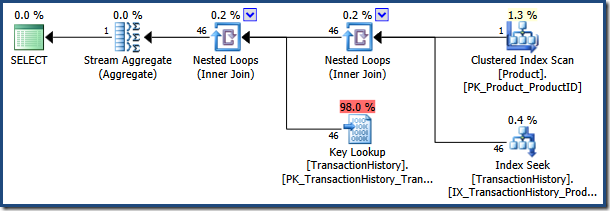

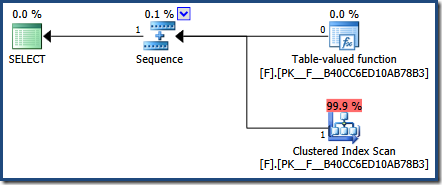

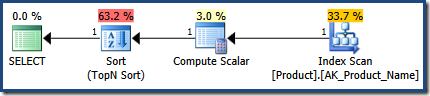

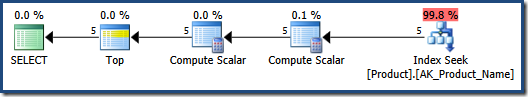

The optimizer chooses an execution plan based on the sniffed value of the @NameLike parameter. The parameter value “K%” is estimated to match very few rows in the Product table, so the optimizer chooses a nested loop join and key lookup strategy:

![]()

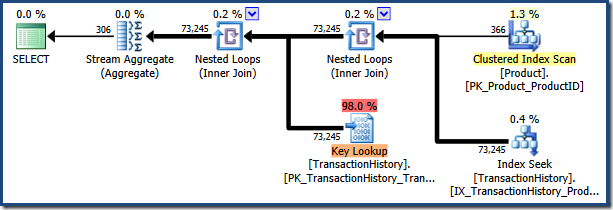

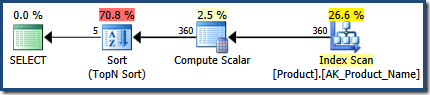

Executing the statement again with a parameter value of “[H-R]%” (which will match many more rows) reuses the cached parameterized plan:

EXECUTE sys.sp_executesql

N'

SELECT

P.ProductID,

P.Name,

TotalQty = SUM(TH.Quantity)

FROM Production.Product AS P

JOIN Production.TransactionHistory AS TH

ON TH.ProductID = P.ProductID

WHERE

P.Name LIKE @NameLike

GROUP BY

P.ProductID,

P.Name;

',

N'@NameLike nvarchar(50)',

@NameLike = N'[H-R]%';

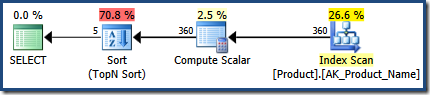

![]()

The AdventureWorks sample database is too small to make this a performance disaster, but this plan is certainly not optimal for the second parameter value.

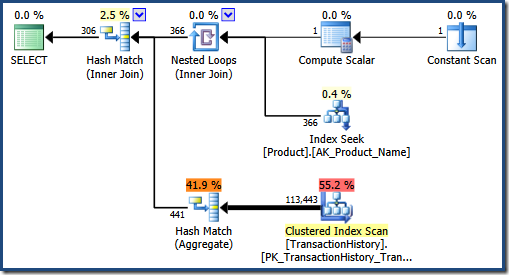

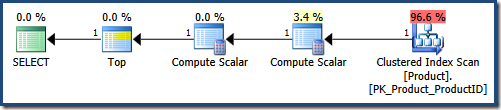

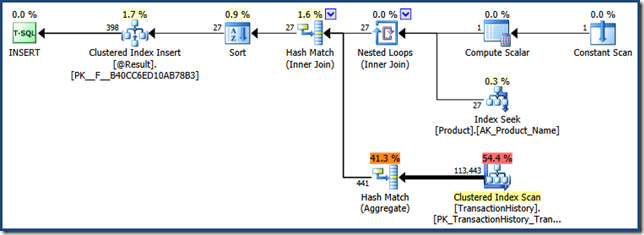

We can see the plan the optimizer would have chosen by clearing the plan cache and executing the second query again:

![]()

With a larger number of matches expected, the optimizer determines that a hash join and hash aggregation are better strategies.

T-SQL Functions

Parameter sniffing occurs with T-SQL functions as well, though the way execution plans are generated can make this more difficult to see.

There are good reasons to avoid T-SQL scalar and multi-statement functions in general, so for educational purposes only, here is a T-SQL multi-statement table-valued function version of our test query:

CREATE FUNCTION dbo.F

(@NameLike nvarchar(50))

RETURNS @Result TABLE

(

ProductID integer NOT NULL PRIMARY KEY,

Name nvarchar(50) NOT NULL,

TotalQty integer NOT NULL

)

WITH SCHEMABINDING

AS

BEGIN

INSERT @Result

SELECT

P.ProductID,

P.Name,

TotalQty = SUM(TH.Quantity)

FROM Production.Product AS P

JOIN Production.TransactionHistory AS TH

ON TH.ProductID = P.ProductID

WHERE

P.Name LIKE @NameLike

GROUP BY

P.ProductID,

P.Name;

RETURN;

END;

The following query uses the function to display information for product names starting with ‘K’:

SELECT

Result.ProductID,

Result.Name,

Result.TotalQty

FROM dbo.F(N'K%') AS Result;

Seeing parameter sniffing with an embedded function is more difficult because SQL Server does not return a separate post-execution (actual) query plan for each function invocation. The function could be called many times within a single statement, and users would not be impressed if SSMS tried to display a million function call plans for a single query.

As a result of this design decision, the actual plan returned by SQL Server for our test query is not very helpful:

Nevertheless, there are ways to see parameter sniffing in action with embedded functions. The method I have chosen to use here is to inspect the plan cache:

SELECT

DEQS.plan_generation_num,

DEQS.execution_count,

DEQS.last_logical_reads,

DEQS.last_elapsed_time,

DEQS.last_rows,

DEQP.query_plan

FROM sys.dm_exec_query_stats AS DEQS

CROSS APPLY sys.dm_exec_sql_text(DEQS.plan_handle) AS DEST

CROSS APPLY sys.dm_exec_query_plan(DEQS.plan_handle) AS DEQP

WHERE

DEST.objectid = OBJECT_ID(N'dbo.F', N'TF');

This result shows that the function plan has been executed once, at a cost of 201 logical reads with 2891 microseconds elapsed time, and the most recent execution returned one row. The XML plan representation returned shows that the parameter value was sniffed:

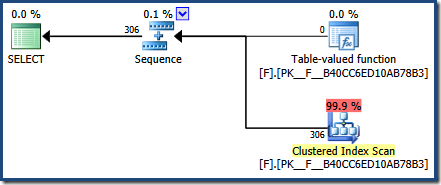

![]()

Now run the statement again, with a different parameter:

SELECT

Result.ProductID,

Result.Name,

Result.TotalQty

FROM dbo.F(N'[H-R]%') AS Result;

The post-execution plan shows that 306 rows were returned by the function:

The plan cache query shows the cached execution plan for the function has been reused (execution_count = 2):

It also shows a much higher number of logical reads, and a longer elapsed time compared with the previous run. This is consistent with reusing a nested loops and lookup plan, but to be completely sure, the post-execution function plan can be captured using Extended Events or the SQL Server Profiler tool:

![]()

Because parameter sniffing applies to functions, these modules can suffer from the same unexpected changes in performance commonly associated with stored procedures.

For example, the first time a function is referenced, a plan might be cached that does not use parallelism. Subsequent executions with parameter values that would benefit from parallelism (but reuse the cached serial plan) will show unexpectedly poor performance.

This issue can be tricky to identify because SQL Server does not return separate post-execution plans for function calls as we have seen. Using Extended Events or Profiler to routinely capture post-execution plans can be extremely resource-intensive, so it often makes sense to use that technique in a very targeted fashion. The difficulties around debugging function parameter-sensitivity issues mean it is even more worthwhile doing an analysis (and coding defensively) before the function hits production.

Parameter-sniffing works exactly the same way with T-SQL scalar user-defined functions (unless in-lined, on SQL Server 2019 onward). In-line table-valued functions do not generate a separate execution plan for each invocation, because (as the name says) these are in-lined into the calling query before compilation.

Beware Sniffed NULLs

Clear the plan cache and request an estimated (pre-execution) plan for the test query:

SELECT

Result.ProductID,

Result.Name,

Result.TotalQty

FROM dbo.F(N'K%') AS Result;

You will see two execution plans, the second of which is for the function call:

A limitation of parameter sniffing with embedded functions in estimated plans means the parameter value is sniffed as NULL (not “K%”):

![]()

In versions of SQL Server before 2012, this plan (optimized for a NULL parameter) is cached for reuse. This is unfortunate, because NULL is unlikely to be a representative parameter value, and it was certainly not the value specified in the query.

SQL Server 2012 (and later) does not cache plans resulting from an “estimated plan” request, though it will still display a function plan optimized for a NULL parameter value at compilation time.

Simple and Forced Parameterization

An ad-hoc T-SQL statement containing constant literal values can be parameterized by SQL Server, either because the query qualifies for simple parameterization or because the database option for forced parameterization is enabled (or a plan guide is used to the same effect).

A statement parameterized in this way is also subject to parameter sniffing. The following query qualifies for simple parameterization:

SELECT

A.AddressLine1,

A.City,

A.PostalCode

FROM Person.Address AS A

WHERE

A.AddressLine1 = N'Heidestieg Straße 8664';

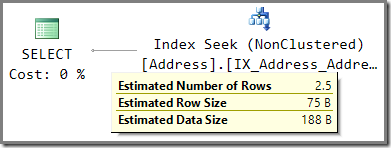

The estimated execution plan shows an estimate of 2.5 rows based on the sniffed parameter value:

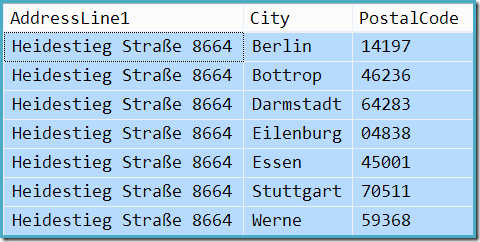

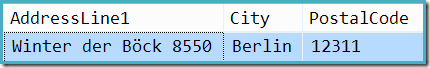

In fact, the query returns 7 rows (cardinality estimation is not perfect, even where values are sniffed):

At this point, you may be wondering where the evidence is that this query was parameterized, and the resulting parameter value sniffed. Run the query a second time with a different value:

SELECT

A.AddressLine1,

A.City,

A.PostalCode

FROM Person.Address AS A

WHERE

A.AddressLine1 = N'Winter der Böck 8550';

The query returns one row:

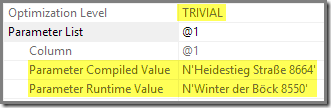

The execution plan shows the second execution reused the parameterized plan that was compiled using a sniffed value:

Parameterization and sniffing are separate activities

An ad-hoc statement can be parameterized by SQL Server without parameter values being sniffed.

To demonstrate, we can use trace flag 4136 to disable parameter sniffing for a batch that will be parameterized by the server:

DBCC FREEPROCCACHE;

DBCC TRACEON (4136);

GO

SELECT

A.AddressLine1,

A.City,

A.PostalCode

FROM Person.Address AS A

WHERE

A.AddressLine1 = N'Heidestieg Straße 8664';

GO

SELECT

A.AddressLine1,

A.City,

A.PostalCode

FROM Person.Address AS A

WHERE

A.AddressLine1 = N'Winter der Böck 8550';

GO

DBCC TRACEOFF (4136);

The script results in statements that are parameterized, but the parameter value is not sniffed for cardinality estimation purposes. To see this, we can inspect the plan cache:

WITH XMLNAMESPACES

(DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan')

SELECT

DECP.cacheobjtype,

DECP.objtype,

DECP.usecounts,

DECP.plan_handle,

parameterized_plan_handle =

DEQP.query_plan.value

(

'(//StmtSimple)[1]/@ParameterizedPlanHandle',

'NVARCHAR(100)'

)

FROM sys.dm_exec_cached_plans AS DECP

CROSS APPLY sys.dm_exec_sql_text(DECP.plan_handle) AS DEST

CROSS APPLY sys.dm_exec_query_plan(DECP.plan_handle) AS DEQP

WHERE

DEST.[text] LIKE N'%AddressLine1%'

AND DEST.[text] NOT LIKE N'%XMLNAMESPACES%';

The results show two cache entries for the ad-hoc queries, linked to the parameterized (prepared) query plan by the parameterized plan handle.

The parameterized plan is used twice:

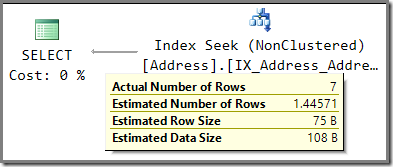

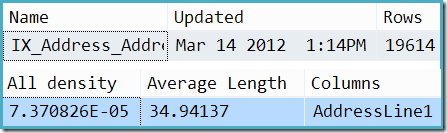

The execution plan shows a different cardinality estimate now that parameter sniffing is disabled:

Compare the estimate of 1.44571 rows with the 2.5 row estimate used when parameter sniffing was enabled.

With sniffing disabled, the estimate comes from average frequency information about the AddressLine1 column. An extract of the DBCC SHOW_STATISTICS output for the index in question shows how this number was calculated: Multiplying the number of rows in the table (19,614) by the density (7.370826e-5) gives the 1.44571 row estimate.

Side note: It is commonly believed that only integer comparisons using a unique index can qualify for simple parameterization. I deliberately chose this example (a string comparison using a non-unique index) to refute that.

WITH RECOMPILE and OPTION (RECOMPILE)

When a parameter-sensitivity problem is encountered, a common piece of advice on forums and Q&A sites is to “use recompile” (assuming the other tuning options presented earlier are unsuitable). Unfortunately, that advice is often misinterpreted to mean adding WITH RECOMPILE option to the stored procedure.

Using WITH RECOMPILE effectively returns us to SQL Server 2000 behaviour, where the entire stored procedure is recompiled on every execution.

A better alternative, on SQL Server 2005 and later, is to use the OPTION (RECOMPILE) query hint on just the statement that suffers from the parameter-sniffing problem. This query hint results in a recompilation of the problematic statement only. Execution plans for other statements within the stored procedure are cached and reused as normal.

Using WITH RECOMPILE also means the compiled plan for the stored procedure is not cached. As a result, no performance information is maintained in DMVs such as sys.dm_exec_query_stats.

Using the query hint instead means that a compiled plan can be cached, and performance information is available in the DMVs (though it is limited to the most recent execution, for the affected statement only).

For instances running at least SQL Server 2008 build 2746 (Service Pack 1 with Cumulative Update 5), using OPTION (RECOMPILE) has another significant advantage over WITH RECOMPILE: Only OPTION (RECOMPILE) enables the Parameter Embedding Optimization.

The Parameter Embedding Optimization

Sniffing parameter values allows the optimizer to use the parameter value to derive cardinality estimates. Both WITH RECOMPILE and OPTION (RECOMPILE) result in query plans with estimates calculated from the actual parameter values on each execution.

The Parameter Embedding Optimization takes this process a step further. Query parameters are replaced with literal constant values during query parsing.

The parser is capable of surprisingly complex simplifications, and subsequent query optimization may refine things even further. Consider the following stored procedure, which features the WITH RECOMPILE option:

CREATE PROCEDURE dbo.P

@NameLike nvarchar(50),

@Sort tinyint

WITH RECOMPILE

AS

BEGIN

SELECT TOP (5)

ProductID,

Name

FROM Production.Product

WHERE

@NameLike IS NULL

OR Name LIKE @NameLike

ORDER BY

CASE WHEN @Sort = 1 THEN ProductID ELSE NULL END ASC,

CASE WHEN @Sort = 2 THEN ProductID ELSE NULL END DESC,

CASE WHEN @Sort = 3 THEN Name ELSE NULL END ASC,

CASE WHEN @Sort = 4 THEN Name ELSE NULL END DESC;

END;

The procedure is executed twice, with the following parameter values:

EXECUTE dbo.P

@NameLike = N'K%',

@Sort = 1;

GO

EXECUTE dbo.P

@NameLike = N'[H-R]%',

@Sort = 4;

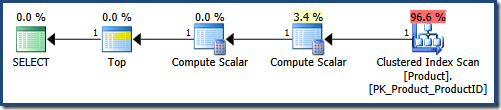

Because WITH RECOMPILE is used, the procedure is fully recompiled on every execution. The parameter values are sniffed each time, and used by the optimizer to calculate cardinality estimates.

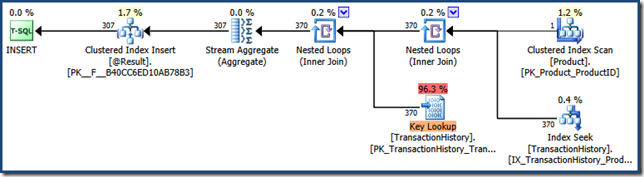

The plan for the first procedure execution is exactly correct, estimating 1 row:

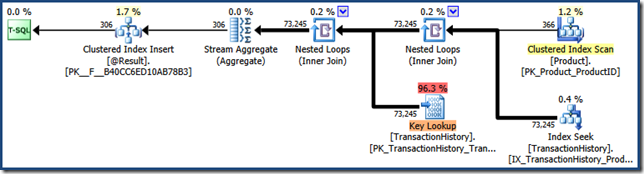

The second execution estimates 360 rows, very close to the 366 seen at run time:

Both plans use the same general execution strategy: Scan all rows in an index, applying the WHERE clause predicate as a residual; compute the CASE expression used in the ORDER BY clause; and perform a Top N Sort on the result of the CASE expression.

OPTION (RECOMPILE)

Now re-create the stored procedure using an OPTION (RECOMPILE) query hint instead of WITH RECOMPILE:

CREATE PROCEDURE dbo.P

@NameLike nvarchar(50),

@Sort tinyint

AS

BEGIN

SELECT TOP (5)

ProductID,

Name

FROM Production.Product

WHERE

@NameLike IS NULL

OR Name LIKE @NameLike

ORDER BY

CASE WHEN @Sort = 1 THEN ProductID ELSE NULL END ASC,

CASE WHEN @Sort = 2 THEN ProductID ELSE NULL END DESC,

CASE WHEN @Sort = 3 THEN Name ELSE NULL END ASC,

CASE WHEN @Sort = 4 THEN Name ELSE NULL END DESC

OPTION (RECOMPILE);

END;

Executing the stored procedure twice with the same parameter values as before produces dramatically different execution plans.

This is the first execution plan (with parameters requesting names starting with “K”, ordered by ProductID ascending):

The parser embeds the parameter values in the query text, resulting in the following intermediate form:

SELECT TOP (5)

ProductID,

Name

FROM Production.Product

WHERE

'K%' IS NULL

OR Name LIKE 'K%'

ORDER BY

CASE WHEN 1 = 1 THEN ProductID ELSE NULL END ASC,

CASE WHEN 1 = 2 THEN ProductID ELSE NULL END DESC,

CASE WHEN 1 = 3 THEN Name ELSE NULL END ASC,

CASE WHEN 1 = 4 THEN Name ELSE NULL END DESC;

The parser then goes further, removing contradictions and fully evaluating the CASE expressions. This results in:

SELECT TOP (5)

ProductID,

Name

FROM Production.Product

WHERE

Name LIKE 'K%'

ORDER BY

ProductID ASC,

NULL DESC,

NULL ASC,

NULL DESC;

You will get an error message if you try to submit that query directly to SQL Server, because ordering by a constant value is not allowed. Nevertheless, this is the form produced by the parser. It is allowed internally because it arose as a result of applying the parameter embedding optimization. The simplified query makes life a lot easier for the query optimizer:

The Clustered Index Scan applies the LIKE predicate as a residual. The Compute Scalar provides the constant NULL values. The Top returns the first 5 rows in the order provided by the Clustered Index (avoiding a sort). In a perfect world, the query optimizer would also remove the Compute Scalar that defines the NULLs, as they are not used during query execution.

The second execution follows exactly the same process, resulting in a query plan (for names beginning with letters “H” to “R”, ordered by Name descending) like this:

This plan features a Nonclustered Index Seek that covers the LIKE range, a residual LIKE predicate, the constant NULLs as before, and a Top (5). The query optimizer chooses to perform a BACKWARD range scan in the Index Seek to once again avoid sorting.

Compare the plan above with the one produced using WITH RECOMPILE, which cannot use the parameter embedding optimization:

This demo example might have been better implemented as a series of IF statements in the procedure (one for each combination of parameter values). This could provide similar query plan benefits, without incurring a statement compilation each time. In more complex scenarios, the statement-level recompile with parameter embedding provided by OPTION (RECOMPILE) can be an extremely useful optimization technique.

An Embedding Restriction

There is one scenario where using OPTION (RECOMPILE) will not result in the parameter embedding optimization being applied. If the statement assigns to a variable, parameter values are not embedded:

CREATE PROCEDURE dbo.P

@NameLike nvarchar(50),

@Sort tinyint

AS

BEGIN

DECLARE

@ProductID integer,

@Name nvarchar(50);

SELECT TOP (1)

@ProductID = ProductID,

@Name = Name

FROM Production.Product

WHERE

@NameLike IS NULL

OR Name LIKE @NameLike

ORDER BY

CASE WHEN @Sort = 1 THEN ProductID ELSE NULL END ASC,

CASE WHEN @Sort = 2 THEN ProductID ELSE NULL END DESC,

CASE WHEN @Sort = 3 THEN Name ELSE NULL END ASC,

CASE WHEN @Sort = 4 THEN Name ELSE NULL END DESC

OPTION (RECOMPILE);

END;

Because the SELECT statement now assigns to a variable, the query plans produced are the same as when WITH RECOMPILE was used. Parameter values are still sniffed and used by the query optimizer for cardinality estimation, and OPTION (RECOMPILE) still only compiles the single statement, only the benefit of parameter embedding is lost.

The problem that I've had with RECOMPILE (either variant) is that it requires updated statistics, and in cases where I've had the most issues with parameter sniffing, I also have issues with updated statistics. As a result, I've often ended up going back to OPTIMIZE FOR UNKNOWN. I've run into this on large tables in which relatively small sets of records are interested and processed. For example a table with millions of rows, into which batches of anywhere from 10 to 100,000 records get inserted at a time and then processed. The query plans for 10 records are different than for 100,000 records, but if you just insert the records and then immediately run a stored proc, the statistics aren't yet up to date, so when you RECOMPILE with the appropriate parameter value, that value isn't present in the statistics so it looks like 1 row is going to be returned, when in fact there are 100,000 rows, etc.

I've had this where the only way to get the truly correct execution plan is to do a full update of statistics, which ends up taking longer than using a bad query plan.

But I do also use WITH RECOMPILE on some stored procs too, ones with lots of different statements that all use the same parameters. But yeah, if you have a proc with many statements, which don't all share the same predicates, then OPTION(RECOMPILE) makes sense.

Thanks

If you're not already familiar with it, have a read of Fabiano Amorim's excellent Simple Talk article on the Ascending Key Problem. Generally speaking though, you're right. All the sniffing-tuning options are there for different circumstances, it's just a question of understanding which one to use and when.

Great link, thanks!

Paul,

Thanks for the (lengthy and comprehensive) write-up. I do have a clarification question:

You mentioned in the "T-SQL Functions" section that caching a serial plan for the function was one possible consequence of parameter sniffing. I have been under the impression that multi-statement functions (the example you used) wouldn't receive parallel plans under any circumstances because the T-SQL statements would by necessity always involve UPDATE/INSERT/DELETE on a table variable, and U/I/D on "tabvars" cannot be parallelized. [I've heard you can get around this by using a cursor (!), which might make sense for some query patterns… i.e. those that aggregate down to a few rows]

This wasn't the main thrust of your article, of course, and perhaps you were thinking of scalar functions for that paragraph, but if I'm just misinformed and there's a way to get parallel plans in msTVFs, I'd love to correct my understanding.

Thanks, :-)

Aaron

Thanks Paul, great article.

Hi Aaron,

Early drafts of this article did indeed use a scalar T-SQL function, which brought the cached parallel plan thing to mind. The same consideration does apply to multi-statement T-SQL functions, though it will be rarer because (as you say) statements that write to a table variable do not qualify for parallel execution. Other statements within the function may qualify though, for example (using the ContosoRetailDW sample database):

CREATE FUNCTION dbo.F (@StoreKey integer) RETURNS @T TABLE (StoreKey integer PRIMARY KEY, TotalSales money NULL) WITH SCHEMABINDING AS BEGIN DECLARE @TotalSales money = ( SELECT SUM(FOS.SalesAmount) FROM dbo.FactOnlineSales AS FOS WHERE FOS.StoreKey = @StoreKey ); INSERT @T (StoreKey, TotalSales) SELECT FOS.StoreKey, SUM(FOS.SalesAmount) FROM dbo.FactOnlineSales AS FOS GROUP BY FOS.StoreKey HAVING SUM(FOS.SalesAmount) <= @TotalSales RETURN; END;The first assignment statement in that function uses a parallel plan.

Cheers Craig, I trust all is well in Canterbury :)

Nice link address by the way.

Hello, Paul!

Thanks for pointing to an interesting nuance with function, NULL and showplan_xml caching, never paid attention to that. It is also interesting, how it works when option(recompile) is involved!

I also would like to suggest you to mention, that parameter embedding optimization is available for local variables too, though it might not be quite obvious until you look at the actual (not estimated) execution plan.

Thanks.

Dima.

Thanks. Yes, there is a lot more to say about PEO (local variables, filtered indexes and so on) but the article is already 3700 words! I'll think about it.

Well, maybe a series of articles then? =)

BTW, I recalled one more case when PEO might be not applied to some of the params. It is when parameter is affected by "optimize for" clause (but it still may be applied if parameter is not affected by this clause).

Example:

Hi Paul,

The parser magic in the example with the

ORDER BYandOPTION(RECOMPILE)is quite cool! That’s a new one to me. I was hoping to see the same effect when using an inline UDF instead of the procedure:CREATE FUNCTION dbo.F ( @NameLike nvarchar(50), @Sort tinyint ) RETURNS TABLE AS RETURN SELECT TOP (5) ProductID, Name FROM Production.Product WHERE @NameLike IS NULL OR Name LIKE @NameLike ORDER BY CASE WHEN @Sort = 1 THEN ProductID ELSE NULL END ASC, CASE WHEN @Sort = 2 THEN ProductID ELSE NULL END DESC, CASE WHEN @Sort = 3 THEN Name ELSE NULL END ASC, CASE WHEN @Sort = 4 THEN Name ELSE NULL END DESC; GO SELECT * FROM dbo.F(N'K%', 1);The parameter values are replaced by the constants because like you say in the article the inner query gets inlined. The parser seems to handle the filter part well, ignoring the inapplicable

@NameLike IS NULL, so you do get an Index Seek. But unfortunately, it doesn't do the same magic with theORDER BYlike it does when the query usesOPTION(RECOMPILE), so there’s still a sort operator in the plan. Of course you can addOPTION(RECOMPILE)to the query against the function, but I was hoping to get a similar effect without it. Oh well…Thanks for the nice coverage of the topic!

Cheers,

Itzik

Ah, of course! I'd completely forgotten about assignment operations with heavier-duty queries. Very nice, thanks! :-)

Hi Itzik,

Yes I agree it is very interesting. I can quite see how the parser would refuse to apply plan-reuse-affecting simplifications unless an explicit

OPTION(RECOMPILE)was specified, but I wonder whether it is that way just because anything else would be too complex to validate in the general case. In this example, it seems safe to me to apply the full set of simplifications because they are fully contained within the in-line function, but I admit I haven't given it the full thought it deserves (yet).Paul

Irrelevant:

Something is very wrong with the CSS in this page…

Text words overlap and text gets hidden at the right of the page. :(

I cannot read freely. :(

Oh.. It is fixed after a page refresh…

Web magic as usual…

:|

Yes that happens to me sometimes as well. Refresh works for me too.

Speaking of AX and parameter sniffing, The AX performance team eventually found out that disabling sniffing is far more trouble than it's worth, and implemented a more fine grained approach:

"More often than not the overall performance degraded when trace flag 4136 has been used"

http://blogs.msdn.com/b/axperf/archive/2013/07/29/overcoming-parameter-sniffing-issue-in-microsoft-dynamics-ax-2012-r2-cu6.aspx

Excellent post Paul! I want to know what is your general advice to avoid parameter sniffing?

We just hit this issue again with a Linq to SQL query that timed out (30s) (the query is exec sp_executesql N'SELECT bla bla Where column > @p1, N'p1 bigint' etc.)

We copy and pasted the query from the profiler to SQL Enterprise Manager to analyze and execute took only a few seconds on the same server/database.

We retried running the application and it timed out, running it EM takes a few second.

Finally dbcc freeprocdb fixed the issue (for now).

Is Enterprise Manager doing some magic that is using a different execution plan?

Hi Paul,

I need more info on "how to overcome parameter sniffing ?" I always thought that sp_executesql is best way to overcome it apart from "option recompile".Can you explain this when free or any other link will do.

Hi Paul.

You have already mention about "various way to overcome Parameter Sniffing"

I think my doubt is clear. sp_executesql is more about security concern.

sp_executesql can also reuse cache plan but is prone to Parameter Sniffing.

Hi Paul,

Thanks very much for these useful information. Is there any way to use conditional logic in parameterized prepared statements like we can do in SQL modules (stored procedures and/or functions) ? I would like to escape unnecessary recompilations using OPTION (RECOMPILE) at any level.

Other words how to suggest optimizer to use one index (which will be scanned) for one parameter value and other index (which will be used for seek) for all other parameter values ?

Problem is present because Entity Framework uses sp_executesql which will do force statement caching and though is prone to parameter sniffing.