In my last post, we saw how a query featuring a scalar aggregate could be transformed by the optimizer to a more efficient form. As a reminder, here’s the schema again:

CREATE TABLE dbo.T1 (pk integer PRIMARY KEY, c1 integer NOT NULL);

CREATE TABLE dbo.T2 (pk integer PRIMARY KEY, c1 integer NOT NULL);

CREATE TABLE dbo.T3 (pk integer PRIMARY KEY, c1 integer NOT NULL);

GO

INSERT dbo.T1 (pk, c1)

SELECT n, n

FROM dbo.Numbers AS N

WHERE n BETWEEN 1 AND 50000;

GO

INSERT dbo.T2 (pk, c1)

SELECT pk, c1 FROM dbo.T1;

GO

INSERT dbo.T3 (pk, c1)

SELECT pk, c1 FROM dbo.T1;

GO

CREATE INDEX nc1 ON dbo.T1 (c1);

CREATE INDEX nc1 ON dbo.T2 (c1);

CREATE INDEX nc1 ON dbo.T3 (c1);

GO

CREATE VIEW dbo.V1

AS

SELECT c1 FROM dbo.T1

UNION ALL

SELECT c1 FROM dbo.T2

UNION ALL

SELECT c1 FROM dbo.T3;

GO

-- The test query

SELECT MAX(c1)

FROM dbo.V1;

Plan Choices

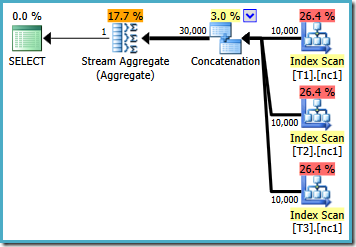

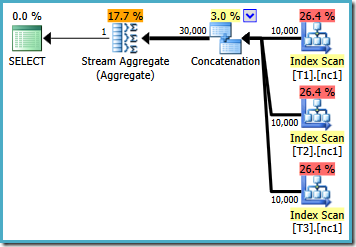

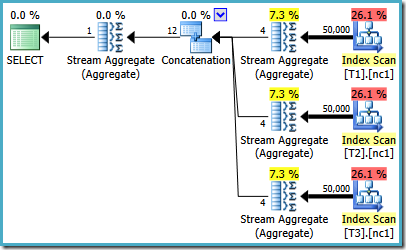

With 10,000 rows in each of the base tables, the optimizer comes up with a simple plan that computes the maximum by reading all 30,000 rows into an aggregate:

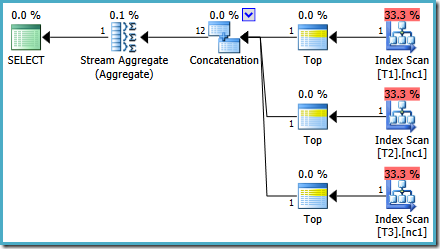

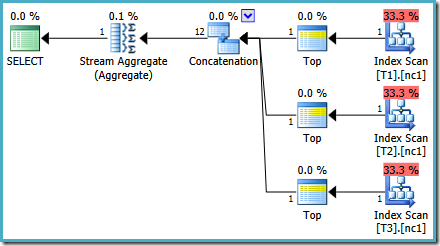

With 50,000 rows in each table, the optimizer spends a bit more time on the problem and finds a smarter plan. It reads just the top row (in descending order) from each index and then computes the maximum from just those 3 rows:

An Optimizer Bug

You might notice something a bit odd about that estimated plan. The Concatenation operator reads one row from three tables and somehow produces twelve rows! This is an error is caused by a bug in cardinality estimation that I reported in May 2011. It is still not fixed as of SQL Server 2014 CTP 1 (even if the new cardinality estimator is used) but I hope it will be addressed for the final release.

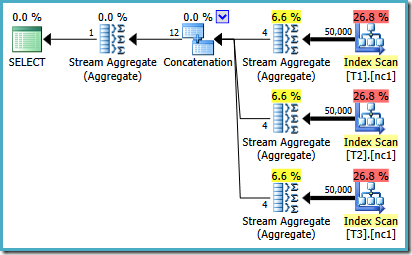

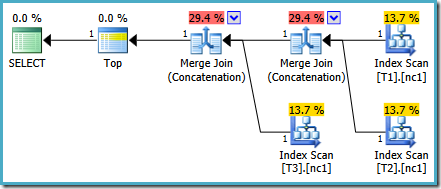

To see how the error arises, recall that one of the plan alternatives considered by the optimizer for the 50,000 row case has partial aggregates below the Concatenation operator:

It is the cardinality estimation for these partial MAX aggregates that is at fault. They estimate four rows where the result is guaranteed to be one row. You may see a number other than four – it depends on how many logical processors are available to the optimizer at the time the plan is compiled (see the bug link above for more details).

The optimizer later replaces the partial aggregates with Top (1) operators, which recalculate the cardinality estimate correctly. Sadly, the Concatenation operator still reflects the estimates for the replaced partial aggregates (3 * 4 = 12). As a result, we end up with a Concatenation that reads 3 rows and produces 12.

Using TOP instead of MAX

Looking again at the 50,000 row plan, it seems the biggest improvement found by the optimizer is to use Top (1) operators instead of reading all rows and calculating the maximum value using brute force. What happens if we try something similar and rewrite the query using Top explicitly?

SELECT TOP (1) c1

FROM dbo.V1

ORDER BY c1 DESC;

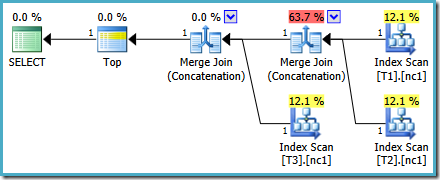

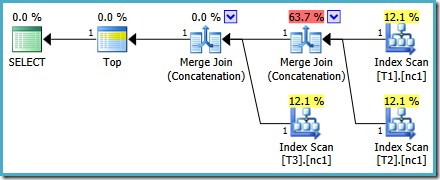

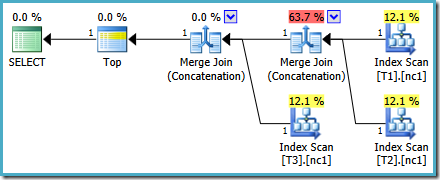

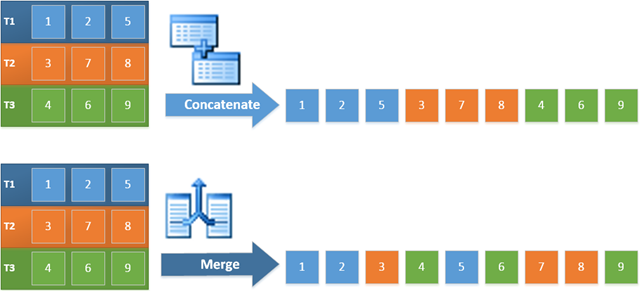

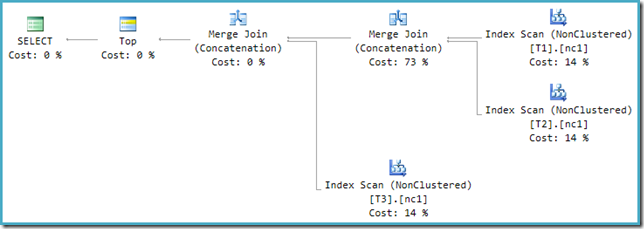

The execution plan for the new query is:

This plan is quite different from the one chosen by the optimizer for the MAX query. It features three ordered Index Scans, two Merge Joins running in Concatenation mode, and a single Top operator. This new query plan has some interesting features that are worth examining in a bit of detail.

Plan Analysis

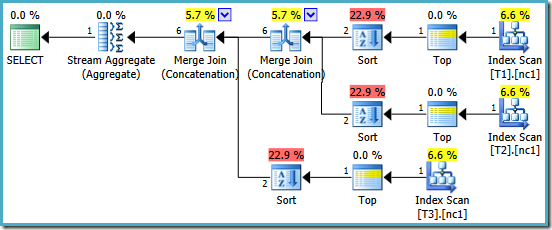

The first row (in descending index order) is read from each table’s nonclustered index, and a Merge Join operating in Concatenation mode is used. Although the Merge Join operator is not performing a join in the normal sense, this operator’s processing algorithm is easily adapted to concatenating its inputs instead of applying join criteria.

The benefit of using this operator in the new plan is that Merge Concatenation preserves the sort order across its inputs. By contrast, a regular Concatenation operator reads from its inputs in sequence. The diagram below illustrates the difference (click to expand):

The order-preserving behaviour of Merge Concatenation means that the first row produced by the leftmost Merge operator in the new plan is guaranteed to be the row with the highest value in column c1 across all three tables. More specifically, the plan operates as follows:

- One row is read from each table (in index descending order); and

- Each merge performs one test to see which of its input rows has the higher value

This seems a very efficient strategy, so it might seem odd that the optimizer’s MAX plan has an estimated cost of less than half of the new plan. To a large extent, the reason is that order-preserving Merge Concatenation is assumed to be more expensive than a simple Concatenation. The optimizer does not realize that each Merge can only ever see a maximum of one row, and over-estimates its cost as a result.

More Costing Issues

Strictly speaking we are not comparing apples with apples here, because the two plans are for different queries. Comparing costs like that is generally not a valid thing to do, though SSMS does exactly that by displaying cost percentages for different statements in a batch. But, I digress.

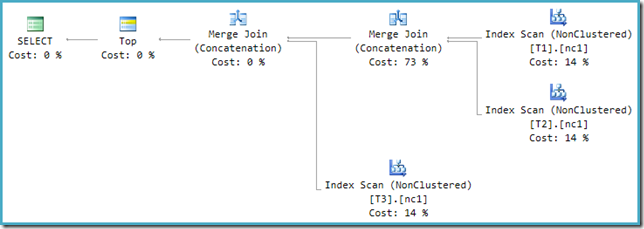

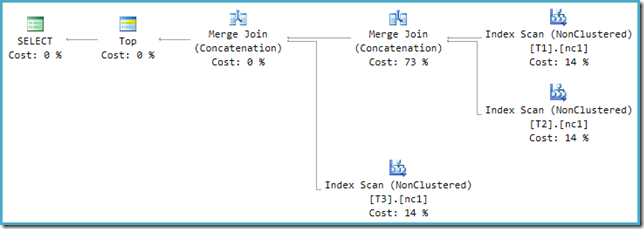

If you look at the new plan in SSMS instead of SQL Sentry Plan Explorer you will see something like this:

One of the Merge Join Concatenation operators has an estimated cost of 73% while the second one (operating on exactly the same number of rows) is shown as costing nothing at all. Another sign that something is wrong here is that the operator cost percentages in this plan do not sum to 100%.

Optimizer versus Execution Engine

The problem lies in an incompatibility between the optimizer and execution engine. In the optimizer, Union and Union All can have 2 or more inputs. In the execution engine, only the Concatenation operator is able to accept 2 or more inputs; Merge Join requires exactly two inputs, even when configured to perform a concatenation rather than a join.

To resolve this incompatibility, a post-optimization rewrite is applied to translate the optimizer’s output tree into a form the execution engine can handle. Where a Union or Union All with more than two inputs is implemented using Merge, a chain of operators is needed. With three inputs to the Union All in the present case, two Merge Unions are needed:

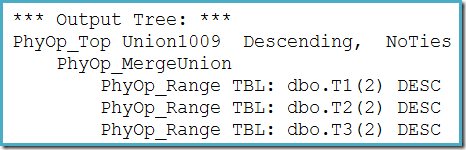

We can see the optimizer’s output tree (with three inputs to a physical merge union) using trace flag 8607:

An incomplete fix

Unfortunately, the post-optimization rewrite isn’t perfectly implemented. It makes a bit of a mess of the costing numbers. Rounding issues aside, the plan costs add up to 114% with the extra 14% coming from the input to the extra Merge Join Concatenation generated by the rewrite:

The rightmost Merge in this plan is the original operator in the optimizer’s output tree. It is assigned the full cost of the Union All operation. The other merge is added by the rewrite and receives a zero cost.

Whichever way we choose to look at it (and there are different issues that affect regular Concatenation) the numbers look odd. Plan Explorer does its best to work around the broken information in the XML plan by at least ensuring the numbers add up to 100%:

This particular costing issue is fixed in SQL Server 2014 CTP 1:

The costs of the Merge Concatenation are now evenly split between the two operators, and the percentages add up to 100%. Because the underlying XML has been fixed, SSMS also manages to show the same numbers.

Which Plan Is Better?

If we write the query using MAX, we have to rely on the optimizer choosing to perform the extra work needed to find an efficient plan. If the optimizer finds an apparently good enough plan early on, it can produce a relatively inefficient plan that reads every row from each of the base tables:

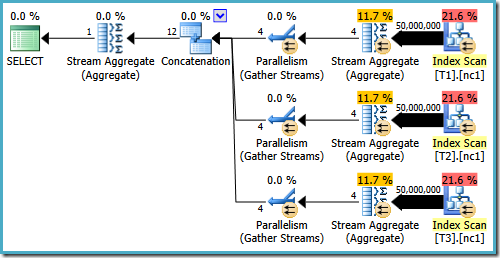

If you are running SQL Server 2008 or SQL Server 2008 R2, the optimizer will still choose an inefficient plan regardless of the number of rows in the base tables. The following plan was produced on SQL Server 2008 R2 with 50,000 rows:

Even with 50 million rows in each table, the 2008 and 2008 R2 optimizer just adds parallelism, it does not introduce the Top operators:

As mentioned in my previous post, trace flag 4199 is required to get SQL Server 2008 and 2008 R2 to produce the plan with Top operators. SQL Server 2005 and 2012 onward do not require the trace flag:

TOP with ORDER BY

Once we understand what is going on in the previous execution plans, we can make a conscious (and informed) choice to rewrite the query using an explicit TOP with ORDER BY:

SELECT TOP (1) c1

FROM dbo.V1

ORDER BY c1 DESC;

The resulting execution plan may have cost percentages that look odd in some versions of SQL Server, but the underlying plan is sound. The post-optimization rewrite that causes the numbers to look odd is applied after query optimization is complete, so we can be sure the optimizer’s plan selection was not affected by this issue.

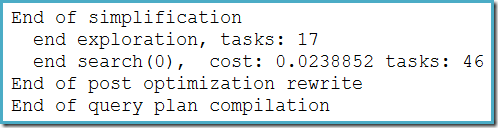

This plan does not change depending on the number of rows in the base table, and does not require any trace flags to generate. A small extra advantage is that this plan is found by the optimizer during the first phase of cost-based optimization (search 0):

The best plan selected by the optimizer for the MAX query required running two stages of cost-based optimization (search 0 and search 1).

There is a small semantic difference between the TOP query and the original MAX form that I should mention. If none of the tables contain a row, the original query would produce a single NULL result. The replacement TOP (1) query produces no output at all in the same circumstances. This difference is not often important in real-world queries, but it is something to be aware of. We can replicate the behaviour of TOP using MAX in SQL Server 2008 onward by adding an empty set GROUP BY:

SELECT MAX(c1)

FROM dbo.V1

GROUP BY ();

This change does not affect the execution plans generated for the MAX query in a way that is visible to end users.

MAX with Merge Concatenation

Given the success of Merge Join Concatenation in the TOP (1) execution plan, it is natural to wonder whether the same optimal plan could be generated for the original MAX query if we force the optimizer to use Merge Concatenation instead of regular Concatenation for the UNION ALL operation.

There is a query hint for this purpose – MERGE UNION – but sadly it only works correctly in SQL Server 2012 onward. In prior versions, the UNION hint only affects UNION queries, not UNION ALL. In SQL Server 2012 onward, we can try this:

SELECT MAX(c1)

FROM dbo.V1

OPTION (MERGE UNION)

We are rewarded with a plan that features Merge Concatenation. Unfortunately, it’s not quite everything we might have hoped for:

The interesting operators in this plan are the sorts. Notice the 1 row input cardinality estimation, and the 4 row estimation on the output. The cause should be familiar to you by now: it is the same partial aggregate cardinality estimation error we discussed earlier.

The presence of the sorts reveals one more issue with the partial aggregates. Not only do they produce an incorrect cardinality estimate, they also fail to preserve the index ordering that would make sorting unnecessary (Merge Concatenation requires sorted inputs). The partial aggregates are scalar MAX aggregates, guaranteed to produce one row so the issue of ordering ought to be moot anyway (there is only one way to sort one row!)

This is a shame, because without the sorts this would be a decent execution plan. If the partial aggregates were implemented properly, and the MAX written with a GROUP BY () clause, we might even hope that the optimizer could spot that the three Tops and final Stream Aggregate could be replaced by a single final Top operator, giving exactly the same plan as the explicit TOP (1) query. The optimizer does not contain that transformation today, and I don’t suppose it would be useful enough often enough to make its inclusion worthwhile in future.

Final Words

Using TOP will not always be preferable to MIN or MAX. In some cases it will produce a significantly less optimal plan. The point of this post is that understanding the transformations applied by the optimizer can suggest ways to rewrite the original query that may turn out to be helpful.

hi Paul,

Could you explain why Max() and Top (1) works for indexed views, but not for partitioned tables?

We've had to run a spoc that does this:

SELECT @MaxIDPartitionTable = ISNULL(MAX(IDPartitionedTable), 0) FROM ( SELECT * FROM (SELECT partition_number PartitionNumber FROM sys.partitions WHERE object_id = object_id('fct.VMP10MinData') and index_id = 1 ) T1 CROSS APPLY ( SELECT ISNULL(MAX(VMP10MinDataID),0) AS IDPartitionedTable FROM fct.VMP10MinData s WHERE $PARTITION.PF_MonthlyBySmalldatetime(s.TTimeStamp) = PartitionNumber ) AS o ) AS T2instead of

SELECT MAX(VMP10MinDataID) FROM fct.VMP10MinData VMD

on a largish table (5 billion rows) that is partitioned by TTimeStamp, when we only want to know how far up the watermark is.

Is this a bug with SQL Server?

Best regards,

Henrik Staun Poulsen

Hi Henrik,

It's a long-standing optimizer limitation. It must be tough to implement for some reason, because we (the wider community) have been making a lot of noise about this problem for years and years, but it still hasn't been addressed.

Paul

hi Paul,

Thank you very much for your reply.

I hope Microsoft gets this bug fixed.

Henrik