In several of my posts over the last year I’ve used the theme of people seeing a particular wait type and then reacting in a “knee-jerk” fashion to the wait being there. Typically this means following some poor Internet advice and taking a drastic, inappropriate action or jumping to a conclusion about what the root cause of the issue is and then wasting time and effort on a wild-goose chase.

One of the wait types where knee-jerk reactions are strongest, and where some of the poorest advice exists, is the CXPACKET wait. It’s also the wait type that is most commonly the top wait on people’s servers (according to my two big wait types surveys from 2010 and 2014 – see here for details), so I’m going to cover it in this post.

What does the CXPACKET wait type mean?

The simplest explanation is that CXPACKET means you’ve got queries running in parallel and you will *always* see CXPACKET waits for a parallel query. CXPACKET waits do NOT mean you have problematic parallelism – you need to dig deeper to determine that.

As an example of a parallel operator, consider the Repartition Streams operator, which has the following icon in graphical query plans:

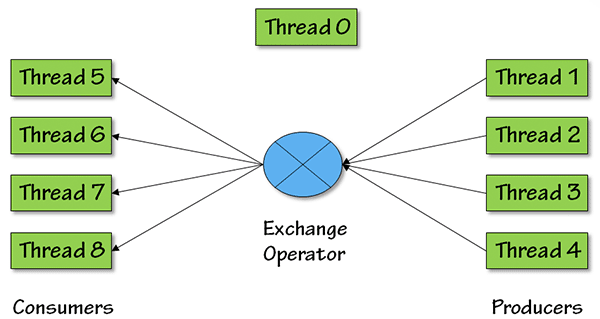

And here’s a picture that shows what’s going on in terms of parallel threads for this operator, with degree-of-parallelism (DOP) equal to 4:

For DOP = 4, there will be four producer threads, pulling data from earlier in the query plan, the data then goes back out to the rest of the query plan through four consumer threads.

You can see the various threads in a parallel operator that are waiting for a resource using the sys.dm_os_waiting_tasks DMV, in the exec_context_id column (this post has my script for doing this).

There is always a 'control' thread for any parallel plan, which by historical accident is always thread ID 0. The control thread always registers a CXPACKET wait, with the duration equal to the length of time the plan takes to execute. Paul White has a great explanation of threads in parallel plans here.

The only time non-control threads will register CXPACKET waits is if they complete before the other threads in the operator. This can happen if one of the threads gets stuck waiting for a resource for a long time, so look to see what the wait type is of the thread not showing CXPACKET (using my script above) and troubleshoot appropriately. This can also happen because of a skewed work distribution among the threads, and I’ll go into more depth on that case in my next post here (it’s caused by out-of-date statistics and other cardinality estimation problems).

Note that in SQL Server 2016 SP2 and SQL Server 2017 RTM CU3, consumer threads no longer register CXPACKET waits. They register CXCONSUMER waits, which are benign and can be ignored. This is to reduce the number of CXPACKET waits being generated, and the remaining ones are more likely to be actionable.

Unexpected parallelism?

Given that CXPACKET simply means you’ve got parallelism happening, the first thing to look at is whether you expect parallelism for the query that’s using it. My query will give you the query plan node ID where the parallelism is happening (it pulls out the node ID from the XML query plan if the wait type of the thread is CXPACKET) so look for that node ID and determine whether the parallelism makes sense.

One of the common cases of unexpected parallelism is when a table scan happens where you’re expecting a smaller index seek or scan. You’ll either see this in the query plan or you’ll see lots of PAGEIOLATCH_SH waits (discussed in detail here) along with the CXPACKET waits (a classic wait statistics pattern to look out for). There are a variety of causes of unexpected table scans, including:

- Missing nonclustered index so a table scan is the only alternative

- Out-of-date statistics so the Query Optimizer thinks a table scan is the best data access method to use

- An implicit conversion, because of a data type mismatch between a table column and a variable or parameter, which means a nonclustered index cannot be used

- Arithmetic being performed on a table column instead of a variable or parameter, which means a nonclustered index cannot be used

In all these cases, the solution is dictated by what you find the root cause to be.

But what if there’s no obvious root case and the query is just deemed expensive enough to warrant a parallel plan?

Preventing parallelism

Among other things, the Query Optimizer decides to produce a parallel query plan if the serial plan has a higher cost than the cost threshold for parallelism, an sp_configure setting for the instance. The cost threshold for parallelism (or CTFP) is set to five by default, which means that a plan doesn’t have to be very expensive to trigger the creation of a parallel plan.

One of the easiest ways to prevent unwanted parallelism is to increase the CTFP to a much higher number, with the higher you set it, the less likely parallel plans will be created. Some people advocate setting CTFP to somewhere between 25 and 50, but as with all tweakable settings, it’s best to test various values and see what works best for your environment. If you’d like a bit more of a programmatic method to help picking a good CTFP value, Jonathan wrote a blog post showing a query to analyze the plan cache and produce a suggested value for CTFP. As examples, we have one client with CTFP set to 200, and another set to the maximum – 32767 – as a way of forcibly preventing any parallelism whatsoever.

You might wonder why the second client had to use CTFP as a sledgehammer method for preventing parallelism when you’d think they could simply set the server ‘max degree of parallelism’ (or MAXDOP) to 1. Well, anyone with any permission level can specify a query MAXDOP hint and override the server MAXDOP setting, but CTFP cannot be overridden.

And that’s another method of limiting parallelism – setting a MAXDOP hint on the query you don’t want to go parallel.

You could also lower the server MAXDOP setting, but that’s a drastic solution as it can prevent everything from using parallelism. It’s common nowadays for servers to have mixed workloads, for instance with some OLTP queries and some reporting queries. If you lower the server MAXDOP, you’re going to hobble the performance of the reporting queries.

A better solution when there’s a mixed workload would be to use CTFP as I described above or to utilize Resource Governor (which is Enterprise-only I’m afraid). You can use Resource Governor to separate the workloads into workload groups, and then set a MAX_DOP (the underscore isn’t a typo) for each workload group. And the good thing about using Resource Governor is that the MAX_DOP cannot be overridden by a MAXDOP query hint.

Summary

Don't fall into the trap of thinking that CXPACKET waits automatically mean you’ve got bad parallelism happening, and certainly don’t follow some of the Internet advice I’ve seen of slamming the server by setting MAXDOP to 1. Take the time to investigate why you’re seeing CXPACKET waits and whether it’s something to be addressed or just an artifact of a workload that’s running correctly.

As far as general wait statistics are concerned, you can find more information about using them for performance troubleshooting in:

- My SQLskills blog post series, starting with Wait statistics, or please tell me where it hurts

- My Wait Types and Latch Classes library here

- My Pluralsight online training course SQL Server: Performance Troubleshooting Using Wait Statistics

- SQL Sentry Performance Advisor

In the next article in the series, I'll discuss skewed parallelism and give you a simple way to see it happening. Until then, happy troubleshooting!

Just an awesome post. There's so much "advice" and misinformation when the word MAXDOP is used. I hate the fact that SharePoint's best practice is to set mdop to 1. That works great for SP because they hint a large portion of their code but when companies have 10 other databases on their SP instance that mdop to 1 is a killer.

Great post! I do have one question about CTFP. Does it represents cost of query or as TN defines "….an estimated elapsed time in seconds required to run the serial plan on a specific hardware configuration."?

Can you explain please?

They're the same thing. (And thanks!)

In relation to DOP, within a query plan your explanation suggests that once DOP is calculated it is fixed for the entire plan.

That is let's say DOP based on available threads etc. gets calculated at 4, does that mean all operators capable of parallelism will go parallel with 4 theads regardless of the operator cost? Or will some operators go more parallel than others with some not going parallel at all?

Also to add to my confusion you reference jonathan"s blog whose query pulls operator costs from already parallel plans, but isn't this largely irrelevant given it is the serial cost that dictates CTFP not the operator cost?

Hopefully you can clarify my confusion.

Once the DOP is fixed, operators can use up to (2 x DOP) + 1 threads during plan execution. DOP is set for each batch. Nope – using the costs from the parallel plan is just as good a way to set CTFP.

Thanks for the reply.

Does the 2*DOP + 1 come from Producers and Consumers, and you also say "up to" implying some operators go less parallel, based on cost I assume?

In terms of the parallel cost, I don't think that is correct see this post for example http://www.brentozar.com/archive/2014/11/sql-server-cost-threshold-for-parallelism/, to quote the relevant section:

"Behind the scenes, the optimizer is considering many possible plans. The Cost Threshold for Parallelism setting comes into play during that process and uses costs [namely serial cost] that you don’t see in the final plan."

It depends on the operator how many threads it will need. A simple scan might just use DOP + 1.

For the CTFP, Brent's saying the same as me – CTFP is based on the cost of a serial plan as to whether the optimizer produces a parallel plan or not.

I and Jon are saying that you can tweak CTFP based on the costs from the parallel plan and it's good enough. You really have no choice, as there aren't any other costs you can get from plans in the plan cache that have already gone parallel.

Thanks Paul

Thanks for the post.

Is this the right way to do? – cxpacket is one of top waits on the server.

i determine, which queries face this wait type.

Then i run them several times with option (MAXDOP 1,2,4,8……etc) and set statistics time on.

To see wich degree of parallelism will work fastest. (Wa are talking about situation, when there is no table scan)

as i go from 1 to 8 CPU time increases and elapsed time decreases.

Should i use the sum of these two values to determine the best result?

It depends what you're trying to do. You need to pick the DOP that lets your overall workload perform best, but that's putting it very, very simplistically. Sounds like you need a higher DOP (without having any other info apart from what you've provided). No – don't sum them – they're different things. For a CPU-bound query, which it sounds like yours is, CPU time (the sum of all the time used by all processor cores) will increase as you allow more processor cores to be used. If that makes elapsed time decrease (which it will commonly do), and that helps the overall workload, that's a good thing. And of course, make sure that the query plan is actually what you'd expect for the T-SQL you're executing – just because it's running in parallel doesn't mean that a parallel plan is the most optimal.

Hi Paul,

In my environment; MAXDOP was set to 4 and the 16vCPU are there. My question is that when i am firing a insert statement in a database it is taking total 21 threads. As per the baove explanation the total no of thread should be 9(2*DOP+1).

Could you please explian this.

That's the maximum number of threads per operator, not per plan. Depends on the number of parallel zones and other things too.

Could the origin of the parallelism be the fact that my database is partitioned into 8 files, across 4 disks, and that my tempdb database is partitioned across 8 files in another disk?

No – the query optimizer knows nothing about the storage layout when compiling the query plan.

As usually great article Paul, it helped me a lot.

I just have one question or an advice for the below situation:

Physical Box, 512GB RAM, 80 Cores grouped by 10 in 8 NUMA nodes.

The SQL Server is using in total 40 Cores from only 4 NUMA nodes.

Each 5 cores from a NUMA node is assigned essentially to "Processor Affinity" and "I/O Affinity"(we have the Affinity off for Processor and I/O). I have set the MAXDOP value to 5 to limit the parallelism to only one NUMA node – but do you think this is kind of the best starting value for MAXDOP in this situation.

@I have read somewhere that it is recommended to limit the parallelism to the number of cores in one NUMA node. But since from one NUMA node I only have 5 cores related to Processor affinity – I have set the MAXDOP to 5. Should've started with some higher value or not?

What will be your advice in this situation?

Thanks in advance for your reply

Iliyan Rashev

That's what I'd start at, but you have a very unusual configuration (with half the processors set to I/O affinity only) so I'd want to investigate why you've configured things that way and probably change it. Not something we can do over comments on a blog post I'm afraid.

thanks for the great article Paul.I need help on one scenario in our project. We have our Dev & Test servers with 112 GB RAM and 16 vCPUs configuration. We have our Dev server with max DOP as 8 and Test has 2 . When we run one critical functionality which process 33k data is taking more time in dev than test. When we change the MaxDOP in dev to 2 it is running fast. Please let me know to understand this scenario. More maxdop should have good performance right? Please help. this query which is taking more time is having all temp tables and no query plan.

Hi Manoj – no, it depends on the query plan and the operations being done whether higher DOP will be faster than lower DOP – there's no guarantee. It sounds like this query may not benefit from parallelism so forcing a lower DOP or increasing cost threshold for parallelism may be called for. Very hard to give detailed advice without looking at the query in more depth I'm afraid.

HI Paul,

Thank you for the reply. I have tried lowering the Max DOP using "Option (MaxDoP 2)" and the query worked well. Do you see any issue going with this way. Just trying to know more about this method.

Nope – that's a good way to do it for individual queries.