A database snapshot provides a read-only view of a SQL Server database that is transactionally consistent with the state of the source database at the time that the database snapshot was created. A number of reasons exist for using database snapshots, for example reporting against a mirrored database, and DBCC CHECKDB also uses internal database snapshots from SQL Server 2005 onwards.

Database snapshots also provide the ability to roll back all of the changes that have occurred to a database since the database snapshot was created, but with a nasty side effect on the database’s transaction log that Paul blogged about here.

One of the things that isn’t commonly considered or shown around database snapshots is the performance impact that the snapshot has for the database write workload. The SQLCAT team published a whitepaper for SQL Server 2005, Database Snapshot Performance Considerations under I/O-Intensive Workloads, that investigated the performance impacts of database snapshots, and after working with a client recently where database snapshots resulted in performance problems, I wanted to test SQL Server 2012 and determine if there were any changes to the overhead of database snapshots seven years and three SQL Server releases later.

Test Configuration

To perform the testing of the effect of database snapshots on write workload performance, I used our Dell R720 performing a 1,000,000 row insert into a new table in an enlarged version of the AdventureWorks2012 database. The AdventureWorks2012 database was created with 8 data files spread across two Fusion-io ioDrive Duo 640GB SSDs that were each setup as two individual 320GB disks in Windows, presenting a total of 4 disks. To simplify explaining the configuration, the storage layout used for these tests is shown in the table below:

| Disk | Configuration | Usage |

|---|---|---|

| K | 15K RAID 5 – 6 Disk | Snapshot |

| L | Fusion-io Card2 – Side B | Log File |

| M | Fusion-io Card2 – Side A | 4 Data Files |

| N | Fusion-io Card1 – Side A | 4 Data Files |

| Q | Fusion-io Card1 – Side B | Tempdb |

| R | LSI Nytro BLP4-1600 | Snapshot |

Table 1 – Server Disk Layout and Usage

The storage for the database snapshot was either a RAID-5 array of six 15k RPM SAS drives connected through iSCSI, or an LSI Nytro BLP4-1600 PCI-E card.

The test workload used the following SELECT INTO statement to generate a 1,000,000 row table which was dropped between each of the tests.

SELECT TOP 1000000 *

INTO tmp_SalesOrderHeader

FROM Sales.SalesOrderHeaderEnlarged;

The tests were timed to measure the duration without a database snapshot, and then the duration with a database snapshot created on each of the storage devices to measure the performance degradation caused by writing page changes to the database snapshot sparse file. The tests were also run using two database snapshots on the same storage device to ascertain what the overhead of having additional database snapshots might be for the duplicated write operations that potentially have to be performed.

Results

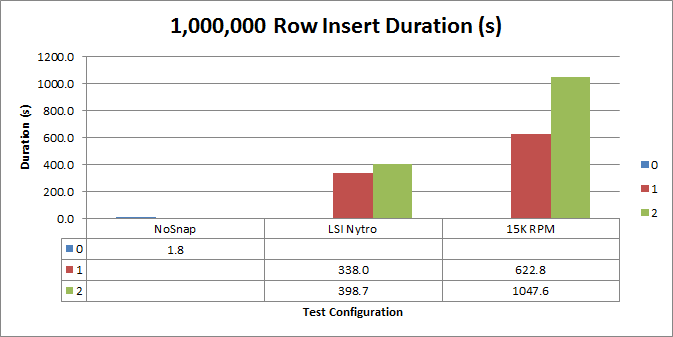

Each test configuration was executed ten times and the average duration, converted from milliseconds to seconds for easier viewing, is shown in Figure 1, for 0, 1, or 2 database snapshots.

The baseline tests with no database snapshots executed on average in 1.8 seconds, and even when the storage for the database snapshot files was equivalent in performance, the existence of a single database snapshot imposed overhead to the write performance for the database. The overhead of the second database snapshot is lower than having the first database snapshot in each of the tests, though the 15K RPM disks had a much more difficult time keeping up with the added write workload from the second database snapshot for the database.

The performance on the LSI Nytro card initially surprised me since it was also a PCI-X SSD. However, after discussing the results with Glenn, he mentioned that the Sandforce controller compression and slower write performance for random, low-compression data from his past tests on the drive. However, it still easily outclassed the spinning media.

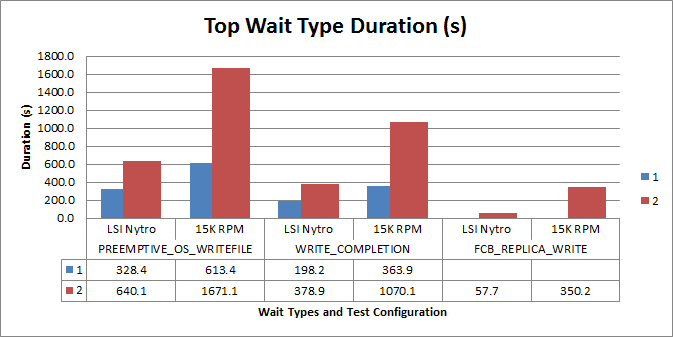

Before running the tests I was interested to know what wait types would occur during the tests, so as a part of the test configuration, I cleared sys.dm_os_wait_stats with DBCC SQLPERF and captured the output from the DMV for each test run into a table. The top waits for the single snapshot configurations were PREEMPTIVE_OS_WRITEFILE and WRITE_COMPLETION as shown in Figure 2, for 1 or 2 database snapshots.

One of the interesting items was the addition of FCB_REPLICA_WRITE waits when a second snapshot was created. After reviewing the single database snapshot wait results, and re-running a couple of rounds of tests, this wait never occurs for a single snapshot and only occurs when more than one snapshot exists and is associated with copying the pages into the database snapshot files. The wait times for the PREEMPTIVE_OS_WRITEFILE waits trend closely with the increases in execution duration for each of the configurations.

With these results in mind, when reviewing a system using the Waits and Queues methodology, seeing this wait type with higher values might be worth investigating whether or not database snapshots exist for any of the databases on the server.

Conclusion

When using database snapshots, even in SQL Server 2012, there is an overhead associated with the additional writes required for copying data pages to the sparse files for the snapshots. If using database snapshots is a part of your general configuration, I would really be careful about planning the I/O subsystem to meet the workload requirements for concurrent I/O activity to the database snapshot sparse files.

From the results of these tests I’d even consider placing database snapshots on SSDs ahead of tempdb for the write performance, and also for lower performance impact from the snapshot maintenance.

As always, your mileage may vary, and you will certainly want to test the performance of any configuration before placing it into production usage.

"the existence of a single database snapshot imposed overhead to the write performance "

This is an understatement given that it imposed an overhead of … 200x. Why 200x? I'd really like to investigate this extreme result to see what it means.

I currently use snapshots in my project, which is a 24×7 database used in a large financial services organisation. Functionally, the snapshot works well enabling us to maintain consistency even during our overnight batch. Non-functionally, the snapshot has been a total nightmare.

We did a lot of analysis of snapshot performance and for certain operations you find that the number of IOPS done with the snapshot present is 8x that of the non-snapshot case. The key reason for this, I believe, is that the snapshot database does not have the equivalent of a checkpoint process which means that every change results in a long stream of I/O making it harder to optimise into single writes. Also rather than allocating extents (8 pages) it writes individual pages to the sparse file. To get a feel for the extra IOPS, just try something like an UPDATE on a large table in the AdventureWorks database while monitoring various disk performance counters. You need to look very carefully at things like padding since you are particularly badly affected by page splits and so on. We ended up using partition switching extensively to help optimise data loading even in scenarios where you would not normally require it.

Having an I/O subsystem capable of very high IOPS is key to getting any kind of decent performance from snapshots so I complete agree that putting the snapshot on Fusion I/O or SSD is very good advice. SQL 2012 does offer one option to reduce the impact for some use cases – using Always On with an asynchronous replica and creating the snapshot on the replica allows your write operations on the primary to proceed at full speed and this is something we are looking to roll out.

Overall I wish more people would complain to MIcrosoft about the poor implementation of snapshots – with a bit more engineering this could I believe be a killer feature but as it stands today it creates as many problems as it solves.

Do you know if these write times are similar for read committed snapshot isolation as it uses a similar method for maintaining the snapshot in tempdb?

Hey Dandy,

RCSI does not use anything similar to a database snapshot and does not typically degrade performance significantly. The row versioning whitepaper (http://msdn.microsoft.com/en-us/library/ms345124(v=sql.90).aspx) explains how RCSI works, which is very different than copy-on-write to a sparse file using database snapshots.

Thanks for the clarification. I misunderstood the mechanism behind database snapshots. I thought they used row versions to track changes into the snapshot database. Didn't realize they did that at the page level. Thanks for the link as well. I was actually in the middle of it when you responded. I had read that while RCSI lessens read/write contention, but that it does add to the overhead for writes because of the row versioning. Wondering how much the versioning really affects writes. Again, my bad on misunderstanding database snapshots.

There is an additional 14 byte overhead for the row version identifier per row as it gets modified the next time after enabling RCSI:

http://blogs.msdn.com/b/sqlserverstorageengine/archive/2008/03/30/overhead-of-row-versioning.aspx

The row version in tempdb doesn't generally cause performance problems, and is used by other features in SQL Server like Online Index Rebuilds, Triggers, and MARS even when RCSI is not enabled.

http://msdn.microsoft.com/en-us/library/ms175492(v=sql.105).aspx

I've never had to turn off RCSI on a system because it excessively used/abused tempdb or caused significant performance problems to date. Keep in mind that this is what makes readable secondaries in 2012 Availability Groups possible:

http://blogs.msdn.com/b/sqlserverstorageengine/archive/2011/12/22/alwayson-impact-of-mapping-reporting-workload-to-snapshot-isolation-on-readable-secondary.aspx

In the meantime I have observed a database snapshot on a fast SSD. According to Process Monitor, single-page writes to random locations took ~15ms. This seems to indicate that NTFS sparse files are just very, very slow when allocating new pages at random locations. This SSD is basically incapable of taking 14ms for random IO. >1 order of magnitude difference.

So it seems that the NTFS driver is at fault.

I can't comment on whether NTFS is part of the problem but it is definitely the case that the number of I/Os being perfomed on a snapshot is up to 8x greater than when no snapshot is in place. The architecture of snapshots is not designed for performance.

Again, you guys are obviously playing in the Big Ballparks.

If not too late, can you please speak to the following areas:

1) Due to the relatively poor performance you mentioned, your "Safety level" appears to be configured for High-Safety and not High-Performance; Synchronous as opposed to Aysnch

2) Also, this number was captured while writing a million records. On Average, how many writes is going on? Just trying to figure what is your normal\sustained loads and what was the Performance impact for typical load

All in all, thanks for pointing out the late night stumbles, that is often missing in Books On Line (BOL).

I suppose you guys don't read that much; or at least read a little more than that.

Daniel Adeniji

With changes to SQLCAT blog, etc, the link to the whitepaper referenced above misses now. New home for it (at least in April 2017)

Database Snapshot Performance Considerations under I/O-Intensive Workloads

http://download.microsoft.com/download/d/9/4/d948f981-926e-40fa-a026-5bfcf076d9b9/dbsnapshotperf.docx

(145 kb Word document)