SQL Server 2012 AlwaysOn Availability Groups require a database mirroring endpoint for each SQL Server instance that will be hosting an availability group replica and/or database mirroring session. This SQL Server instance endpoint is then shared by one or more availability group replicas and/or database mirroring sessions and is the mechanism for communication between the primary replica and the associated secondary replicas.

Depending on the data modification workloads on the primary replica, the availability group messaging throughput requirements can be non-trivial. This activity is also sensitive to traffic from concurrent non-availability group activity. If throughput is suffering due to degraded bandwidth and concurrent traffic, you may consider isolating the availability group traffic to its own dedicated network adapter for each SQL Server instance hosting an availability replica. This post will describe this process and also briefly describe what you might expect to see in a degraded throughput scenario.

For this article, I’m using a five node virtual guest Windows Server Failover Cluster (WSFC). Each node in the WSFC has its own stand-alone SQL Server instance using non-shared local storage. Each node also has a separate virtual network adapter for public communication, a virtual network adapter for WSFC communication, and a virtual network adapter that we’ll dedicate to availability group communication. For the purposes of this post, we’ll focus on the information needed for the availability group dedicated network adapters on each node:

| WSFC Node Name | Availability Group NIC TCP/IPv4 Addresses |

|---|---|

| SQL2K12-SVR1 | 192.168.20.31 |

| SQL2K12-SVR2 | 192.168.20.32 |

| SQL2K12-SVR3 | 192.168.20.33 |

| SQL2K12-SVR4 | 192.168.20.34 |

| SQL2K12-SVR5 | 192.168.20.35 |

Setting up an availability group using a dedicated NIC is almost identical to a shared NIC process, only in order to “bind” the availability group to a specific NIC, I first have to designate the LISTENER_IP argument in the CREATE ENDPOINT command, using the aforementioned IP addresses for my dedicated NICs. Below shows the creation of each endpoint across the five WSFC nodes:

:CONNECT SQL2K12-SVR1

USE [master];

GO

CREATE ENDPOINT [Hadr_endpoint]

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = (192.168.20.31))

FOR DATA_MIRRORING (ROLE = ALL, ENCRYPTION = REQUIRED ALGORITHM AES);

GO

IF (SELECT state FROM sys.endpoints WHERE name = N'Hadr_endpoint') <> 0

BEGIN

ALTER ENDPOINT [Hadr_endpoint] STATE = STARTED;

END

GO

USE [master];

GO

GRANT CONNECT ON ENDPOINT::[Hadr_endpoint] TO [SQLSKILLSDEMOS\SQLServiceAcct];

GO

:CONNECT SQL2K12-SVR2

-- ...repeat for other 4 nodes...

After creating these endpoints associated with the dedicated NIC, the rest of my steps in setting up the availability group topology are no different than in a shared NIC scenario.

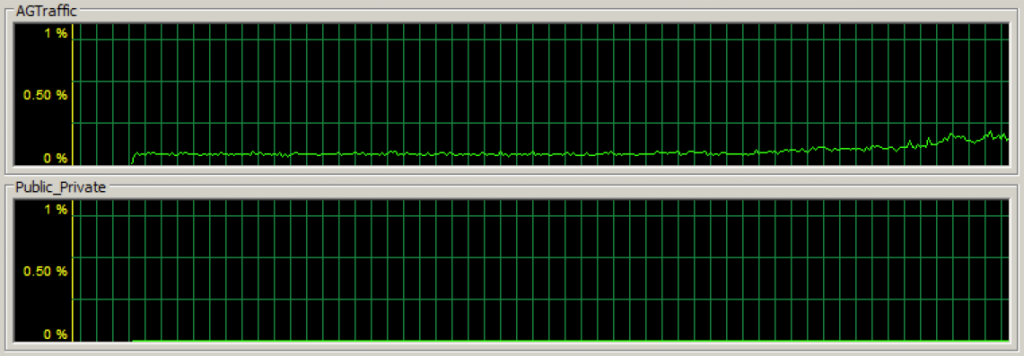

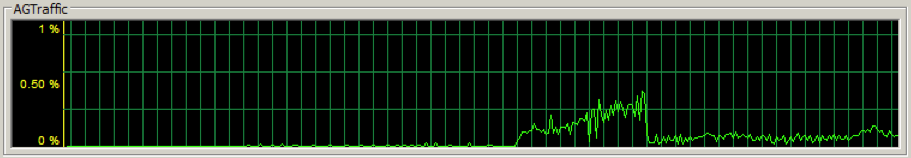

After creating my availability group, if I start driving data modification load against the primary replica availability databases, I can quickly see that the availability group communication traffic is flowing on the dedicated NIC using Task Manager on the networking tab (the first section is the throughput for the dedicated availability group NIC):

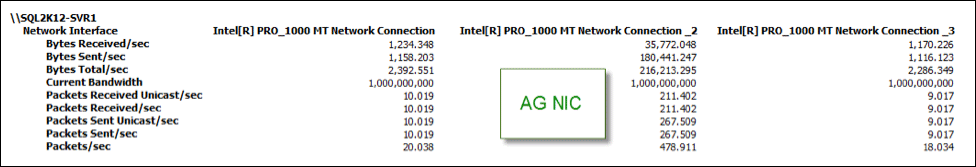

And I can also track the stats using various performance counters. In the below image, the Inetl[R] PRO_1000 MT Network Connection _2 is my dedicated availability group NIC and has the majority of NIC traffic compared to the two other NICs:

Now having a dedicated NIC for availability group traffic can be a way to isolate activity and theoretically improve performance, but if your dedicated NIC has insufficient bandwidth, as you might expect performance will suffer and the health of the availability group topology will degrade.

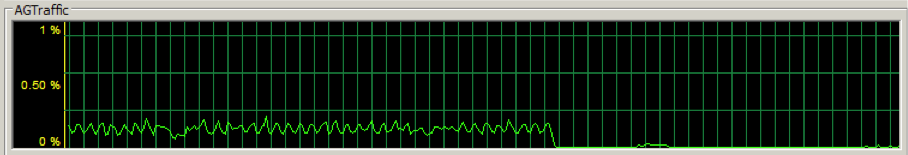

For example, I changed the dedicated availability group NIC on the primary replica to a 28.8 Kbps outgoing transfer bandwidth to see what would happen. Needless to say, it wasn’t good. The availability group NIC throughput dropped significantly:

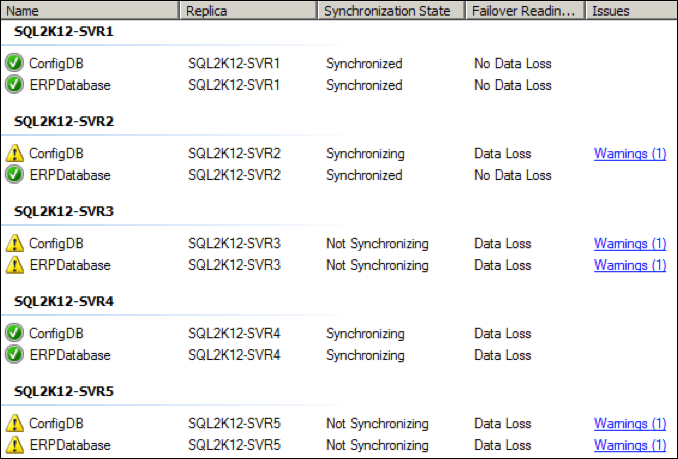

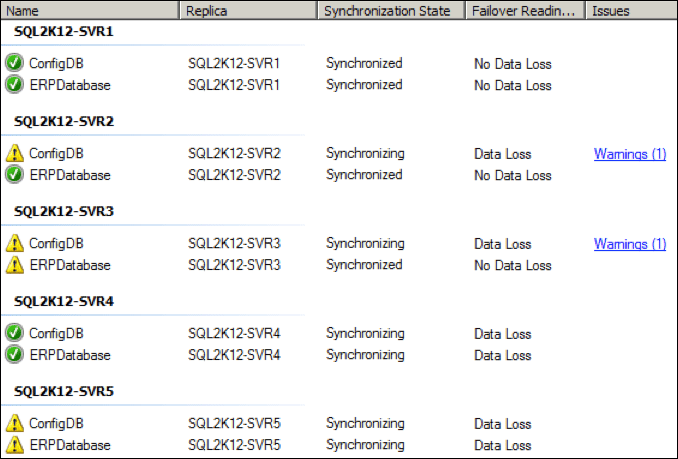

Within a few seconds, the health of the various replicas degraded, with a couple of the replicas moving to a “not synchronizing” state:

I increased the dedicated NIC on the primary replica to 64 Kbps and after a few seconds there was an initial catch-up spike as well:

While things improved, I did witness periodic disconnects and health warnings at this lower NIC throughput setting:

What about the associated wait statistics on the primary replica?

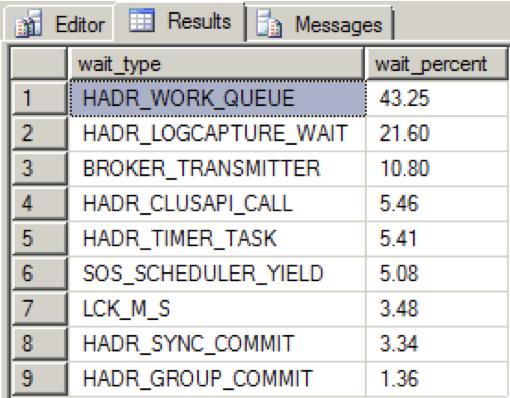

When there was plenty of bandwidth on the dedicated NIC and all availability replicas were in a healthy state, I saw the following distribution during my data loads over a 2 minute period:

HADR_WORK_QUEUE represents an expected background worker thread waiting for new work. HADR_LOGCAPTURE_WAIT represents another expected wait for new log records to become available and according to Books Online, is expected if the log scan is caught up or is reading from disk.

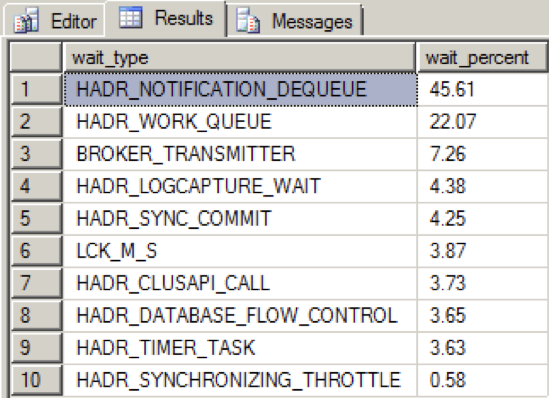

When I reduced the throughput of the NIC enough in order to get the availability group to an unhealthy state, the wait type distribution was as follows:

We now see a new top wait type, HADR_NOTIFICATION_DEQUEUE. This is one of those “internal use only” wait types as defined by Books Online, representing a background task that processes WSFC notifications. What’s interesting is that this wait type doesn’t point directly to an issue, and yet the tests show this wait type rise to the top in association with degraded availability group messaging throughput.

So the bottom line is isolating your availability group activity to a dedicated NIC can be beneficial if you’re providing a network throughput with sufficient bandwidth. However if you can’t guarantee good bandwidth even using a dedicated network, the health of your availability group topology will suffer.

Hi Joe. I have 4 active/active SQL Server 2012 SP1 CU9 4 node cluster on Win 2012 R2. The steps I took to setup the AG follow: 1) Created endpoints with IP of Separate NIC and port 5022, 2) Granted connect to the service account which is running SQL service on all SQL instances, 3) Had to make possible owners of SQL be the respective physical nodes or AG would not create the object in the cluster. 4) Restored db on primary and restoring in norecovery on secondary. 5) Ran the create script for AG which completed. 6) Attempted to JOIN secondary to the AG and got error–>A connection timeout has occurred while attempting to establish a connection to availability replica 'PRDCL2SQLV03' with id [09CB1D1A-7B15-4EEA-997F-2C0C19CEFA96]. Either a networking or firewall issue exists, or the endpoint address provided for the replica is not the database mirroring endpoint of the host server instance.

Hi Joe. I have 4 active/active SQL Server 2012 SP1 CU9 4 node cluster on Win 2012 R2. The steps I took to setup the AG follow: 1) Created endpoints with IP of Separate NIC and port 5022, 2) Granted connect to the service account which is running SQL service on all SQL instances, 3) Had to make possible owners of SQL be the respective physical nodes or AG would not create the object in the cluster. 4) Restored db on primary and restoring in norecovery on secondary. 5) Ran the create script for AG which completed. 6) Attempted to JOIN secondary to the AG and got error–>A connection timeout has occurred while attempting to establish a connection to availability replica 'PRDCL2SQLV03' with id [09CB1D1A-7B15-4EEA-997F-2C0C19CEFA96]. Either a networking or firewall issue exists, or the endpoint address provided for the replica is not the database mirroring endpoint of the host server instance.

Tried ideas in these articles too:

http://blogs.msdn.com/b/sqlserverfaq/archive/2010/03/31/step-by-step-guide-to-setup-a-dedicated-sql-database-mirroring-dbm-on-dedicated-nic-card.aspx

http://blogs.msdn.com/b/alwaysonpro/archive/2013/11/01/configuring-a-dedicated-network-for-replicating-changes-from-primary-to-secondary-replica.aspx

Hi Bryan,

Regarding the "a connection timeout has occurred" error…

Can you confirm the following details (per another SQLPerformance.com article):

http://sqlperformance.com/2013/04/system-configuration/troubleshooting-alwayson

"It turns out (and thanks to Thomas Stringer (@SQLife)) that this problem was being caused by a combination of symptoms: (a) Kerberos was not set up correctly, and (b) the encryption algorithm for the hadr_endpoint I had created defaulted to RC4. This would be okay if all of the standalone instances were also using RC4, but they weren't. Long story short, I dropped and re-created the endpoints again, on all the instances. Since this was a lab environment and I didn't really need Kerberos support (and because I had already invested enough time in these issues that I didn't want to chase down Kerberos problems too), I set up all endpoints to use Negotiate with AES".

You're creating new endpoints, but I just want to verify the encryption algorithm. Aaron mentions kerberos issues, but I don't believe he details them on this article (and there is quite a bit to that subject).

And can you confirm the troubleshooting you did regarding firewall port blocking (confirming those ports are indeed open)?

Joe

I did use AES encryption on all endpoints based on the recommendation in BOL.

I did try to troubleshoot the open port issue. Added telnet client to server and tried unsuccessfully to connect to the NIC IP and port. Added the IP for endpoint for both primary and secondary to the hostfile on both servers. Did ipconfig /flushdns and tried again to setup endpoints. Created AGs but could not join secondary to primary. The NICs are on their own private non-routeable(per our sysadmin) network connecting all four nodes. Can ping IPs just cannot telnet to IP and port. Wondering if adding them to DNS may be a solution. Even when I create the endpoint with dedicated IP and port the SQL Errorlog shows that the listener IP is different for the 5022 port: Listener_IP=192.168.210.21 Listener_Port=5022 is what I use for endpoint. When I look in the log it says SQL is listening on 10.2.21.37 5022.

This works if if I use the FQN:5022 for the servers. But that setup does not go across the dedicated NICs. Dedicated NICs seem like the way to go if I can get them to work.

Thank you.

The part that stands out for me in this scenario is the "cannot telnet to IP and port." Without the ports being accessible as expected, That will be a non-starter. Regarding DNS, the Availability Group Listener requires a unique DNS name, but it seems like this is down stream from the port issue and the log entries you described.

Regarding port selection – AG listeners allow for collocation with the SQL Server instance (from BOL". You can configure the default port to 1433 in order to allow for simplicity of the client connection strings. If using 1433, you do not need to designate a port number in a connection string. Also, since each availability group listener will have a separate virtual network name, each availability group listener configured on a single WSFC can be configured to reference the same default port of 1433.") I'm just wondering if you already have another known open port you can experiment with.

It is unlikely I'll be able to reproduce your issue. I did see a recent AG Listener / endpoint issue due to IPs that were in different zones from the WSFC, and the associated errors were equally vague.

Thanks,

Joe

Thanks. Will try a different port. And will discuss with our network team the inability to telnet.

Hi Joe, Building a 3 node FCI +AG solution. Like to use a dedicated network for the AG traffic. Question: Can I use the private NIC address and if so what happens when the FCI fails over to the passive node that has a different private NIC address? Will the AG traffic loose communication?

Hi Alfredo,

While I've personally tested the non-shared disk AG scenario with a dedicated network solution, I haven't tested an FCI (shared disk) + AG + dedicated network solution. But without testing, my initial concern would be regarding the complexity/support qualities of such a configuration. If you pursue testing this out, I'd recommend you have enough justification to do so (for example – you really need a dedicated network for AG traffic).

And with that preamble aside, thinking a bit more about your question… If I have "FCI 1" that can exist on "Node 1" and "Node 2" – my AG endpoint for "FCI 1" should be configured to be available from either of these nodes. And the same for "FCI 2" that can exist on "Node 3" and "Node 4" of the same Windows Server Failover Cluster. So the endpoint IP and port need to be accessible no matter which node hosts that particular FCI.

Best Regards,

Joe

Hi Joe,

Thanks for the response, really appreciate it and also appreciate the work you do.

I like to brainstorm a bit with you if I may.

Below is what I am thinking.

Three node WFCS

NODE1

NODE2

NODE3

Private NICs installed in each server attached to a dedicate switch for the heartbeat

NODE1 Private NIC = 10.10.10.1

NODE2 Private NIC = 10.10.10.2

NODE3 Private NIC = 10.10.10.3

Public NIC Teamed attached to another switch

Non Clusterd SQL Server Instance

NODE3\AOREP01

Clustered SQL Server FCI

SQLAO\INST1

SQLAO\INST2

AlwaysOn Group

AOGrp01

One SQL Server FCI will be installed on NODE1 and then NODE2 will be added as a fail-over partner. This instance name will be SQLAO\INST1.

A second SQL Server FCI will be installed on NODE2 and then NODE1 will be added as a fail-over partner. this instance name will be SQLAO\INST2

One non clustered SQL Server instance will be installed on NODE3 (NODE3\AOREP01)

An availability group titled AOGrp01 will be created on the clustered SQL Server SQLAO\INST1 and will include NODE3\AOREP01 as its replica residing on NODE3.

Now my confusion is…

If I configure the endpoints on NODE1 and NODE3 as follows how do I make sure, upon failure of NODE1 and SQLAO\INST1 moves to NODE2, synchronization continues to communication to the replica via the configured endpoints?

:CONNECT NODE1

CREATE ENDPOINT [Hadr_endpoint]

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = (10.10.10.1))

FOR DATA_MIRRORING (ROLE = ALL, ENCRYPTION = REQUIRED ALGORITHM AES);

GO

:CONNECT NODE3

CREATE ENDPOINT [Hadr_endpoint]

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = (10.10.1.3))

FOR DATA_MIRRORING (ROLE = ALL, ENCRYPTION = REQUIRED ALGORITHM AES);

GO

Now we would create the availability group to connect to private nics

:CONNECT NODE1

CREATE AVAILABILITY GROUP [AOGrp01]

WITH (AUTOMATED_BACKUP_PREFERENCE = SECONDARY)

FOR DATABASE [MyAGDB]

REPLICA ON N'NODE1' WITH (ENDPOINT_URL = N'TCP://10.10.10.1:5022', FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50, SECONDARY_ROLE(ALLOW_CONNECTIONS = NO)),

N'NODE3' WITH (ENDPOINT_URL = N'TCP://10.10.10.3:5022', FAILOVER_MODE = AUTOMATIC, AVAILABILITY_MODE = SYNCHRONOUS_COMMIT, BACKUP_PRIORITY = 50, SECONDARY_ROLE(ALLOW_CONNECTIONS = NO));

Note: Out of the gate, there will be only 5 databases participating in AO solution, however, in the very near future many more will jump on-board therefore, I need to prepare myself if we experience NIC saturation.

Hi Alfredo,

I see several discussion-points embedded in the architecture you are proposing. So I'm afraid I can't go through each of them – but I think the main thing I would wonder about is a potential for port collisions during collocation (if I'm understanding the design appropriately).

The architecture as you've described seems to exceed the complexity vs. supportability ratio that I watch out for. But I'd recommend that you test this out with a test environment if you can (virtual or physical) and test it out firsthand and ensure you aren't setting up an HA/DR solution that has too many layers of complexity (my concern is the mix of non-shared-disk instances with shared-disk instances, with a small number of WSFC nodes, coupled with the dedicated NIC requirements). I'd recommend putting such a proposed layout through the paces to ensure if is viable and supportable.

Best Regards,

Joe

Thought the below comment was interesting. If true how could one use a dedicated NIC?

Case 4: SQL Server is installed in a clustered environment.

On cluster, you cannot configure SQL Server to listen on a specific IP addresses. You must chose IPALL. The IP addresses on which the cluster instance will be listening on is determined by cluster resources (configurable through Cluster Administrator, by adding IP Address resources under SQL Network Name resource).

http://blogs.msdn.com/b/sqlblog/archive/2009/07/17/how-to-configure-sql-server-to-listen-on-different-ports-on-different-ip-addresses.aspx

Hi Joe,

Thank you very much for the response again. I appreciate your time and allowing me to brainstorm with you.

As you are probably gathered that I am in the design stages and would greatly entertain your services if you so desire, please send me an note to the email address listed.

If I remove the shared-disk FCI instances and only utilize non-shared-disks standalone instances will this remove the complexity you are concern with? And in the future we could add additional WFCS nodes and configure additional SQL Servers as a FCI solution.

Regards,

Alfredo

I took this guys advice and added the IP into the SQL Server Name cluster resource on each of the nodes. Was able to successfully add the dedicated IP on 3 of the 4 nodes. On the default instance, we added IP and it came online in the cluster, but SQL Service would not come online in the cluster. Why would it work on all named instances and not the default. Looked in SQL errorlog and SQL is coming online fine while the sql server reource says it is coming online.. But SQL resource eventually fails (viewed from Failover Cluster Manager). SQL fails to start as long as that dedicated IP is a resource, but comes up fine when I take it out–why only on the default instance. Was able to get the dedicated NICs on the 3 that worked setup and running with the endpoints and availability groups. So close, but so far away.

I had changed the port on default instance from 1433 to 2009. When I changed it back to 1433, the service came online even with the dedicated NIC IP. Wow, that is bizarre.

Opened a PSS case on the port issue. Turns out that the client connectivity in SQL Config Mange(ports on both 32 and 64 bit) had to be changed because the cluster health check makes a local connection and assume 1433 since default instance.

Hello Joe,

Having trouble getting the Availability Group replication traffic to traverse the dedicated network, I get the following message.

“Connection attempt failed with error: '10061(No connection could be made because the target machine actively refused it.)'.”

I have verified that all firewall settings are disabled and was able to telnet across this network from both nodes.

Now when I delete all AG objects and create it to traverse the public network it works just fine. What am I missing? Any assistance is greatly appreciated.

The two dedicated endpoints configuration that does not work:

CREATE ENDPOINT [DB07_aoinst1_endpoint]

STATE=STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = (10.0.20.21))

FOR DATA_MIRRORING (ROLE = ALL, AUTHENTICATION = WINDOWS NEGOTIATE

, ENCRYPTION = REQUIRED ALGORITHM AES)

GO

CREATE ENDPOINT [DB09_aoinst1_endpoint]

STATE=STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = (10.0.20.23))

FOR DATA_MIRRORING (ROLE = ALL, AUTHENTICATION = WINDOWS NEGOTIATE

, ENCRYPTION = REQUIRED ALGORITHM AES)

GO

The two endpoints that do work:

CREATE ENDPOINT [DB07_aoinst1_endpoint]

STATE=STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = ALL)

FOR DATA_MIRRORING (ROLE = ALL, AUTHENTICATION = WINDOWS NEGOTIATE

, ENCRYPTION = REQUIRED ALGORITHM AES)

GO

CREATE ENDPOINT [DB09_aoinst1_endpoint]

STATE=STARTED

AS TCP (LISTENER_PORT = 5022, LISTENER_IP = ALL)

FOR DATA_MIRRORING (ROLE = ALL, AUTHENTICATION = WINDOWS NEGOTIATE

, ENCRYPTION = REQUIRED ALGORITHM AES)

GO

Hi Alfredo,

In absence of issues TCP/IP being disabled or blocked ports – I’m assuming you also have granted login endpoint access as you did with the endpoint configured for LISTERN_IP = ALL?

Assuming “yes”, I searched for specific matches with your description – specific to error 10061 – and didn’t find exact matches on your particular issue in conjunction with AGs (definitely matches on other general connectivity issues, again related to firewall and NP instead of TCP). You might want to broaden the audience on this question and see if there are network-related issues still present – or see if others have encountered this exact issue.

Joe

Hello Joe,

I am currently working with our internal networking team to capture NETMON traces in hopes to identify why we cannot connect over this network. I will post my results once we are successful.

Joe,

I don't get it. I deleted all AG objects including the endpoints, recreated them, and now all is working. I have also verified AG replication is communicating over the dedicated network via task manager and NETMON.

Hello Joe, Maybe you can help me understand why this worked.

I am running two SQL Server 2014 instances on two different physical nodes. The SQL Server service account is running under the same account, for Kerberos authentication to work with AG. Viewing these services, from the “services applet”, the sql server service account can be seen as [domain]\account. However, from within SSMS I see [NT SERVICE]\MSSQL$AOINST1. For AG synchronization to work I needed to explicitly grant CONNECT on the SQL Server service account to each endpoints. I was under the impression if the instance of SQL Server ran as a service under the same domain account, no extra configuration was necessary. However, that was not the case as I need to grant the connect permission on the service account to the endpoint. Now I have both the actual domain account added along with the [NT SERVICE]\MSSQL$AOINST1 account. I am going to remove the [domain]\account and explicitly grant connect to [NT SERVICE]\MSSQL$AOINST1 account and see what happens.

Hello Joe,

I granted only connect permission to [NT SERVICE]\MSSQL$AOINST1 on the endpoint and not the actual domain account for each node. I received the "forceably closed…." error message. Not until I granted connect back to the actual domain account did it start working again.

Hi,

Have you managed to fix your initial issue with a replica connection timeouts?

I ask because I have a similar issue with alwayson replicas which are configured as FCIs to use a dedicated private network for data synch.

I get the following messages after adding db to Alwayson AG group:

"AlwaysOn Availability Groups Send Error (Error code 0x2, "NOT OK") was returned when sending a message for database ID 7. If the partner is running and visible over the network, retry the command using correctly configured partner-connection parameters.

Error: 35278, Severity: 17, State: 4.

" Availability database 'IDA_MASTER', which is in the secondary role, is being restarted to resynchronize with the current primary database. This is an informational message only. No user action is required."

I'll be glad to provide more details if someone can help.

Thanks,

Vic

Hi Joe, Can you please describe how to limit private NIC throughput?

Do you change system settings?

Thanks,

Vic

Bryan, were you able to use the dedicated NIC for AG traffic? I get the following errors when using a dedicated IP with port 5022 in the AG setup.

From the DMV:

Connection attempt failed with error: '10061(No connection could be made because the target machine actively refused it.)'

Windows Event Log:

A connection timeout has occurred while attempting to establish a connection to availability replica " with id [A3918B86-B110-4FC2-A3FD-EA4E990C2F7D]. Either a networking or firewall issue exists, or the endpoint address provided for the replica is not the database mirroring endpoint of the host server instance.

There are no firewall issues and I've checked connectivity via telnet. Granted CONNECT to the endpoint to the service account and the server domain accounts but still no luck. Any ideas?

TIA,

Kevin

Hey Kevin, check out http://sqlperformance.com/2013/04/system-configuration/troubleshooting-alwayson and http://blogs.lessthandot.com/index.php/DataMgmt/DBAdmin/setting-up-mirroring-to-sql

Hi Aaron, thanks for the links. I've checked them out and double checked the authentication and encryption settings in the script and everything looks good. When I create the endpoint with LISTENER_IP=ALL, the AG functions as expected. When specifying the dedicated NIC (LISTENER_IP = (10.60.1.88)), that's when we start seeing the issues I outlined in the previous post.

Thanks,

Kevin

Got it fixed. The endpoints were created with the two dedicated IP's but the CREATE AVAILABILITY GROUP statement used the FQDN in the ENDPOINT_URL section. Once I changed the ENDPOINT_URL to use the IP's of the dedicated NIC (TCP://10.60.1.88:5022), the AG started working properly with the dedicated network.