I've long been a proponent of choosing the correct data type. I've talked about some examples in a previous "Bad Habits" blog post, but this weekend at SQL Saturday #162 (Cambridge, UK), the topic of using DATETIME by default came up. In a conversation after my T-SQL : Bad Habits and Best Practices presentation, a user stated that they just use DATETIME even if they only need granularity to the minute or day, this way the date/time columns across their enterprise are always the same data type. I suggested that this might be wasteful, and that the consistency might not be worth it, but today I decided to set out to prove my theory.

TL;DR version

My testing below reveals that there are certainly scenarios where you may want to consider using a skinnier data type instead of sticking with DATETIME everywhere. But it is important to see where my tests for this pointed the other way, and it is also important to test these scenarios against your schema, in your environment, with hardware and data that is as true to production as possible. Your results may, and almost certainly will, vary.

The Destination Tables

Let's consider the case where granularity is only important to the day (we don't care about hours, minutes, seconds). For this we could choose DATETIME (like the user proposed), or SMALLDATETIME, or DATE on SQL Server 2008+. There are also two different types of data that I wanted to consider:

- Data that would be inserted roughly sequentially in real-time (e.g. events that are happening right now);

- Data that would be inserted randomly (e.g. birthdates of new members).

I started with 2 tables like the following, then created 4 more (2 for SMALLDATETIME, 2 for DATE):

CREATE TABLE dbo.BirthDatesRandom_Datetime

(

ID INT IDENTITY(1,1) PRIMARY KEY,

dt DATETIME NOT NULL

);

CREATE TABLE dbo.EventsSequential_Datetime

(

ID INT IDENTITY(1,1) PRIMARY KEY,

dt DATETIME NOT NULL

);

CREATE INDEX d ON dbo.BirthDatesRandom_Datetime(dt);

CREATE INDEX d ON dbo.EventsSequential_Datetime(dt);

-- Then repeat for DATE and SMALLDATETIME.

And my goal was to test batch insert performance in those two different ways, as well as the impact on overall storage size and fragmentation, and finally the performance of range queries.

Sample Data

To generate some sample data, I used one of my handy techniques for generating something meaningful from something that is not: the catalog views. On my system this returned 971 distinct date/time values (1,000,000 rows altogether) in about 12 seconds:

;WITH y AS

(

SELECT TOP (1000000) d = DATEADD(SECOND, x, DATEADD(DAY, DATEDIFF(DAY, x, 0), '20120101'))

FROM

(

SELECT s1.[object_id] % 1000

FROM sys.all_objects AS s1

CROSS JOIN sys.all_objects AS s2

) AS x(x) ORDER BY NEWID()

)

SELECT DISTINCT d FROM y;

I put these million rows into a table so I could simulate sequential/random inserts using different access methods for the exact same data from three different session windows:

CREATE TABLE dbo.Staging

(

ID INT IDENTITY(1,1) PRIMARY KEY,

source_date DATETIME NOT NULL

);

;WITH Staging_Data AS

(

SELECT TOP (1000000) dt = DATEADD(SECOND, x, DATEADD(DAY, DATEDIFF(DAY, x, 0), '20110101'))

FROM

(

SELECT s1.[object_id] % 1000

FROM sys.all_objects AS s1

CROSS JOIN sys.all_objects AS s2

) AS sd(x) ORDER BY NEWID()

)

INSERT dbo.Staging(source_date)

SELECT dt

FROM y

ORDER BY dt;

This process took a little bit longer to complete (20 seconds). Then I created a second table to store the same data but distributed randomly (so that I could repeat the same distribution across all inserts).

CREATE TABLE dbo.Staging_Random

(

ID INT IDENTITY(1,1) PRIMARY KEY,

source_date DATETIME NOT NULL

);

INSERT dbo.Staging_Random(source_date)

SELECT source_date

FROM dbo.Staging

ORDER BY NEWID();

Queries to Populate the Tables

Next, I wrote a set of queries to populate the other tables with this data, using three query windows to simulate at least a little bit of concurrency:

WAITFOR TIME '13:53';

GO

DECLARE @d DATETIME2 = SYSDATETIME();

INSERT dbo.{table_name}(dt) -- depending on method / data type

SELECT source_date

FROM dbo.Staging[_Random] -- depending on destination

WHERE ID % 3 = <0,1,2> -- depending on query window

ORDER BY ID;

SELECT DATEDIFF(MILLISECOND, @d, SYSDATETIME());

As in my last post, I pre-expanded the database to prevent any type of data file auto-growth events from interfering with the results. I do realize it is not completely realistic to perform million-row inserts in one pass, as I can't prevent log activity for such a large transaction from interfering, but it should do so consistently across each method. Given that the hardware I'm testing with is completely different from the hardware you're using, the absolute results shouldn't be a key takeaway, just the relative comparison.

(In a future test I will also try this with real batches coming in from log files with relatively mixed data, and using chunks of the source table in loops – I think those would be interesting experiments as well. And of course adding compression into the mix.)

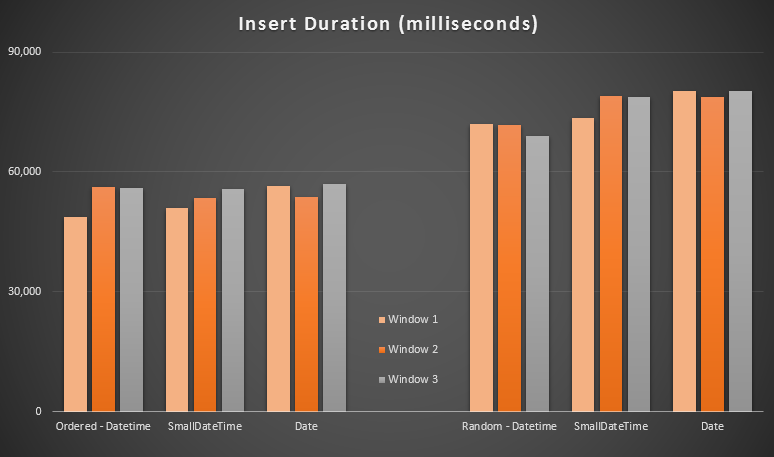

The results:

These results were not all that surprising to me – inserting in random order led to longer runtimes than inserting sequentially, something we can all take back to our roots of understanding how indexes in SQL Server work and how more "bad" page splits can happen in this scenario (I didn't monitor specifically for page splits in this exercise, but it is something I will consider in future tests).

I noticed that, on the random side, the implicit conversions on the incoming data might have had an impact on timings, since they seemed a little bit higher than the native DATETIME -> DATETIME inserts. So I decided to build two new tables containing source data: one using DATE and one using SMALLDATETIME. This would simulate, to some degree, converting your data type properly before passing it to the insert statement, such that an implicit conversion is not required during the insert. Here are the new tables and how they were populated:

CREATE TABLE dbo.Staging_Random_SmallDatetime

(

ID INT IDENTITY(1,1) PRIMARY KEY,

source_date SMALLDATETIME NOT NULL

);

CREATE TABLE dbo.Staging_Random_Date

(

ID INT IDENTITY(1,1) PRIMARY KEY,

source_date DATE NOT NULL

);

INSERT dbo.Staging_Random_SmallDatetime(source_date)

SELECT CONVERT(SMALLDATETIME, source_date)

FROM dbo.Staging_Random ORDER BY ID;

INSERT dbo.Staging_Random_Date(source_date)

SELECT CONVERT(DATE, source_date)

FROM dbo.Staging_Random ORDER BY ID;

This did not have the effect I was hoping for – timings were similar in all cases. So that was a wild goose chase.

Space Used & Fragmentation

I ran the following query to determine how many pages were reserved for each table:

SELECT

name = 'dbo.' + OBJECT_NAME([object_id]),

pages = SUM(reserved_page_count)

FROM sys.dm_db_partition_stats

GROUP BY OBJECT_NAME([object_id])

ORDER BY pages;

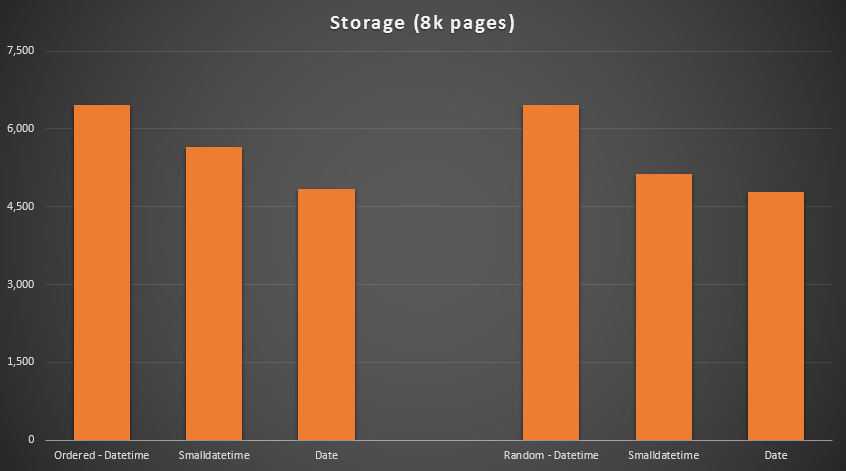

The results:

No rocket science here; use a smaller data type, you should use fewer pages. Switching from DATETIME to DATE consistently yielded a 25% reduction in number of pages used, while SMALLDATETIME reduced the requirement by 13-20%.

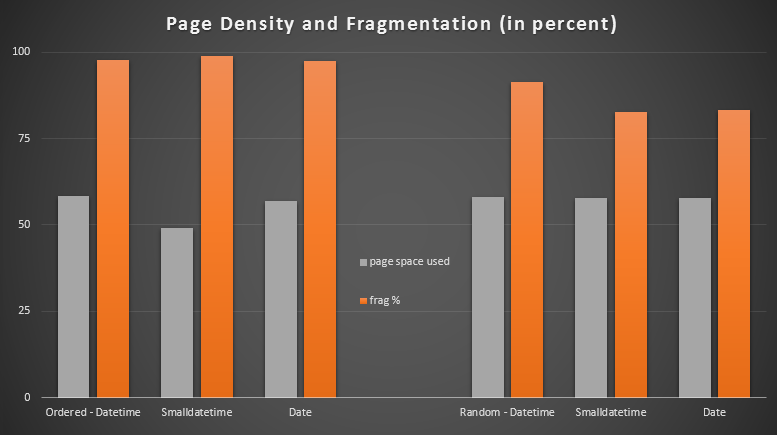

Now for fragmentation and page density on the non-clustered indexes (there was very little difference for the clustered indexes):

SELECT '{table_name}',

index_id

avg_page_space_used_in_percent,

avg_fragmentation_in_percent

FROM sys.dm_db_index_physical_stats

(

DB_ID(), OBJECT_ID('{table_name}'),

NULL, NULL, 'DETAILED'

)

WHERE index_level = 0 AND index_id = 2;

Results:

I was quite surprised to see the ordered data become almost completely fragmented, while the data that was inserted randomly actually ended up with slightly better page usage. I've made a note that this warrants further investigation outside the scope of these specific tests, but it may be something you'll want to check on if you have non-clustered indexes that are relying on largely sequential inserts.

[An online rebuild of the non-clustered indexes on all 6 tables ran in 7 seconds, putting page density back up to the 99.5% range, and bringing fragmentation down to under 1%. But I didn't run that until performing the query tests below…]

Range Query Test

Finally, I wanted to see the impact on runtimes for simple date range queries against the different indexes, both with the inherent fragmentation caused by OLTP-type write activity, and on a clean index that is rebuilt. The query itself is pretty simple:

SELECT TOP (200000) dt

FROM dbo.{table_name}

WHERE dt >= '20110101'

ORDER BY dt;

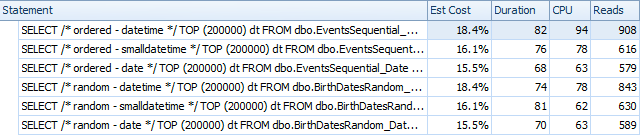

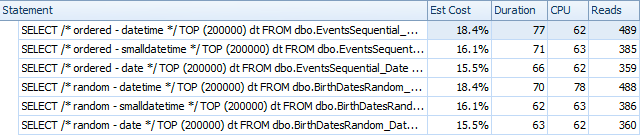

Here are the results before the indexes were rebuilt, using SQL Sentry Plan Explorer:

And they differ slightly after the rebuilds:

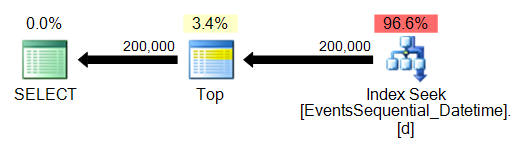

Essentially we see slightly higher duration and reads for the DATETIME versions, but very little difference in CPU. And the differences between SMALLDATETIME and DATE are negligible in comparison. All of the queries had simplistic query plans like this:

(The seek is, of course, an ordered range scan.)

Conclusion

While admittedly these tests are quite fabricated and could have benefited from more permutations, they do show roughly what I expected to see: the biggest impacts on this specific choice are on space occupied by the non-clustered index (where choosing a skinnier data type will certainly benefit), and on the time required to perform inserts in arbitrary, rather than sequential, order (where DATETIME only has a marginal edge).

I'd love to hear your ideas on how to put data type choices like these through more thorough and punishing tests. I do plan to go into more details in future posts.