In my last article, Batch Mode Bitmaps in SQL Server, I briefly mentioned that batch mode data is normalized. All data in a batch is represented by an eight-byte value in this particular normalized format, regardless of the underlying data type.

That statement no doubt raises some questions, not least about how data with a length much greater than eight bytes can possibly be stored that way. This article explores the batch data normalized representation, explains why not all eight-byte data types can fit within 64 bits, and shows an example of how all this affects batch-mode performance.

Demo

I am going to start with an example that shows batch data format making an important difference to an execution plan. You will need SQL Server 2016 (or later) and Developer Edition (or equivalent) to reproduce the results shown here.

The first thing we will need is a table of bigint numbers from 1 to 102,400 inclusive. These numbers will be used to populate a columnstore table shortly (the number of rows is the minimum needed to obtain a single compressed segment).

DROP TABLE IF EXISTS #Numbers;

GO

CREATE TABLE #Numbers (n bigint NOT NULL PRIMARY KEY);

GO

INSERT #Numbers (n)

SELECT

n = ROW_NUMBER() OVER (ORDER BY @@SPID)

FROM master.dbo.spt_values AS SV1

CROSS JOIN master.dbo.spt_values AS SV2

ORDER BY

n

OFFSET 0 ROWS

FETCH FIRST 102400 ROWS ONLY

OPTION (MAXDOP 1);

Successful aggregate pushdown

The following script uses the numbers table to create another table containing the same numbers offset by a specific value. This table uses columnstore for its primary storage to produce batch mode execution later on.

DROP TABLE IF EXISTS #T;

GO

CREATE TABLE #T (c1 bigint NOT NULL);

GO

DECLARE

@Start bigint = CONVERT(bigint, -4611686018427387905);

INSERT #T (c1)

SELECT

c1 = @Start + N.n

FROM #Numbers AS N;

GO

CREATE CLUSTERED COLUMNSTORE INDEX c ON #T

WITH (MAXDOP = 1);

Run the following test queries against the new columnstore table:

SELECT

c = COUNT_BIG(*)

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

SELECT

m = MAX(T.c1)

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

SELECT

s = SUM(T.c1 + CONVERT(bigint, 4611686018427387904))

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

The addition inside the SUM is to avoid overflow. You can skip the WHERE clauses (to avoid a trivial plan) if you are running SQL Server 2017.

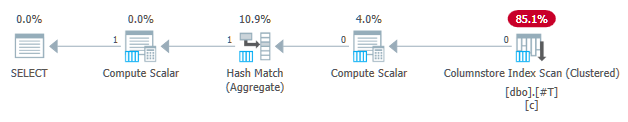

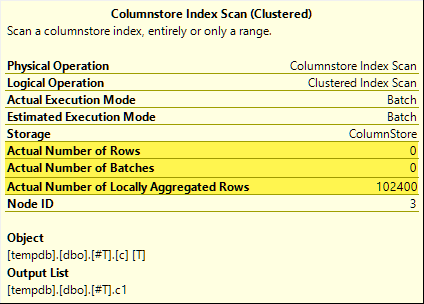

Those queries all benefit from aggregate pushdown. The aggregate is computed at the Columnstore Index Scan rather than the batch-mode Hash Aggregate operator. Post-execution plans show zero rows emitted by the scan. All 102,400 rows were ‘locally aggregated’.

The SUM plan is shown below as an example:

Unsuccessful aggregate pushdown

Now drop then recreate the columnstore test table with the offset decreased by one:

DROP TABLE IF EXISTS #T;

GO

CREATE TABLE #T (c1 bigint NOT NULL);

GO

DECLARE

-- Note this value has decreased by one

@Start bigint = CONVERT(bigint, -4611686018427387906);

INSERT #T (c1)

SELECT

c1 = @Start + N.n

FROM #Numbers AS N;

GO

CREATE CLUSTERED COLUMNSTORE INDEX c ON #T

WITH (MAXDOP = 1);

Run exactly the same aggregate pushdown test queries as before:

SELECT

c = COUNT_BIG(*)

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

SELECT

m = MAX(T.c1)

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

SELECT

s = SUM(T.c1 + CONVERT(bigint, 4611686018427387904))

FROM #T AS T

WHERE 1 = (SELECT 1) -- Avoid trivial plan

OPTION (MAXDOP 1);

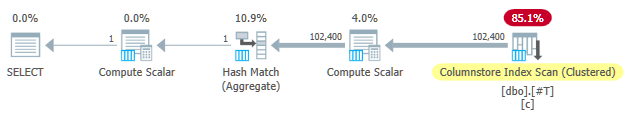

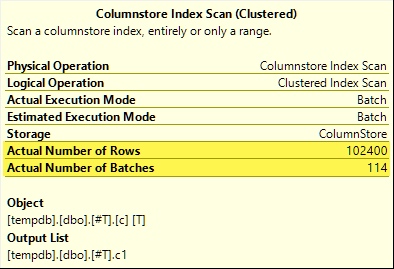

This time, only the COUNT_BIG aggregate achieves aggregate pushdown (SQL Server 2017 only). The MAX and SUM aggregates do not. Here is the new SUM plan for comparison with the one from the first test:

All 102,400 rows (in 114 batches) are emitted by the Columnstore Index Scan, processed by the Compute Scalar, and sent to the Hash Aggregate.

Why the difference? All we did was to offset the range of numbers stored in the columnstore table by one!

Explanation

I mentioned in the introduction that not all eight-byte data types can fit in 64 bits. This fact is important because many columnstore and batch mode performance optimizations only work with data 64 bits in size. Aggregate pushdown is one of those things. There are many more performance features (not all documented) that work best (or at all) only when the data fits in 64 bits.

In our specific example, aggregate pushdown is disabled for a columnstore segment when it contains even one data value that does not fit in 64 bits. SQL Server can determine this from the minimum and maximum value metadata associated with each segment without checking all the data. Each segment is evaluated separately.
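If you want to look at that per-segment metadata yourself, a query along the following lines is one way to do it. This is a minimal sketch that assumes the #T test table from the demo still exists in the current session. How min_data_id and max_data_id map back to column values depends on the segment encoding, so treat the output as indicative rather than as the exact values SQL Server tests.

```sql
-- Sketch: per-segment metadata for the #T demo table (assumed to exist).
-- Temporary tables live in tempdb, hence the three-part names.
SELECT
    S.column_id,
    S.segment_id,
    S.row_count,
    S.encoding_type,
    S.min_data_id,   -- interpretation depends on the segment encoding
    S.max_data_id
FROM tempdb.sys.column_store_segments AS S
JOIN tempdb.sys.partitions AS P
    ON P.hobt_id = S.hobt_id
WHERE
    P.[object_id] = OBJECT_ID(N'tempdb..#T');
```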

In the second test, aggregate pushdown still works, but only for the COUNT_BIG aggregate. This is an optimization added at some point in SQL Server 2017 (my tests were run on CU16). It is logical not to disable aggregate pushdown when we are only counting rows and not doing anything with the specific data values. I could not find any documentation for this improvement, but that is not so unusual these days.

As a side note, I noticed that SQL Server 2017 CU16 enables aggregate pushdown for the previously-unsupported data types real, float, datetimeoffset, and numeric with precision greater than 18 — when the data fits in 64 bits. This is also undocumented at the time of writing.

Ok, but why?

You may be asking the very reasonable question: Why does one set of bigint test values apparently fit in 64 bits but the other does not?

If you guessed the reason was related to NULL, give yourself a tick. Even though the test table column is defined as NOT NULL, SQL Server uses the same normalized data layout for bigint whether the data allows nulls or not. There are reasons for this, which I will unpack bit by bit.

Let me start with some observations:

- Every column value in a batch is stored in exactly eight bytes (64 bits) regardless of the underlying data type. This fixed-size layout makes everything easier and faster. Batch mode execution is all about speed.

- A batch is 64KB in size and contains between 64 and 900 rows, depending on the number of columns being projected. This makes sense given that column data sizes are fixed at 64 bits. More columns means fewer rows can fit in each 64KB batch.

- Not all SQL Server data types can fit in 64 bits, even in principle. A long string (to take one example) might not even fit in a whole 64KB batch (if that were allowed), let alone a single 64-bit entry.

SQL Server solves this last issue by storing an 8-byte reference to data larger than 64 bits. The ‘large’ data value is stored elsewhere in memory. You might call this arrangement “off-row” or “out-of-batch” storage. Internally it is referred to as deep data.

Now, eight-byte data types cannot fit in 64 bits when nullable. Take bigint NULL, for example. The non-null data range might require the full 64 bits, and we still need another bit to indicate null or not.

Solving the problems

The creative and efficient solution to these challenges is to reserve the least significant bit (LSB) of the 64-bit value as a flag. The flag indicates in-batch data storage when the LSB is clear (set to zero). When the LSB is set (to one), it can mean one of two things:

- The value is null; or

- The value is stored off-batch (it is deep data).

These two cases are distinguished by the state of the remaining 63 bits. When they are all zero, the value is NULL. Otherwise, the ‘value’ is a pointer to deep data stored elsewhere.

When viewed as an integer, setting the LSB means that pointers to deep data will always be odd numbers. Nulls are represented by the (odd) number 1 (all other bits are zero). In-batch data is represented by even numbers because the LSB is zero.
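The flag rules can be written out as a small T-SQL illustration. This is only a sketch of the logic described above: the sample values and column names are made up, and they stand for normalized 64-bit batch entries, not column values. SQL Server applies this test internally to raw batch data, not through T-SQL.

```sql
-- Illustration only: interpret a normalized 64-bit batch entry via its LSB.
-- The sample values are hypothetical.
SELECT
    V.normalized,
    lsb = V.normalized & 1,
    meaning =
        CASE
            WHEN V.normalized = 1 THEN 'NULL'                      -- LSB set, other 63 bits zero
            WHEN (V.normalized & 1) = 1 THEN 'deep data pointer'   -- LSB set, other bits non-zero
            ELSE 'in-batch value'                                  -- LSB clear (even number)
        END
FROM
(
    VALUES
        (CONVERT(bigint, 1)),
        (CONVERT(bigint, 2)),
        (CONVERT(bigint, 84)),
        (CONVERT(bigint, 140712345678913))
) AS V (normalized);
```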

This does not mean that SQL Server can only store even numbers within a batch! It just means that the normalized representation of the underlying column values will always have a zero LSB when stored “in-batch”. This will make more sense in a moment.

Batch Data Normalization

Normalization is performed in different ways, depending on the underlying data type. For bigint the process is:

- If the data is null, store the value 1 (only LSB set).

- If the value can be represented in 63 bits, shift all the bits one place to the left and zero the LSB. When looking at the value as an integer, this means doubling the value. For example, the bigint value 1 is normalized to the value 2. In binary, that is seven all-zero bytes followed by 00000010. The LSB being zero indicates this is data stored inline. When SQL Server needs the original value, it right-shifts the 64-bit value by one position (throwing away the LSB flag).

- If the value cannot be represented in 63 bits, the value is stored off-batch as deep data. The in-batch pointer has the LSB set (making it an odd number).

The process of testing if a bigint value can fit in 63 bits is:

1. Store the raw* bigint value in 64-bit processor register r8.

2. Store double the value of r8 in register rax.

3. Shift the bits of rax one place to the right.

4. Test if the values in rax and r8 are equal.

* Note the raw value cannot reliably be determined for all data types by a T-SQL conversion to a binary type. The T-SQL result may have a different byte order and may also contain metadata, e.g. time fractional second precision.

If the test at step 4 passes, we know the value can be doubled and then halved within 64 bits — preserving the original value.
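The same idea can be emulated in T-SQL, with one adjustment. A bigint multiplication raises an overflow error instead of wrapping like a processor register, so this sketch doubles the value as a decimal and uses TRY_CONVERT to detect when the doubled value no longer fits in a bigint. That is equivalent to the double-then-halve test failing. The sample values and column names are my own, and none of this is SQL Server's internal code.

```sql
-- Sketch: emulate bigint normalization and the 63-bit fit test in T-SQL.
-- Doubling is done in decimal(20,0); TRY_CONVERT returns NULL when the
-- doubled value does not fit in 64 bits (the deep data case).
SELECT
    V.v,
    storage =
        CASE
            WHEN V.v IS NULL THEN 'null (stored as 1)'
            WHEN D.doubled IS NULL THEN 'deep data'
            ELSE 'in-batch'
        END,
    -- NULL here for deep data: the real entry would be a pointer, not shown
    normalized = CASE WHEN V.v IS NULL THEN 1 ELSE D.doubled END,
    -- Halving the (even) normalized value recovers the original
    restored = D.doubled / 2
FROM
(
    VALUES
        (CONVERT(bigint, 1)),
        (CONVERT(bigint, -1)),
        (CONVERT(bigint, 4611686018427387903)),
        (CONVERT(bigint, 4611686018427387904)),
        (CONVERT(bigint, -4611686018427387904)),
        (CONVERT(bigint, -4611686018427387905)),
        (CONVERT(bigint, NULL))
) AS V (v)
CROSS APPLY
(
    SELECT doubled = TRY_CONVERT(bigint, CONVERT(decimal(20,0), V.v) * 2)
) AS D;
```

The fourth and sixth sample values are the first ones on either side of zero that cannot be doubled within 64 bits, so they should be the only non-null rows reported as deep data.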

A reduced range

The upshot of all this is that the range of bigint values that can be stored in-batch is reduced by one bit (because the LSB is not available). The following inclusive ranges of bigint values will be stored off-batch as deep data:

- -4,611,686,018,427,387,905 to -9,223,372,036,854,775,808

- +4,611,686,018,427,387,904 to +9,223,372,036,854,775,807

In return for accepting these bigint range limitations, normalization allows SQL Server to store (most) bigint values, nulls, and deep data references in-batch. This is a lot simpler and more space-efficient than having separate structures for nullability and deep data references. It also makes processing batch data with SIMD processor instructions a lot easier.
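A quick way to check whether a bigint column holds values outside the in-batch range is to count them directly. The sketch below assumes the #T test table from the demo still exists in the current session; the column aliases are mine.

```sql
-- Sketch: count #T rows whose bigint value falls outside the in-batch range.
SELECT
    total_rows = COUNT_BIG(*),
    deep_data_rows =
        COUNT_BIG
        (
            CASE
                WHEN T.c1 NOT BETWEEN CONVERT(bigint, -4611686018427387904)
                                  AND CONVERT(bigint, 4611686018427387903)
                THEN 1
            END
        )
FROM #T AS T;
```

Against the second version of the test table, this should report exactly one such row: the lowest value, -4,611,686,018,427,387,905. That single value is enough to disable aggregate pushdown for MAX and SUM on the segment. Against the first version of the table, the count should be zero.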

Normalization of other data types

SQL Server contains normalization code for each of the data types supported by batch mode execution. Each routine is optimized to handle the incoming binary layout efficiently, and to only create deep data when necessary. Normalization always results in the LSB being reserved to indicate nulls or deep data, but the layout of the remaining 63 bits varies per data type.

Always in-batch

Normalized data for the following data types is always stored in-batch since they never need more than 63 bits:

- date

- time(n) – rescaled internally to time(7)

- datetime2(n) – rescaled internally to datetime2(7)

- integer

- smallint

- tinyint

- bit – uses the tinyint implementation.

- smalldatetime

- datetime

- real

- float

- smallmoney

It depends

The following data types may be stored in-batch or deep data depending on the data value:

- bigint – as described previously.

- money – same range in-batch as bigint but divided by 10,000.

- numeric/decimal – 18 decimal digits or fewer in-batch regardless of declared precision. For example, the decimal(38,9) value -999999999.999999999 can be represented as the 8 byte integer -999999999999999999 (f21f494c589c0001 hex), which can be doubled to -1999999999999999998 (e43e9298b1380002 hex) reversibly within 64 bits. SQL Server knows where the decimal point goes from the data type scale.

- datetimeoffset(n) – in-batch if the runtime value will fit in datetimeoffset(2) regardless of declared fractional seconds precision.

- timestamp – internal format is different from the display. For example, a timestamp displayed from T-SQL as 0x000000000099449A is represented internally as 9a449900 00000000 (in hex). This value is stored as deep data because it does not fit in 64 bits when doubled (left-shifted one bit).
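The hex values quoted in the decimal example can be reproduced from T-SQL. This sketch enters the unscaled integer directly rather than deriving it from a decimal(38,9) value, and the variable name is mine. Converting a bigint to binary(8) displays its eight bytes most-significant first.

```sql
-- Sketch: reproduce the hex values from the decimal(38,9) example.
DECLARE @unscaled bigint = CONVERT(bigint, -999999999999999999);

SELECT
    unscaled = @unscaled,
    unscaled_hex = CONVERT(binary(8), @unscaled),       -- 0xF21F494C589C0001
    doubled = @unscaled * 2,                            -- still fits in a bigint
    doubled_hex = CONVERT(binary(8), @unscaled * 2);    -- 0xE43E9298B1380002
```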

Always deep data

The following are always stored as deep data (except nulls):

- uniqueidentifier

- varbinary(n) – including (max)

- binary

- char / varchar(n) / nchar / nvarchar(n) / sysname including (max) – these types may also use a dictionary (when available).

- text / ntext / image / xml – uses the varbinary(n) implementation.

To be clear, nulls for all batch-mode compatible data types are stored in-batch as the special value ‘one’.

Final Thoughts

You may expect to make the best of the available columnstore and batch mode optimizations when using data types and values that fit in 64 bits. You will also have the best chance of benefiting from incremental product improvements over time, for example the latest improvements to aggregate pushdown noted in the main text. Not all of the performance advantages will be so visible in execution plans, or even documented. Nevertheless, the differences can be extremely significant.

I should also mention that data is normalized when a row-mode execution plan operator provides data to a batch-mode parent, or when a non-columnstore scan produces batches (batch mode on rowstore). There is an invisible row-to-batch adapter that calls the appropriate normalization routine on each column value before adding it to the batch. Avoiding data types with complicated normalization and deep data storage can produce performance benefits here as well.
