UPDATE: September 2nd, 2021 (Originally published July 26th, 2012.)

A lot of things change over the course of a few major versions of our favorite database platform. SQL Server 2016 brought us STRING_SPLIT, a native function that eliminates the need for many of the custom solutions we’ve needed before. It’s fast, too, but it’s not perfect. For example, it only supports a single-character delimiter, and it doesn’t return anything to indicate the order of the input elements. I’ve written several articles about this function (and STRING_AGG, which arrived in SQL Server 2017) since this post was written:

- Performance Surprises and Assumptions : STRING_SPLIT()

- STRING_SPLIT() in SQL Server 2016 : Follow-Up #1

- STRING_SPLIT() in SQL Server 2016 : Follow-Up #2

- SQL Server Split String Replacement Code with STRING_SPLIT

- Comparing string splitting / concatenation methods

- Solve old problems with SQL Server’s new STRING_AGG and STRING_SPLIT functions

- Dealing with the single-character delimiter in SQL Server's STRING_SPLIT function

- Please help with STRING_SPLIT improvements

- A way to improve STRING_SPLIT in SQL Server – and you can help

I’m going to leave the below content here for posterity and historical relevance, and also because some of the testing methodology is relevant to other problems aside from splitting strings, but please see some of the above references for information about how you should be splitting strings in modern, supported versions of SQL Server – as well as this post, which explains why splitting strings maybe isn’t a problem you want the database to solve in the first place, new function or not.

I know many people are bored of the "split strings" problem, but it still seems to come up almost daily on forum and Q & A sites like Stack Overflow. This is the problem where people want to pass in a string like this:

EXEC dbo.UpdateProfile @UserID = 1, @FavoriteTeams = N'Patriots,Red Sox,Bruins';

Inside the procedure, they want to do something like this:

INSERT dbo.UserTeams(UserID, TeamID) SELECT @UserID, TeamID

FROM dbo.Teams WHERE TeamName IN (@FavoriteTeams);

This doesn't work because @FavoriteTeams is a single string, and the above translates to:

INSERT dbo.UserTeams(UserID, TeamID) SELECT @UserID, TeamID

FROM dbo.Teams WHERE TeamName IN (N'Patriots,Red Sox,Bruins');

SQL Server is therefore going to try to find a team named Patriots,Red Sox,Bruins, and I'm guessing there is no such team. What they really want here is the equivalent of:

INSERT dbo.UserTeams(UserID, TeamID) SELECT @UserID, TeamID

FROM dbo.Teams WHERE TeamName IN (N'Patriots', N'Red Sox', N'Bruins');

But since there is no array type in SQL Server, this is not how the variable is interpreted at all – it's still a simple, single string that happens to contain some commas. Questionable schema design aside, in this case the comma-separated list needs to be "split" into individual values – and this is the question that frequently spurs a lot of "new" debate and commentary about the best solution to achieve just that.

The answer seems to be, almost invariably, that you should use CLR. If you can't use CLR – and I know there are many of you out there who can't, due to corporate policy, the pointy-haired boss, or stubbornness – then you use one of the many workarounds that exist. And many workarounds exist.

But which one should you use?

I'm going to compare the performance of a few solutions – and focus on the question everyone always asks: "Which is fastest?" I'm not going to belabor the discussion around *all* of the potential methods, because several have already been eliminated due to the fact that they simply don't scale. And I may re-visit this in the future to examine the impact on other metrics, but for now I'm just going to focus on duration. Here are the contenders I am going to compare (using SQL Server 2012, 11.00.2316, on a Windows 7 VM with 4 CPUs and 8 GB of RAM):

CLR

If you wish to use CLR, you should definitely borrow code from fellow MVP Adam Machanic before thinking about writing your own (I've blogged before about re-inventing the wheel, and it also applies to free code snippets like this). He spent a lot of time fine-tuning this CLR function to efficiently parse a string. If you are currently using a CLR function and this is not it, I strongly recommend you deploy it and compare – I tested it against a much simpler, VB-based CLR routine that was functionally equivalent, but the VB approach performed about three times worse than Adam's.

So I took Adam's function, compiled the code to a DLL (using csc), and deployed just that file to the server. Then I added the following assembly and function to my database:

CREATE ASSEMBLY CLRUtilities FROM 'c:\DLLs\CLRUtilities.dll'

WITH PERMISSION_SET = SAFE;

GO

CREATE FUNCTION dbo.SplitStrings_CLR

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE ( Item NVARCHAR(4000) )

EXTERNAL NAME CLRUtilities.UserDefinedFunctions.SplitString_Multi;

GO

XML

This is the typical function I use for one-off scenarios where I know the input is "safe," but is not one I recommend for production environments (more on that below).

CREATE FUNCTION dbo.SplitStrings_XML

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN

(

SELECT Item = y.i.value('(./text())[1]', 'nvarchar(4000)')

FROM

(

SELECT x = CONVERT(XML, ''

+ REPLACE(@List, @Delimiter, '')

+ '').query('.')

) AS a CROSS APPLY x.nodes('i') AS y(i)

);

GO

A very strong caveat has to ride along with the XML approach: it can only be used if you can guarantee that your input string does not contain any illegal XML characters. One name with <, > or & and the function will blow up. So regardless of the performance, if you're going to use this approach, be aware of the limitations – it should not be considered a viable option for a generic string splitter. I'm including it in this round-up because you may have a case where you can trust the input – for example it is possible to use for comma-separated lists of integers or GUIDs.

Numbers table

This solution uses a Numbers table, which you must build and populate yourself. (We've been requesting a built-in version for ages.) The Numbers table should contain enough rows to exceed the length of the longest string you'll be splitting. In this case we'll use 1,000,000 rows:

SET NOCOUNT ON;

DECLARE @UpperLimit INT = 1000000;

WITH n AS

(

SELECT

x = ROW_NUMBER() OVER (ORDER BY s1.[object_id])

FROM sys.all_objects AS s1

CROSS JOIN sys.all_objects AS s2

CROSS JOIN sys.all_objects AS s3

)

SELECT Number = x

INTO dbo.Numbers

FROM n

WHERE x BETWEEN 1 AND @UpperLimit;

GO

CREATE UNIQUE CLUSTERED INDEX n ON dbo.Numbers(Number)

WITH (DATA_COMPRESSION = PAGE);

GO

(Using data compression will drastically reduce the number of pages required, but obviously you should only use this option if you are running Enterprise Edition. In this case the compressed data requires 1,360 pages, versus 2,102 pages without compression – about a 35% savings.)

CREATE FUNCTION dbo.SplitStrings_Numbers

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN

(

SELECT Item = SUBSTRING(@List, Number,

CHARINDEX(@Delimiter, @List + @Delimiter, Number) - Number)

FROM dbo.Numbers

WHERE Number <= CONVERT(INT, LEN(@List))

AND SUBSTRING(@Delimiter + @List, Number, LEN(@Delimiter)) = @Delimiter

);

GO

Common Table Expression

This solution uses a recursive CTE to extract each part of the string from the "remainder" of the previous part. As a recursive CTE with local variables, you'll note that this had to be a multi-statement table-valued function, unlike the others which are all inline.

CREATE FUNCTION dbo.SplitStrings_CTE

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS @Items TABLE (Item NVARCHAR(4000))

WITH SCHEMABINDING

AS

BEGIN

DECLARE @ll INT = LEN(@List) + 1, @ld INT = LEN(@Delimiter);

WITH a AS

(

SELECT

[start] = 1,

[end] = COALESCE(NULLIF(CHARINDEX(@Delimiter,

@List, 1), 0), @ll),

[value] = SUBSTRING(@List, 1,

COALESCE(NULLIF(CHARINDEX(@Delimiter,

@List, 1), 0), @ll) - 1)

UNION ALL

SELECT

[start] = CONVERT(INT, [end]) + @ld,

[end] = COALESCE(NULLIF(CHARINDEX(@Delimiter,

@List, [end] + @ld), 0), @ll),

[value] = SUBSTRING(@List, [end] + @ld,

COALESCE(NULLIF(CHARINDEX(@Delimiter,

@List, [end] + @ld), 0), @ll)-[end]-@ld)

FROM a

WHERE [end] < @ll ) INSERT @Items SELECT [value] FROM a WHERE LEN([value]) > 0

OPTION (MAXRECURSION 0);

RETURN;

END

GO

Jeff Moden's splitter A function based on Jeff Moden's splitter with minor changes to support longer strings

Over on SQLServerCentral, Jeff Moden presented a splitter function that rivaled the performance of CLR, so I thought it only fair to include a variation using a similar approach in this round-up. I had to make a few minor changes to his function in order to handle our longest string (500,000 characters), and also made the naming conventions similar:

CREATE FUNCTION dbo.SplitStrings_Moden

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE

WITH SCHEMABINDING AS

RETURN

WITH E1(N) AS ( SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1

UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1

UNION ALL SELECT 1 UNION ALL SELECT 1 UNION ALL SELECT 1),

E2(N) AS (SELECT 1 FROM E1 a, E1 b),

E4(N) AS (SELECT 1 FROM E2 a, E2 b),

E42(N) AS (SELECT 1 FROM E4 a, E2 b),

cteTally(N) AS (SELECT 0 UNION ALL SELECT TOP (DATALENGTH(ISNULL(@List,1)))

ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) FROM E42),

cteStart(N1) AS (SELECT t.N+1 FROM cteTally t

WHERE (SUBSTRING(@List,t.N,1) = @Delimiter OR t.N = 0))

SELECT Item = SUBSTRING(@List, s.N1, ISNULL(NULLIF(CHARINDEX(@Delimiter,@List,s.N1),0)-s.N1,8000))

FROM cteStart s;

As an aside, for those using Jeff Moden's solution, you may consider using a Numbers table as above, and experimenting with a slight variation on Jeff's function:

CREATE FUNCTION dbo.SplitStrings_Moden2

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE

WITH SCHEMABINDING AS

RETURN

WITH cteTally(N) AS

(

SELECT TOP (DATALENGTH(ISNULL(@List,1))+1) Number-1

FROM dbo.Numbers ORDER BY Number

),

cteStart(N1) AS

(

SELECT t.N+1

FROM cteTally t

WHERE (SUBSTRING(@List,t.N,1) = @Delimiter OR t.N = 0)

)

SELECT Item = SUBSTRING(@List, s.N1,

ISNULL(NULLIF(CHARINDEX(@Delimiter, @List, s.N1), 0) - s.N1, 8000))

FROM cteStart AS s;

(This will trade slightly higher reads for slightly lower CPU, so may be better depending on whether your system is already CPU- or I/O-bound.)

Sanity checking

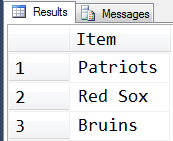

Just to be sure we're on the right track, we can verify that all five functions return the expected results:

DECLARE @s NVARCHAR(MAX) = N'Patriots,Red Sox,Bruins';

SELECT Item FROM dbo.SplitStrings_CLR (@s, N',');

SELECT Item FROM dbo.SplitStrings_XML (@s, N',');

SELECT Item FROM dbo.SplitStrings_Numbers (@s, N',');

SELECT Item FROM dbo.SplitStrings_CTE (@s, N',');

SELECT Item FROM dbo.SplitStrings_Moden (@s, N',');

And in fact, these are the results we see in all five cases...

The Test Data

Now that we know the functions behave as expected, we can get to the fun part: testing performance against various numbers of strings that vary in length. But first we need a table. I created the following simple object:

CREATE TABLE dbo.strings

(

string_type TINYINT,

string_value NVARCHAR(MAX)

);

CREATE CLUSTERED INDEX st ON dbo.strings(string_type);

I populated this table with a set of strings of varying lengths, making sure that roughly the same set of data would be used for each test - first 10,000 rows where the string is 50 characters long, then 1,000 rows where the string is 500 characters long, 100 rows where the string is 5,000 characters long, 10 rows where the string is 50,000 characters long, and so on up to 1 row of 500,000 characters. I did this both to compare the same amount of overall data being processed by the functions, as well as to try to keep my testing times somewhat predictable.

I use a #temp table so that I can simply use GO <constant> to execute each batch a specific number of times:

SET NOCOUNT ON;

GO

CREATE TABLE #x(s NVARCHAR(MAX));

INSERT #x SELECT N'a,id,xyz,abcd,abcde,sa,foo,bar,mort,splunge,bacon,';

GO

INSERT dbo.strings SELECT 1, s FROM #x;

GO 10000

INSERT dbo.strings SELECT 2, REPLICATE(s,10) FROM #x;

GO 1000

INSERT dbo.strings SELECT 3, REPLICATE(s,100) FROM #x;

GO 100

INSERT dbo.strings SELECT 4, REPLICATE(s,1000) FROM #x;

GO 10

INSERT dbo.strings SELECT 5, REPLICATE(s,10000) FROM #x;

GO

DROP TABLE #x;

GO

-- then to clean up the trailing comma, since some approaches treat a trailing empty string as a valid element:

UPDATE dbo.strings SET string_value = SUBSTRING(string_value, 1, LEN(string_value)-1) + 'x';

Creating and populating this table took about 20 seconds on my machine, and the table represents about 6 MB worth of data (about 500,000 characters times 2 bytes, or 1 MB per string_type, plus row and index overhead). Not a huge table, but it should be large enough to highlight any differences in performance between the functions.

The Tests

With the functions in place, and the table properly stuffed with big strings to chew on, we can finally run some actual tests to see how the different functions perform against real data. In order to measure performance without factoring in network overhead, I used SQL Sentry Plan Explorer, running each set of tests 10 times, collecting the duration metrics, and averaging.

The first test simply pulled the items from each string as a set:

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

DECLARE @string_type TINYINT = ; -- 1-5 from above

SELECT t.Item FROM dbo.strings AS s

CROSS APPLY dbo.SplitStrings_(s.string_value, ',') AS t

WHERE s.string_type = @string_type;

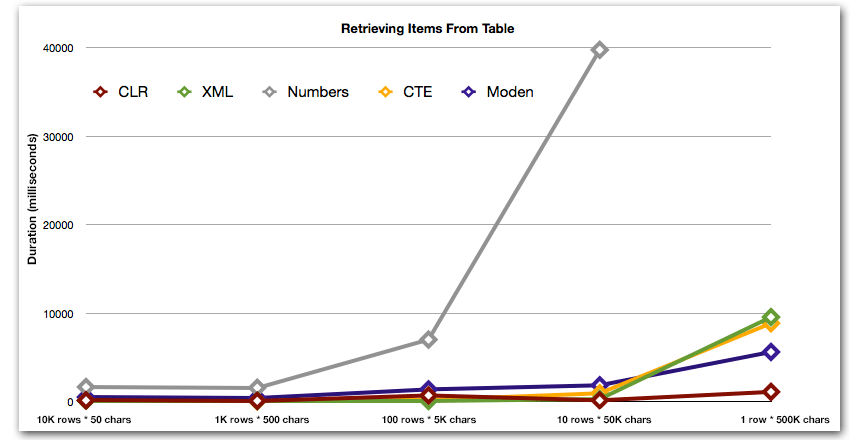

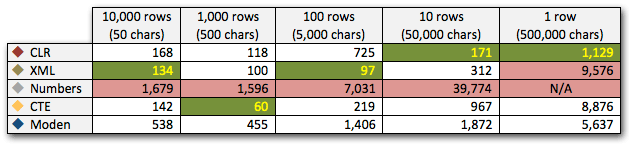

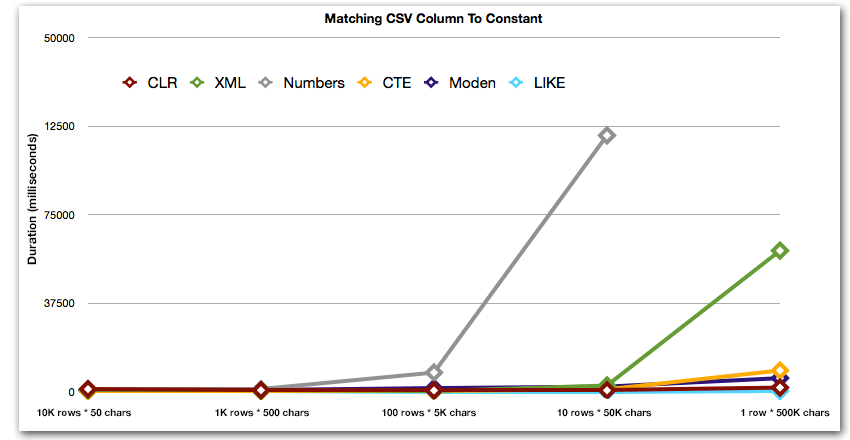

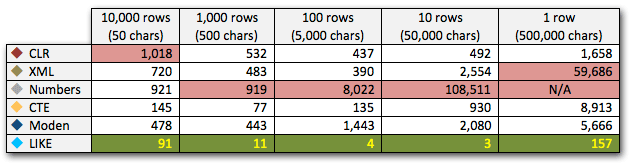

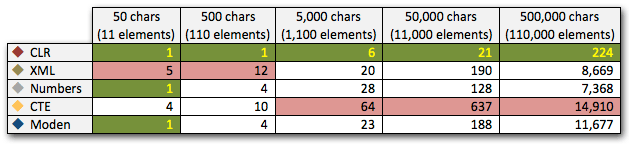

The results show that as the strings get larger, the advantage of CLR really shines. At the lower end, the results were mixed, but again the XML method should have an asterisk next to it, since its use depends on relying on XML-safe input. For this specific use case, the Numbers table consistently performed the worst:

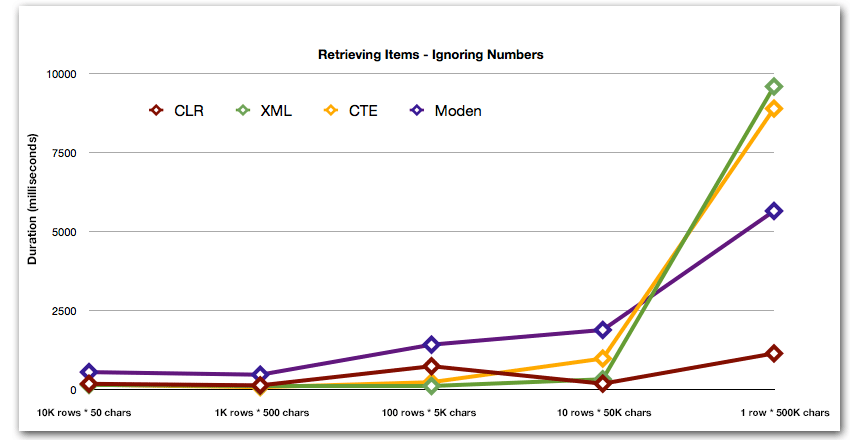

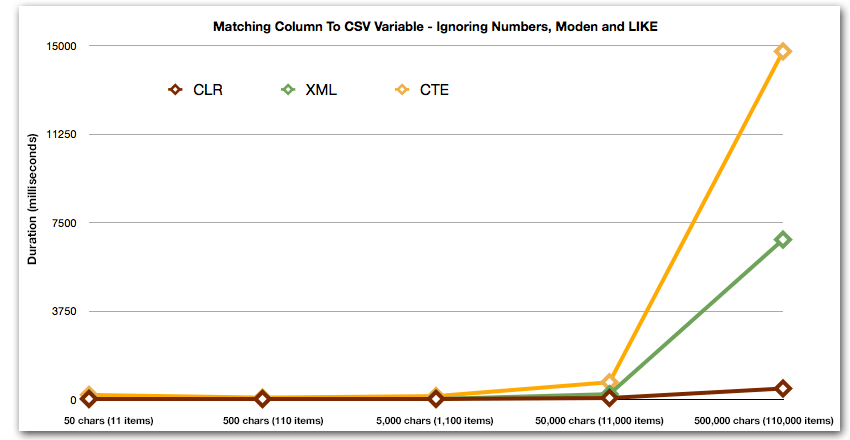

After the hyperbolic 40-second performance for the numbers table against 10 rows of 50,000 characters, I dropped it from the running for the last test. To better show the relative performance of the four best methods in this test, I've dropped the Numbers results from the graph altogether:

Next, let's compare when we perform a search against the comma-separated value (e.g. return the rows where one of the strings is 'foo'). Again we'll use the five functions above, but we'll also compare the result against a search performed at runtime using LIKE instead of bothering with splitting.

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

DECLARE @i INT = , @search NVARCHAR(32) = N'foo';

;WITH s(st, sv) AS

(

SELECT string_type, string_value

FROM dbo.strings AS s

WHERE string_type = @i

)

SELECT s.string_type, s.string_value FROM s

CROSS APPLY dbo.SplitStrings_(s.sv, ',') AS t

WHERE t.Item = @search;

SELECT s.string_type

FROM dbo.strings

WHERE string_type = @i

AND ',' + string_value + ',' LIKE '%,' + @search + ',%';

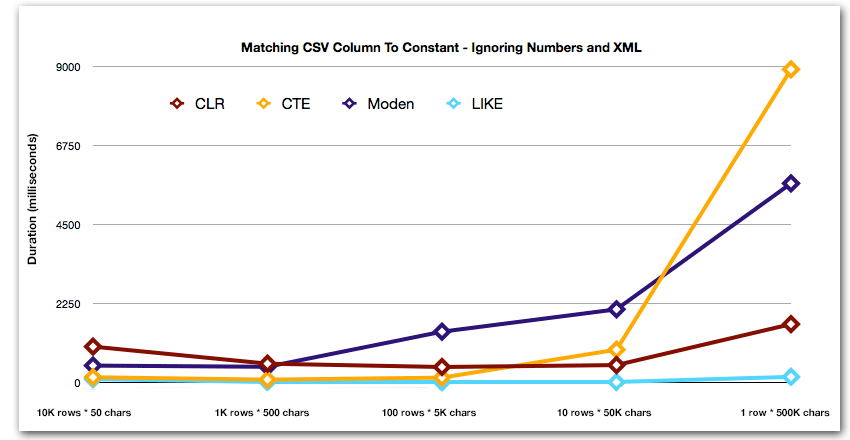

These results show that, for small strings, CLR was actually the slowest, and that the best solution is going to be performing a scan using LIKE, without bothering to split the data up at all. Again I dropped the Numbers table solution from the 5th approach, when it was clear that its duration would increase exponentially as the size of the string went up:

And to better demonstrate the patterns for the top 4 results, I've eliminated the Numbers and XML solutions from the graph:

Next, let's look at replicating the use case from the beginning of this post, where we're trying to find all the rows in one table that exist in the list being passed in. As with the data in the table we created above, we're going to create strings varying in length from 50 to 500,000 characters, store them in a variable, and then check a common catalog view for existing in the list.

DECLARE

@i INT = , -- value 1-5, yielding strings 50 - 500,000 characters

@x NVARCHAR(MAX) = N'a,id,xyz,abcd,abcde,sa,foo,bar,mort,splunge,bacon,';

SET @x = REPLICATE(@x, POWER(10, @i-1));

SET @x = SUBSTRING(@x, 1, LEN(@x)-1) + 'x';

SELECT c.[object_id]

FROM sys.all_columns AS c

WHERE EXISTS

(

SELECT 1 FROM dbo.SplitStrings_(@x, N',') AS x

WHERE Item = c.name

)

ORDER BY c.[object_id];

SELECT [object_id]

FROM sys.all_columns

WHERE N',' + @x + ',' LIKE N'%,' + name + ',%'

ORDER BY [object_id];

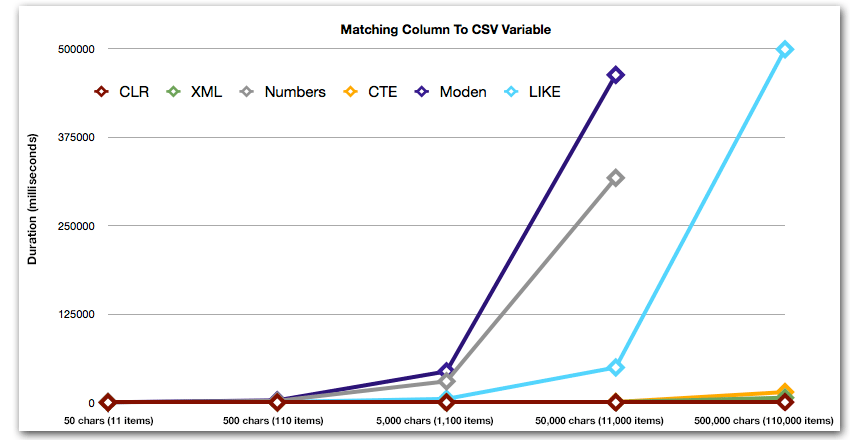

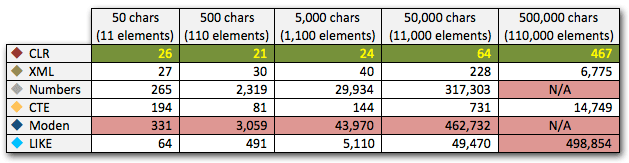

These results show that, for this pattern, several methods see their duration increase exponentially as the size of the string goes up. At the lower end, XML keeps good pace with CLR, but this quickly deteriorates as well. CLR is consistently the clear winner here:

And again without the methods that explode upward in terms of duration:

Finally, let's compare the cost of retrieving the data from a single variable of varying length, ignoring the cost of reading data from a table. Again we'll generate strings of varying length, from 50 - 500,000 characters, and then just return the values as a set:

DECLARE

@i INT = , -- value 1-5, yielding strings 50 - 500,000 characters

@x NVARCHAR(MAX) = N'a,id,xyz,abcd,abcde,sa,foo,bar,mort,splunge,bacon,';

SET @x = REPLICATE(@x, POWER(10, @i-1));

SET @x = SUBSTRING(@x, 1, LEN(@x)-1) + 'x';

SELECT Item FROM dbo.SplitStrings_(@x, N',');

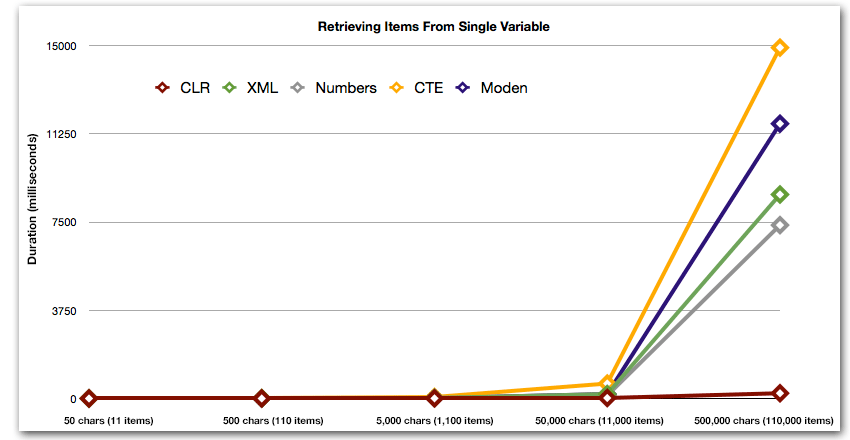

These results also show that CLR is fairly flat-lined in terms of duration, all the way up to 110,000 items in the set, while the other methods keep decent pace until some time after 11,000 items:

Conclusion

In almost all cases, the CLR solution clearly out-performs the other approaches - in some cases it's a landslide victory, especially as string sizes increase; in a few others, it's a photo finish that could fall either way. In the first test we saw that XML and CTE out-performed CLR at the low end, so if this is a typical use case *and* you are sure that your strings are in the 1 - 10,000 character range, one of those approaches might be a better option. If your string sizes are less predictable than that, CLR is probably still your best bet overall - you lose a few milliseconds at the low end, but you gain a whole lot at the high end. Here are the choices I would make, depending on the task, with second place highlighted for cases where CLR is not an option. Note that XML is my preferred method only if I know the input is XML-safe; these may not necessarily be your best alternatives if you have less faith in your input.

The only real exception where CLR is not my choice across the board is the case where you're actually storing comma-separated lists in a table, and then finding rows where a defined entity is in that list. In that specific case, I would probably first recommend redesigning and properly normalizing the schema, so that those values are stored separately, rather than using it as an excuse to not use CLR for splitting.

If you can't use CLR for other reasons, there isn't a clear-cut "second place" revealed by these tests; my answers above were based on overall scale and not at any specific string size. Every solution here was runner up in at least one scenario - so while CLR is clearly the choice when you can use it, what you should use when you cannot is more of an "it depends" answer - you'll need to judge based on your use case(s) and the tests above (or by constructing your own tests) which alternative is better for you.

Addendum : An alternative to splitting in the first place

The above approaches require no changes to your existing application(s), assuming they are already assembling a comma-separated string and throwing it at the database to deal with. One option you should consider, if either CLR is not an option and/or you can modify the application(s), is using Table-Valued Parameters (TVPs). Here is a quick example of how to utilize a TVP in the above context. First, create a table type with a single string column:

CREATE TYPE dbo.Items AS TABLE

(

Item NVARCHAR(4000)

);

Then the stored procedure can take this TVP as input, and join on the content (or use it in other ways - this is just one example):

CREATE PROCEDURE dbo.UpdateProfile

@UserID INT,

@TeamNames dbo.Items READONLY

AS

BEGIN

SET NOCOUNT ON;

INSERT dbo.UserTeams(UserID, TeamID) SELECT @UserID, t.TeamID

FROM dbo.Teams AS t

INNER JOIN @TeamNames AS tn

ON t.Name = tn.Item;

END

GO

Now in your C# code, for example, instead of building a comma-separated string, populate a DataTable (or use whatever compatible collection might already hold your set of values):

DataTable tvp = new DataTable();

tvp.Columns.Add(new DataColumn("Item"));

// in a loop from a collection, presumably:

tvp.Rows.Add(someThing.someValue);

using (connectionObject)

{

SqlCommand cmd = new SqlCommand("dbo.UpdateProfile", connectionObject);

cmd.CommandType = CommandType.StoredProcedure;

SqlParameter tvparam = cmd.Parameters.AddWithValue("@TeamNames", tvp);

tvparam.SqlDbType = SqlDbType.Structured;

// other parameters, e.g. userId

cmd.ExecuteNonQuery();

}

You might consider this to be a prequel to a follow-up post.

Of course this doesn't play well with JSON and other APIs - quite often the reason a comma-separated string is being passed to SQL Server in the first place.

Just reading through the comments here points out the difficulties of trying to track down code in forums. If you're sharing code with the community, have one page where the latest version of the code lives. Source code control tools like Codeplex.com and Github.com are fantastic for this: they let an original author like Jeff create something, and then let other people contribute improvements from all over the world, while letting a core set of moderators control what gets merged into the main branch of code.

Yes, please. A repository.

One thing I will add on the delimited TSQL splitter function. I'm not going to wade into the performance issue, but it's important to note that the modified version posed as is will return incorrect answers if any of the "answers" is more than 8K in size.

This might be considered an edge case, but then again I'd think that keeping large strings of 500K or larger (of loosely formatted data) in a DB might be considered an edge either way (we're skirting fairly close to not using the right tool for the right purpose).

In any case – the 8K limit was worth mentioning.

Correct me if I'm wrong but isnt the CTE function being classed as deterministic and therefore only materialising the worktable on the first pass ? If I make the strings we are parsing different on each line then I see a very different picture.

I just noticed that the plan for the XML method has injected also a lazy spool operator and is operating in a similar fashion (i.e. Cheating), go go gadget Numbers Table!

I don't consider this "cheating" – isn't that exactly what determinism is supposed to do? To treat the same input in the same way all the time? Do you think this change will yield different end results than my main point, which is: "stop doing this with T-SQL and use CLR or TVPs instead"?

It probably will not change the point, although if some of these methods are being optimised for determinism and others arent, we may choose the wrong routine for the job.

Here are My Stats for CTE, XML: on my laptop SQL2012 RTM

Using Article build statement for strings:

XML – type=1 CPU 93, Reads 20133, Duration 105

XML – type=2 CPU 70, Reads 2308, Duration 90

XML – type=3 CPU 90, Reads 2841, Duration 100

CTE – type=1 CPU 80, Reads 30320, Duration 100

CTE – type=2 CPU 62, Reads 4283, Duration 72

Adding a unique number of to the end of each string:

XML – type=1 CPU 3400, Reads 116, Duration 3400

XML – type=2 CPU 7000, Reads 85, Duration 7000

XML – type=3 CPU 1250, Reads 118, Duration 1250

CTE – type=1 CPU 7000, Reads 1200000, Duration 7300

CTE – type=2 CPU 6700, Reads 1110000, Duration 6900

CTE – type=3 CPU 6000, Reads 1100000, Duration 6300

Numbers Table results seems to remain consistent (albeit stats are not great to begin with), presumably because the optimiser cannot guarantee determinism and has to plan to do all the work.

I havent had time to test the other 3 routines yet so i'm not sure if they are affected by this.

So we can almost be done with this topic. I followed this up with a TSQL vs CLR test. I followed the same methodology performed here. Bottom line is that the CLR method is the best overall solution but if your usage scenario fits in a certain sized box, a TSQL solution would also work. It is written up here

If you have installed Master Data Services (MDS), you can use the mdq.Split function.

http://msdn.microsoft.com/en-us/library/ee633810(SQL.105).aspx

this cte is faster than SplitStrings_CTE:

CREATE function [dbo].[f_split]

(

@param nvarchar(max),

@delimiter char(1)

)

returns @t table (val nvarchar(max), seq int)

as

begin

set @param += @delimiter

;with a as

(

select cast(1 as bigint) f, charindex(@delimiter, @param) t, 1 seq

union all

select t + 1, charindex(@delimiter, @param, t + 1), seq + 1

from a

where charindex(@delimiter, @param, t + 1) > 0

)

insert @t

select substring(@param, f, t – f), seq from a

option (maxrecursion 0)

return

end

Thanks Thomas, I have experienced many cases where recursive CTEs performed quite well at low recursion counts, but abysmal at scale. While this is likely also true of the CTE you're replacing, I'd be curious at what size of string and number of elements/delimiters did you test this approach with? What was the magnitude of the difference?

I'm trying to delim on a carriage return by char(13) using Jeff Moden's technique. Doesn't seem to be picking it up. Is there a right way to do this I'm overlooking? Thank you!

Are you sure it's a CHAR(13) and not CHAR(10) or CHAR(13)+CHAR(10)? This works fine for me, except that the 2nd and 3rd rows have a leading whitespace character:

Turns out it works with CHAR(10). Thanks!

Looks like you have a typo in dbo.SplitStrings_Numbers

AND SUBSTRING(@Delimiter + @List, Number, 1) = @Delimiter

should probably be

AND SUBSTRING(@Delimiter + @List, Number, LEN(@Delimiter)) = @Delimiter

Good eyes Mikael! Fixed. I should always test with delimiters > 1 character.

Ahhh… the old Unix/Windows text file chestnut strikes again… I always handle this by checking if the string contains \n characters and then removing all the \r(s)… Then you can quickly split on \n…

The existing recursive CTE is operating with varchar, the one I posted is operating with numeric positions. I did some testing last year in a stackoverflow question. http://stackoverflow.com/questions/17481479/parse-comma-separated-string-to-make-in-list-of-strings-in-the-where-clause/17481595#17481595. In my test it was way faster than the xml-split. Don't forget to read my comment to Alexander Sigachov

This sort of thing makes me yearn for a SQL site akin to the old http://www.xbeat.net/vbspeed/ which proposed code specification with a variety of test and then tracked the performance of all working submissions.

Hi.

These are pretty good split string I have to say. But I cant seem to break this '{(QUESTION_ID:3 QUESTION:Do you offer lessons for physically challenged? ANSWER:Yes ANSWER_ID:1 QUESTION_LBL:Physically Challenged Lessons)},{(QUESTION_ID:4 QUESTION:Do you offer learners lessons? ANSWER:Yes ANSWER_ID:1 QUESTION_LBL:Learners Lessons)},{(QUESTION_ID:5 QUESTION:Do you do forklift driving instructions? ANSWER:No ANSWER_ID:2 QUESTION_LBL:Forklift Instructions)},{(QUESTION_ID:6 QUESTION:Language Preference? ANSWER:Xhosa ANSWER_ID:13,English ANSWER_ID:10 QUESTION_LBL:Language Preference)},{(QUESTION_ID:7 QUESTION:What are the areas you cover? ANSWER:East London, East London Sorroundings QUESTION_LBL:Areas Covered)},{(QUESTION_ID:8 QUESTION:Do you have dual control vehicles? ANSWER:Yes ANSWER_ID:1 QUESTION_LBL:Dual Control Vehicles)},{(QUESTION_ID:9 QUESTION:Do you have female/male instructors? ANSWER:Male ANSWER_ID:17 QUESTION_LBL:Instructors)},{(QUESTION_ID:10 QUESTION:Do you have Manual cars/ Automatic cars? ANSWER:Manual ANSWER_ID:19 QUESTION_LBL:Types of Cars)},{(QUESTION_ID:15 QUESTION:What is the duration of a lesson? ANSWER:1hr to 2hrs QUESTION_LBL:Lesson Duration)},{(QUESTION_ID:16 QUESTION:Do you do pickups and drop offs? ANSWER:Pickup ANSWER_ID:21,Dropoff ANSWER_ID:22 QUESTION_LBL:Pickup/Dropoff)},{(QUESTION_ID:18 QUESTION:Which driving codes do you offer ? ANSWER:Code C (Code 10) ANSWER_ID:55,Code A1 (Code 1) ANSWER_ID:51,Code B (Code 8) ANSWER_ID:53,Code EC1 (Code 14) ANSWER_ID:57 QUESTION_LBL:Code)}'

into columns being QUESTION_ID,QUESTION,ANSWER_ID…..QUESTION_LBL please help

Well first I highly recommend not using split functions against this entire string in the first place. Why even chuck that thing in that format at SQL Server to deal with? Surely if your application can build that string from the data it has, it can instead build a properly structured TVP from a DataTable or some similar collection. If you create a table type like this:

It shouldn't be difficult to create a DataTable that already has the data formulated in that structure. Then you just pass the DataTable directly as a parameter and forget all this string building and deconstructing, which is a lot of extra work and isn't really SQL Server's forte in the first place. See this post for some more info.

In the meantime, can you explain how exactly you want the output (e.g. what do you do with the multiple answer_id values for question 18?).

If the string I'm trying to parse has no separators in it, but rather, is comprised of well-known 4 character codes – is there a recommended method for this type of parsing?

For more detail, I'll have a table of records with these variable-length strings (each string's length, while variable, is a multiple of 4 characters)

Example Input:

Desired Output:

Thanks for the great collection here.

Yes this isn't a big stretch from what I have, in fact the logic in the function is a bit simpler. Assuming you have the same

Numberstable I created above, create this function:CREATE FUNCTION dbo.SplitCodesByLength ( @Codes NVARCHAR(MAX), @Len TINYINT ) RETURNS TABLE WITH SCHEMABINDING AS RETURN ( SELECT TOP (LEN(@Codes)/@Len) Number, Code = SUBSTRING(@Codes,@Len*(Number-1)+1,@Len) FROM dbo.Numbers WHERE Number <= LEN(@Codes)/@Len -- seems redundant, but it isn't ORDER BY Number ); GOSample usage - note that if you want to force order the plan is impacted quite a bit:

HTH,

Aaron

Hi, there seem some bug in the CTE solution, for example if the @List = 'm,,,dede,,,dddd' and @Delimiter = ',,,'

the split result is "m,,,dede" and "dddd"

@victor you are right, the bug is obscure – when the very first element is exactly one character long *AND* the delimiter is greater than one character. I've fixed it.