A few weeks ago, I made a pretty big deal about SQL Server 2016 Service Pack 1. Many features previously reserved for Enterprise Edition were unleashed to lower editions, and I was ecstatic to learn about these changes.

Nonetheless, I'm seeing a few people who are, let's say, a bit less excited than I am.

It's important to keep in mind that the changes here weren't meant to provide complete feature parity across all editions; they were for the specific purpose of creating a more consistent programming surface area. Now customers can use features like In-Memory OLTP, Columnstore, and compression without worrying about the targeted edition(s) – only about how well they will scale. Several security features that didn't really seem to have anything to do with edition are opened up as well. The one I understood the least was Always Encrypted; I couldn't fathom why only Enterprise customers needed to protect things like credit card data. Transparent Data Encryption is still Enterprise-only, on versions earlier than SQL Server 2019, because this isn't really a programmability feature (either it's on or it's not).

So what's really in it for Standard Edition customers?

I think the biggest problem most people have is that max memory in Standard Edition is still limited to 128GB. They look at that and say, "Gee, thanks for all the features, but the memory limit means I can't really use them."

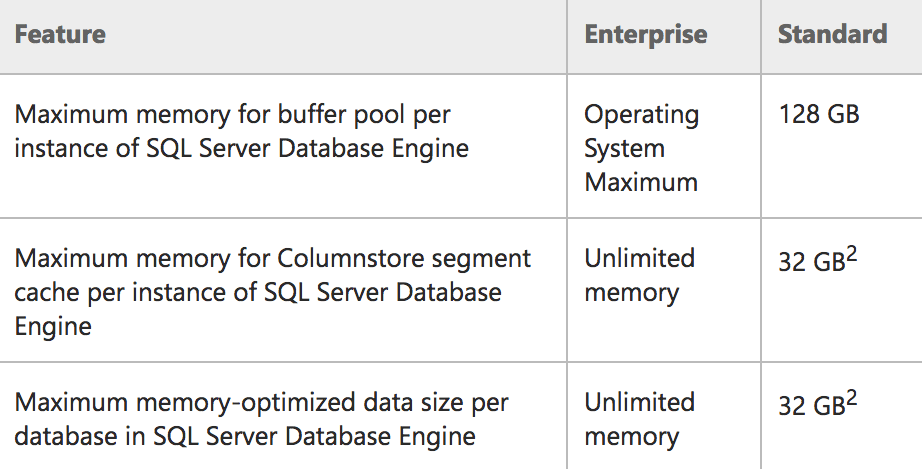

However, the surface area changes bring about performance improvement opportunities, even if that wasn't their original intention (or even if it was – I wasn't in any of those meetings). Let's take a closer look at a small section of the fine print (from the official docs):

Memory limits for Enterprise/Standard in SQL Server 2016 SP1

Memory limits for Enterprise/Standard in SQL Server 2016 SP1

The astute reader will notice that the buffer pool limit wording has changed, from:

To:

This is a better description of what really happens in Standard Edition: a 128GB limit for the buffer pool only, and other memory reservations can be over and above that (think pools like the plan cache). So, in effect, a Standard Edition server could use 128GB of buffer pool, then max server memory could be higher and support more memory used for other reservations. Similarly, Express Edition is now properly documented to use 1.4GB for the buffer pool.

You might also notice some very specific wording in that left-most column (e.g. "per instance" and "per database") for the features that are being exposed in Standard Edition for the first time. To be more specific:

- The instance is limited to 128GB of memory for the buffer pool.

- The instance can have an additional 32GB allocated to Columnstore objects, over and above the buffer pool limit.

- Each user database on the instance can have an additional 32GB allocated to memory-optimized tables, over and above the buffer pool limit.

And to be crystal clear: These memory limits for ColumnStore and In-Memory OLTP are NOT subtracted from the buffer pool limit, as long as the server has more than 128GB of memory available. If the server has less than 128GB, you will see these technologies compete with buffer pool memory, and in fact be limited to a % of max server memory. More details are available in this post from Microsoft's Parikshit Savjani.

I don't have hardware handy to test the extent of this, but if you had a machine with 256GB or 512GB of memory, you could theoretically use it all with a single Standard Edition instance, if you could – for example – spread your In-Memory data across databases in <= 32GB chunks, for a total of 128GB + (32GB * (# of databases)). If you wanted to use ColumnStore instead of In-Memory, you could spread your data across multiple instances, giving you (128GB + 32GB) * (# of instances). And you could combine these strategies for ((128GB + 32GB ColumnStore) * (# of instances)) + (32GB In-Memory * (# of databases * # of instances)).

Whether breaking your data up in this way is practical for your application, I'm not sure; I'm only suggesting it's possible. Some of you might already be doing some of these things to get better usage out of Standard Edition on servers with more than 128GB of memory.

With ColumnStore specifically, in addition to being allowed to use 32GB in addition to the buffer pool, keep in mind that the compression you can get here means you can often fit a lot more into that 32GB limit than you could with the same data in traditional row-store. And if you can't use ColumnStore for whatever reason (or it still won't fit into 32GB), you can now implement traditional page or row compression – this might not allow you to fit your entire database into the 128GB buffer pool, but it might enable more of your data to be in memory at any given time.

Similar things are possible in Express (at a lower scale), where you can have 1.4GB for buffer pool, but an additional ~352MB per instance for ColumnStore, and ~352MB per database for In-Memory OLTP.

But Enterprise Edition still has lots of upside

There are many other differentiators to keep interest in Enterprise Edition, aside from unlimited memory limits all around – from online rebuilds and merry-go-round scans to full-on Availability Groups and all the virtualization rights you can shake a stick at. Even ColumnStore indexes have well-defined performance enhancements reserved for Enterprise Edition.

So just because there are some techniques that will allow you to get more out of Standard Edition, that does not mean it will magically scale to meet your performance needs. Like my other posts about "doing it on a budget" (e.g. partitioning and readable secondaries), you can certainly spend time and effort kludging together a solution, but it will only get you so far. The point of this post was simply to demonstrate that you can get farther with Standard Edition in 2016 SP1 than you ever could before.

Very nice post, Aaron!

Some MVPs and other SQL Server bloggers do seem either confused or disappointed that SQL Server 2016 Standard Edition is not 100% the same as SQL Server 2016 Enterprise Edition after the SP1 changes. I would really like for Microsoft to raise or eliminate the buffer pool memory limit in SQL Server vNext, and I think that there should be parity for security features, but beyond that, I am very happy with the SP1 feature changes.

Enterprise Edition does have so much added value, from a number of different perspectives. Hopefully, Microsoft (and other bloggers) will help get more information out there to make this even more clear. I know I am going to try to help make that case.

Nice post, Aaron.

When the SP1 was released, the limit for ColumnStore and In-Memory stated that it was one quarter of the memory up to 32GB. Reading your post and the one from the Tiger Team, it seems that the 32GB is available even if the max memory for the instance is limited to 32GB. Am I right ?

Sorry, you're right, I was talking specifically about the case where you have more than 128GB of memory (after all, people who are complaining about the 128GB limit aren't running on machines that have 32GB installed).

Excellent post —

Since In-Memory OLTP also supports columnstore, it's not clear to me if the in-mem columnstore counts towards the per-db in-mem limit, or the per-instance columnstore limit.

Great question Ned! I actually don't know (and as I mentioned, I would test all this stuff, if someone gave me a lab full of capable hardware). If I had to guess, I would say that it would count against both.

My current system also doesn't have enough memory to test this, so I've contacted Microsoft for clarity. Will report back here if/when I hear from them.

Aaron, I received the following from Microsoft:

"Columnstore on memory-optimized tables counts towards per-DB cap.

The Columnstore-related cap is for the cache size used with Columnstore segments for disk-based tables, which are not memory-resident.

Columnstore indexes on memory-optimized tables are memory-resident, and their size counts toward the memory-optimized data cap, which is per-DB."

What about compression? From what i understand, the compressed pages are also compressed in Ram. If you compress your data, you could have more data in buffer pool than before? Isn't the data decompressed when sent to output buffer? If thats the case, you will have a "bigger" buffer pool depending on compression ratio.

Right, if you go back to the article you'll see I mention that you can get some level of memory relief now even if you aren't going to use these newer features:

"And if you can't use ColumnStore for whatever reason (or it still won't fit into 32GB), you can now implement traditional page or row compression – this might not allow you to fit your entire database into the 128GB buffer pool, but it might enable more of your data to be in memory at any given time."

As for your further question, from the very beginning of the feature, Data Compression: Strategy, Capacity Planning and Best Practices has said:

"There is no in-memory, decompressed copy of the compressed page."

The same document explains that data is only decompressed when it is "read for filtering, sorting, joining, as part of a query response" or "updated by an application."

Compression ratio can certainly have an impact on your buffer pool size, but better compression ratios can't lead to worse numbers there.

In fact, if the buffer pool contained decompressed copies of the data, that would largely defeat the purpose of compression in the first place (which isn't just about saving disk space).

How do buffer pool extensions factor into the new SQL 2016 SP1 Standard Edition buffer pool memory limits?

H John, sorry about the delay. No changes here. Buffer Pool Extensions can still use up to 4x BP in Standard Edition and 32x BP in Enterprise Edition.

If interested please help bump standard to be modernized to support more cores/ram… it should really be modernized every 4-6 years.

https://connect.microsoft.com/SQLServer/feedback/details/3135172/increase-ram-memory-limit-in-sql-2014-2016-standard-to-256gb-and-core-support