Virtualization is very popular for organizations: it allows them to better utilize hardware by combining multiple servers onto a single host, provides HA capabilities, and gives a reduction in various costs like heating/cooling, SQL Server licenses, and hardware. I’ve been involved in numerous projects with organizations to help them migrate from physical to virtual environments and have helped them experience these benefits.

What I want to share with you in this article is a peculiar issue I came across while working with Hyper-V on Windows Server 2012 R2 using Dynamic Memory. I must admit that most of my knowledge of virtualization has been with VMware, however that’s changing now.

When working with SQL Server on VMware I always recommend to set reservations for memory so when I encountered this Dynamic Memory feature with Hyper-V I had to do some research. I found an article (Hyper-V Dynamic Memory Configuration Guide) that explains many of the benefits and system requirements for using Dynamic Memory. This feature is pretty cool in how you can provide a virtual machine with more or less memory without it having to be powered off.

Playing around with Hyper-V I’ve found provisioning virtual machines to be straightforward and easy to learn. With little effort I was able to build a lab environment to simulate the experience my customer was having. Credit goes to my boss for providing me with awesome hardware to work with. I am running a Dell M6800 with an i7 processor, 32GB of RAM and two 1TB SSDs. This beast is better than a lot of servers I have worked on.

Using VMware Workstation 11 on my laptop, I created a Windows Server 2012 R2 guest with 4 vCPUs, 24GB of RAM and 100GB of storage. Once the guest was created and patched I added the Hyper-V role and provisioned a guest under Hyper-V. The new guest was built with Windows Server 2012 R2 with 2 vCPUs, 22GB of RAM and 60GB of storage running SQL Server 2014 RTM.

I ran three sets of tests, each using dynamic memory. For each test I used Red Gate's SQL Data Generator against the AdventureWorks2014 database to fill up the buffer pool. For the first test I started with 512MB for the Startup RAM value since that is the minimum amount of memory to start Windows Server 2012 R2 and the buffer pool stopped increasing at around 8GB.

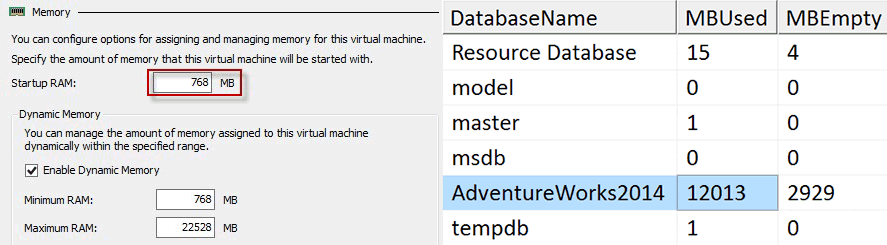

For each test I would truncate my test table, shut down the guest, modify the memory settings and start the guest back up. For the second test I increased the Startup RAM to 768MB and the buffer pool only increased to just over 12GB in size.

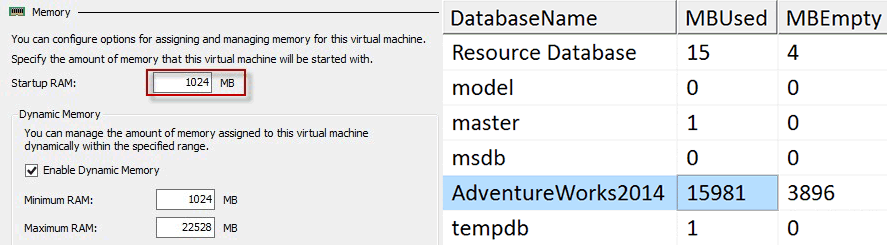

For the third and final test increased the Startup RAM to 1024MB, ran the data generator and the buffer pool was able to increase to just under 16GB.

Doing a little math on these values shows that the buffer pool can’t grow more than 16 times the Startup RAM. This can be very problematic for SQL Server if the Startup RAM is less than 1/16 the size of the maximum memory. Let’s think about a Hyper-V guest with 64GB of RAM running SQL Server with a Startup RAM value of 1GB. We’ve observed that the buffer pool would not be able to use more than 16GB with this configuration, but if we set the Startup RAM value to 4096MB then the buffer pool would be able to increase 16 times allowing us to use all 64GB.

The only references I could find about why the buffer pool is limited to 16 times the Startup RAM value were on pages 8 and 16 in the whitepaper, Best Practices for Running SQL Server with HVDM. This document explains that since the buffer cache value is computed at startup time, it is a static value and doesn’t grow. However if SQL Server detects that Hot Add Memory is supported then it increases the size reserved for the virtual address space for the buffer pool by 16 times the startup memory. This document also states that this behavior affects SQL Server 2008 R2 and earlier, however my test were conducted on Windows Server 2012 R2 with SQL Server 2014 so I will be contacting Microsoft to get the best practices document updated.

Since most production DBAs do not provision virtual machines or work heavily in that space, and virtualization engineers are not studying the latest and greatest SQL Server technology, I can understand how this important information about how the buffer pool handles Dynamic Memory is unknown to a lot of people.

Even following the articles can be misleading. In the article Hyper-V Dynamic Memory Configuration Guide, the description for Startup RAM reads:

Optimal memory utilization for whom, the host or the guest? If a virtualization admin was reading this, they would likely determine that it means the minimum memory allowed to start the operating system.

Being responsible for SQL Server means we need to know about other technologies that can influence our environment. With the introduction of SANs and virtualization we need to fully understand how things in those environments can negatively impact SQL Server and, more importantly, how to effectively communicate concerns to the people responsible for those systems. A DBA doesn’t necessarily need to know how to provision storage in a SAN or how to provision or be able to administer a VMWare or Hyper-V environment, but they should know the basics of how things work.

By knowing basics about how a SAN works with storage arrays, storage networks, multi-pathing and so on, as well as how the hypervisor works with the scheduling of CPUs and storage allocation within virtualization, a DBA can better communicate and troubleshoot when issues arise. Over the years I have successfully worked with a number of SAN and virtualization admins to build standards for SQL Server. These standards are unique to SQL Server and don’t necessarily apply to web or application servers.

DBAs can’t really rely on SAN and virtualization admins to fully understand best practices for SQL Server, regardless of how nice that would be, so we need to educate ourselves the best we can on how their areas of expertise can impact us.

During my testing I used a query from Paul Randal's blog post, Performance issues from wasted buffer pool memory, to determine how much buffer pool the AdventureWorks2014 database was using. I have included the code below:

SELECT

(CASE WHEN ([database_id] = 32767)

THEN N'Resource Database'

ELSE DB_NAME ([database_id]) END) AS [DatabaseName],

COUNT (*) * 8 / 1024 AS [MBUsed],

SUM (CAST ([free_space_in_bytes] AS BIGINT)) / (1024 * 1024) AS [MBEmpty]

FROM sys.dm_os_buffer_descriptors

GROUP BY [database_id];

This code is also great for troubleshooting which of your databases is consuming the majority of your buffer pool so you can know which database you should focus on tuning the high-cost queries. If you are a Hyper-V shop, check with your admin to see if Dynamic Memory could be configured in such a way that it is negatively impacting your server.

We are using SQL 2014 with dynamic memory enabled. 4096 MB startup RAM, 128 GB max RAM.

SQL Server uses something in the region of 90 GB, which seems to suggest that it is able to use more than the 16*Startup RAM value.

Please keep us updated on what Microsoft has to say about this for an official stamp on it :)

That issue sounds familiar Tim… Great post by the way! ;-)

Thank you for your wonderfull article.

Thanks for this post. I'm going to invert your statement in future conversations with my peers and to remind myself… "Network architects, SAN and virtualization admins can’t really rely on DBAs, engineers, researchers, accountants, marketers, product designers, etc. to fully understand best practices for computing and storage resource allocation, regardless of how nice that would be, so we need to educate ourselves the best we can so as to align our practices with relevant consideration given to the requirements of their roles and the tools and procedures they utilize."

We're a two man shop doing the work of what should be at least five. I don't think we can call ourselves DBAs, even though I do a fair bit of stored procedure and report mods; my associate is quite adept at running interference against the C-levels while I train myself on the fly to "git'r dun." I've always had this bit of concern gnawing on the edge of my consciousness with nearly every task I undertake on the IT management and maintenance side of things, unable to clearly identify what it is, so unable to quantify it or make it go away. Your statement here has concisely put in black and white that concern.

Nearly forty years of IT experience (and many of it the best kind in my opinion: supporting VARs as customers, then supporting users and systems in many verticals in the employ of a couple of those VARs) can't prepare me for everything, although I'm amused internally how often things don't change, only the speeds and feeds.. I've got a decent grasp on what SQL server CAN do vis-à-vis reliability, performance, and integrity. Many things I've thought would be common sense approach, I've been relieved to find that's just how it is implemented. I'm also not fooling myself into thinking I've scratched the surface of SQL's internals.

Anyways, the short of the long is, I recognize a gem of relevant knowledge when I see one, and today I've uncovered a shiny one in the experience you shared here as well as a bit of clarity that will go a long way toward aligning what I do each day with my user base. Thank you, sir.

In the interest of reciprocating knowledge: from what I (know; have been told; heard somewhere; picked up along my travels), and this is going back to the days before virtualization, SQL Server loves memory, and best practice is to give your server an amount memory at least equal to the size of your databases plus operating requirements. I'm not a database pro, but that one thing makes a lot of sense to me, so when I configure a virtual machine for the SQL role it is never going to have a startup amount of 512MB or even 4096MB. It is likely going to be a startup amount of whatever the current DB size is plus at least 4096MB, and expandable to a reasonable limit given the host's workload and resources. Following this practice hopefully I won't run into this issue. This gem of research you've shared definitely provides a solid reason for continuing this practice and adds another tangible benefit to doing so. Thanks, again, and hope this helps!