People wonder whether they should do their best to prevent exceptions, or just let the system handle them. I've seen several discussions where folks debate whether they should do whatever they can to prevent an exception, because error handling is "expensive." There is no doubt that error handling isn't free, but I would predict that a constraint violation is at least as efficient as checking for a potential violation first. This may be different for a key violation than a static constraint violation, for example, but in this post I'm going to focus on the former.

The primary approaches people use to deal with exceptions are:

- Just let the engine handle it, and bubble any exception back to the caller.

- Use

BEGIN TRANSACTIONandROLLBACKif@@ERROR <> 0. - Use

TRY/CATCHwithROLLBACKin theCATCHblock (SQL Server 2005+).

And many take the approach that they should check if they're going to incur the violation first, since it seems cleaner to handle the duplicate yourself than to force the engine to do it. My theory is that you should trust but verify; for example, consider this approach (mostly pseudo-code):

IF NOT EXISTS ([row that would incur a violation])

BEGIN

BEGIN TRY

BEGIN TRANSACTION;

INSERT ()...

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

-- well, we incurred a violation anyway;

-- I guess a new row was inserted or

-- updated since we performed the check

ROLLBACK TRANSACTION;

END CATCH

END

We know that the IF NOT EXISTS check does not guarantee that someone else won't have inserted the row by the time we get to the INSERT (unless we place aggressive locks on the table and/or use SERIALIZABLE), but the outer check does prevent us from trying to commit a failure and then having to roll back. We stay out of the entire TRY/CATCH structure if we already know that the INSERT will fail, and it would be logical to assume that – at least in some cases – this will be more efficient than entering the TRY/CATCH structure unconditionally. This makes little sense in a single INSERT scenario, but imagine a case where there is more going on in that TRY block (and more potential violations that you could check for in advance, meaning even more work that you might otherwise have to perform and then roll back should a later violation occur).

Now, it would be interesting to see what would happen if you used a non-default isolation level (something I'll treat in a future post), particularly with concurrency. For this post, though, I wanted to start slowly, and test these aspects with a single user. I created a table called dbo.[Objects], a very simplistic table:

CREATE TABLE dbo.[Objects]

(

ObjectID INT IDENTITY(1,1),

Name NVARCHAR(255) PRIMARY KEY

);

GO

I wanted to populate this table with 100,000 rows of sample data. To make the values in the name column unique (since the PK is the constraint I wanted to violate), I created a helper function that takes a number of rows and a minimum string. The minimum string would be used to make sure that either (a) the set started off beyond the maximum value in the Objects table, or (b) the set started at the minimum value in the Objects table. (I will specify these manually during the tests, verified simply by inspecting the data, though I probably could have built that check into the function.)

CREATE FUNCTION dbo.GenerateRows(@n INT, @minString NVARCHAR(32))

RETURNS TABLE

AS

RETURN

(

SELECT TOP (@n) name = name + '_' + RTRIM(rn)

FROM

(

SELECT a.name, rn = ROW_NUMBER() OVER

(PARTITION BY a.name ORDER BY a.name)

FROM sys.all_objects AS a

CROSS JOIN sys.all_objects AS b

WHERE a.name >= @minString

AND b.name >= @minString

) AS x

);

GO

This applies a CROSS JOIN of sys.all_objects onto itself, appending a unique row_number to each name, so the first 10 results would look like this:

Populating the table with 100,000 rows was simple:

INSERT dbo.[Objects](name)

SELECT name FROM dbo.GenerateRows(100000, N'')

ORDER BY name;

GO

Now, since we are going to be inserting new unique values into the table, I created a procedure to perform some cleanup at the beginning and end of each test – in addition to deleting any new rows we've added, it will also clean up the cache and buffers. Not something you want to code into a procedure on your production system, of course, but quite fine for local performance testing.

CREATE PROCEDURE dbo.EH_Cleanup

-- P.S. "EH" stands for Error Handling, not "Eh?"

AS

BEGIN

SET NOCOUNT ON;

DELETE dbo.[Objects] WHERE ObjectID > 100000;

DBCC FREEPROCCACHE;

DBCC DROPCLEANBUFFERS;

END

GO

I also created a log table to keep track of the start and end times for each test:

CREATE TABLE dbo.RunTimeLog

(

LogID INT IDENTITY(1,1),

Spid INT,

InsertType VARCHAR(255),

ErrorHandlingMethod VARCHAR(255),

StartDate DATETIME2(7) NOT NULL DEFAULT SYSUTCDATETIME(),

EndDate DATETIME2(7)

);

GO

Finally, the testing stored procedure handles a variety of things. We have three different error handling methods, as described in the bullets above: "JustInsert", "Rollback", and "TryCatch"; we also have three different insert types: (1) all inserts succeed (all rows are unique), (2) all inserts fail (all rows are duplicates), and (3) half inserts succeed (half the rows are unique, and half the rows are duplicates). Coupled with this are two different approaches: check for the violation before attempting the insert, or just go ahead and let the engine determine if it is valid. I thought this would give a good comparison of the different error handling techniques combined with different likelihoods of collisions to see whether a high or low collision percentage would significantly impact the results.

For these tests I picked 40,000 rows as my total number of insert attempts, and in the procedure I perform a union of 20,000 unique or non-unique rows with 20,000 other unique or non-unique rows. You can see that I hard-coded the cutoff strings in the procedure; please note that on your system these cutoffs will almost certainly occur in a different place.

CREATE PROCEDURE dbo.EH_Insert

@ErrorHandlingMethod VARCHAR(255),

@InsertType VARCHAR(255),

@RowSplit INT = 20000

AS

BEGIN

SET NOCOUNT ON;

-- clean up any new rows and drop buffers/clear proc cache

EXEC dbo.EH_Cleanup;

DECLARE

@CutoffString1 NVARCHAR(255),

@CutoffString2 NVARCHAR(255),

@Name NVARCHAR(255),

@Continue BIT = 1,

@LogID INT;

-- generate a new log entry

INSERT dbo.RunTimeLog(Spid, InsertType, ErrorHandlingMethod)

SELECT @@SPID, @InsertType, @ErrorHandlingMethod;

SET @LogID = SCOPE_IDENTITY();

-- if we want everything to succeed, we need a set of data

-- that has 40,000 rows that are all unique. So union two

-- sets that are each >= 20,000 rows apart, and don't

-- already exist in the base table:

IF @InsertType = 'AllSuccess'

SELECT @CutoffString1 = N'database_audit_specifications_1000',

@CutoffString2 = N'dm_clr_properties_1398';

-- if we want them all to fail, then it's easy, we can just

-- union two sets that start at the same place as the initial

-- population:

IF @InsertType = 'AllFail'

SELECT @CutoffString1 = N'', @CutoffString2 = N'';

-- and if we want half to succeed, we need 20,000 unique

-- values, and 20,000 duplicates:

IF @InsertType = 'HalfSuccess'

SELECT @CutoffString1 = N'database_audit_specifications_1000',

@CutoffString2 = N'';

DECLARE c CURSOR

LOCAL STATIC FORWARD_ONLY READ_ONLY

FOR

SELECT name FROM dbo.GenerateRows(@RowSplit, @CutoffString1)

UNION ALL

SELECT name FROM dbo.GenerateRows(@RowSplit, @CutoffString2);

OPEN c;

FETCH NEXT FROM c INTO @Name;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @Continue = 1;

-- let's only enter the primary code block if we

-- have to check and the check comes back empty

-- (in other words, don't try at all if we have

-- a duplicate, but only check for a duplicate

-- in certain cases:

IF @ErrorHandlingMethod LIKE 'Check%'

BEGIN

IF EXISTS (SELECT 1 FROM dbo.[Objects] WHERE Name = @Name)

SET @Continue = 0;

END

IF @Continue = 1

BEGIN

-- just let the engine catch

IF @ErrorHandlingMethod LIKE '%Insert'

BEGIN

INSERT dbo.[Objects](name) SELECT @name;

END

-- begin a transaction, but let the engine catch

IF @ErrorHandlingMethod LIKE '%Rollback'

BEGIN

BEGIN TRANSACTION;

INSERT dbo.[Objects](name) SELECT @name;

IF @@ERROR <> 0

BEGIN

ROLLBACK TRANSACTION;

END

ELSE

BEGIN

COMMIT TRANSACTION;

END

END

-- use try / catch

IF @ErrorHandlingMethod LIKE '%TryCatch'

BEGIN

BEGIN TRY

BEGIN TRANSACTION;

INSERT dbo.[Objects](name) SELECT @Name;

COMMIT TRANSACTION;

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION;

END CATCH

END

END

FETCH NEXT FROM c INTO @Name;

END

CLOSE c;

DEALLOCATE c;

-- update the log entry

UPDATE dbo.RunTimeLog SET EndDate = SYSUTCDATETIME()

WHERE LogID = @LogID;

-- clean up any new rows and drop buffers/clear proc cache

EXEC dbo.EH_Cleanup;

END

GO

Now we can call this procedure with various arguments to get the different behavior we're after, trying to insert 40,000 values (and knowing, of course, how many should succeed or fail in each case). For each 'error handling method' (just try the insert, use begin tran/rollback, or try/catch) and each insert type (all succeed, half succeed, and none succeed), combined with whether or not to check for the violation first, this gives us 18 combinations:

EXEC dbo.EH_Insert 'JustInsert', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'JustInsert', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'JustInsert', 'AllFail', 20000;

EXEC dbo.EH_Insert 'JustTryCatch', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'JustTryCatch', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'JustTryCatch', 'AllFail', 20000;

EXEC dbo.EH_Insert 'JustRollback', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'JustRollback', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'JustRollback', 'AllFail', 20000;

EXEC dbo.EH_Insert 'CheckInsert', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'CheckInsert', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'CheckInsert', 'AllFail', 20000;

EXEC dbo.EH_Insert 'CheckTryCatch', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'CheckTryCatch', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'CheckTryCatch', 'AllFail', 20000;

EXEC dbo.EH_Insert 'CheckRollback', 'AllSuccess', 20000;

EXEC dbo.EH_Insert 'CheckRollback', 'HalfSuccess', 20000;

EXEC dbo.EH_Insert 'CheckRollback', 'AllFail', 20000;

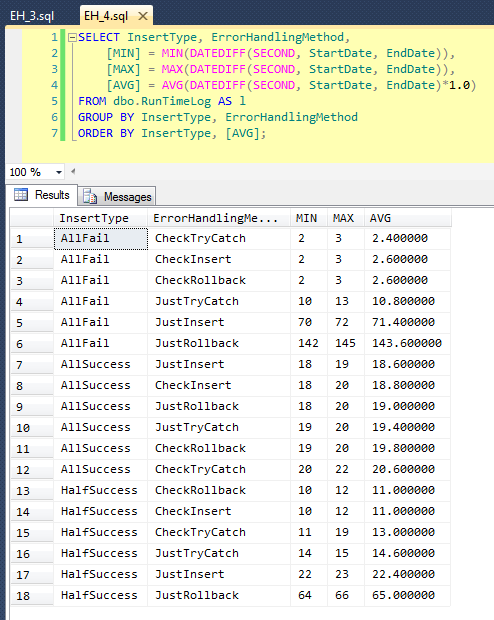

After we've run this (it takes about 8 minutes on my system), we've got some results in our log. I ran the entire batch five times to make sure we got decent averages and to smooth out any anomalies. Here are the results:

The graph that plots all of the durations at once shows a couple of serious outliers:

You can see that, in cases where we expect a high rate of failure (in this test, 100%), beginning a transaction and rolling back is by far the least attractive approach (3.59 milliseconds per attempt), while just letting the engine raise an error is about half as bad (1.785 milliseconds per attempt). The next worst performer was the case where we begin a transaction then roll it back, in a scenario where we expect about half of the attempts to fail (averaging 1.625 milliseconds per attempt). The 9 cases on the left side of the graph, where we are checking for the violation first, did not venture above 0.515 milliseconds per attempt.

Having said that, the individual graphs for each scenario (high % of success, high % of failure, and 50-50) really drive home the impact of each method.

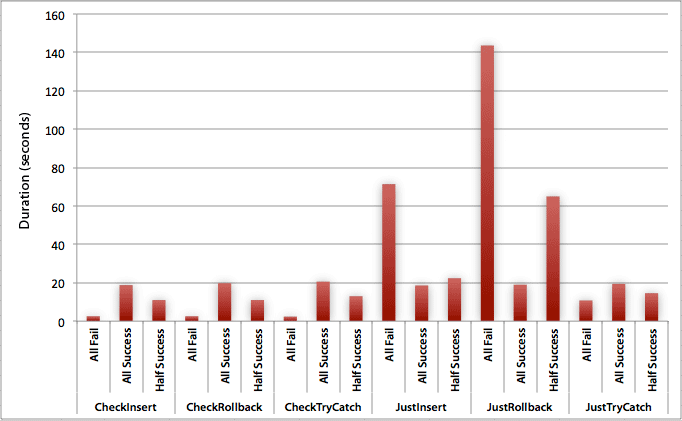

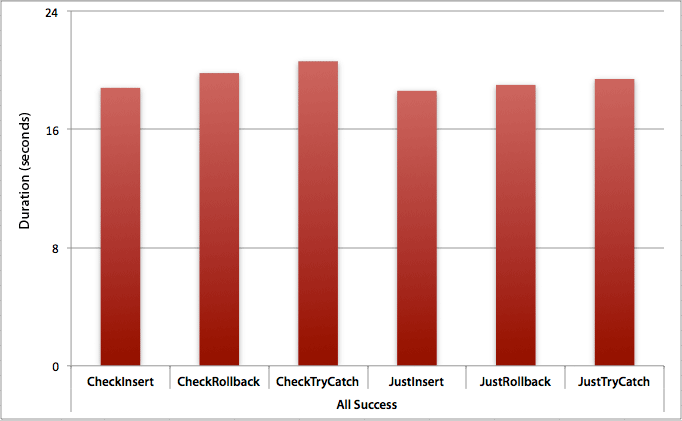

Where all the inserts succeed

In this case we see that the overhead of checking for the violation first is negligible, with an average difference of 0.7 seconds across the batch (or 125 microseconds per insert attempt):

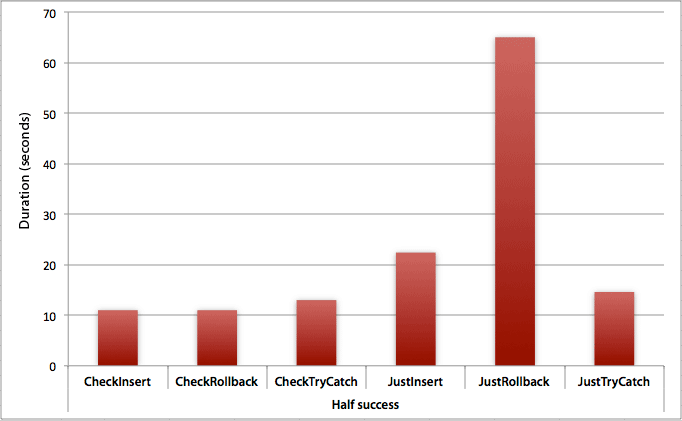

Where only half the inserts succeed

When half the inserts fail, we see a big jump in the duration for the insert / rollback methods. The scenario where we start a transaction and roll it back is about 6x slower across the batch when compared to checking first (1.625 milliseconds per attempt vs. 0.275 milliseconds per attempt). Even the TRY/CATCH method is 11% faster when we check first:

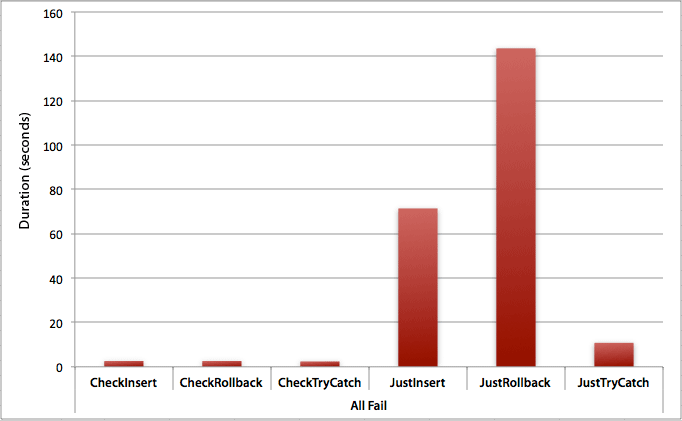

Where all the inserts fail

As you might expect, this shows the most pronounced impact of error handling, and the most obvious benefits of checking first. The rollback method is nearly 70x slower in this case when we don't check compared to when we do (3.59 milliseconds per attempt vs. 0.065 milliseconds per attempt):

What does this tell us? If we think we are going to have a high rate of failure, or have no idea what our potential failure rate will be, then checking first to avoid violations in the engine is going to be tremendously worth our while. Even in the case where we have a successful insert every time, the cost of checking first is marginal and easily justified by the potential cost of handling errors later (unless your anticipated failure rate is exactly 0%).

So for now I think I will stick to my theory that, in simple cases, it makes sense to check for a potential violation before telling SQL Server to go ahead and insert anyway. In a future post, I will look at the performance impact of various isolation levels, concurrency, and maybe even a few other error handling techniques.

[As an aside, I wrote a condensed version of this post as a tip for mssqltips.com back in February.]

Nice post Aaron. This is something I had never considered in the past – the performance implications of different error handling methods. We usually let the engine handle the error just because we are always in a hurry and that is the least amount of code. Recently I've been experimenting with try / catch but hadn't considered the performance implications. Thanks for taking the time to provide this additional perspective!

Very instructive post.

You might want to add to EH_Cleanup a

CHECKPOINT. Also, I'd use milliseconds to measure time. Using seconds will just cut off the ms information, vastly reducing precision.Thanks Tobi. I considered milliseconds but the discrepancies were large enough that the graphs would not have looked any different – they would just contain a wider legend. :-)

Nice Post

Nice Post – thanks.

Gives me the evidence I need without having to spend a load of time proving it myself.

many thanks for sharing your good work.

:)

How to get black screen in sql 2012

https://blogs.sentryone.com/aaronbertrand/making-ssms-pretty-my-dark-theme/

It would be nice if you used a consistent scale on your bar graphs.

I'll take it into consideration, thanks. Of course the problem with scaling all graphs in an article to the max value in any one graph, is that it can really drown out differences in all the other graphs. Sometimes you want to use a different scale to show either (a) there is very little difference (2nd chart) or (b) there is a big difference (3rd chart). If the 2nd chart were scaled to 160, it would just be a whole bunch of empty space, but if the 3rd chart were scaled to 160, the difference in duration would be much less pronounced (less than half). Can you point to any examples where all the charts use the same scale *and* each chart has significantly different max values?