I see a lot of advice out there that says something along the lines of, "Change your cursor to a set-based operation; that will make it faster." While that can often be the case, it's not always true. One use case I see where a cursor repeatedly outperforms the typical set-based approach is the calculation of running totals. This is because the set-based approach usually has to look at some portion of the underlying data more than one time, which can be an exponentially bad thing as the data gets larger; whereas a cursor – as painful as it might sound – can step through each row/value exactly once.

These are our basic options in most common versions of SQL Server. In SQL Server 2012, however, there have been several enhancements made to windowing functions and the OVER clause, mostly stemming from several great suggestions submitted by fellow MVP Itzik Ben-Gan (here is one of his suggestions). In fact Itzik has a new MS-Press book that covers all of these enhancements in much greater detail, entitled, "Microsoft SQL Server 2012 High-Performance T-SQL Using Window Functions."

So naturally, I was curious; would the new windowing functionality make the cursor and self-join techniques obsolete? Would they be easier to code? Would they be faster in any (never mind all) cases? What other approaches might be valid?

The Setup

To do some testing, let's set up a database:

USE [master];

GO

IF DB_ID('RunningTotals') IS NOT NULL

BEGIN

ALTER DATABASE RunningTotals SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE RunningTotals;

END

GO

CREATE DATABASE RunningTotals;

GO

USE RunningTotals;

GO

SET NOCOUNT ON;

GO

And then fill a table with 10,000 rows that we can use to perform some running totals against. Nothing too complicated, just a summary table with a row for each date and a number representing how many speeding tickets were issued. I haven't had a speeding ticket in a couple of years, so I don't know why this was my subconscious choice for a simplistic data model, but there it is.

CREATE TABLE dbo.SpeedingTickets

(

[Date] DATE NOT NULL,

TicketCount INT

);

GO

ALTER TABLE dbo.SpeedingTickets ADD CONSTRAINT pk PRIMARY KEY CLUSTERED ([Date]);

GO

;WITH x(d,h) AS

(

SELECT TOP (250)

ROW_NUMBER() OVER (ORDER BY [object_id]),

CONVERT(INT, RIGHT([object_id], 2))

FROM sys.all_objects

ORDER BY [object_id]

)

INSERT dbo.SpeedingTickets([Date], TicketCount)

SELECT TOP (10000)

d = DATEADD(DAY, x2.d + ((x.d-1)*250), '19831231'),

x2.h

FROM x CROSS JOIN x AS x2

ORDER BY d;

GO

SELECT [Date], TicketCount

FROM dbo.SpeedingTickets

ORDER BY [Date];

GO

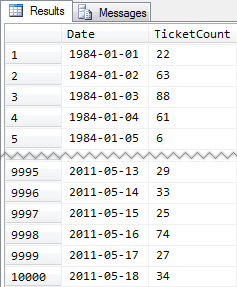

Abridged results:

So again, 10,000 rows of pretty simple data – small INT values and a series of dates from 1984 through May of 2011.

The Approaches

Now my assignment is relatively simple and typical of many applications: return a resultset that has all 10,000 dates, along with the cumulative total of all speeding tickets up to and including that date. Most people would first try something like this (we'll call this the "inner join" method):

SELECT

st1.[Date],

st1.TicketCount,

RunningTotal = SUM(st2.TicketCount)

FROM

dbo.SpeedingTickets AS st1

INNER JOIN

dbo.SpeedingTickets AS st2

ON st2.[Date] <= st1.[Date]

GROUP BY st1.[Date], st1.TicketCount

ORDER BY st1.[Date];

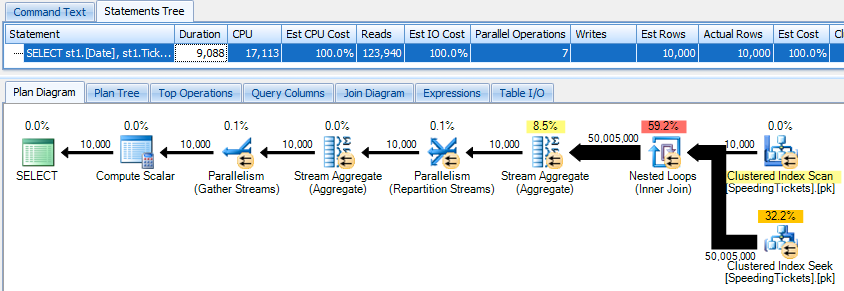

...and be shocked to discover that it takes nearly 10 seconds to run. Let's quickly examine why by viewing the graphical execution plan, using SQL Sentry Plan Explorer:

The big fat arrows should give an immediate indication of what is going on: the nested loop reads one row for the first aggregation, two rows for the second, three rows for the third, and on and on through the entire set of 10,000 rows. This means we should see roughly ((10000 * (10000 + 1)) / 2) rows processed once the entire set is traversed, and that seems to match with the number of rows shown in the plan.

Note that running the query without parallelism (using the OPTION (MAXDOP 1) query hint) makes the plan shape a little simpler, but does not help at all in either execution time or I/O; as shown in the plan, duration actually almost doubles, and reads only decrease by a very small percentage. Comparing to the previous plan:

There are plenty of other approaches that people have tried to get efficient running totals. One example is the "subquery method" which just uses a correlated subquery in much the same way as the inner join method described above:

SELECT

[Date],

TicketCount,

RunningTotal = TicketCount + COALESCE(

(

SELECT SUM(TicketCount)

FROM dbo.SpeedingTickets AS s

WHERE s.[Date] < o.[Date]), 0

)

FROM dbo.SpeedingTickets AS o

ORDER BY [Date];

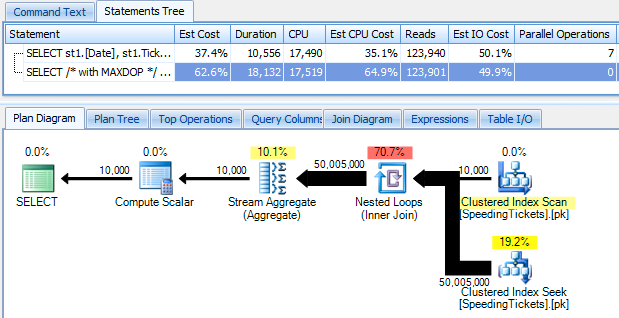

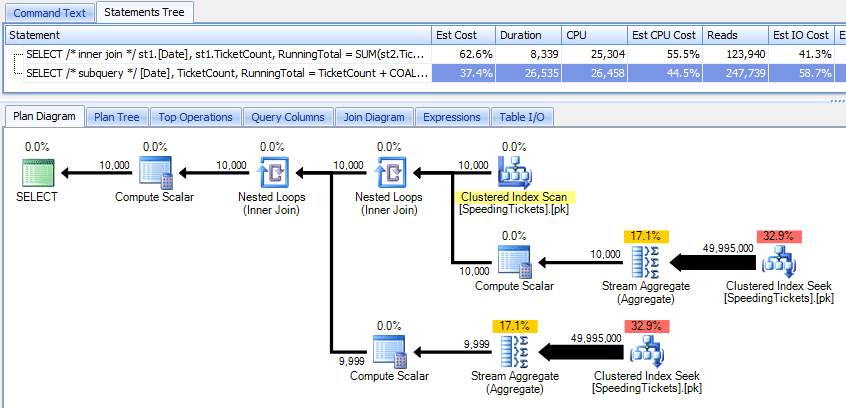

Comparing those two plans:

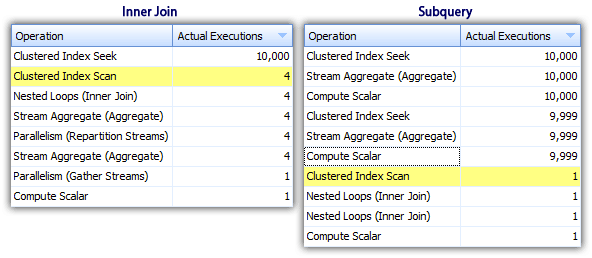

So while the subquery method appears to have a more efficient overall plan, it is worse where it matters: duration and I/O. We can see what contributes to this by digging into the plans a little deeper. By moving to the Top Operations tab, we can see that in the inner join method, the clustered index seek is executed 10,000 times, and all other operations are only executed a few times. However, several operations are executed 9,999 or 10,000 times in the subquery method:

So, the subquery approach seems to be worse, not better. The next method we'll try, I'll call the "quirky update" method. This is not exactly guaranteed to work, and I would never recommend it for production code, but I'm including it for completeness. Basically the quirky update takes advantage of the fact that during an update you can redirect assignment and math so that the variable increments behind the scenes as each row is updated.

DECLARE @st TABLE

(

[Date] DATE PRIMARY KEY,

TicketCount INT,

RunningTotal INT

);

DECLARE @RunningTotal INT = 0;

INSERT @st([Date], TicketCount, RunningTotal)

SELECT [Date], TicketCount, RunningTotal = 0

FROM dbo.SpeedingTickets

ORDER BY [Date];

UPDATE @st

SET @RunningTotal = RunningTotal = @RunningTotal + TicketCount

FROM @st;

SELECT [Date], TicketCount, RunningTotal

FROM @st

ORDER BY [Date];

I'll re-state that I don't believe this approach is safe for production, regardless of the testimony you'll hear from people indicating that it "never fails." Unless behavior is documented and guaranteed, I try to stay away from assumptions based on observed behavior. You never know when some change to the optimizer's decision path (based on a statistics change, data change, service pack, trace flag, query hint, what have you) will drastically alter the plan and potentially lead to a different order. If you really like this unintuitive approach, you can make yourself feel a little better by using the query option FORCE ORDER (and this will try to use an ordered scan of the PK, since that's the only eligible index on the table variable):

UPDATE @st

SET @RunningTotal = RunningTotal = @RunningTotal + TicketCount

FROM @st

OPTION (FORCE ORDER);

For a little more confidence at a slightly higher I/O cost, you can bring the original table back into play, and ensure that the PK on the base table is used:

UPDATE st

SET @RunningTotal = st.RunningTotal = @RunningTotal + t.TicketCount

FROM dbo.SpeedingTickets AS t WITH (INDEX = pk)

INNER JOIN @st AS st

ON t.[Date] = st.[Date]

OPTION (FORCE ORDER);

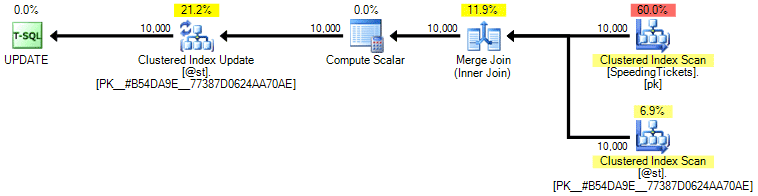

Personally I don't think it's that much more guaranteed, since the SET part of the operation could potentially influence the optimizer independent of the rest of the query. Again, I'm not recommending this approach, I'm just including the comparison for completeness. Here is the plan from this query:

Based on the number of executions we see in the Top Operations tab (I'll spare you the screen shot; it's 1 for every operation), it is clear that even if we perform a join in order to feel better about ordering, the quirky update allows the running totals to be calculated in a single pass of the data. Comparing it to the previous queries, it is much more efficient, even though it first dumps data into a table variable and is separated out into multiple operations:

This brings us to a "recursive CTE" method. This method uses the date value, and relies on the assumption that there are no gaps. Since we populated this data above, we know that it is a fully contiguous series, but in a lot of scenarios you can't make that assumption. So, while I've included it for completeness, this approach isn't always going to be valid. In any case, this uses a recursive CTE with the first (known) date in the table as the anchor, and the recursive portion determined by adding one day (adding the MAXRECURSION option since we know exactly how many rows we have):

;WITH x AS

(

SELECT [Date], TicketCount, RunningTotal = TicketCount

FROM dbo.SpeedingTickets

WHERE [Date] = '19840101'

UNION ALL

SELECT y.[Date], y.TicketCount, x.RunningTotal + y.TicketCount

FROM x INNER JOIN dbo.SpeedingTickets AS y

ON y.[Date] = DATEADD(DAY, 1, x.[Date])

)

SELECT [Date], TicketCount, RunningTotal

FROM x

ORDER BY [Date]

OPTION (MAXRECURSION 10000);

This query works about as efficiently as the quirky update method. We can compare it against the subquery and inner join methods:

Like the quirky update method, I would not recommend this CTE approach in production unless you can absolutely guarantee that your key column has no gaps. If you may have gaps in your data, you can construct something similar using ROW_NUMBER(), but it is not going to be any more efficient than the self-join method above.

And then we have the "cursor" approach:

DECLARE @st TABLE

(

[Date] DATE PRIMARY KEY,

TicketCount INT,

RunningTotal INT

);

DECLARE

@Date DATE,

@TicketCount INT,

@RunningTotal INT = 0;

DECLARE c CURSOR

LOCAL STATIC FORWARD_ONLY READ_ONLY

FOR

SELECT [Date], TicketCount

FROM dbo.SpeedingTickets

ORDER BY [Date];

OPEN c;

FETCH NEXT FROM c INTO @Date, @TicketCount;

WHILE @@FETCH_STATUS = 0

BEGIN

SET @RunningTotal = @RunningTotal + @TicketCount;

INSERT @st([Date], TicketCount, RunningTotal)

SELECT @Date, @TicketCount, @RunningTotal;

FETCH NEXT FROM c INTO @Date, @TicketCount;

END

CLOSE c;

DEALLOCATE c;

SELECT [Date], TicketCount, RunningTotal

FROM @st

ORDER BY [Date];

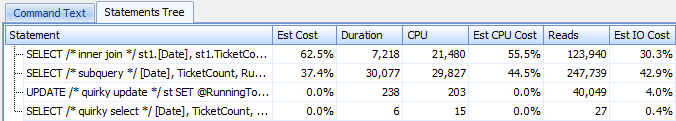

...which is a lot more code, but contrary to what popular opinion might suggest, returns in 1 second. We can see why from some of the plan details above: most of the other approaches end up reading the same data over and over again, whereas the cursor approach reads every row once and keeps the running total in a variable instead of calculating the sum over and over again. We can see this by looking at the statements captured by generating an actual plan in Plan Explorer:

We can see that over 20,000 statements have been collected, but if we sort by Estimated or Actual Rows descending, we find that there are only two operations that handle more than one row. Which is a far cry from a few of the above methods that cause exponential reads due to reading the same previous rows over and over again for each new row.

Now, let's take a look at the new windowing enhancements in SQL Server 2012. In particular, we can now calculate SUM OVER() and specify a set of rows relative to the current row. So, for example:

SELECT

[Date],

TicketCount,

SUM(TicketCount) OVER (ORDER BY [Date] RANGE UNBOUNDED PRECEDING)

FROM dbo.SpeedingTickets

ORDER BY [Date];

SELECT

[Date],

TicketCount,

SUM(TicketCount) OVER (ORDER BY [Date] ROWS UNBOUNDED PRECEDING)

FROM dbo.SpeedingTickets

ORDER BY [Date];

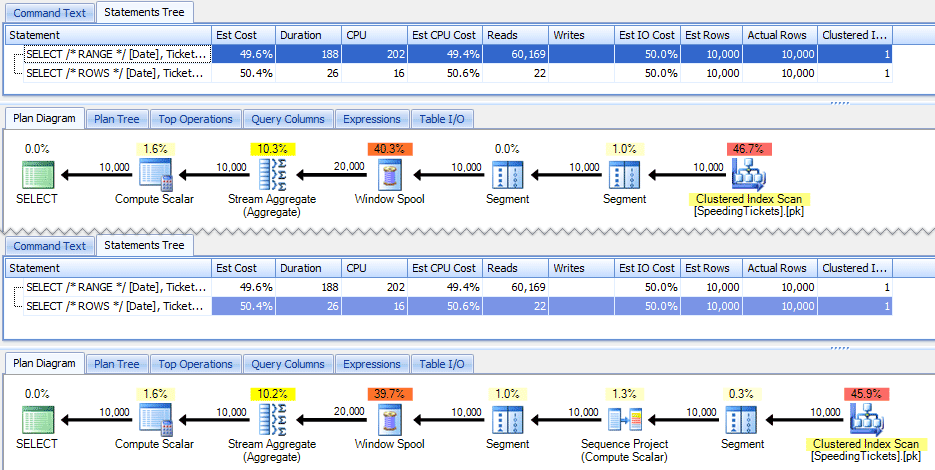

These two queries happen to give the same answer, with correct running totals. But do they work exactly the same? The plans suggest that they don't. The version with ROWS has an additional operator, a 10,000-row sequence project:

And that's about the extent of the difference in the graphical plan. But if you look a little closer at actual runtime metrics, you see minor differences in duration and CPU, and a huge difference in reads. Why is this? Well, this is because RANGE uses an on-disk spool, while ROWS uses an in-memory spool. With small sets the difference is probably negligible, but the cost of the on-disk spool can certainly become more apparent as sets get larger. I don't want to spoil the ending, but you might suspect that one of these solutions will perform better than the other in a more thorough test.

As an aside, the following version of the query yields the same results, but works like the slower RANGE version above:

SELECT

[Date],

TicketCount,

SUM(TicketCount) OVER (ORDER BY [Date])

FROM dbo.SpeedingTickets

ORDER BY [Date];

So as you're playing with the new windowing functions, you'll want to keep little tidbits like this in mind: the abbreviated version of a query, or the one that you happen to have written first, is not necessarily the one you want to push to production.

The Actual Tests

In order to conduct fair tests, I created a stored procedure for each approach, and measured the results by capturing statements on a server where I was already monitoring with SQL Sentry (if you are not using our tool, you can collect SQL:BatchCompleted events in a similar way using SQL Server Profiler).

By "fair tests" I mean that, for example, the quirky update method requires an actual update to static data, which means changing the underlying schema or using a temp table / table variable. So I structured the stored procedures to each create their own table variable, and either store the results there, or store the raw data there and then update the result. The other issue I wanted to eliminate was returning the data to the client - so the procedures each have a debug parameter specifying whether to return no results (the default), top/bottom 5, or all. In the performance tests I set it to return no results, but of course validated each to ensure that they were returning the right results.

The stored procedures are all modeled this way (I've attached a script that creates the database and the stored procedures, so I'm just including a template here for brevity):

CREATE PROCEDURE [dbo].[RunningTotals_]

@debug TINYINT = 0

-- @debug = 1 : show top/bottom 3

-- @debug = 2 : show all 50k

AS

BEGIN

SET NOCOUNT ON;

DECLARE @st TABLE

(

[Date] DATE PRIMARY KEY,

TicketCount INT,

RunningTotal INT

);

INSERT @st([Date], TicketCount, RunningTotal)

-- one of seven approaches used to populate @t

IF @debug = 1 -- show top 3 and last 3 to verify results

BEGIN

;WITH d AS

(

SELECT [Date], TicketCount, RunningTotal,

rn = ROW_NUMBER() OVER (ORDER BY [Date])

FROM @st

)

SELECT [Date], TicketCount, RunningTotal

FROM d

WHERE rn < 4 OR rn > 9997

ORDER BY [Date];

END

IF @debug = 2 -- show all

BEGIN

SELECT [Date], TicketCount, RunningTotal

FROM @st

ORDER BY [Date];

END

END

GO

And I called them in a batch as follows:

EXEC dbo.RunningTotals_DateCTE @debug = 0;

GO

EXEC dbo.RunningTotals_Cursor @debug = 0;

GO

EXEC dbo.RunningTotals_Subquery @debug = 0;

GO

EXEC dbo.RunningTotals_InnerJoin @debug = 0;

GO

EXEC dbo.RunningTotals_QuirkyUpdate @debug = 0;

GO

EXEC dbo.RunningTotals_Windowed_Range @debug = 0;

GO

EXEC dbo.RunningTotals_Windowed_Rows @debug = 0;

GO

I quickly realized that some of these calls were not appearing in Top SQL because the default threshold is 5 seconds. I changed that to 100 milliseconds (something you don't ever want to do on a production system!) as follows:

I will repeat: this behavior is not condoned for production systems!

I still found that one of the commands above was not getting caught by the Top SQL threshold; it was the Windowed_Rows version. So I added the following to that batch only:

EXEC dbo.RunningTotals_Windowed_Rows @debug = 0;

WAITFOR DELAY '00:00:01';

GO

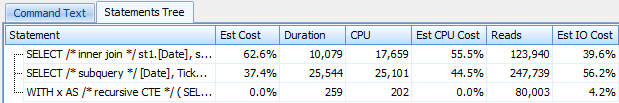

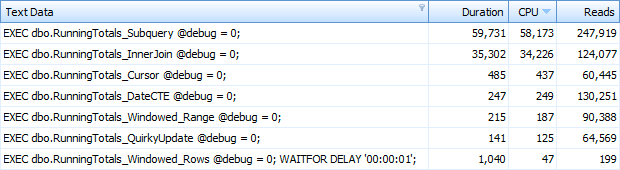

And now I was getting all 7 rows returned in Top SQL. Here they are ordered by CPU usage descending:

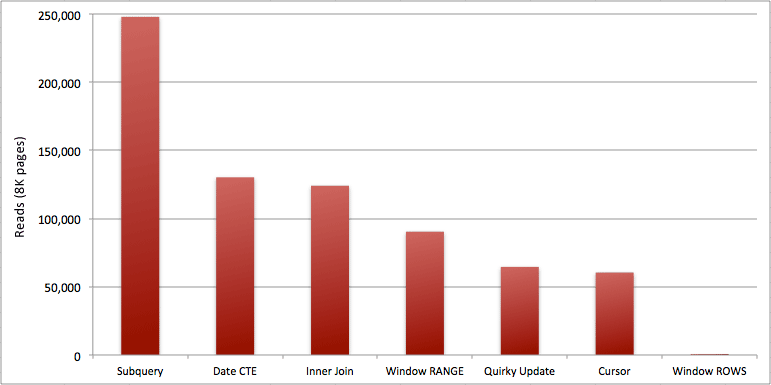

You can see the extra second I added to the Windowed_Rows batch; it wasn't getting caught by the Top SQL threshold because it completed in only 40 milliseconds! This is clearly our best performer and, if we have SQL Server 2012 available, it should be the method we use. The cursor is not half-bad, either, given either the performance or other issues with the remaining solutions. Plotting the duration on a graph is pretty meaningless - two high points and five indistinguishable low points. But if I/O is your bottleneck, you might find the visualization of reads interesting:

Conclusion

From these results we can draw a few conclusions:

- Windowed aggregates in SQL Server 2012 make performance issues with running totals computations (and many other next row(s) / previous row(s) problems) alarmingly more efficient. When I saw the low number of reads I thought for sure there was some kind of mistake, that I must have forgotten to actually perform any work. But no, you get the same number of reads if your stored procedure just performs an ordinary SELECT from the SpeedingTickets table. (Feel free to test this yourself with STATISTICS IO.)

- The issues I pointed out earlier about RANGE vs. ROWS yield slightly different runtimes (duration difference of about 6x - remember to ignore the second I added with WAITFOR), but read differences are astronomical due to the on-disk spool. If your windowed aggregate can be solved using ROWS, avoid RANGE, but you should test that both give the same result (or at least that ROWS gives the right answer). You should also note that if you are using a similar query and you don't specify RANGE nor ROWS, the plan will operate as if you had specified RANGE).

- The subquery and inner join methods are relatively abysmal. 35 seconds to a minute to generate these running totals? And this was on a single, skinny table without returning results to the client. These comparisons can be used to show people why a purely set-based solution is not always the best answer.

- Of the faster approaches, assuming you are not yet ready for SQL Server 2012, and assuming you discard both the quirky update method (unsupported) and the CTE date method (can't guarantee a contiguous sequence), only the cursor performs acceptably. It has the highest duration of the "faster" solutions, but the least amount of reads.

I hope these tests help give a better appreciation for the windowing enhancements that Microsoft has added to SQL Server 2012. Please be sure to thank Itzik if you see him online or in person, since he was the driving force behind these changes. In addition, I hope this helps open some minds out there that a cursor may not always be the evil and dreaded solution it is often depicted to be.

(As an addendum, I did test the CLR function offered by Pavel Pawlowski, and the performance characteristics were nearly identical to the SQL Server 2012 solution using ROWS. Reads were identical, CPU was 78 vs. 47, and overall duration was 73 instead of 40. So if you won't be moving to SQL Server 2012 in the near future, you may want to add Pavel's solution to your tests.)

Attachments: RunningTotals_Demo.sql.zip (2kb)

Excellant article Aaron. Regarding the "Quirky update" technique, Jeff Moden did some research and found that it gives correct result under certain rules. Here is the link http://www.sqlservercentral.com/articles/T-SQL/68467/ (you can see the rules under the last part of article). Also I always advice people to do this in the presentation layer if they want to show data there. Becuase it is almost very easy there to do while representing data.

Hi, Aaron!

Thanks for aggregating info about running totals.

One notice about measuring subquery method. As you can see, there is an extra brunch of stream aggregate in plan, but that is not an issue of subquery, that is because coalesce modeled by case. And when it is used in subquery coalesce(subquery,smth) -> case when (subquery) is not null then (subquery) else smth end.

You may vote for changing it here

https://connect.microsoft.com/SQLServer/feedback/details/336002/unnecessarily-bad-performance-for-coalesce-subquery

If you use instead isnull or <= to avoid it at all – you'll get another performance compatible to inner join consequent method (even a little bit higher in my case 10 sec IJ v.s. 8 sec SQ).

WBR!

@Madhivanan I still believe that Jeff Moden's observations are just that : observations. When you can show me official Microsoft documentation that supports and guarantees the correct behavior, then I'll feel comfortable recommending that approach to others. In the meantime, I find a cursor safer and, in this case at least, not terribly slower than the quirky update.

@SomewhereSomehow thanks, you spotted my attempt at sabotaging the subquery version. I purposely used COALESCE precisely for that reason (and I should have explained that). Truth is though, even without COALESCE the performance of that approach would be similar to INNER JOIN, maybe a little bit faster but still way out of contention to even be considered a serious, viable solution.

This is now the canonical article on the web about running totals in SQL Server.

You omitted the CROSS APPLY method, but I tested and it took about twice as long as the INNER JOIN approach. Great article as always, and I'm excited to see what else comes out of this site!

Excellent article, as always. Apart from the article, i'm astonished with the things that are possible with SQL Sentry Plan Explorer. I've seen it earlier from your other posts, but this is the first time i've had a deeper look. Thanks!

Great article Aaron, very thorough documentation and test results. Looking forward to seeing this blog site grow

Great article Aaron. I have been working to learn these new windowing functions in SQL Server and have used them some in Oracle and they work so well once you understand them! I appreciate how complete your analysis is in showing all the different ways to write the query – I'll be pinboarding this article! I also liked how you show that it always depends, even with cursors. Back in the day we used cursors all the time and I pretty much had assumed they were NEVER a good solution anymore. You show here a use case (for 2008, which is what we have still where I work) where the cursor makes sense. I always need that reminder that it ALWAYS depends..

Thanks for expanding into this blog – I have learned much in the short time since you guys created it.

-Todd

For what it's worth, there is a gap-allowing Date CTE that doesn't become an awful self-join. It adds two additional seek operations to the query for a total of 20,000 more reads, about 60 more cpu, and about 45 more duration. Not beating the INNER JOIN method, it isn't really a contender, but I wanted to include it for the sake of completeness. The naive "calculate the row number and join on it" query has 94,000 more reads and 100 times the duration of the original Date CTE, so it's clearly an incredible loser.

/* Gap-Allowing Date CTE */ WITH ST AS ( SELECT *, ( SELECT TOP 1 [Date] FROM dbo.SpeedingTickets AS S2 WHERE S2.[Date] > S1.[Date] ORDER BY S2.[Date] ) AS NextDate FROM dbo.SpeedingTickets AS S1 ), x AS ( SELECT [Date], TicketCount, RunningTotal = TicketCount, NextDate FROM ST WHERE [Date] = '19840101' UNION ALL SELECT y.[Date], y.TicketCount, x.RunningTotal + y.TicketCount, y.NextDate FROM x INNER JOIN ST AS y ON x.NextDate = y.[Date] ) SELECT [Date], TicketCount, RunningTotal FROM x ORDER BY [Date] OPTION (MAXRECURSION 0);Note… my apologies for any problems with the formatting of the above query. There are no instructions for how to format code or what kind of tags formatting options are available.

Thanks Erik. You can format code like the original article by using <pre lang="tsql">.

Thank you! I didn't realize there were mixed tabs and spaces so the indentation is messed up. I tried to use your preferred formatting styles but forgot a couple of `AS`es.

Sigh. That is, the > in the SQL code above.

Hi Aaron – kind of late to this article, but it is certainly very helpful.

I was able to get my example to work, but for only 1 group. How could I use the CURSOR, QUIRKY UPDATE or CTE if I have multiple groups which I want to start the running total at 0 for the beginning of each group, for example:

Aaron,

In the CTE version, you can include a "pre-process step" that does something like:

select st.*, row_number() over (order by [Date]) as seqnum

from SpeedingTickets st

This would guarantee a sequential ordering for the CTE approach, which might make it more competitive (conceptually and performance-wise) with the cursor-based approach.

Hi Aaron,

I have a requirement like instead of SUM i need to perform multiplication. But its not simple running multiplication few more logic's are present. But base in Running Multiplication

I have done it using your Recursive CTE method. But i want use SUM() OVER(Order BY) method but am not sure how t do it. Can you please help me out

Here is the link to my question http://stackoverflow.com/questions/32787506/running-multiplication-in-t-sql

There is no

PRODUCT() OVER()functionality in SQL Server 2012, and I don't thinkLAG()can be made dynamic, so you will need to stick with the method you're already using. I think you're in good hands here.Thank for sharing i try out to windows rows method. It really amazingly fast.

OK, total amateur here!

Trying to use and understand your SUM / OVER example:

SUM(TicketCount) OVER (ORDER BY [Date] ROWS UNBOUNDED PRECEDING

It seems that if you enter a new ticket record and "back date" the ticket into an earlier year, then you limit your results to (let's say) the current year (with a "where" clause), the above query ignores the back dated transaction in the running total. The running total is off by the amount of the back dated transaction.

If you don't limit the results, it works fine. Perhaps my "where" clause is misplaced or incorrect. Here's the entire code:

SELECT

[Date],

TicketCount,

SUM(TicketCount) OVER (ORDER BY [Date] ROWS UNBOUNDED PRECEDING)

FROM dbo.SpeedingTickets

WHERE YEAR([date])=2011 AND (MONTH([date]) BETWEEN 1 AND 12)

ORDER BY [Date];

Any way to revise my WHERE clause to make it work?

Not sure I follow how a back-dated row could possibly be treated any differently than a row that was inserted initially? Can you provide a more complete repro?

After some testing, I find that my where clause (include a rage of dates) ignores all data prior to the beginning of the date range in the "SUM / OVER" field. I thought that "ROWS UNBOUNDED PRECEDING" would include ALL data in the running balance prior to the date range.

I guess I should query the results of the SUM / OVER query and limit my date range there?

thanks for such deep analysis of different solutions

recommend where [date] between '01-01-2011' and '12-31-2011'

I absolutely agree that, as of 2012, the Quirky Update is no longer necessary and kudos for the cautionary statements.

However, a properly written Quirky Update (a term coined by Robyn Page in January 2007 at https://www.simple-talk.com/sql/learn-sql-server/robyn-pages-sql-server-cursor-workbench/ ) is second only to the "new" OVER clause functionality and is more than times faster than any form of RBAR, especially a Cursor. Try the following in your test harness and see.

IF OBJECT_ID(N'tempdb..#TestTable','U') IS NOT NULL

DROP TABLE #TestTable

CREATE TABLE #TestTable

(

[Date] DATE NOT NULL PRIMARY KEY CLUSTERED,

TicketCount INT NOT NULL,

RunningTotal INT

)

;

INSERT INTO #TestTable

([Date],TicketCount)

SELECT [Date],TicketCount

FROM dbo.SpeedingTickets

;

DECLARE @PrevTotal INT = 0

,@SafetyRN INT = 1

;

WITH cteSafety AS

(

SELECT SafetyRN = ROW_NUMBER() OVER (ORDER BY [DATE])

,[Date]

,TicketCount

,RunningTotal

FROM #TestTable

)

UPDATE tgt –CASE function will cause an error if the correct order is ever broken.

SET @PrevTotal = RunningTotal = @PrevTotal + TicketCount

,@SafetyRN = CASE WHEN SafetyRN = @SafetyRN THEN @SafetyRN + 1 ELSE 1/0 END

FROM cteSafety tgt WITH (TABLOCKX,INDEX(1)) –Never do this with a non-clustered index.

OPTION (MAXDOP 1)

;

SELECT * FROM #TestTable

;

The safety feature built into the code above is thanks to Paul White and Tom Thompson at http://www.sqlservercentral.com/Forums/Topic802558-203-7.aspx#bm981258 .

Run stats on my box…

Properly written Quirky Update: 32-41ms duration, 31-32 ms. CPU, 465 reads, 26 writes

Cursor: 398-418 ms. duration, 329-375 ms. CPU, 60537 reads, 51 writes

Rows Unbounded: 15 ms. duration, 15 ms. CPU, 22 reads, 0 writes –The undisputed king for T-SQL Running Totals

Of course, if you don't trust the Quirky Update, then use a Cursor but none of the Quirky Updates that I've written in the last 9 years have ever failed. As with anything else, you just need to follow the rules.

You trusting it does not compel me to trust it. Until "the rules" are documented by Microsoft, my advice is still going to be to stay away, even in cases where it's the second-best performer.

There are scenarios where the logic for a running total calculation is complex or dynamic, it changes based on results from the previous rows (think engineering, traffic, networks, etc.). In such cases it's sometimes complicated to write the logic with windowed functions alone, and generally a cursor type logic, a quirky update, or otherwise incremental process can do it. I've used cursors, quirky updates without issues.

The fact that a hammer is designed to draw nails into the wall doesn't mean I cannot use it as a paper weight, or display it as a sculpture.

About trusting Microsoft documentation, I've done that many times, and there are more cases where I've run into real problems because of it, than cases where the quirky updates failed ( 0 ). :-)

Thanks for sharing this solutions, Looking forward for more articles

I have a slightly different issue that I am trying to solve and, would love to be able to accomplish it using the windowed aggregate approach. Currently, the only(fastest) method that I have been able to successfully use is the Quirky Update method.

The piece where my task diverges from the example in this article is the following:

I am trying to get a running total, in my case it is an aggregate of total pages for a set of documents. However, I need to reset the aggregate to 0 once the sum of the total pages exceeds a user specified limit.

Example: I have a set of 100 documents and, to keep this simple, lets say each document contains 10 pages and I want to split them up into buckets of 250 pages per bucket. Using a case statement combined with a windowed aggregate, I can reset the running total once it reaches the first 250 page threshold but I can't figure out how to reset it for the remaining sets.

IF OBJECT_ID('TEMPDB..#documents') IS NOT NULL DROP TABLE #documents; CREATE TABLE #documents ( DocId INT PRIMARY KEY CLUSTERED NOT NULL, TotalPages INT, RunningTotal INT, BatchId INT ); GO ;WITH x(d,h) AS ( SELECT TOP (100) ROW_NUMBER() OVER (ORDER BY [object_id]), CONVERT(INT, RIGHT([object_id], 2)) FROM sys.all_objects ORDER BY [object_id] ) INSERT #documents(DocId, TotalPages, RunningTotal, BatchId) SELECT x.d, x.h, 0, 0 FROM x ORDER BY d; GO SELECT DocId , TotalPages , SUM(TotalPages) OVER(ORDER BY DocId ROWS UNBOUNDED PRECEDING) AS RunningTotal , CASE WHEN SUM(TotalPages) OVER(ORDER BY DocId ROWS UNBOUNDED PRECEDING) < 250 THEN SUM(TotalPages) OVER(ORDER BY DocId ROWS UNBOUNDED PRECEDING) --ELSE (SUM(TotalPages) OVER(ORDER BY DocId ROWS BETWEEN CURRENT ROW AND 1 FOLLOWING) + SUM(TotalPages) OVER(ORDER BY DocId ROWS UNBOUNDED PRECEDING)+ totalpages)-250 --???? END AS RunningTotal2 FROM #documents ORDER BY DocIdForgot to add the (working) quirky update example code:

[code language="tsql"]

IF OBJECT_ID('TEMPDB..#documents') IS NOT NULL DROP TABLE #documents;

CREATE TABLE #documents

(

DocId INT PRIMARY KEY CLUSTERED NOT NULL,

TotalPages INT,

RunningTotal INT,

BatchId INT

);

GO

;WITH x(d,h) AS

(

SELECT TOP (100)

ROW_NUMBER() OVER (ORDER BY [object_id]),

CONVERT(INT, RIGHT([object_id], 2))

FROM sys.all_objects

ORDER BY [object_id]

)

INSERT #documents(DocId, TotalPages, RunningTotal, BatchId)

SELECT

x.d,

x.h,

0,

0

FROM x

ORDER BY d;

GO

DECLARE @TotalPages INT =0;

DECLARE @RunningTotal INT =0;

DECLARE @BatchId INT = 0;

UPDATE d

SET @BatchId = d.BatchId = (CASE WHEN @RunningTotal+d.TotalPages >= 250 THEN @BatchId+1 ELSE @BatchId END)

, @RunningTotal = d.RunningTotal= (CASE WHEN @RunningTotal+d.TotalPages >= 250 THEN d.TotalPages ELSE @RunningTotal + d.TotalPages END)

FROM #documents AS d

SELECT *

FROM #documents

ORDER BY DocId

[/code]

I don't know whether you solved the problem some time ago but thought I'd share the following general approach which I've found quite useful and flexible in the past.

I've expanded the code out a little bit to try and reduce duplication of constants and logic tests, and allow you to break it apart and see what it's doing in each step. The basic approach rests on using a recursive query. It's a real dog in terms of readability, but it achieves its results in a purely set-based way in terms of SELECT statements.

Also, it assumes the doc_id column is sequential, but if not then it would just be a simple case of querying a sequential row_number column onto relevant rows of the document table, and using this as the basis for the self-join.

use DBCAC;

WITH

initialiser AS

(

SELECT

0 AS doc_id –special value we will filter out later

,0 AS num_pages –placeholder column

,10 AS pages_left –this is the maximum number of pages in a batch

,1 AS new_batch_flag –this flag is set when a document is placed first into a new batch

)

,iterator AS

(

SELECT

*

FROM

initialiser

UNION ALL

SELECT

curr.*

,IIF(

curr.num_pages > prev.pages_left

,(SELECT pages_left FROM initialiser) – curr.num_pages

,prev.pages_left – curr.num_pages

) AS pages_left

,IIF(

curr.num_pages > prev.pages_left

,1

,0

) AS new_batch_flag

FROM

docs AS curr

INNER JOIN

iterator AS prev

ON prev.doc_id = (curr.doc_id – 1)

)

,batch_numberer AS

(

SELECT

*

,SUM(new_batch_flag) OVER (ORDER BY doc_id ROWS UNBOUNDED PRECEDING) AS batch_number

FROM

iterator

)

,completion_filter AS

(

SELECT

*

FROM

batch_numberer

WHERE

doc_id > 0 –filter out the row which was the initialiser

)

SELECT

*

FROM

completion_filter

ORDER BY

doc_id

Also, just for clarity, these are the inputs and outputs:

Table: docs

doc_id num_pages

———– ———–

1 4

2 4

3 4

4 5

5 5

6 10

7 4

8 4

9 4

Output:

doc_id num_pages pages_left new_batch_flag batch_number

———– ———– ———– ————– ————

1 4 6 0 1

2 4 2 0 1

3 4 6 1 2

4 5 1 0 2

5 5 5 1 3

6 10 0 1 4

7 4 6 1 5

8 4 2 0 5

9 4 6 1 6