One of the filtered index use cases mentioned in Books Online concerns a column that contains mostly NULL values. The idea is to create a filtered index that excludes the NULLs, resulting in a smaller nonclustered index that requires less maintenance than the equivalent unfiltered index. Another popular use of filtered indexes is to filter NULLs from a UNIQUE index, giving the behaviour users of other database engines might expect from a default UNIQUE index or constraint: uniqueness being enforced only for the non-NULL values.

Unfortunately, the query optimizer has limitations where filtered indexes are concerned. This post looks at a couple of less well-known examples.

Sample Tables

We will use two tables (A & B) that have the same structure: a surrogate clustered primary key, a mostly-NULL column that is unique (disregarding NULLs), and a padding column that represents the other columns that might be in a real table.

The column of interest is the mostly-NULL one, which I have declared as SPARSE. The sparse option is not required, I just include it because I don’t get much chance to use it. In any case, SPARSE probably makes sense in a lot of scenarios where the column data is expected to be mostly NULL. Feel free to remove the sparse attribute from the examples if you like.

CREATE TABLE dbo.TableA

(

pk integer IDENTITY PRIMARY KEY,

data bigint SPARSE NULL,

padding binary(250) NOT NULL DEFAULT 0x

);

CREATE TABLE dbo.TableB

(

pk integer IDENTITY PRIMARY KEY,

data bigint SPARSE NULL,

padding binary(250) NOT NULL DEFAULT 0x

);

Each table contains the numbers from 1 to 2,000 in the data column with an additional 40,000 rows where the data column is NULL:

-- Numbers 1 - 2,000

INSERT

dbo.TableA WITH (TABLOCKX)

(data)

SELECT TOP (2000)

ROW_NUMBER() OVER (ORDER BY (SELECT NULL))

FROM sys.columns AS c

CROSS JOIN sys.columns AS c2

ORDER BY

ROW_NUMBER() OVER (ORDER BY (SELECT NULL));

-- NULLs

INSERT TOP (40000)

dbo.TableA WITH (TABLOCKX)

(data)

SELECT

CONVERT(bigint, NULL)

FROM sys.columns AS c

CROSS JOIN sys.columns AS c2;

-- Copy into TableB

INSERT dbo.TableB WITH (TABLOCKX)

(data)

SELECT

ta.data

FROM dbo.TableA AS ta;

Both tables get a UNIQUE filtered index for the 2,000 non-NULL data values:

CREATE UNIQUE NONCLUSTERED INDEX uqA

ON dbo.TableA (data)

WHERE data IS NOT NULL;

CREATE UNIQUE NONCLUSTERED INDEX uqB

ON dbo.TableB (data)

WHERE data IS NOT NULL;

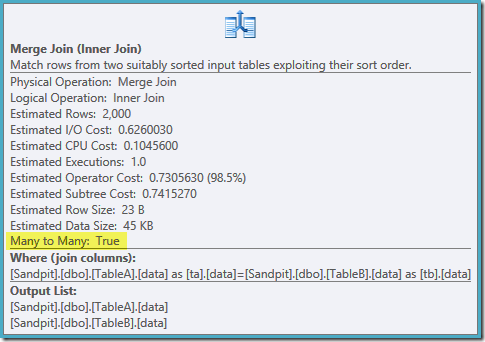

The output of DBCC SHOW_STATISTICS summarizes the situation:

DBCC SHOW_STATISTICS (TableA, uqA) WITH STAT_HEADER;

DBCC SHOW_STATISTICS (TableB, uqB) WITH STAT_HEADER;

Sample Query

The query below performs a simple join of the two tables – imagine the tables are in some sort of parent-child relationship and many of the foreign keys are NULL. Something along those lines anyway.

SELECT

ta.data,

tb.data

FROM dbo.TableA AS ta

JOIN dbo.TableB AS tb

ON ta.data = tb.data;

Default execution plan

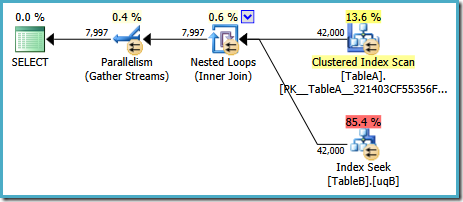

With SQL Server in its default configuration, the optimizer chooses an execution plan featuring a parallel nested loops join:

This plan has an estimated cost of 7.7768 magic optimizer units™.

There are some strange things about this plan, however. The Index Seek uses our filtered index on table B, but the query is driven by a Clustered Index Scan of table A. The join predicate is an equality test on the data columns, which will reject NULLs (regardless of the ANSI_NULLS setting). We might have hoped the optimizer would perform some advanced reasoning based on that observation, but no. This plan reads every row from table A (including the 40,000 NULLs), performs a seek into the filtered index on table B for each one, relying on the fact that NULL will not match NULL in that seek. This is a tremendous waste of effort.

The odd thing is that the optimizer must have realised the join rejects NULLs in order to choose the filtered index for the table B seek, but it did not think to filter NULLs from table A first – or better still, to simply scan the NULL-free filtered index on table A. You might wonder if this is a cost-based decision, maybe the statistics are not very good? Perhaps we should force the use of the filtered index with a hint? Hinting the filtered index on table A just results in the same plan with the roles reversed – scanning table B and seeking into table A. Forcing the filtered index for both tables produces error 8622: the query processor could not produce a query plan.

Adding a NOT NULL predicate

Suspecting the cause to be something to do with the implied NULL-rejection of the join predicate, we add an explicit NOT NULL predicate to the ON clause (or the WHERE clause if you prefer, it comes to the same thing here):

SELECT

ta.data,

tb.data

FROM dbo.TableA AS ta

JOIN dbo.TableB AS tb

ON ta.data = tb.data

AND ta.data IS NOT NULL;

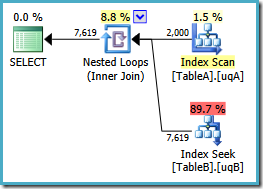

We added the NOT NULL check to the table A column because the original plan scanned that table’s clustered index rather than using our filtered index (the seek into table B was fine – it did use the filtered index). The new query is semantically exactly the same as the previous one, but the execution plan is different:

Now we have the hoped-for scan of the filtered index on table A, producing 2,000 non-NULL rows to drive the nested loop seeks into table B. Both tables are using our filtered indexes apparently optimally now: the new plan costs just 0.362835 units (down from 7.7768). We can do better, however.

Adding two NOT NULL predicates

The redundant NOT NULL predicate for table A worked wonders; what happens if we add one for table B as well?

SELECT

ta.data,

tb.data

FROM dbo.TableA AS ta

JOIN dbo.TableB AS tb

ON ta.data = tb.data

AND ta.data IS NOT NULL

AND tb.data IS NOT NULL;

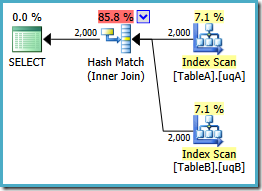

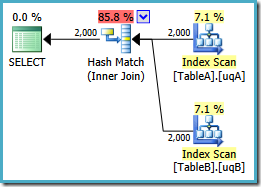

This query is still logically the same as the two previous efforts, but the execution plan is different again:

This plan builds a hash table for the 2,000 rows from table A, then probes for matches using the 2,000 rows from table B. The estimated number of rows returned is much better than the previous plan (did you notice the 7,619 estimate there?) and the estimated execution cost has dropped again, from 0.362835 to 0.0772056.

You could try forcing a hash join using a hint on the original or single-NOT NULL queries, but you won’t get the low-cost plan shown above. The optimizer just does not have the ability to fully reason about the NULL-rejecting behaviour of the join as it applies to our filtered indexes without both redundant predicates.

You are allowed to be surprised by this – even if it’s just the idea that one redundant predicate was not enough (surely if ta.data is NOT NULL and ta.data = tb.data, it follows that tb.data is also NOT NULL, right?)

Still not perfect

It’s a little surprising to see a hash join there. If you are familiar with the main differences between the three physical join operators, you probably know that hash join is a top candidate where:

- Pre-sorted input is not available

- The hash build input is smaller than the probe input

- The probe input is quite large

None of these things are true here. Our expectation would be that the best plan for this query and data set would be a merge join, exploiting the ordered input available from our two filtered indexes. We can try hinting a merge join, retaining the two extra ON clause predicates:

SELECT

ta.data,

tb.data

FROM dbo.TableA AS ta

JOIN dbo.TableB AS tb

ON ta.data = tb.data

AND ta.data IS NOT NULL

AND tb.data IS NOT NULL

OPTION (MERGE JOIN);

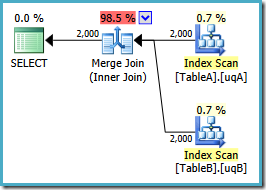

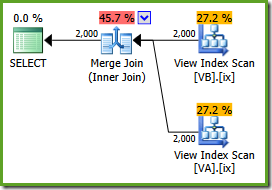

The plan shape is as we hoped:

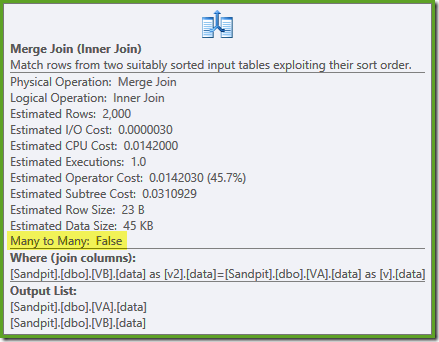

An ordered scan of both filtered indexes, great cardinality estimates, fantastic. Just one small problem: this execution plan is much worse; the estimated cost has jumped from 0.0772056 to 0.741527. The reason for the jump in estimated cost is revealed by checking the properties of the merge join operator:

This is an expensive many-to-many join, where the execution engine must keep track of duplicates from the outer input in a worktable, and rewind as necessary. Duplicates? We are scanning a unique index! It turns out the optimizer does not know that a filtered unique index produces unique values (connect item here). In fact this is a one-to-one join, but the optimizer costs it as if it were many-to-many, explaining why it prefers the hash join plan.

An Alternative Strategy

It seems we keep coming up against optimizer limitations when using filtered indexes here (despite it being a highlighted use case in Books Online). What happens if we try using views instead?

Using Views

The following two views just filter the base tables to show the rows where the data column is NOT NULL:

CREATE VIEW dbo.VA

WITH SCHEMABINDING AS

SELECT

pk,

data,

padding

FROM dbo.TableA

WHERE data IS NOT NULL;

GO

CREATE VIEW dbo.VB

WITH SCHEMABINDING AS

SELECT

pk,

data,

padding

FROM dbo.TableB

WHERE data IS NOT NULL;

Rewriting the original query to use the views is trivial:

SELECT

v.data,

v2.data

FROM dbo.VA AS v

JOIN dbo.VB AS v2

ON v.data = v2.data;

Remember this query originally produced a parallel nested loops plan costed at 7.7768 units. With the view references, we get this execution plan:

This is exactly the same hash join plan we had to add redundant NOT NULL predicates to get with the filtered indexes (the cost is 0.0772056 units as before). This is expected, because all we have essentially done here is to push the extra NOT NULL predicates from the query to a view.

Indexing the views

We can also try materializing the views by creating a unique clustered index on the pk column:

CREATE UNIQUE CLUSTERED INDEX cuq ON dbo.VA (pk);

CREATE UNIQUE CLUSTERED INDEX cuq ON dbo.VB (pk);

Now we can add unique nonclustered indexes on the filtered data column in the indexed view:

CREATE UNIQUE NONCLUSTERED INDEX ix ON dbo.VA (data);

CREATE UNIQUE NONCLUSTERED INDEX ix ON dbo.VB (data);

Notice the filtering is performed in the view, these nonclustered indexes are not themselves filtered.

The perfect plan

We are now ready to run our query against the view, using the NOEXPAND table hint:

SELECT

v.data,

v2.data

FROM dbo.VA AS v WITH (NOEXPAND)

JOIN dbo.VB AS v2 WITH (NOEXPAND)

ON v.data = v2.data;

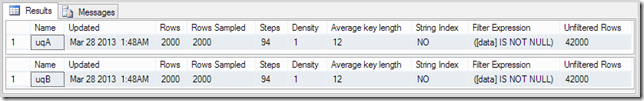

The execution plan is:

The optimizer can see the unfiltered nonclustered view indexes are unique, so a many-to-many merge join is not needed. This final execution plan has an estimated cost of 0.0310929 units – even lower than the hash join plan (0.0772056 units). This validates our expectation that a merge join ought to have the lowest estimated cost for this query and sample data set.

The NOEXPAND hints are needed even in Enterprise Edition to ensure the uniqueness guarantee provided by the view indexes is used by the optimizer.

Summary

This post highlights two important optimizer limitations with filtered indexes:

- Redundant join predicates can be necessary to match filtered indexes

- Filtered unique indexes do not provide uniqueness information to the optimizer

In some cases it may be practical to simply add the redundant predicates to every query. The alternative is to encapsulate the desired implied predicates in an unindexed view. The hash match plan in this post was much better than the default plan, even though the optimizer ought to be able to find the slightly better merge join plan. Sometimes, you may need to index the view and use NOEXPAND hints (required anyway for Standard Edition instances). In still other circumstances, none of these approaches will be suitable. Sorry about that :)

This entire post is basically one big connect item candidate… The optimizer seems to be dealing with much, much harder problems than these are. They *look* easy to fix with a clear value proposition.

Cool post, Paul, as usual!

Here's an alternative that produces a plan with the desired properties (ordered scans of filtered indexes and a one-to-one Merge join) at the cost of two redundant Stream Aggregate operators:

SELECT

ta.data,

tb.data

FROM (SELECT DISTINCT data

FROM dbo.TableA

WHERE data IS NOT NULL) AS ta

JOIN (SELECT DISTINCT data

FROM dbo.TableA

WHERE data IS NOT NULL) AS tb

ON ta.data = tb.data;

I'm getting a cost of 0.029167.

Cheers,

Itzik

Hi Itzik,

Yes that does the trick nicely! Adding the DISTINCT (or GROUP BY) to the views that reject NULLs would work too, of course. Thanks for reading and taking the time to comment!

Hello,

Just to be clear In the Merge join case when you say "this execution plan is much worse;" are you just talking about estimated costs or saying that it is actually likely to be worse in practice?

Craig Freedman mentions in the comments here (http://blogs.msdn.com/b/craigfr/archive/2006/08/03/687584.aspx) that if the second table does not contain any duplicates no rows end up getting inserted to the worktable for a many to many merge join.

Yes, the execution plan is much worse, comparing the estimated costs. No, this particular query is not likely to perform much less well in practice because although there is a small overhead in creating the work table and a change to the merge join algorithm, the major cost of a many-to-many merge join comes in the rewinding. Overall, though, the chosen plan is still much worse because it is wrong. Choosing a many-to-many merge join affects derived statistics and other memo properties. This misinformation is unlikely to be helpful in choosing a good plan from that point forward – something that could be important in more complex, real-world queries. Even in the simple example used here, the inaccurate costing of the merge join meant the optimizer chose parallel nested loops – misinformation is never a good thing.

Connect Item ++

Thanks for the article

This is a really well written article Paul, thanks for putting it together. I've voted for your connect item.

Hi Paul, thank you for this article.

One question – could you please elaborate a little more on the statement right before the Summary section: "The NOEXPAND hints are needed even in Enterprise Edition to ensure the uniqueness guarantee provided by the view indexes is used by the optimizer."

Are you referring to some undocumented feature?

There is something undocumented and interesting there, which I will cover in a future article :)

Looking forward, Paul :) thank you

Good information. I stumbled upon this article after having encountered the same/similar issue myself. I created a filtered index much like yours that was intended to be a covering index for a common join. It seemed to me that the "inner join" implied non-nullness in the columns it was joining so that the optimizer wouldn't have a problem selecting my non null filtered index, so I was surprised when it resulted in a table scan instead. I added the not null predicate, which was enough to fix the plan for this particular query. What was more interesting though, was that sometimes my filtered index *would* be selected when joining with additional tables, without me having to include the redundant non null predicate. So, it would seem that the optimizer will consider non null filtered indexes, but it doesn't seem to match my intuition about when it should.

Hi Paul. I just came across this article of yours again (thanks again for it – great reading).

However – yet again! – I am feeling I have the same question with regards to this NO EXPAND option.

In your response above you were referring to that future article which I might have missed.

If I did miss it, could you please point me to it?

Thank you!

Hi Paul, thank you for the article, I now finally see what you meant in your "Optimizer Limitations with Filtered Indexes" article back in 2013 :)

sorry, apparently I commented the wrong article, but thanks anyway, Paul – I just read your "Another Reason to Use NOEXPAND hints in Enterprise Edition" one! :)

Cheers. I get around to most things eventually :)

Are there any Connect items to vote for? I hit this issue again and again. IS NOT NULL probably is the most common filtered index predicate in the world.

Hey nullkiller, I list a couple of dozen in this post:

http://sqlperformance.com/2013/04/t-sql-queries/filtered-indexes

Do see the article Aaron linked to, but there is a Connect item to vote for in this article's text as well. Look for the text "connect item here" :)