In the SQL Server world, there are two types of people: those who like all of their objects to be prefixed, and those who don't. The former group is further divided into two categories: those who prefix stored procedures with sp_, and those who choose other prefixes (such as usp_ or proc_). A long-standing recommendation has been to avoid the sp_ prefix, both for performance reasons, and to avoid ambiguity or collisions if you happen to choose a name that already exists in the system catalog. Collisions are certainly still an issue, but assuming you've vetted your object name, is it still a performance issue?

TL;DR version: YES.

The sp_ prefix is still a no-no. But in this post I will explain why, how SQL Server 2012 might lead you to believe that this cautionary advice no longer applies, and some other potential side effects of choosing this naming convention.

What is the issue with sp_?

The sp_ prefix does not mean what you think it does: most people think sp stands for "stored procedure" when in fact it means "special." Stored procedures (as well as tables and views) stored in master with an sp_ prefix are accessible from any database without a proper reference (assuming a local version does not exist). If the procedure is marked as a system object (using sp_MS_marksystemobject (an undocumented and unsupported system procedure that sets is_ms_shipped to 1), then the procedure in master will execute in the context of the calling database. Let's look at a simple example:

CREATE DATABASE sp_test;

GO

USE sp_test;

GO

CREATE TABLE dbo.foo(id INT);

GO

USE master;

GO

CREATE PROCEDURE dbo.sp_checktable

AS

SELECT DB_NAME(), name

FROM sys.tables WHERE name = N'foo';

GO

USE sp_test;

GO

EXEC dbo.sp_checktable; -- runs but returns 0 results

GO

EXEC master..sp_MS_marksystemobject N'dbo.sp_checktable';

GO

EXEC dbo.sp_checktable; -- runs and returns results

GO

Results:

(0 row(s) affected) sp_test foo (1 row(s) affected)

The performance issue comes from the fact that master might be checked for an equivalent stored procedure, depending on whether there is a local version of the procedure, and whether there is in fact an equivalent object in master. This can lead to extra metadata overhead as well as an additional SP:CacheMiss event. The question is whether this overhead is tangible.

So let's consider a very simple procedure in a test database:

CREATE DATABASE sp_prefix;

GO

USE sp_prefix;

GO

CREATE PROCEDURE dbo.sp_something

AS

BEGIN

SELECT 'sp_prefix', DB_NAME();

END

GO

And equivalent procedures in master:

USE master;

GO

CREATE PROCEDURE dbo.sp_something

AS

BEGIN

SELECT 'master', DB_NAME();

END

GO

EXEC sp_MS_marksystemobject N'sp_something';

CacheMiss : Fact or Fiction?

If we run a quick test from our test database, we see that executing these stored procedures will never actually invoke the versions from master, regardless of whether we properly database- or schema-qualify the procedure (a common misconception) or if we mark the master version as a system object:

USE sp_prefix;

GO

EXEC sp_prefix.dbo.sp_something;

GO

EXEC dbo.sp_something;

GO

EXEC sp_something;

Results:

sp_prefix sp_prefix sp_prefix sp_prefix sp_prefix sp_prefix

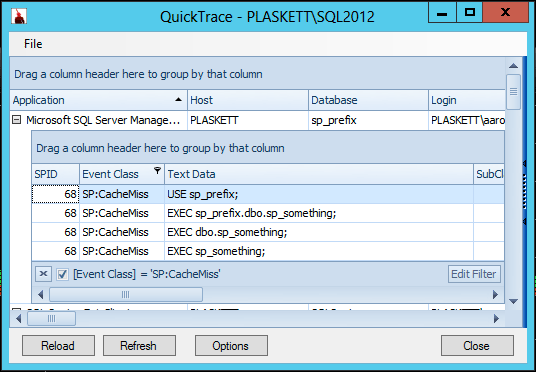

Let's also run a Quick TraceSP:CacheMiss events:

We see CacheMiss events for the ad hoc batch that calls the stored procedure (since SQL Server generally won't bother caching a batch that consists primarily of procedure calls), but not for the stored procedure itself. Both with and without the sp_something procedure existing in master (and when it exists, both with and without it being marked as a system object), the calls to sp_something in the user database never "accidentally" call the procedure in master, and never generate any CacheMiss events for the procedure.

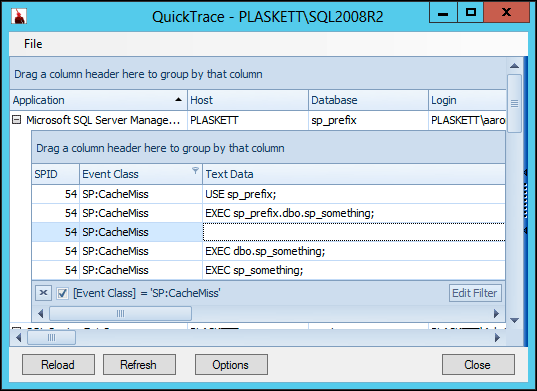

This was on SQL Server 2012. I repeated the same tests above on SQL Server 2008 R2, and found slightly different results:

So on SQL Server 2008 R2 we see an additional CacheMiss event that does not occur in SQL Server 2012. This occurs in all scenarios (no equivalent object master, an object in master marked as a system object, and an object in master not marked as a system object). Immediately I was curious whether this additional event would have any noticeable impact on performance.

Performance Issue: Fact or Fiction?

I made an additional procedure without the sp_ prefix to compare raw performance, CacheMiss aside:

USE sp_prefix;

GO

CREATE PROCEDURE dbo.proc_something

AS

BEGIN

SELECT 'sp_prefix', DB_NAME();

END

GO

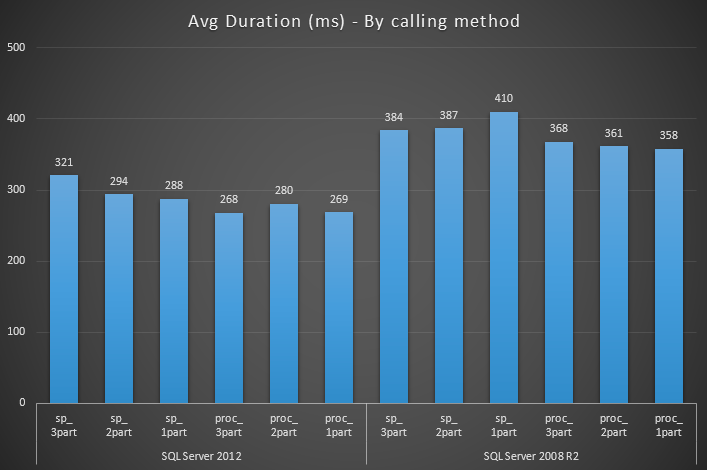

So the only difference between sp_something and proc_something. I then created wrapper procedures to execute them 1000 times each, using EXEC sp_prefix.dbo.<procname>, EXEC dbo.<procname> and EXEC <procname> syntax, with equivalent stored procedures living in master and marked as a system object, living in master but not marked as a system object, and not living in master at all.

USE sp_prefix;

GO

CREATE PROCEDURE dbo.wrap_sp_3part

AS

BEGIN

DECLARE @i INT = 1;

WHILE @i <= 1000

BEGIN

EXEC sp_prefix.dbo.sp_something;

SET @i += 1;

END

END

GO

CREATE PROCEDURE dbo.wrap_sp_2part

AS

BEGIN

DECLARE @i INT = 1;

WHILE @i <= 1000

BEGIN

EXEC dbo.sp_something;

SET @i += 1;

END

END

GO

CREATE PROCEDURE dbo.wrap_sp_1part

AS

BEGIN

DECLARE @i INT = 1;

WHILE @i <= 1000

BEGIN

EXEC sp_something;

SET @i += 1;

END

END

GO

-- repeat for proc_something

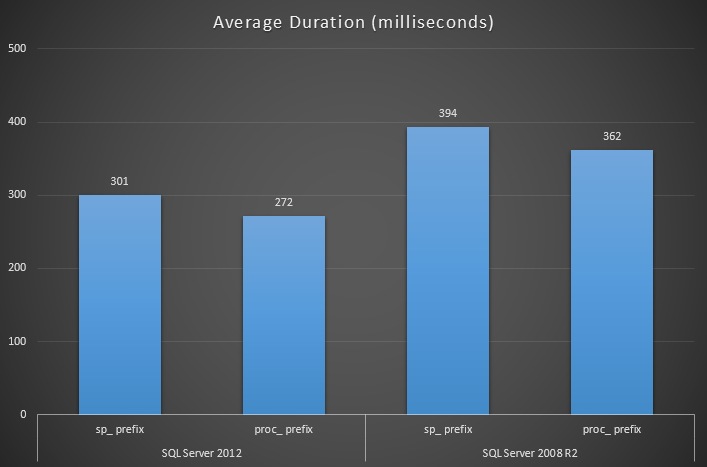

Measuring runtime duration of each wrapper procedure with SQL Sentry Plan Explorer, the results show that using the sp_ prefix has a significant impact on average duration in almost all cases (and certainly on average):

We also see that the performance of SQL Server 2012 trends much better than the performance on SQL Sevrer 2008 R2 - no other variables are different. Both instances are on the same host, and neither is under memory or other pressure of any kind. This could be a combination of the additional CacheMiss event and those transparent improvements you get from enhancements made to the database engine between versions.

Another side effect : Ambiguity

If you create a stored procedure that references an object you created, say dbo.sp_helptext, and you didn't realize (or didn't care) that this name collides with a system procedure name, then there is potential ambiguity when someone is reviewing your stored procedure. They will most likely assume you meant the system procedure, not a different procedure you created that happens to share its name.

Another interesting thing happens when you create a stored procedure that references a stored procedure prefixed with sp_ that just happens to also exist in master. Let's pick an existing procedure that you might not be immediately familiar with (and therefore might be a more likely representative of the scenario I'm describing): sp_resyncuniquetable.

CREATE PROCEDURE dbo.test1

AS

BEGIN

EXEC dbo.sp_resyncuniquetable;

END

GO

In Management Studio, IntelliSense doesn't underline the stored procedure name as invalid, because there is a valid procedure with that name in master. So without seeing a squiggly line underneath, you might assume the procedure is already there (and assuming the procedure in master can be executed without error, this might pass QA/testing as well). If you choose a different name for your resync procedure, let's say proc_resyncuniquetable, there is absolutely no chance for this ambiguity (unless someone manually created that procedure in master, which I guess could happen). If the procedure doesn't exist yet, the caller will still be created successfully (due to deferred name resolution), but you will receive this warning:

The module 'test1' depends on the missing object 'dbo.proc_resyncuniquetable'. The module will still be created; however, it cannot run successfully until the object exists.

One more source of ambiguity can occur in this scenario. The following sequence of events is entirely plausible:

- You create the initial version of a procedure, say,

sp_foo. - The deployer accidentally creates a version in master (and maybe notices, or maybe doesn't, but in either case doesn't clean up).

- The deployer (or someone else) creates the procedure, this time in the right database.

- Over time, you make multiple modifications to

your_database.dbo.sp_foo. - You replace

sp_foowithsp_superfoo, and deletesp_foofrom the user database. - When updating the application(s) to reference the new stored procedure, you might miss a replacement or two for various reasons.

So in this scenario, the application is still calling sp_foo, and it's not failing - even though you've deleted the local copy - since it finds what it thinks is an equivalent in master. Not only is this stored procedure in master not equivalent to sp_superfoo, it's not even equivalent to the latest version of sp_foo.

"Procedure not found" is a much easier problem to troubleshoot than "Procedure doesn't exist - but code calling it works, and doesn't quite return the expected results."

Conclusion

I still think that, even though the behavior has changed slightly in SQL Server 2012, you shouldn't be using the sp_ prefix at any time, unless your intention is to create a stored procedure in master *and* mark it as a system object. Otherwise you are exposed to these performance issues as well as potential ambiguity on multiple fronts.

And personally, I don't think stored procedures need to have any prefix at all - but I have less tangible evidence to convince you of that, other than asking you what other type of object could it possible be? You can't execute a view, or a function, or a table...

As I suggest often, I don't really care what your naming convention is, as long as you're consistent. But I think you should avoid potentially harmful prefixes like sp_.

Very interesting indeed. I'd always told my classes that the performance difference was measurable, but insignificant. Now, with empirical evidence, it looks to consistently consume nearly 10%. Hardly insignificant! Good analysis, -Kev

Interesting read, Aaron! Great stuff.

– Tom

Good post Aaron. The myth is that when you create a procedure with prefix sp_ which is always available in master database, the master's is always get executed. I made a post to show that this is true only if the owner of the procedure is sys (ie system proecudure). Here is my post http://beyondrelational.com/modules/2/blogs/70/posts/10989/prefixing-procedure-names-with-sp-is-bad-practice-is-this-true.aspx There are also some interesting comments in that post

Very interesting post indeed! Great stuff!

I have always been a strong supporter of code that's readable (i.e. less or no ambiguity exists) and one that's easier to troubleshoot. So, using the "sp_" prefix in user procedures has always been a "no-no" for me.

Also, it was interesting to see the performance statistics and comparison between SQL 2012 and SQL 2008 – that just goes on to show the improvements that have been made under the hood to make SQL Server a better and more competitive product.

Thanks a lot for taking the time out and sharing this research, Aaron! Have a nice day!

Aaron – great stuff. I appreciate the link to SQL Sentry too – that looks like a cool tool.

Some good analysis Aaron, I do have one question though.

Do you know if it would make a difference using the sp_ prefix outside of the default schema? For example: mydb.myschema.sp_Prefix

Thanks for the thorough article

Thanks Jamie,

I did not test that, and do not know off-hand at the moment.

But let me turn the question around for you: why would you want to do this? Let's say the difference is negligible or zero. Why use the sp_ prefix in that one scenario, but not in any other scenario? What does it gain you except to make your naming convention inconsistent and conditional?

Jamie, have you read the linked posted at my earlier comment? It would make a difference. Any procedure starts sp_ and not owned by sys object will behave differently based on which database you are executing that procedure. Here is a link http://beyondrelational.com/modules/2/blogs/70/posts/10989/prefixing-procedure-names-with-sp-is-bad-practice-is-this-true.aspx

Aaron, is there a way to edit the comment? Currently it is not possible. I wish there should be an option :)

It gains me a couple of days of extra work as I have picked up an existing system for performance improvements where this pattern has been implemented. Personally I would not do this but before I went ahead with a database wide rename I wanted to establish just how much of a problem it actually is.

Madhivanan seems to have the answer (I have looked through the article, thanks M) and there is no explicit comment about penalties for prefixes with custom schema's. To summarise what you are saying then; even though Master has no mySchema schema, that just by having the sp prefix there would be a performance hit for the additional lookup's and that there is no difference if the stored proc references were fully qualified or not.

Great article Aaron. Just what I was looking for In fact, above and beyond what I needed. Thanks!

we have a vendor system, where changing the names of stored procedures is NOT currently an option. Will executing 1 or more existing sp_ prefix stored procedures as [databasename].[dbo.].[sp_STOREDPROCEDURENAME] eliminate the overhead, where sql server would not exactly in which database and which schema to find the stored procedure? Thanks!!

I didn't test that explicitly, but since I observed the same behavior with and without the explicit database prefix, I suspect it's still the same overhead. Tell your vendor to read this and fix it.

Wow. I admire and appreciate people that don't give half baked answers, but instead, give detailed understandable explanations, with examples as proof. Thanks.

What I find ironic:

Microsoft Support might tell you the behavior of sp_ is undocumented and should not be used. On the other hand, if the Microsoft Support person you get is under pressure to close support cases as quickly as possible, you may be allowed to hang yourself.

What I find cowardly:

Microsoft's Documentation team (as far as I can tell) refuses officially to document the pitfalls of using sp_. And yet the Documentation team effusive documents SQL Server's use of the sp_ prefix.

I am guessing:

Over a decade ago there was one Microsoft lawyer who decreed this behavior shall remain undocumented. Nowadays I suspect Microsoft Support has 7-10 levels of managers and Microsoft Documentation 3-5 levels. I think no Microsoft manager has yet found it in their best interest to challenge that old decree. My guess is, the more levels of managers Microsoft adds, the smaller the chance if transparency there will be.

I could be wrong:

If someone finds the behavior 'officially' documented on https://docs.microsoft.com, please post that documentation's title, and its URL.

Hi Bill,

this is documented by Microsoft.

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-procedure-transact-sql?view=sql-server-2017

"Avoid the use of the sp_ prefix when naming procedures…."